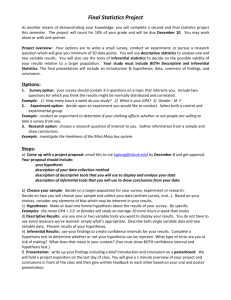

Inferential statistics, power estimates, and study design formalities
