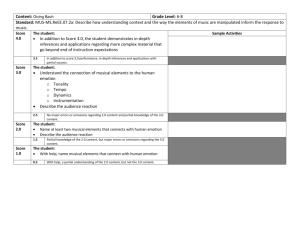

A Review of Music and Emotion Studies: Approaches, Emotion
