Gravlee et al - Panel Data - final

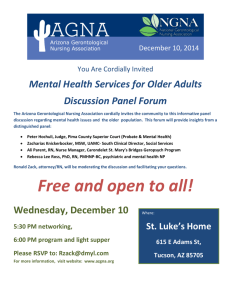
