Considering the Effects of Accounting Standards

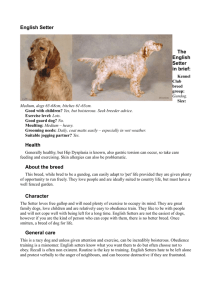
