Federated square root filter for decentralized parallel processors


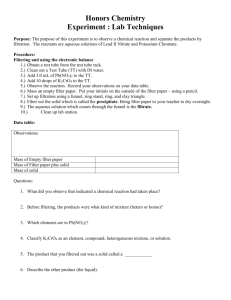

Federated Square Root Filter for Decentralized Parallel Processes

NEAL A. CARLSON
Integrity Systems, Inc.

An efficient, federated Kalman filter is developed for use in distributed multisensor systems. This new design accommodates sensor-dedicated local filters, some of which use data from a common reference subsystem. The local filters run in parallel, and also provide sensor data compression via prefiltering. The master filter runs at a selectable reduced rate, fusing local filter outputs via efficient square root algorithms. Common local process noise correlations are handled by use of a conservative matrix upper bound. The federated filter yields estimates that are globally optimal, or conservatively suboptimal, depending upon the master filter processing rate. This design achieves a major improvement in throughput (speed), is well suited to real-time system implementation, and enhances fault detection, isolation and recovery capability.

Manuscript received April 2, 1989. IEEE Log No. 33466.

This work was supported by the Defense Small Business Innovation Research (SBIR) Program under Contract F33615-86-C-1087, administered by the Avionics Laboratory, WRDC/AAAN-2, Wright-Patterson Air Force Base, Dayton, Ohio. This work is based on a paper of the same title presented at NAECON '87, May 18-22, 1987, and printed in the Record of that conference.

Author's address: Integrity Systems, Inc., 600 Main St., Suite 4, Winchester, MA 01890.

0018-9251/90/0500-0517 $1.00 © 1990 IEEE

I. INTRODUCTION

This paper develops a federated Kalman filter architecture applicable to decentralized sensor systems with parallel processing capabilities. This architecture provides significant advantages for real-time multisensor applications such as integrated navigation systems. While multisensor systems embody the potential for high levels of accuracy and fault tolerance, that potential has not been fully realized via past application of classical Kalman filtering techniques.
Classical techniques applied to multisensor systems can yield severe computation loads when implemented in strictly optimal fashion. Conversely, ad hoc simplifications are not always reliable, being subject to poor accuracy, instability, and even divergence under certain operating conditions. For these and other reasons, there has been considerable recent interest in the development of decentralized (or distributed) Kalman filter architectures. The advent of parallel processing technology and emphasis on fault-tolerant system design are additional factors motivating such development.

While several different approaches to decentralized filtering have been developed, none appear to have been implemented in real-time system applications, e.g., aircraft navigation systems. Theoretical approaches to decentralized filtering were developed by Speyer [1], Chang [2], Willsky et al. [3], Levy et al. [4], and Castanon et al. [5]. However, these early approaches are generally not suitable or practical for real-time estimation of time-varying systems, due to restrictive system assumptions or large data transfer requirements.

A more recent and flexible decentralized filtering method was developed and implemented by Bierman [6], for filtering and smoothing of satellite orbit data. This method constructs a set of independent (local) state estimates, which can be optimally combined in straightforward fashion. The local state estimates are naturally disjoint with respect to their local measurement sets. They are then constructed to be disjoint with respect to common initial conditions and process noise, by assigning 100 percent of the associated information to one local estimate, and zero (infinite covariance) to the others. Thus no cross-correlations exist, and the local estimates can be optimally combined by a master filter via simple addition of the local information.
While this method is both optimal and efficient on a per-cycle basis, it does require the master filter to operate at the maximum local measurement rate. The local filters with infinite process noise have no memory, losing all process information between measurement cycles. Hence they do not perform recursive filtering, but yield single-point least-squares solutions. This aspect of the method is of no concern in its intended application, but is undesirable in those applications that require either a) physically meaningful local filters with individually usable results (e.g., for local sensor rate-aiding) or b) master filter rate reduction via local sensor data compression.

IEEE TRANSACTIONS ON AEROSPACE AND ELECTRONIC SYSTEMS VOL. 26, NO. 3 MAY 1990

A conceptual decentralized filtering structure was proposed by Kerr [7], in which several smaller filters run in parallel and process data from separate navigation subsystems (e.g., Global Positioning System (GPS)). The outputs of these local subsystem filters are to be combined by a master "collating" filter; however, no mathematical basis for that filter was presented. Given a sound basis, this decentralized filtering architecture would provide distinct advantages, e.g., asynchronous operation, fault detection and isolation, and reconfigurability.

Recently, Hashemipour et al. [8] developed new parallel Kalman filtering structures for multisensor networks amenable to parallel processing. While this method yields globally optimal estimates and a linear speed-up rate, it requires significant data exchange among the filter components, and does not use local data compression to reduce the master rate.

The new federated filter design developed here achieves the inherent advantages of distributed systems by means of a relatively simple yet pivotal extension of Bierman's method [6].
This extension provides usable and physically meaningful local filters, and allows master filter rate reduction via local data compression (prefiltering). It yields globally optimal or suboptimal estimation accuracy, as a function of the selectable master filter rate, with a high degree of fault tolerance.

The remaining sections of this paper describe the distributed filtering problem (Section II), the new federated filter structure (Section III), its covariance square root form (Section IV), alternative implementations (Section V), current and future applications (Section VI), summary and conclusions (Section VII), and formal mathematics (Appendix).

II. PROBLEM STATEMENT

In concept, federated filtering is a two-stage data processing technique in which the outputs of local, sensor-related filters are subsequently processed and combined by a larger master filter, as illustrated in Fig. 1. This figure shows the major flow of information, but does not attempt to represent all the possible data exchanges among components. As suggested by this figure, each local filter is dedicated to a separate sensor subsystem. One or more local filters may also use data from a common reference system, e.g., an inertial navigation system.

[Fig. 1. Federated filter structure: reference system, local sensors 1 to N with local filters 1 to N, and master filter.]

This general structure applies to two federated system types of interest, denoted "A" and "B".

Type A systems involve fixed local filter designs that have been developed elsewhere for stand-alone operation, without regard for federated applications. While their basic designs are fixed, some internal model parameters can be modified via initial data loads. Type A systems are of interest because of the need to use existing local filter designs (e.g., aided GPS) over the next few years.
Type B systems involve totally flexible local filter designs that can be tailored to best support federated filtering operations. Type B systems permit all the federated filter components to be designed for cooperative operation, with design decisions based on global considerations rather than on local considerations alone. Type B systems are of interest because they represent the idealized case toward which future federated designs may evolve.

The federated filter structure can be developed in terms of an optimal, linear estimation problem as follows. First, consider a system state vector $x$ that propagates from time point $t'$ to $t$ according to the following dynamic model:

$$x = \Phi x' + G u. \tag{1}$$

Here, $\Phi$ is the state transition matrix between time points $t'$ and $t$, $G$ is the process noise distribution matrix, and $u$ is the additive uncertainty vector due to white process noise acting over the timestep. The initial state estimate $\hat{x}_0$ and the sequential values $u(t_k)$ are uncorrelated, per the following error statistics:

$$\hat{x}_0 = x_0 + e_0; \quad E[e_0] = 0; \quad E[e_0 e_0^T] = P_0 \tag{2}$$

$$E[u_k] = 0; \quad E[u_k u_j^T] = Q\,\delta_{kj} \tag{3}$$

$$E[u_k e_j^T] = 0, \quad k > j. \tag{4}$$

Our system also has access to external measurements $z_i$ from $i = 1, N$ separate local sensor subsystems. Measurements from different local sensors are independent, and comprise disjoint data sets. The discrete measurements from sensor $i$ at time $t$ are linearly related to the true state $x$:

$$z_i = H_i x + v_i. \tag{5}$$

The measurement errors $v_i$ are uncorrelated, per the following error statistics:

$$E[v_i] = 0; \quad E[v_{ik} v_{ij}^T] = R_i\,\delta_{kj}. \tag{6}$$

III. FEDERATED FILTER STRUCTURE

This section develops the theoretical basis for the new federated filter structure. Section IV then provides an efficient square root implementation of this new structure. We first define a composite global filter, then partition its operations into independent local subsets.
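Consider the following composite state vector and corresponding covariance matrix definitions:

$$x = \begin{bmatrix} x_1 \\ \vdots \\ x_N \end{bmatrix}; \quad P = \begin{bmatrix} P_{11} & \cdots & P_{1N} \\ \vdots & & \vdots \\ P_{N1} & \cdots & P_{NN} \end{bmatrix} \tag{9}$$

$$x_i = \begin{bmatrix} x_{ci} \\ x_{bi} \end{bmatrix} \quad \begin{matrix} \text{common system states (version } i\text{)} \\ \text{sensor } i \text{ bias states} \end{matrix} \tag{10}$$

The full global state vector (9) is the composite of the $N$ local state partitions $x_i$. As indicated by (10), each local partition contains the common system state elements, plus unique bias states for its own sensor. The composite global state contains $N$ versions of the common system state $x_c$. This redundancy causes no theoretical difficulty, and is optimally resolved below. The composite covariance matrix $P$ can contain cross-partitions $P_{ij}$ as well as the local partitions $P_{ii}$.

Now, given a set of $N$ local state estimates $\hat{x}_i$ and their composite covariance $P$, the globally optimal estimate of the full system state $x$ minimizes this cost function:

$$J = \begin{bmatrix} \hat{x}_1 - x_1 \\ \vdots \\ \hat{x}_N - x_N \end{bmatrix}^T P^{-1} \begin{bmatrix} \hat{x}_1 - x_1 \\ \vdots \\ \hat{x}_N - x_N \end{bmatrix}. \tag{13}$$

Consider the special case in which the local estimates are uncorrelated, i.e., the cross-partitions $P_{ij}$ ($i \ne j$) are zero. In this case, the globally optimal solution is given by the following familiar result (where, for simplicity, we omit the effects of the noncommon states):

$$P_m^{-1} = P_{11}^{-1} + \cdots + P_{NN}^{-1} \tag{15}$$

$$\hat{x}_m = P_m \left( P_{11}^{-1}\hat{x}_1 + \cdots + P_{NN}^{-1}\hat{x}_N \right). \tag{16}$$

This simple, optimal result is of real interest. Our new federated filter will be constructed to yield local estimates that are in fact uncorrelated; the above equations then represent the optimal combination of those local filter solutions.

Consider next the globally optimal processing of a local measurement from sensor $i$, written in terms of the composite global variables:

$$z_i = H x + v_i; \quad H = [\,0 \;\cdots\; H_i \;\cdots\; 0\,] \tag{17}$$

$$\hat{x}_j = \hat{x}_j' + P_{ji}' H_i^T A^{-1} (z_i - H_i \hat{x}_i') \tag{18}$$

$$A = H_i P_{ii}' H_i^T + R_i \tag{19}$$

$$P_{jk} = P_{jk}' - P_{ji}' H_i^T A^{-1} H_i P_{ik}'. \tag{20}$$

Equations (17) to (20) with $j = i$ indicate that sensor $i$ measurements affect the $i$ state and covariance as if only $i$ existed. Furthermore, $i$ measurements do not affect other local states $j \ne i$ unless the cross-covariances $P_{ji}$ are nonzero. Note that, if $P_{ji}$ is initially zero, then it stays zero, and other local partitions $P_{jj}$ plus all $P_{jk}$ cross-covariances are unchanged. Thus, if the local filter states are initially uncorrelated, then the local measurement sets can be processed independently, and they remain uncorrelated.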
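As a minimal sketch of the information-addition rule that the text cites as (15) and (16), the scalar Python example below fuses uncorrelated local estimates by summing their inverse variances. The function name `fuse_uncorrelated` and the numbers are illustrative assumptions, not taken from the paper, and the noncommon (bias) states are omitted, as in the text.

```python
def fuse_uncorrelated(estimates):
    """Fuse uncorrelated scalar estimates given as (value, variance) pairs.

    Information (inverse variance) adds across independent estimates, and
    the fused value is the information-weighted mean of the local values.
    """
    total_info = sum(1.0 / var for _, var in estimates)
    weighted_sum = sum(val / var for val, var in estimates)
    fused_var = 1.0 / total_info
    return weighted_sum * fused_var, fused_var


if __name__ == "__main__":
    # Hypothetical local solutions for one common state: (estimate, variance).
    local = [(10.0, 4.0), (12.0, 4.0), (11.0, 2.0)]
    x_m, p_m = fuse_uncorrelated(local)
    print(x_m, p_m)
```

The fused variance is never larger than the smallest local variance, which is why the combination is only valid when the local estimates really are uncorrelated; otherwise the same information would be counted more than once.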
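Consider next the global time propagation step. This step comprises the crux of the new federated filter method. The full global state and covariance propagation from $t'$ to $t$ can be described as follows:

$$\begin{bmatrix} x_1 \\ \vdots \\ x_N \end{bmatrix} = \begin{bmatrix} \Phi_{11} & & 0 \\ & \ddots & \\ 0 & & \Phi_{NN} \end{bmatrix} \begin{bmatrix} x_1' \\ \vdots \\ x_N' \end{bmatrix} + \begin{bmatrix} G_1 \\ \vdots \\ G_N \end{bmatrix} u \tag{21}$$

$$P = \Phi P' \Phi^T + \begin{bmatrix} G_1 \\ \vdots \\ G_N \end{bmatrix} Q \begin{bmatrix} G_1^T & \cdots & G_N^T \end{bmatrix}. \tag{22}$$

In (21) and (22), the global state transition matrix $\Phi$ is block diagonal because each local filter state is dynamically self-contained (the redundant common states do not affect one another). However, the common system states (redundant local estimates) are driven by the same process noise, as indicated. The common process noise $u$ serves to cross-correlate the separate local filter estimates, even if they were originally uncorrelated:

$$P_{ji} = \Phi_{jj} P_{ji}' \Phi_{ii}^T + G_j Q G_i^T. \tag{23}$$

We next make some modifications to the global representation of the common process noise covariance. First, we rewrite the process noise term in (22) as

$$\begin{bmatrix} G_1 \\ \vdots \\ G_N \end{bmatrix} Q \begin{bmatrix} G_1^T & \cdots & G_N^T \end{bmatrix} = \begin{bmatrix} G_1 & & 0 \\ & \ddots & \\ 0 & & G_N \end{bmatrix} \begin{bmatrix} Q & \cdots & Q \\ \vdots & & \vdots \\ Q & \cdots & Q \end{bmatrix} \begin{bmatrix} G_1^T & & 0 \\ & \ddots & \\ 0 & & G_N^T \end{bmatrix}. \tag{24}$$

Now, the $N \times N$ Q-matrix on the right side has the following upper bound:

$$\begin{bmatrix} Q & \cdots & Q \\ \vdots & & \vdots \\ Q & \cdots & Q \end{bmatrix} \le \begin{bmatrix} \gamma_1 Q & & 0 \\ & \ddots & \\ 0 & & \gamma_N Q \end{bmatrix} \tag{25}$$

$$\frac{1}{\gamma_1} + \cdots + \frac{1}{\gamma_N} = 1; \quad 0 \le \frac{1}{\gamma_i} \le 1. \tag{26}$$

The upper bound in (25) is "more positive definite" than the original matrix. That is, the upper bound minus the original matrix (the difference matrix) is positive semidefinite. Substituting this result in (22) then yields the following upper bound on the propagated global covariance $P$:

$$P \le \Phi P' \Phi^T + \begin{bmatrix} \gamma_1 G_1 Q G_1^T & & 0 \\ & \ddots & \\ 0 & & \gamma_N G_N Q G_N^T \end{bmatrix}.$$

Thus, setting the global process noise and propagated state error covariances to their upper bounds above, we obtain the following partition results:

$$P_{ii} = \Phi_{ii} P_{ii}' \Phi_{ii}^T + \gamma_i G_i Q G_i^T \tag{29}$$

$$P_{ji} = \Phi_{jj} P_{ji}' \Phi_{ii}^T = 0 \quad \text{if } P_{ji}' = 0. \tag{30}$$

Equations (29) and (30) represent a conservative result for the global time propagation step, in that the process noise covariance is replaced by a larger, upper bound. Thus, each local filter relies somewhat less on the propagated state value, and somewhat more on the latest measurements (consistent with good filter design practice, if not overdone). A similar upper bound to that of (25) can be placed on the initial value of the state covariance matrix, which is the other common element across the local partitions.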
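The process noise bound in (25) can be spot-checked numerically. The sketch below is an illustration under stated assumptions, not the paper's proof: it takes scalar $Q = 1$, assumes multipliers whose inverses sum to 1 (the condition the Appendix describes as an average inverse of $1/N$), and evaluates the quadratic form of the difference matrix on random vectors. The function names `bound_gap` and `check_bound` are hypothetical.

```python
import random


def bound_gap(gammas, v):
    """Quadratic form v^T (diag(gammas) - 1 1^T) v for scalar Q = 1.

    A non-negative value for every v means diag(gamma_i * Q) is an upper
    bound on the N x N matrix whose entries all equal Q.
    """
    return sum(g * vi * vi for g, vi in zip(gammas, v)) - sum(v) ** 2


def check_bound(gammas, trials=1000, seed=0):
    """Spot-check the bound on random vectors; return the smallest gap seen."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(trials):
        v = [rng.uniform(-1.0, 1.0) for _ in gammas]
        worst = min(worst, bound_gap(gammas, v))
    return worst


if __name__ == "__main__":
    print(check_bound([3.0, 3.0, 3.0]))   # equal sharing among N = 3 filters
    print(check_bound([2.0, 4.0, 4.0]))   # unequal sharing, inverses sum to 1
    # The gap closes when v_i is proportional to 1 / gamma_i.
    print(bound_gap([2.0, 4.0, 4.0], [0.5, 0.25, 0.25]))
    # Multipliers that are too small (inverses sum to 2) violate the bound.
    print(bound_gap([1.5, 1.5, 1.5], [1.0, 1.0, 1.0]))
```

A random spot check is not a proof; it only illustrates that the difference matrix behaves as positive semidefinite when the inverses of the multipliers sum to 1, and that the bound can fail when they sum to more than 1.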
The bound on the initial covariance likewise yields disjoint, conservatively larger values for the local filter initial covariances, also multiplied by the factor $\gamma_i$ (normally $N$). The net result of this upper-bounding approach for the state and process noise covariance matrices is quite significant:

1) initial covariance matrix bounds are uncorrelated by construction;
2) process noise covariance bounds are uncorrelated by construction;
3) the global state transition matrix introduces no cross-correlations;
4) local measurement updates introduce no cross-correlations;
5) independent local filter estimates can be combined to yield a globally optimal solution via the relatively simple method of (15) and (16).

Thus we have devised a conservative procedure whereby the global filter can be partitioned into a set of independent local filters, whose outputs are periodically combined by a master fusion filter.

While the above approach to bounding the common process statistics may seem rather heuristic, it can be rigorously justified: the upper bound (25) on the $N \times N$ Q-matrix is provable via determinants, and the full argument is given in the Appendix. Furthermore, it yields the globally optimal estimate when applied via the following procedure, where the average $\gamma_i$ factor is simply $N$:

1) initial local covariances are set to $\gamma_i \times$ the common system value;
2) local filters use $\gamma_i \times$ the common process noise covariance value;
3) local filters process own-sensor measurements via locally optimal (Kalman) algorithm;
4) master filter combines local filter solutions after each update cycle per (15) and (16);
5) master filter resets local filter states to master value, and local covariances to $\gamma_i \times$ master value.

For example, consider three local filters that share the same initial state estimate and the same process noise.
Each local filter multiplies the common initial covariance and process noise covariances by $\gamma_i = 3$ (dividing the information by 3). After several time steps, the three local filter solutions are combined as "independent" estimates via (15) and (16). Their information is thus summed, yielding $3 \times 1/3 = 1.0$ times the correct value. Next, each local filter incorporates measurements from its own sensor. Since these data sets are naturally disjoint, the correct information sum again results from their combined solutions. However, if the local filters pass through several time/measurement cycles before the master filter combines their solutions, then some of the available information is lost, and the master filter estimate will be conservatively suboptimal (see the Appendix).

In summary, the new federated filter design and operating procedures to obtain globally optimal estimation performance are these:

1) design local filters for stand-alone operation using local sensor measurements and reference system data (if locally needed);
2) multiply local filter covariances by $\gamma_i$ for common initial estimates and process noises (usually $\gamma_i = N$, the number sharing common information);
3) combine local filter state estimates via optimal fusion algorithm;
4) reset local filters with fused state and $\gamma_i$ times the fused covariance.

IV. FEDERATED SQUARE ROOT MECHANIZATION

To maximize computational efficiency, numerical stability, and effective precision, we wish to implement the federated filter structure in square root form. We can choose either covariance square root form, or information square root form, or a mix, to suit any particular application.

Covariance form is recommended here for real-time system applications. It is computationally advantageous when actual state and covariance outputs are required frequently, e.g., every filter cycle. It also readily supports fault detection via tests of measurement residuals (actual minus predicted values) as a normal part of the update process. However, other applications such as satellite orbit determination may favor information form.

We also recommend the $P = UDU^T$ factorization [9] for mechanizing square root filter algorithms. However, the equivalent square root representation $P = SS^T$ [10] is much simpler to use for analytical development. Once results have been derived in terms of $S$, they can readily be implemented in terms of the U-D factors.

We first define matrix square roots of the state error covariance $P$ ($n \times n$), the process noise covariance $Q$ ($p \times p$), and the measurement noise covariance $R$ ($m \times m$):

$$S = P^{1/2}; \quad W = Q^{1/2}; \quad V = R^{1/2}. \tag{31}$$

The following equations present the recommended mechanization of the new federated filter structure in covariance square root form, first the local filters and then the master filter. The symbol $T_U$ represents an orthogonal transformation operator, constructed to reduce the preceding matrix to upper triangular form and/or the minimum number of columns, via successive row rotations [9].

Local Filters ($i = 1, N$), from $t'$ to $t$:

$$\hat{x}_i = \Phi_{ii}\,\hat{x}_i' \tag{32}$$

$$[\,S_i \;\; 0\,] = [\,\Phi_{ii} S_i' \;\; G_i\,\gamma_i^{1/2} W_i\,]\,T_U \tag{33}$$

The square root measurement update (34) yields the factors $C_i$, $J_i$, and $S_i^+$, and the state update is

$$\hat{x}_i^+ = \hat{x}_i + J_i C_i^{-1}(z_i - H_i \hat{x}_i). \tag{35}$$

Master Fusion Filter ($m$), from $t'$ to $t$:

$$\hat{x}_m = \Phi_{mm}\,\hat{x}_m' \tag{36}$$

$$[\,S_m \;\; 0\,] = [\,\Phi_{mm} S_m' \;\; G_m W_m\,]\,T_U \tag{37}$$

Start: $\hat{x}_m = \hat{x}_1$; $S_m = S_1$. (38)

Do for $i = 2$ to $N$: form $H_m$ from the transformation matrix $L_{ci}$ per (39), set $V_m = S_i$, and process local solution $i$ as a measurement of the master state:

$$\hat{x}_m^+ = \hat{x}_m + J_m C_m^{-1}(\hat{x}_i - H_m \hat{x}_m). \tag{41}$$

Finish: for $i = 1, N$, reset the local filter states and covariance square roots from the master values per (43).

In (33), the multiplier $\gamma_i^{1/2}$ applies only to the common part of $W_i$. In (39), etc., $L_{ci}$ is a transformation matrix that allows for possible coordinate frame differences among the filters. In (43), subscripts $c$ and $b_i$ refer to the common and sensor-$i$ bias states. The scalar multipliers $\gamma_i^{1/2}$ will normally equal $N^{1/2}$, but can differ per (26). Note that the propagated master filter solution (36), (37) is not used in the optimal fusion algorithm (38) to (41); however, it has other uses. $S_i$ is lower triangular in (43).

V.
IMPLEMENTATION ALTERNATIVES

The implementation of the new federated filter presented in the previous section yields the globally optimal solution to the distributed estimation problem. However, there are many practical applications in which global optimality (maximum estimation accuracy) is not required. In such cases, the system designer may prefer to give up some accuracy in order to gain throughput (computation speed), fault tolerance, or real-time system simplicity.

Several alternative implementations of the new federated filter architecture can be devised to accommodate system design criteria besides strict optimality. In general, the new architecture provides a useful framework for assessing the tradeoffs among competing design criteria. The following paragraphs address some of them.

Data Compression/Rate Reduction: The new federated filter architecture in its fully optimal form already provides an N-fold speed-up over a single, global filter due to parallel processing by the N local filters (in multiprocessor systems). An additional speed increase is possible by using the local filters as prefilters to "compress" the local sensor data and thus reduce the master filter processing rate.

This data compression feature of the new federated design is possible because the local filters perform real, recursive filtering. They maintain their ability to smooth the noise in a sequence of measurements (though this ability is somewhat reduced by the process noise multiplier $\gamma_i$).

A major additional benefit of this data compression technique is that the master filter is no longer locked to the local filter processing cycles, but can operate at a selectable multistep rate. Hence, the master filter can readily accommodate differing local filter measurement rates, and even asynchronous local operations. It requires only that the local filter solutions be time-tagged or otherwise projectable to a common time point.
During these longer intervals between master filter updates, the master filter state and covariance can be propagated via (36) and (37), to provide higher rate federated solution outputs for use by other real-time system functions.

As discussed earlier, the master filter does ignore some potentially usable system information (knowledge of common process noises) when it combines local solutions on a multistep basis. However, the resultant estimates are quite valid, even though they fall short of globally optimal accuracy.

An attractive aspect of this multistep implementation is that it permits a direct trade between estimation accuracy and computation load. In fact, the federated filter can readily be implemented with an adjustable cycle rate, so that its performance can be tuned to different criteria under different operating conditions (e.g., mission phases).

Fault Tolerance: The new federated filter architecture supports system fault detection, identification, and recovery at several levels. First, since the local filters provide real, recursive filtering capability, they can perform legitimate and effective screening of local sensor measurements via residual checks. (The process noise multiplier somewhat reduces the "tightness" of these checks.)

Second, the master filter incorporates each local filter output except number 1 as a measurement, and computes a residual (master minus local state estimate) that can likewise be used for fault detection. In (41), $C_m^{-1}\Delta x$ is a vector of $n_i$ independent, unit-variance random numbers, for which fault thresholds can readily be defined. Note also that the propagated master filter solution (36), (37) can be employed to fault-check the number 1 filter solution used to start the fusion process in (38).

From a fault-tolerance viewpoint, the globally optimal federated filter implementation does exhibit one serious drawback.
In particular, feeding back the fused state estimates and covariances from the master to the local filters introduces the possibility of cross-contamination. A fault in one sensor subsystem, if undetected by both the local and master filters, will contaminate the fused solution. Feeding this solution back to the local filters will then contaminate each of them.

The new federated filter design provides a simple but suboptimal solution to the cross-contamination problem. This solution is simply to bypass the feedback of fused state and covariance data from the master to the local filters. In this implementation, the master filter still optimally combines the local filter solutions via the normal method. However, it does not then transmit fused solution data back to the local filters.

While suboptimal, this approach is theoretically correct, and all filter components maintain "honest" covariances. The resultant estimation accuracy falls short of the global optimum, but still exceeds that of any of the stand-alone local filters (provided the $\gamma_i$ factors are properly chosen). For many fault-tolerant system applications, this level of accuracy is quite good enough, and is more than offset by the increased fault detection, isolation, and recovery capabilities.

VI. CURRENT AND FUTURE APPLICATIONS

The new federated filter design is applicable to both Type A and Type B systems (defined in Section II), in one or more of its alternate implementations. Type B systems represent idealized future applications with total design flexibility; hence, the local filters can be made to cooperate in support of global performance criteria. For example, they can be designed to accept feedback of fused state and covariance data from the master filter, for maximum estimation accuracy.

Type A systems represent nearer-term applications.
Here, the local filters comprise essentially fixed designs, developed elsewhere for stand-alone operation. These filters may provide some flexibility in terms of adjustable model parameters (e.g., process noise strengths or system time constants), but will not accept data feedback from a master fusion filter.

The last federated filter implementation of the previous section is quite suitable for this Type A system application. While it cannot provide globally optimal estimation accuracy, it does improve on individual local filter performance. Furthermore, it provides a very high degree of fault tolerance.

One may ask how these fixed local filters will be affected by the multiplier $\gamma_i$ (normally $N$) on common process noise components. The answer is that this multiplier will generally be unnecessary. Most real-time filters are designed with conservative (excess) process noise, in order to keep the filter "open" to new measurement information. Hence a process noise multiplier is already present in the ratio of modeled to real noise levels.

VII.
CONCLUSION

The new federated filter architecture developed in this paper provides a number of significant advantages for real-time distributed system applications:

1) flexible implementation to support a variety of system requirements and facilitate performance trades;
2) globally optimal estimation accuracy in single-step implementation with feedback to local filters;
3) conservatively suboptimal estimation accuracy in multistep implementation with feedback;
4) increased throughput due both to parallel local filter processing, and to sensor data compression via effective local prefiltering;
5) multilevel fault detection, isolation and recovery capability, highly fault tolerant in no-feedback implementation with totally independent local filter solutions;
6) minimal data transfer requirements: filter states and covariances only;
7) efficient, numerically stable implementation in covariance (or information) square root form;
8) simple real-time implementation due to independent local filter operations, particularly in no-feedback, fault-tolerant mode;
9) application to distributed systems with both fixed (current) and cooperative (future) local filters.

Overall, this new federated filtering method seems quite attractive for a variety of real-time system applications, including distributed navigation systems. Several of its alternate implementations warrant further analysis and testing.

APPENDIX. THEORETICAL FOUNDATION

This Appendix presents a more rigorous derivation of the new federated filtering method outlined in Section III. We prove single-step global optimality and define the multistep information loss.

Consider the sequential system dynamics and discrete measurement processes described by (1) to (8).
The globally optimal state estimate $\hat{x}$ for this system minimizes the quadratic cost index (A1) [6], where $S$, $W$, and $V$ are square roots of the state error, process noise, and measurement noise covariance matrices. There are $N$ independent sensors designated by index $i$. Index $j = 1, k$ refers to successive time steps.

Rewrite (A1) so that all terms have index $i$ partitions, giving (A2). The matrices $S_{0i}$ and $W_{ij}$ represent $i = 1, N$ scalar multiples of the original matrices $S_0$ and $W_j$, constructed per (A3) so that their root-sum-squares equal the original values. Note that the multipliers $\gamma_i$ are 1.0 or larger; their average inverse equals $1/N$.

The optimal solution $\hat{x}_i$ for each local problem can be determined independently at each step $k$, by applying a series of orthogonal transformations. The time propagation transformation is performed first, and then the measurement transformation. The result for local partition $i$ after the first complete step, i.e., at $k = 1+$, is the three-term cost expression (A4). The measurement update values $S_i^+$, $C_i$, and $\hat{x}_i^+$ are given by (34) and (35), and $\Delta z_i = z_i - H_i \hat{x}_i$ is the calculated residual. The remaining matrices at $k = 1$ are determined per (A5).

From (A4) we see that the locally optimal value of $x_i$ is $\hat{x}_i^+$, which zeroes the first cost index term. The second term is the irreducible measurement residual (a constant). The third term is the time propagation residual which, given $x$, can be zeroed by proper choice of $u$.

The next step is the crucial one. To obtain the globally optimal solution, we must find the single value of $x$ that minimizes the total cost index. This total is the sum over $i$ of the local terms (A4), which can be written in similar form with composite $N$-row terms as (A6). For simplicity, we omit the measurement residual terms, which do not affect the result.
The value of $x$ that minimizes the first term in (A6) can be determined by the orthogonal transformation (A7). Following this transformation, the first term in (A6) becomes

$$(A6)_1 = \|S_m^{-1}(\hat{x}_m - x)\|^2 + \|r_m\|^2. \tag{A8}$$

These values of $S_m$ and $\hat{x}_m$ are equivalent to the optimal values in (15) and (16).

Choosing $x$ to zero the first term of (A8) is the apparent next step. However, we must still take into account the second term in (A6). Because of the initial construction (A3), the $N$ rows of this matrix are all identical except for the scalar multipliers $1/\gamma_i^{1/2}$. They thus readily reduce (RSS) to a single row (A9), with multiplier equal to $[\sum_i (1/\gamma_i^{1/2})^2]^{1/2} = 1.0$.

This second term from (A6) thus reduces to exactly the same form that would have resulted, had the single, globally optimal estimate been propagated to begin with. Thus, dividing the global problem into $N$ local problems does yield the globally optimal solution, provided that 1) the common covariances are rescaled per (A3), and 2) the local solutions are recombined after each measurement update cycle.

What happens if we don't recombine the local estimates after each measurement cycle, but propagate them as independent local estimates over several cycles? The answer is evident from the second term in (A6). After several local filter cycles, the rows of that second term are no longer simply related by scalar multipliers, but differ due to disjoint measurement information from their local sensors. Via a triangularization process similar to (A7), we can reduce that $N$-row matrix to three rows, with this general result:

$$(A6)_2 = \|A_1 u + B_1 x - C_1\|^2 + \|B_2 x - C_2\|^2 + \|C_3\|^2. \tag{A10}$$

Here, the first term can be zeroed by proper choice of $u$, given $x$. The third is an irreducible residual. The second term is of the same form as the measurement terms $Hx - z$ in (A1). This "measurement" involves $x$, and in theory could be combined with (A8) to improve the estimate of $x$.
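The two provisos above, and the multistep loss, can be illustrated with a scalar covariance-only sketch. This is an assumption-laden toy model rather than the paper's mechanization: it takes $\Phi = G = H = 1$, equal sharing $\gamma_i = N$, hypothetical noise values, and it tracks only the computed variances, not the state estimates. The function names are illustrative.

```python
def global_step(p, q, r_list):
    """One propagate/update cycle of a single global scalar filter."""
    p_prop = p + q
    return 1.0 / (1.0 / p_prop + sum(1.0 / r for r in r_list))


def local_step(p_i, q, r_i, gamma):
    """One cycle of a local filter using gamma times the common process noise."""
    p_prop = p_i + gamma * q
    return 1.0 / (1.0 / p_prop + 1.0 / r_i)


def fuse(p_locals):
    """Fused variance obtained by adding the local information values."""
    return 1.0 / sum(1.0 / p for p in p_locals)


def run(p0, q, r_list, steps, fuse_every):
    """Return (global variance, federated fused variance) after `steps` cycles."""
    n = len(r_list)
    gamma = float(n)                 # equal sharing: gamma_i = N
    p_glob = p0
    p_loc = [gamma * p0] * n         # initial covariances scaled by gamma
    for k in range(1, steps + 1):
        p_glob = global_step(p_glob, q, r_list)
        p_loc = [local_step(p, q, r, gamma) for p, r in zip(p_loc, r_list)]
        if k % fuse_every == 0:
            # Master fuses, then resets each local variance to gamma x fused.
            p_loc = [gamma * fuse(p_loc)] * n
    return p_glob, fuse(p_loc)


if __name__ == "__main__":
    sensors = [1.0, 4.0]             # hypothetical measurement variances
    print(run(1.0, 1.0, sensors, steps=2, fuse_every=1))  # fuse every cycle
    print(run(1.0, 1.0, sensors, steps=2, fuse_every=2))  # fuse every 2nd cycle
```

With fusion and reset after every cycle, the fused variance matches the global filter's variance to rounding error; with fusion only after two cycles, the fused variance comes out larger, which is the conservative multistep behavior the text describes. Unequal sensor variances are used on purpose, since with identical sensors the two schedules coincide in this scalar model.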
A similar term arises at each propagation step. Thus, operating the local filters independently over several steps is equivalent to ignoring an available measurement (of process noise dimension $p$) at each such step. The resulting solution is quite valid but conservatively suboptimal, as it is for any filter that selectively uses part but not all of the information potentially available to it.

REFERENCES

[1] Speyer, J. L. (1979) Computation and transmission requirements for a decentralized linear-quadratic-Gaussian control problem. IEEE Transactions on Automatic Control, AC-24, 2 (Apr. 1979).

[2] Chang, T. S. (1980) Comments on 'Computation and transmission requirements for a decentralized linear-quadratic-Gaussian control'. IEEE Transactions on Automatic Control, AC-25, 3 (June 1980).

[3] Willsky, A. S., Bello, M. G., Castanon, D. A., Levy, B. C., and Verghese, G. C. (1982) Combining and updating of local estimates and regional maps along sets of one-dimensional tracks. IEEE Transactions on Automatic Control, AC-27, 4 (Aug. 1982).

[4] Levy, B. C., et al. (1983) A scattering framework for decentralized estimation problems. Automatica, 19, 4 (Apr. 1983).

[5] Castanon, D. A., and Teneketzis, D. (1985) Distributed estimation algorithms for nonlinear systems. IEEE Transactions on Automatic Control, AC-30 (May 1985).

[6] Bierman, G. J., and Belzer, M. (1985) A decentralized square root information filter/smoother. In Proceedings of the 24th IEEE Conference on Decision and Control, Ft. Lauderdale, FL, Dec. 1985.

[7] Kerr, T. H. (1985) Decentralized filtering and redundancy management/failure detection for multisensor integrated navigation systems. Presented at the Institute of Navigation National Meeting, San Diego, CA, Jan. 1985.

[8] Hashemipour, H. R., Roy, S., and Laub, A. (1987) Decentralized structures for parallel Kalman filtering. Presented at the International Federation of Automatic Control 10th World Congress, July 1987.

[9] Bierman, G. J.
(1977) Factorization Methods for Discrete Sequential Estimation. New York: Academic Press, 1977.

[10] Carlson, N. A. (1973) Fast triangular formulation of the square root filter. AIAA Journal, 11, 9 (Sept. 1973).

Neal A. Carlson earned his B.S.E. in aeronautical engineering from Princeton University, Princeton, NJ and his Ph.D. in aeronautics and astronautics from Massachusetts Institute of Technology, Cambridge, MA. Dr. Carlson is the founder and president of Integrity Systems, a small aerospace engineering firm located in Winchester, MA. Integrity Systems specializes in highly reliable avionics systems, software testing tools, and real-time software. Neal worked for Intermetrics in Cambridge, MA from 1970 to 1983, where he focused on integrated navigation systems, fault-tolerant avionics, and Kalman filtering. He was a major contributor to the Space Shuttle fault-tolerant avionics system architecture, and to the GPS Phase I user navigation system. His triangular square root filter formulation was the first stable yet efficient mechanization of the Kalman filter, and the forerunner of today's widely used U-D mechanization. With Integrity Systems since 1983, Neal has developed a new distributed Kalman filtering method for multisensor navigation systems. This new method provides notable advantages over conventional methods in speed, fault tolerance, and real-time system simplicity.