Notes 15 - Wharton Statistics Department

advertisement

Stat 921 Notes 15

Reading: Chapter 4.1-4.3

I. Sensitivity to Hidden Bias

1959:

Fidel Castro takes over Cuba.

Hawaii becomes a state.

First satellite to land on moon (no human in space

yet)

Health risks of smoking still very controversial.

A matched pair study had been conducted that matched

smokers to nonsmokers on the basis of age, race, nativity,

rural versus urban residence, occupational exposure to

dusts and fumes, religion, education, marital status, alcohol

consumption, sleep duration, exercise, severe nervous

tension, use of tranquilizers, current health, family history

of cancer other than skin cancer and family history of heart

disease, stroke and high blood pressure (study published as

Hammond, 1964). Of the 36,975 pairs, there were 122

pairs in which exactly one person died of lung cancer. Of

these, there were 12 pairs in which the nonsmoker died of

lung cancer and 110 pairs in which the heavy smoker died

of lung cancer. In this study heavy smokers are more than

9 times as likely as nonsmokers to develop lung cancer.

For a matched pair randomized experiment with a binomial

outcome, we can test the null hypothesis that the treatment

1

(0)

(1)

does not have a causal effect on any unit, Yi Yi for all

i , with McNemar’s test:

The test statistic is the number of discordant pairs (pairs in

which the outcome differs between the members of the

pair) in which the treated unit has the outcome 1 and the

control has the outcome 0. Under the null hypothesis, this

test statistic has the binomial distribution with probability

0.5 and number of trials equal to the number of discordant

pairs.

For the smoking study, the test statistic is 110, where there

are 122 discordant pairs and the p-value for a one-sided test

is P(T 110 | T ~ Binomial (0.5,122)) 0.0001 . Thus, if

this study were a randomized study, there would be strong

evidence that smoking causes lung cancer.

But in an observational study, there is always a concern

about unmeasured confounders.

The famous statistician R.A. Fisher, the inventor of

randomized experiments, raised the concern that there

might be a gene that both makes a person more likely to

smoke and to develop lung cancer. Fisher thought the

observational studies on smoking were unconvincing.

Can anything more be said about an observational study

beyond association is not causation?

Cornfield et al. (1959): “If cigarette smokers have 9 times

the risk of nonsmokers for developing lung cancer and this

is not because cigarette smoke is a causal agent, but only

2

because cigarette smokers produce hormone X, then the

proportion of hormone X-producers among cigarette

smokers must be at least 9 times greater than that of

nonsmokers. If the relative prevalence of hormone Xproducers is considerably less than ninefold, then hormone

X cannot account for the magnitude of the apparent effect.”

This statement is an important conceptual advance beyond

the familiar fact that association does not imply causation.

A sensitivity analysis is a specific statement about the

magnitude of hidden bias that would need to be present to

explain the associations actually observed in a particular

study. Weak associations in small studies can be explained

away by very small biases, but only a very large bias can

explain a strong association in a large study.

A Model for Sensitivity Analysis

We say that a study is free of hidden bias if the probability

j that unit j receives the treatment is a function ( x j ) of

the observed covariates x j describing the unit. There is

hidden bias if two units with the same observed covariates

x have different chances of assignment to treatment.

A sensitivity analysis asks: How would inferences about

treatment effects be altered by hidden biases of various

magnitudes? Suppose the ’s differ at a given x . How

large would these differences have to be to alter the

qualitative conclusions of a study?

3

Suppose we have units with the same x but possibly

different ’s, so x j xk but possibly j k . Then units

j and k might be matched to form a matched pair to

control overt bias due to x . The odds that units j and k

receive the treatment are j /(1 j ) and

k /(1 k ) respectively and the odds ratio is the ratio of

these odds. Imagine that we knew that this odds ratio for

units with the same x was at most some number 1

1 j (1 k )

for all j , k with x j xk

k (1 j )

(1.1)

If 1 , then the study is free of hidden bias. For >1

there is hidden bias. is a measure of the degree of

departure from a study that is free of hidden bias.

The model expressed in terms of an unobserved covariate:

When speaking of hidden biases, we commonly refer to

characteristics that were not observed, that are not in x ,

and therefore were not controlled by adjustments for x

(e.g., matching on x ). We now reexpress the sensitivity

analysis model in terms of an unobserved covariate, say u,

that should have been controlled along with x but was not

controlled because u was not observed. Unit j has both an

observed covariate x j and an unobserved covariate u j .

The model has two parts, a logit form linking treatment

assignment S j to the covariates ( x j , u j ) and a constraint

on u j , namely

4

j

log

1

j

( x j ) u j with 0 u j 1

(1.2)

where () is an unknown function and is an unknown

parameter.

The following proposition says that the inequality (1.1) is

the same as the model (1.2).

Proposition:

With e 1 , there is a model of the form (1.2) that

describes the 1 , , N (where there are N subjects) if and

only if (1.1) is satisfied.

Proof: Assume the model (1.2) holds. Then

1 u[ j ] u[ k ] 1 . Note that under (1.2),

[ j ] (1 [ k ] )

exp (u[ j ] u[ k ] ) if x[ j ] x[ k ] .

[ k ] (1 [ j ] )

Combining the last two facts ,

[ j ] (1 [ k ] )

exp( )

exp( ) if x[ j ] x[ k ] .

[ k ] (1 [ j ] )

In other words, if (1.2) holds, then (1.1) holds.

Conversely, assume the inequality (1.1) holds. For each

value x of the observed covariate, find that unit k with

x[ k ] x having the smallest , so

[ k ] min [ j ] ;

{ j: x[ j ] x }

5

then set ( x ) log{ [ k ] /(1 [ k ] )} and u[ k ] 0 . If 1 ,

then x[ j ] x[ k ] implies [ j ] [ k ] , so set u[ j ] 0 . If 1

and there is another unit j with the same value of x , then

set

u[ j ]

( x) 1

(1 [ k ] )

log [ j ]

log [ j ]

1

(1 ) (1.3)

[ j]

[ j]

[k ]

1

Now (1.3) implies the logit form in (1.2). Since [ j ] [ k ] ,

it follows that u[ j ] 0 . Using (1.1) and (1.3), it follows

that u[ j ] 1 . So the constrain on u[ j ] in (1.2) holds.

II. The Distribution of Treatment Assignments

As in Chapter 3 of the book (Notes 7), we consider

grouping units into strata on the basis of the covariate x ,

e.g., matched pairs or matched sets. Under the sensitivity

analysis model, the conditional distribution of the treatment

assignment Z ( Z11 , , Z S ,nS ) given m is no longer

constant, as it was in Chapter 3.2.2 for a study free of

hidden bias. Instead, it is

S

exp( z T u)

exp( z T u)

P( Z z | m )

T

T

exp(

b

u

)

s 1 exp( b u) (1.4)

b

b s

6

where z ( z1 ,

containing the

, zS ), u (u1 ,

ns

ms

, uS ) and s is the set

different ns tuples with ms ones and

ns ms zeros.

(1.4) says that given m , the distribution of treatment

assignments no longer depends on the unknown function

( x ) but still depends on the unobserved covariate u. In

words, stratification on x was useful in that it eliminated

part of the uncertainty about the unknown ’s, specifically

the part due to ( x ) , but stratification on x was

insufficient to render all treatment assignments equally

probable.

If ( , u) were known, the distribution (1.4) could be used

as a basis for randomization inference. Since ( , u) is not

known, a sensitivity analysis will display the sensitivity of

inferences to a range of assumptions about ( , u) .

Specifically, for several values of , the sensitivity

analysis will determine the most extreme inferences that are

possible for u in the N -dimensional unit cube

U [0,1]N .

II. Sensitivity of Significance Levels: The General Case

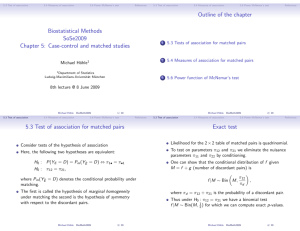

A transformation of McNemar’s test statistic of no

treatment effect for binary outcomes in a matched pairs

experiment and Wilcoxon’s signed rank statistic of no

7

treatment effect for an additive treatment effect model in a

matched pairs experiment both have the following form,

S

2

s 1

i 1

T t ( Z , r ) d s csi Z si

(1.5)

where csi is binary, csi 1 or 0 , and both d s 0 and csi are

functions of r , and so are fixed under the null hypothesis

of no treatment effect. The class of such test statistics are

called sign score statistics.

McNemar’s test as a sign score statistic: For binary

outcomes, let d s 1 and csi 1 or 0 according to whether

Rsi is 1 or 0. Then, t ( Z , r ) is the number of treated units

who have an outcome of 1. A pair is concordant if

cs1 cs 2 and is discordant if c j1 c j 2 . No matter how the

treatment is assigned within pair j, if both units have an

outcome of 0, then the pair contributes 0 to t ( Z , r ) and if

both units have an outcome of 1, then the pair contributes 1

to t ( Z , r ) , so in either case a concordant pair contributes a

fixed quantity to t ( Z , r ) . Removing concordant pairs

from consideration subtracts a fixed quantity from

t ( Z , r ) under the null hypothesis and does not alter the

significance level. Therefore, we can set concordant pairs

aside before computing t ( Z , r ) and we arrive at McNemar’s

statistic.

Wilcoxon signed rank statistic as a sign score statistic:

Recall that Wilcoxon’s signed rank statistic of no treatment

8

effect for S matched pairs is computed by ranking the

absolute differences | rs1 rs 2 | from 1 to S and summing the

ranks in pairs in which the treated unit had a higher

response than the control. In the notation of the sign score

statistic, d s is the rank of | rs1 rs 2 | with average rank used

for ties and

cs1 1, cs 2 0 if rs1 rs 2 ,

cs1 0, cs 2 1 if rs1 rs 2

cs1 0, cs 2 0 if rs1 rs 2 .

Sensitivity of significance levels: In a randomized

experiment, t ( Z , r ) is compared to its randomization

distribution under the null hypothesis, but that is not

possible under the sensitivity analysis model (1.4)

in which ( , u) is unknown. Specifically, for each

possible ( , u) , the statistic t ( Z , r ) is the sum of S

independent random variables, where the j th random

variable equals d s with probability

c exp( us1 ) cs 2 exp( us 2 )

ps s1

exp( us1 ) exp( us 2 )

and equals 0 with probability 1 ps . A pair is said to be

concordant if cs1 cs 2 . If cs1 cs 2 1 , then ps 1 while

if cs1 cs 2 0 , then ps 0 so concordant pairs contributed

a fixed quantity to t ( Z , r ) for all possible ( , u) .

Though the null distribution of t ( Z , r ) is unknown, for

each fixed , the null distribution is bounded by two

9

known distributions. With exp( ) , define ps and ps

in the following way:

0

0

if cs1 cs 2 0

if cs1 cs 2 0

ps 1

if cs1 cs 2 1 and ps 1

if cs1 cs 2 1

1

if cs1 cs 2

if cs1 cs 2

1

1

Then using the constraint on us in (1.2), it follows that

ps ps ps for s 1,..., S . Define T to be the sum of S

random variables where the jth random variable takes the

value d s with probability ps and takes the value 0 with

probability 1 ps . Define T similarly with ps in place of

ps . The following proposition says that for all

u U [0,1]N , the unknown null distribution of the test

statistic T t ( Z , r ) is bounded by the distributions of

T and T .

Proposition 1: If the treatment has no effect, then for each

fixed 0 ,

P(T a) P(T a) P(T a)

for all a and u U .

For each , Proposition 1 places bounds on the

significance level that would have been appropriate had u

been observed. The sensitivity analysis for a significance

level involves calculating these bounds for several values

of .

10

Note that the bounds in Proposition 1 are attained for two

values of u U and this has two practical consequences.

Specifically, the upper bound P(T a) is the distribution

of T t ( Z , r ) when usi csi and the lower bound

P(T a) is the distribution of T t ( Z , r ) when

usi 1 csi . The first consequence is that bounds in

Proposition 1 are the best possible bounds: they cannot be

improved unless additional information is given about the

value of u U . Second, the bounds are attained at values

of u which perfectly predict the signs. For McNemar’s

statistic and Wilcoxon’s signed rank statistic, this means

that the bounds are attained for values of u that exhibit a

strong, near perfect relationship with the response y .

The bounding distributions of T and T have easily

calculated moments. For T , the expectation and variance

are

S

E (T ) d s p

s 1

s

S

and Var (T ) d s2 ps (1 ps ) (1.6)

s 1

For T , the expectation and variance are given by the same

formulas with ps in place of ps . As the number of pairs

S increases, the distributions of T and T are

approximated by Normal distributions, provided the

number of discordant pairs increases with S .

IV. Sensitivity Analysis for McNemar’s Test and the

Smoking Study

11

Recall that for the smoking study there are 36,975 pairs,

122 in which exactly one person died of lung cancer. Of

these, there were 12 pairs in which the nonsmoker died of

lung cancer and 110 pairs in which the heavy smoker died

of lung cancer.

Let d s 1 and csi 1 or 0 according to whether rsi is 1 or 0

in. Then t ( Z , r ) is the number of treated units who have an

outcome of 1. As discussed above, we can remove the

concordant pairs (pairs in which the outcomes in the treated

and control units do not affect do not affect the distribution)

without changing the null distribution of t ( Z , r ) . With the

concordant pairs removed, T and T have binomial

distributions with 122 trials and probability of success

p /(1 ) and p 1/(1 ) respectively. Under the

null hypothesis of no effect of smoking, for each 0 ,

Proposition 1 gives an upper and lower bound on the

significance level, P (T 110) , namely for all u U ,

122 a

122 a

(

p

)

(1

p

)

P(T 110)

a

a 110

122 122

a

122 a

( p ) (1 p )

a 110 a

122

(1.7)

In a randomized experiment or a study free of hidden bias,

1

p

p

the sensitivity parameter is 0 so

2 and the

12

upper and lower bounds in (1.7) are equal, and both bounds

give the usual significance level for McNemar’s test

statistic. For 0 , (1.7) gives a range of significance

levels reflecting uncertainty about u .

#### Sensivitity Analysis for McNemar's Test Statistic

#### Let D be the number of discordant pairs

#### Tobs be the number of discordant pairs in which treated unit has a 1

#### and Gamma be the sensitivity parameter exp(gamma)

sens.analysis.mcnemar=function(D,Tobs,Gamma){

p.positive=Gamma/(1+Gamma);

p.negative=1/(1+Gamma);

lowerbound=1-pbinom(Tobs-1,D,p.negative);

upperbound=1-pbinom(Tobs-1,D,p.positive);

list(lowerbound=lowerbound,upperbound=upperbound);

}

Sensitivity Analysis of Smoking Study: Range of

Significance Levels for Hidden Bias of Various

Magnitudes

Minimum

Maximum

1

<0.0001

<0.0001

2

<0.0001

<0.0001

3

<0.0001

<0.0001

4

<0.0001

0.0036

5

<0.0001

0.03

6

<0.0001

0.1

13

For 4 , one person in a pair may be four times as likely

to smoke as the other because they have different values of

the unobserved covariate u . In the case 4 , the

significance level might be less than 0.0001 or it might be

as high as 0.0036, but for all u U , the null hypothesis of

no effect of smoking on lung cancer is not plausible. The

null hypothesis of no effect begins to become plausible for

at least some u U with 6 . To attribute the higher

rate of death from lung cancer to an unobserved covariate

u rather than to the effect of smoking, that unobserved

covariate would need to produce a sixfold increase in the

odds of smoking, and it would need to be a near perfect

predictor of lung cancer.

14