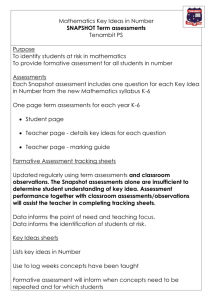

Spiral assessments
