Complexity

Complexity Revisited

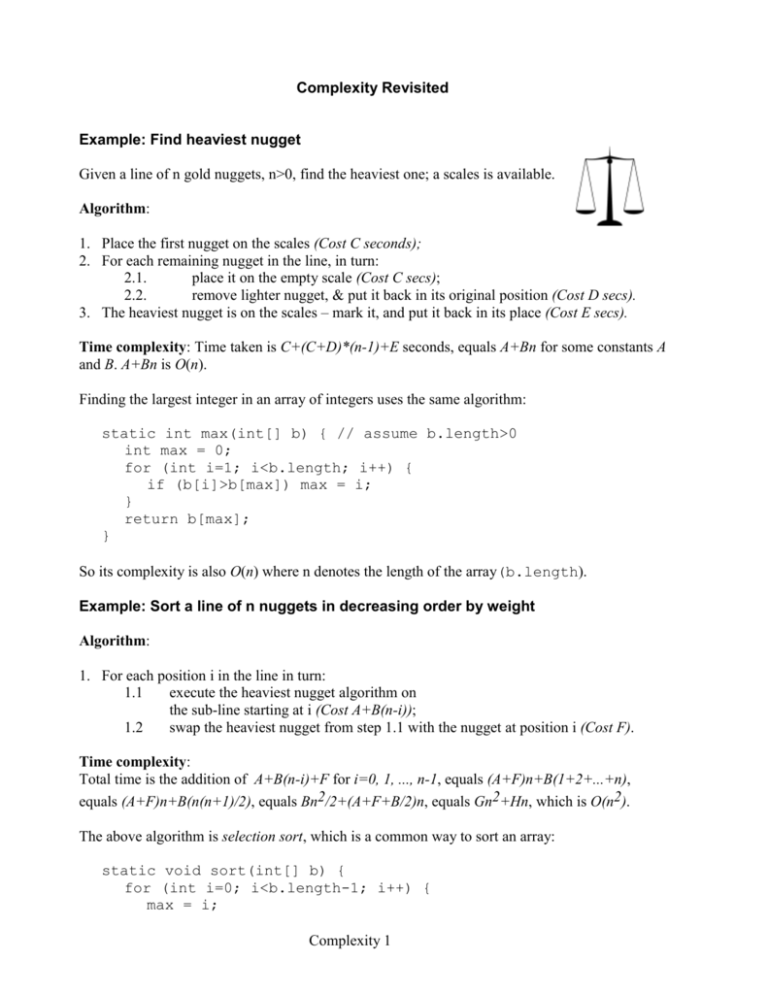

Example: Find heaviest nugget

Given a line of n gold nuggets, n>0, find the heaviest one; a pair of scales is available.

Algorithm:

1. Place the first nugget on the scales (Cost C seconds);

2. For each remaining nugget in the line, in turn:

2.1. place it on the empty scale (Cost C secs);

2.2. remove the lighter nugget, and put it back in its original position (Cost D secs).

3. The heaviest nugget is on the scales – mark it, and put it back in its place (Cost E secs).

Time complexity: Time taken is C+(C+D)*(n-1)+E seconds, which equals A+Bn for the constants A = E-D and B = C+D. A+Bn is O(n).

Finding the largest integer in an array of integers uses the same algorithm:

    /** Return the largest value in b. Precondition: b.length > 0. */
    static int max(int[] b) {
        int max = 0;  // index of the largest value seen so far
        for (int i = 1; i < b.length; i++) {
            if (b[i] > b[max]) max = i;
        }
        return b[max];
    }

So its complexity is also O(n), where n denotes the length of the array (b.length).
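The linear cost can be observed directly by counting comparisons. The following is a minimal sketch, not part of the original notes: the class name `MaxComparisons` and the `comparisons` counter are assumptions added purely for illustration.

```java
final class MaxComparisons {
    /** Comparisons made by the most recent call to max (illustrative counter, not in the notes). */
    static int comparisons;

    /** Same algorithm as above, instrumented to count comparisons. Assumes b.length > 0. */
    static int max(int[] b) {
        comparisons = 0;
        int max = 0; // index of the largest value seen so far
        for (int i = 1; i < b.length; i++) {
            comparisons++;
            if (b[i] > b[max]) max = i;
        }
        return b[max];
    }

    public static void main(String[] args) {
        int[] b = {3, 9, 4, 1, 7};
        // An array of length n always costs exactly n-1 comparisons.
        System.out.println(max(b) + " found with " + comparisons + " comparisons");
    }
}
```

For an array of length n the counter always ends at n-1, whatever the contents, which matches the A+Bn form of the nugget calculation.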

Example: Sort a line of n nuggets in decreasing order by weight

Algorithm:

1. For each position i in the line in turn:

1.1. execute the heaviest nugget algorithm on the sub-line starting at i (Cost A+B(n-i));

1.2. swap the heaviest nugget from step 1.1 with the nugget at position i (Cost F).

Time complexity: Total time is the sum of A+B(n-i)+F for i=0, 1, ..., n-1, which equals (A+F)n+B(1+2+...+n), which equals (A+F)n+B(n(n+1)/2), which equals Bn²/2+(A+F+B/2)n. Writing G = B/2 and H = A+F+B/2, this is Gn²+Hn, which is O(n²).

The above algorithm is selection sort, which is a common way to sort an array:

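    /** Sort b into decreasing order using selection sort. */
    static void sort(int[] b) {
        for (int i = 0; i < b.length - 1; i++) {
            int max = i;  // index of the largest value in b[i..]
            for (int j = i + 1; j < b.length; j++) {
                if (b[j] > b[max]) max = j;
            }
            // Swap the largest remaining value into position i.
            int t = b[i]; b[i] = b[max]; b[max] = t;
        }
    }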

So the time complexity of selection sort is O(n²), where n = b.length.
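The quadratic growth can be checked by counting the comparisons the inner loop makes. This is a sketch under stated assumptions: the class name `SelectionSortCount`, the `comparisons` counter, and the test data are illustrative and do not come from the notes.

```java
final class SelectionSortCount {
    /** Comparisons made by the most recent call to sort (illustrative counter). */
    static long comparisons;

    /** Selection sort into decreasing order, instrumented to count comparisons. */
    static void sort(int[] b) {
        comparisons = 0;
        for (int i = 0; i < b.length - 1; i++) {
            int max = i; // index of the largest value in b[i..]
            for (int j = i + 1; j < b.length; j++) {
                comparisons++;
                if (b[j] > b[max]) max = j;
            }
            int t = b[i]; b[i] = b[max]; b[max] = t;
        }
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            int[] b = new int[n];
            for (int k = 0; k < n; k++) b[k] = (k * 37) % n; // arbitrary contents
            sort(b);
            long expected = (long) n * (n - 1) / 2;
            System.out.println("n=" + n + ": " + comparisons
                    + " comparisons, n(n-1)/2 = " + expected);
        }
    }
}
```

The inner loop runs n-1, n-2, ..., 1 times, so the count is n(n-1)/2 regardless of the contents: multiplying n by 10 multiplies the work by roughly 100.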

Big-Oh notation

Big-Oh notation tells us how a function f(n) grows as n increases. In our case, that translates into the growth of an algorithm’s execution time as the problem size increases.

| Function | O-notation | In words | How good? | Sample time for n=1000 |
|---|---|---|---|---|
| A | O(1) | constant | almost instantaneous | .0001 secs |
| A+B(log₂ n) | O(log n) | log | stupendously fast | .001 secs |
| A+B√n | O(√n) | square root | very fast | .03 secs |
| A+Bn | O(n) | linear | fast | .1 secs |
| A+B(log₂ n)+Cn | O(n) | linear | fast | .1001 secs |
| A+Bn+Cn(log₂ n) | O(n log n) | n-log-n | pretty fast | 1 sec |
| A+Bn+Cn² | O(n²) | quadratic | slow for large n | 1 min 40 secs |
| A+Cn²+Dn³ | O(n³) | cubic | slow for moderate n | 28 hours |
| A+B·2ⁿ | O(2ⁿ) | exponential | impossibly slow | millennia |

Let f(n) and g(n) be functions from the naturals to the reals. We say that eventually f(n)≥g(n) if f(n)≥g(n) for all n after a certain point. A function f(n) is said to be O(g(n)) if eventually Cg(n)≥f(n) for some positive constant C. For example, 2+3n+5n² is O(n²) because 7n²≥2+3n+5n² for all n≥3 (the choice of 7 is arbitrary; any constant greater than 5 works). The function 3ⁿ is not O(n²) because it can be shown that for any constant C, 3ⁿ>Cn² for all but small values of n. O-notation does not necessarily give a tight upper bound, e.g. the function 10+20n is O(n) but it is also O(n²).
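The two claims in the definition can be spot-checked numerically. This is only a sketch: a finite check illustrates the definition but does not prove it, and the class `BigOhCheck` and its method names are hypothetical.

```java
final class BigOhCheck {
    /** The example function f(n) = 2 + 3n + 5n^2. */
    static long f(long n) { return 2 + 3 * n + 5 * n * n; }

    /** True if 7n^2 >= f(n) for every n in [from, to]. */
    static boolean boundHolds(long from, long to) {
        for (long n = from; n <= to; n++) {
            if (7 * n * n < f(n)) return false;
        }
        return true;
    }

    /**
     * Largest n in 1..39 with 3^n <= c*n^2, or 0 if there is none.
     * The range stops at 39 because 3^40 overflows a long.
     */
    static int lastFailure(long c) {
        int last = 0;
        long pow = 1;
        for (int n = 1; n <= 39; n++) {
            pow *= 3; // pow == 3^n
            if (pow <= c * n * n) last = n;
        }
        return last;
    }

    public static void main(String[] args) {
        System.out.println("7n^2 >= f(n) for 3 <= n <= 10000: " + boundHolds(3, 10000));
        // However large C is, 3^n overtakes C*n^2 after a few more steps.
        for (long c : new long[] {1, 1000, 1_000_000}) {
            System.out.println("C=" + c + ": 3^n > C*n^2 for all checked n > " + lastFailure(c));
        }
    }
}
```

Raising C from 1000 to 1,000,000 moves the crossover point only from n=10 to n=17, which is why no constant can make Cn² an eventual upper bound for 3ⁿ.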

[Figure: curves f and g plotted against n, with a point k marked.]

Best case, worst case, average case

The time complexity of an algorithm on an array (or any data structure) may also depend on its contents. In that case we may make one of three simplifying assumptions. Best case: assume the contents are as favourable as possible. Worst case: assume the contents are as unfavourable as possible. Average case: assume the contents are chosen at random, with all possible contents equally likely. The average case assumption is the most useful, but it is often important also to know how bad the execution time can be, and the worst case assumption is used for that.
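A left-to-right search is a simple algorithm whose cost depends on the contents, so it can illustrate the three cases. This example is not from the notes: the class `SearchCases` and its helpers are hypothetical, and the average assumes the target is equally likely to be any element of the array.

```java
final class SearchCases {
    /** Number of elements examined by a left-to-right search for v in b. */
    static int probes(int[] b, int v) {
        int count = 0;
        for (int x : b) {
            count++;
            if (x == v) break; // found: stop searching
        }
        return count;
    }

    /** Average probes when the target is equally likely to be any element of b. */
    static double averageProbes(int[] b) {
        long total = 0;
        for (int v : b) total += probes(b, v);
        return (double) total / b.length;
    }

    public static void main(String[] args) {
        int[] b = {4, 8, 15, 16, 23, 42};
        System.out.println("best case:    " + probes(b, 4));     // target is first
        System.out.println("worst case:   " + probes(b, 99));    // target is absent
        System.out.println("average case: " + averageProbes(b)); // (1+2+...+6)/6
    }
}
```

With n distinct elements the best case is 1 probe, the worst case is n, and the average under this assumption is (n+1)/2, so the best case is O(1) while the worst and average cases are O(n).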

Time complexity of recursive methods

Roughly, the time complexity of a recursive method f is the cost of executing the body of f, excluding any recursive calls, times the total number of calls. This assumes that the cost of executing the body is the same for each invocation, which is commonly the case. For example, the cost of invoking power(m,n) (first version) is O(n) because it involves n calls of power, and the cost of each call is (more or less) constant. On the other hand, the second version of power has complexity O(log₂ n) as it entails at most 2 log₂ n recursive calls (in practice we omit the base of the logarithm and just write O(log n)).
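The two versions of power are not reproduced in this section, so the sketch below assumes their usual forms: a first version that reduces n by one per call, and a second that halves n whenever it is even. The class name `PowerCalls`, the method names, and the `calls` counter are illustrative assumptions, not the notes' own code.

```java
final class PowerCalls {
    /** Calls made since the counter was last reset (illustrative). */
    static int calls;

    /** Assumed "first version": one recursive call per unit of n. Requires n >= 0. */
    static long powerLinear(long m, int n) {
        calls++;
        if (n == 0) return 1;
        return m * powerLinear(m, n - 1);
    }

    /** Assumed "second version": halves n whenever it is even. Requires n >= 0. */
    static long powerLog(long m, int n) {
        calls++;
        if (n == 0) return 1;
        if (n % 2 == 0) return powerLog(m * m, n / 2);
        return m * powerLog(m, n - 1);
    }

    public static void main(String[] args) {
        calls = 0;
        long a = powerLinear(2, 30);
        System.out.println(a + " using " + calls + " calls"); // 31 calls
        calls = 0;
        long b = powerLog(2, 30);
        System.out.println(b + " using " + calls + " calls"); // 9 calls
    }
}
```

In the second version an odd n becomes even after one call and an even n is then halved, so n is at least halved every two calls; that is where the bound of about 2 log₂ n recursive calls comes from.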
