formato Word

advertisement

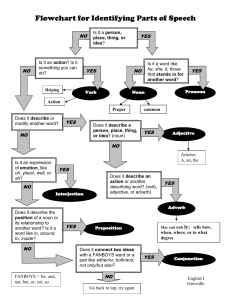

3 - Syntax: How to Put It Grammar, Efficiency And Recovering Unknown Words We will now take a closer look at the NLP way of analyzing texts. Any communication or speech act is built from seven distinct processes: intention, generation, synthesis, and perception, analysis, disambiguation and incorporation. Of these, the first three take place in the speaker or sender, and the last four happen in the hearer's mind. In this context, only generation, analysis and disambiguation are of interest to us. During generation, the speaker makes a choice of words or symbols appropriate to what he wants to convey to the hearer. Analysis means that the perceived string is being processed by the hearer in order to extract the possible meanings. This consists of both syntactic interpretation (also called parsing) and semantic interpretation, taking into account the words' meaning as well as their meaning in the current situation. The result of the analysis of a syntactically correct sentence is something equivalent to a parse tree(words connected to phrases). Disambiguation, finally, picks out the meaning that has most likely been intended by the sender, as some syntactically correct constructs allow for more than one semantic interpretation. You cannot know exactly what the sender wanted to express without having direct access to his knowledge. On this page: 3.1 - The Grammar of Formal Languages | 3.2 - Parsing| The Grammar of Formal Languages Certain rules apply to the structure of a message (a series of symbols with a special meaning). Of course, the message must be formulated in a language common to both the sender and the hearer; this can be either a formal - invented - or natural language - like English, German or Chinese. Natural languages can be partly represented by special formal languages. The basic parts of such a language are terminal symbols - symbols (tokens) that are final, i.e. words in natural languages. These terminal symbols form phrases parts of speech that stand for certain grammatical categories like nouns, verbs etc. Noun phrases, for example, describe nouns in detail - "the red herring", "the one I saw" etc., while verb phrases express action, behavior or state - "is dead", "reads quickly" and so on. We will refer to noun phrases by NP and to verb phrases by VP. These and other categories all combine to create a complete sentence S. These groups are called nonterminal symbols. In this context a language is a set of strings composed from terminal symbols according to a series of rules, the grammar. The terminal symbols (the words) must be present in a lexicon - a list that is subdivided into sections for nouns, verbs, adjectives etc. that includes all allowed words for this language. The grammar itself provides the framework for building sentences from phrases which in turn are built from terminal symbols. The single grammar entries are called rewrite rules because they substitute a part of the first string to obtain another. They have a similar form to this sample rule S - NP VP which expresses the fact that to build a full-fledged sentence S you need a noun phrase NP and a verb phrase VP (or vice versa: you can combine a NP and a VP to obtain a S). Of course there are analogous rewrite rules for NP, VP and all other phrase types. There may also be several rewrite rules with the same left-hand side symbol; e.g. in addition to the one above there might exist a S - NP VP CON NP VP, where CON stands for a conjunction. This rule could also be expressed as S - S CON S, as any occurrence of S can be replaced by NP VP according to our first rule. Parsing and Efficency Supposing that the perception work is done perfectly by the machine, we have to analyze what the string means and how it can be used. Effectively, we have to do the reverse job of generation during the analysis. This delinearization of the input string is called parsing - finding out which tokens represent which phrase and reconstructing the generation path that led to the perceived sentence. Because of the recursive structure of the rewrite rules, a hierarchical structure would be appropriate - thus a tree called parse tree is used in which the leaves, inner nodes and links represent tokens, phrases of any kind, and applied rewrite rules, respectively. The left example shows the parse tree for the sentence "The horse grazes." Six different rules were applied: 1. S - NP VP 2. NP - DET NOUN 3. VP - VERB 4. DET - "the" 5. NOUN - "horse" 6. VERB - "grazes" How are multiple legal interpretations to be handled? As for now, we will simply assume that the amount of ambiguous phrases is small enough to allow multiple parse trees which are stored parallelly. This restriction is important because of the influence of the ambiguities; each one is leading to exponential growth of the number of possible successive trees. A very intuitive version of an algorithm to recover the grammatic structure 3.2.1 A simple Parser from the linear word string would be the following: Iterating over all symbols in the string, the algorithm searches the right-hand sides of all rules for possible matching subsequences, replaces the matched symbols with the left-hand side of the applicable rule as a node and appends the substituted symbols as its children. The string in this algorithm is also called a parse forest. For our horseexample the parse trace would look like this: forest matched subsequence... of rule The horse grazes. "The" DET - the DET horse grazes. "horse" NOUN - horse DET NOUN grazes. DET NOUN DET NOUN - NP NP grazes. "grazes" VERB - grazes NP VERB VERB VP - VERB NP VP NP VP S - NP VP S This returns us the above parse tree for the sentence. However, this method can easily be proven too simple for even slightly more sophisticated grammars. Consider a phrase like "The Chinese signs are manifold." The algorithm from above might produce the following trace (of course when supposing that the needed lexicon upgrade was made): forest matched subsequence... of rule The Chinese signs are manifold. "The" DET - the DET Chinese signs are manifold. "Chinese" NOUN Chinese DET NOUN signs are manifold. DET NOUN DET NOUN NP NP signs are manifold. "signs" VERB - signs NP VERB are manifold. VERB VP - VERB NP VP are manifold. NP VP S - NP VP S are manifold. Obviously, this is not a valid parse, because you cannot append "are manifold" to a complete sentence in a grammatically correct way. This is a situation where the parser has committed an error and has to go back (backtrack) in order to undo this and try another possible parse. But more dramatic examples are easy to formulate Natural Language Processing | Project of Multimedia Systems | EECS 579 | update: 22/12/2000 Daniele Quercia