thesis-hes

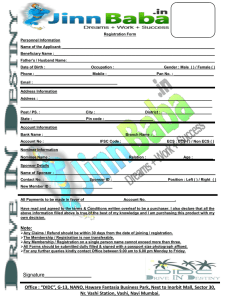
