B227_L5

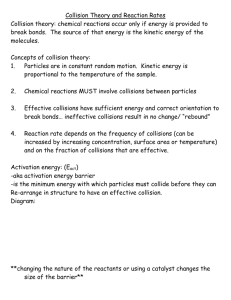

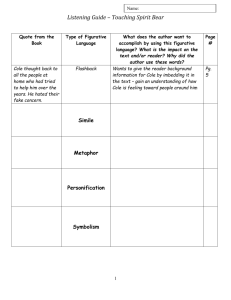

Medium Access Sub-Layer (MAC)

* The MAC sub-layer is the lower portion (protocol stackwise) of the Data-Link Layer, just above the Physical Layer.

[Diagram: Data Link Layer / Medium Access Sub-Layer / Physical Layer]

* Used specifically for broadcast (multi-access/random-access) channels used in LANs.
* LANs predominantly use multi-access channels.

B227 Data Communications Lecture 5-1 Peter Cole 2001

Channel Allocation

* Telephone lines use FDM, where the available bandwidth is chopped up into discrete sub-bands (channels), one for each user.
* Problem: if the line is chopped up into 100 channels and only five users are busy, then 95% of the bandwidth is wasted.
* Static channel allocation methods do not work well with bursty traffic, so multi-user single-channel systems become attractive.

Dynamic Channel Allocation

Five assumptions are made in this area:

1. Station Model: each station (host) is independent of the others, i.e. no coordination exists between hosts.
2. Single Channel Assumption: a single channel is used for both transmitting and receiving (unlike the Distributed Queue Dual Bus standard for MANs, which has a bus for transmitting and a bus for receiving, or a token ring, where transmission is strictly controlled by a rota).
3. Collision Assumption: if any part of two signals occupies the wire at the same time, a collision has occurred. The only possible errors are those caused by collisions, and all stations are capable of detecting collisions.
4. Time Assumptions:
   a) Continuous Time: there is no master clock and transmission can begin at any time.
   b) Slotted Time: time is divided into discrete portions (slots). Frame transmission begins at the beginning of a slot. A slot can carry three different loads:
      0 frames = idle
      1 frame = successful transmission
      2 or more frames = collision
5. Carrier Sensing: whether a host is able to sense if the line is busy or free before it transmits.
   Two types:
   a) Can sense carrier
   b) Can’t sense carrier

Assumptions 4a and 4b are mutually exclusive, as are conditions 5a and 5b.

* Any system in which a host runs the risk of its transmissions colliding with a transmission from another host in the same system is known as a contention system.
* New problem: with a common channel between all hosts on a broadcast LAN, the important issue is who gets to use the channel.
* We require multiple access protocols to assist in solving this problem.

Aloha

* Originally for ground-based radio communication systems (early 1970s).
* Uses fixed-size frames.
* It operates on assumptions 1, 2, 3, 4a and 5b, i.e. it meets all the common channel assumptions but cannot sense the carrier, and transmission begins at any time.
* Each frame is said to be vulnerable until it has been received by all hosts in the system.
* If propagation is slow, e.g. satellite communication, then the chances of a collision occurring are much greater, because the frame remains vulnerable for the whole of this period.

Slotted Aloha

The problem with the Pure Aloha scheme is that at best a channel utilisation rate of 18% can be obtained.
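The 18% figure can be checked numerically. The sketch below assumes the standard textbook model, which these notes do not spell out: frame attempts form a Poisson process with a mean of G attempts per frame time, giving a pure Aloha throughput of S = G e^(-2G). The function name and the coarse grid search are illustrative choices only.

```python
import math


def pure_aloha_throughput(g: float) -> float:
    """Throughput S (frames per frame time) at offered load g.

    Assumes Poisson-distributed attempts: a frame survives only if no other
    frame starts during its vulnerable period of two frame times.
    """
    return g * math.exp(-2.0 * g)


# Coarse grid search over offered loads 0.00 .. 2.00 to locate the peak.
loads = [i / 100 for i in range(201)]
best_load = max(loads, key=pure_aloha_throughput)
best_throughput = pure_aloha_throughput(best_load)

print(f"peak at G = {best_load:.2f}, S = {best_throughput:.3f}")
# prints: peak at G = 0.50, S = 0.184
```

Under this model the peak value is 1/(2e), roughly 18.4%, reached when stations offer half a frame per frame time on average.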
(See the mathematical description in Tanenbaum, pp. 247-9.)

* Slotted Aloha, on the other hand, doubles the utilisation rate by changing to a timed (synchronous) system (assumption 4b).
* Here one station acts to synchronise the system in some fashion.
* Hosts cannot transmit on the press of carriage return but must wait for the next time slot.
* Collisions still occur; in this situation both colliding parties back off for a random time period and retransmit at the beginning of a new time slot.
* At best: 37% utilisation of the channel (successes), 26% collisions, balance idle.

CSMA Protocols

Carrier Sense Multiple Access

* Here we work on the principle that a vacant channel can be detected.
* This is different from collision detection.
* Basically there is a dichotomy in the methods used under the CSMA banner.

Persistent

* If the channel is busy, the sender continually checks to see when the channel becomes idle.
* As soon as the channel is idle it transmits with a probability of 1, hence the name 1-Persistent CSMA.
* With long propagation delays, collisions become more frequent.

Non-Persistent

* If the channel is busy, the sender waits a random period of time before sensing the carrier again.
* As soon as the channel is free, the sender transmits a frame.

P-Persistent CSMA

* Slotted channels only.
* When the channel is idle, the sender transmits with probability p and defers to the next slot with probability 1 - p, so it may wait through more than one idle slot before sending. This lowers the probability that several waiting stations transmit at once.
* If the channel is busy, the sender waits a random period of time before sensing the carrier again; otherwise it transmits as described above.

CSMA/CD (CSMA with Collision Detection)

* CSMA networks with Collision Detection (CSMA/CD) reduce the cost of collisions by aborting transmission whenever a collision is detected.
* If a collision occurs, the detecting host may send out a jamming frame to indicate to all hosts that a collision has been detected.
* With ALOHA, a collision wastes an entire frame transmission time.
* Reducing the time to detect and recover from collisions will improve overall efficiency.
* The contention period depends on the geographic distance a CSMA/CD network spans, i.e. the longer the physical distance, the longer the contention period.
* Another approach to reducing collisions is to prevent them from happening in the first place.

Basic Bit-Map Method

1. Assume N stations numbered 1-N, and a contention period of N slots (bits).
2. Each station has one slot time during the contention period (numbered 1-N).
3. Each station J sends a 1-bit during its slot time if it wants to transmit a frame.
4. Every station sees all the 1-bits transmitted during the contention period, so each station knows which stations want to transmit.
5. After the contention period, each station that asserted its desire to transmit sends its frame, in numerical order, so the frames do not collide.

Disadvantages:

* Higher numbered stations get better service than those with lower numbers. A higher numbered station has less time to wait (on average) before its next bit time in the current or next contention period.
* At light loads, a station must wait N bit times before it can begin transmission.

IEEE 802 protocols

The IEEE has produced a set of LAN protocols known as the IEEE 802 protocols. These protocols have also been adopted by ANSI and ISO:

* 802.2: Logical link standard (device driver interface).
* 802.3: CSMA/CD.
* 802.4: Token bus.
* 802.5: Token ring.

802.3 - ETHERNET

The 802.3 protocol is described as follows.

1. It is a 1-persistent CSMA/CD LAN. A station begins transmitting immediately when the channel is idle.
2. It originally used coaxial cable (there are other varieties).
3. Its development history is:
   (a) Started with ALOHA.
   (b) Continued at Xerox, where Metcalfe & Boggs produced a 3 Mbps LAN version.
   (c) Xerox, DEC, and Intel standardised a 10 Mbps version, which started selling in the early 1980s.
   (d) IEEE standardised a 10 Mbps version (with slight differences from the Xerox standard).
4. The maximum allowable cable segment is 500 meters on the original baseband (Thicknet) system, and up to 2000 meters for optic fibre.
5. Segments can be joined by repeaters. Repeaters are devices that regenerate or "amplify" bit signals (not frames); a single repeater can join multiple segments.
6. The maximum distance between two stations is 2.5 km, with a maximum of four repeaters along any path (Thicknet). Why? To ensure that collisions are detected properly. Longer lengths increase the contention interval.

Binary Exponential Backoff Algorithm

802.3 (Ethernet) LANs use a binary exponential backoff algorithm to reduce collisions:

1. It is 1-persistent. When a station has a frame to send, and the channel is idle, transmission proceeds immediately.
2. When a collision occurs, the sender generates a noise burst (the jamming frame) to ensure that all stations recognise the condition and abort transmissions.
3. After the collision, each station waits 0 or 1 contention periods before attempting transmission again, with a 50/50 probability of each. The idea is that if two stations collide, one will transmit in the first interval, while the other one will wait until the second interval. Once the second interval begins, however, the station will sense that the channel is already busy and will not transmit.
4. If another collision occurs, each station randomly waits 0, 1, 2, or 3 slot times before attempting transmission again.
5. In general, wait between 0 and 2^r - 1 slot times, where r is the number of collisions encountered.
6. Finally, freeze the interval at 1023 slot times after 10 attempts, and give up altogether after 16 attempts.

Result?
Low delay at light loads, yet we avoid collisions under heavy loads.

Also, note that there are no acknowledgments; a sender has no way of knowing that a frame was successfully delivered. It is up to the receiver to check the incoming frame's checksum and, if there is an error in the frame, request retransmission from the sender.

Fact or Myth: Ethernet (802.3) performs poorly under heavy loads.

That is, under heavy loads, do excessive collisions negatively impact performance?

The following performance numbers were taken from an experimental configuration of an actual Ethernet. 25 hosts were transmitting packets as fast as they could (i.e. the offered load >> 1).

Major results:

* The average transmission delay increases linearly with increasing numbers of transmitters. This contradicts the myth that delay increases substantially as offered load increases.
* 25 hosts sending maximum sized packets obtain over 97% of the bandwidth. That is, less than 3% of the bandwidth is lost due to collisions.
* 25 hosts sending minimum sized packets (e.g. 64 bytes) obtain about 85% of available bandwidth. While not great, losing 15% of the bandwidth to collisions does not make the network "unusable".
* For bi-modal distributions (e.g. mixtures of both small and large packets) utilisation increases. One large packet for every 8 small packets results in a utilisation of about 92.5%.

Conclusion: Ethernets work well in practice.

How then does one explain reports of poor performance in actual Ethernet LANs?

Poor hardware design. The first generation of Ethernet hardware was designed at a time when machines were unable to send or receive packets at fast rates. Today, a single workstation generating packets can completely saturate an Ethernet. However, many Ethernet cards cannot themselves process packets at such high rates.
They have limited internal buffering, and frequently cannot handle more than 2 or 3 back-to-back packets without filling an internal buffer and discarding the remaining packets. Thus, the problems had to do not with the Ethernet per se, but with the hardware implementation.
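Returning to the binary exponential backoff rule for 802.3 described earlier, the waiting-time choice can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the behaviour of any particular Ethernet card: the function and constant names are hypothetical, the limits of 10 and 16 come from these notes, and waiting times are expressed in whole slot times.

```python
import random

MAX_ATTEMPTS = 16      # from the notes: give up altogether after 16 attempts
BACKOFF_CEILING = 10   # from the notes: interval frozen at 2**10 - 1 = 1023 slots


def max_backoff(collisions: int) -> int:
    """Largest number of slot times a station may wait after `collisions`."""
    return 2 ** min(collisions, BACKOFF_CEILING) - 1


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Randomly pick the number of slot times to wait before retrying."""
    if collisions < 1:
        raise ValueError("collisions must be at least 1")
    if collisions >= MAX_ATTEMPTS:
        raise RuntimeError("too many collisions: the frame is abandoned")
    # randint is inclusive at both ends, matching the range 0 .. 2^r - 1.
    return rng.randint(0, max_backoff(collisions))


# Show how the waiting interval grows and then freezes.
for r in (1, 2, 3, 10, 15):
    print(f"after {r} collision(s): wait 0..{max_backoff(r)} slots")
```

The upper bound doubles with each collision (1, 3, 7, ...) until it is frozen at 1023, which is how the scheme keeps delay low at light loads while spreading retries out under heavy loads.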