

COMPUTER TECHNOLOGY INSTITUTE

1999

Semantic Abstractions in the Multimedia Domain

Elina Megalou(1,2) & Thanasis Hadzilacos(1)

1 Computer Technology Institute, and

2 Dept. of Computer Engineering and Informatics, University of Patras, Greece

Kolokotroni 3, GR-26221, Patras, Greece

e-mail: {megalou, thh}@cti.gr

http://www.cti.gr/RD3/

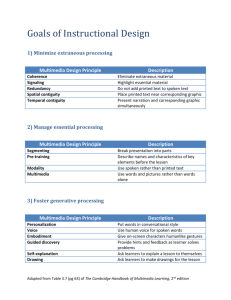

Abstract -- Information searching by exactly matching content is traditionally a strong point of machine searching. It is not, however, how human memory works, and it is rarely satisfactory for advanced retrieval tasks in any domain, and in multimedia in particular, where the presentational aspects of multimedia applications can be as important as their semantic information content. This paper develops a combined abstraction of their conceptual and presentational characteristics. On the one hand it leads to their conceptual structure (the classic semantics of the real world, modeled by entities, relationships and attributes). On the other it leads to their presentational structure (media type, logical structure, temporal synchronization, spatial on-screen “synchronization” and interactive behavior). Multimedia applications are construed as consisting of “Presentational Units” (PUs). These are either elementary (a media object with play duration and screen position) or composite (recursive structures of PUs in the temporal, spatial and logical dimensions). The fundamental concept introduced is that of Semantic Multimedia Abstractions (SMAs): qualitative abstract descriptions of multimedia applications in terms of their conceptual and presentational properties, at an adjustable level of abstraction. SMAs, which can be viewed as metadata, form an abstract space to be queried. The work is completed by four further elements. These are a detailed study of the possible abstractions (from multimedia applications to SMAs, and from SMA to SMA), a definition and query language for Semantic Multimedia Abstractions (SMA-L), the corresponding SMA model (equivalent to extended OMT), and an implementation of a system capable of wrapping the presentational structure of XML-based documents. The contribution of this work lies at the classically fruitful boundary between AI, software engineering and database research.

Index Terms -- Multimedia data model, semantic modeling, abstraction, semantic multimedia abstraction, spatio-temporal retrieval, multimedia query language.

1. Introduction

Looking for a piece of information among many, that is, searching, is one of the basic tasks in computer science. The traditional approach to searching makes two assumptions. First, that we know exactly what we are looking for. Second, that we can organize the data where we are looking, the so-called search space. These assumptions do not hold in all searching situations. For instance, when we as humans try to recall information, we may have an approximate, fuzzy or incomplete description of it, at a non-uniform level of detail. As for the “search space”, if it does have an organization, that organization escapes us. This paper is about looking up multimedia applications. On CDs or on the Web they form a huge, distributed search space, loosely organized if at all, with a wealth of information.

“Get me multimedia applications on music teaching for string instruments; I recall seeing one with audio and video (or was it a series of slides?) at the same time; half of the remaining screen was filled with music score and the rest with textual instructions or explanations; it included Paganini’s Moto perpetuo.” This is the type of query we deal with. Why? Because cognitive science tells us that this is how people remember. There is also a second reason, which was the original motivation of this work: this is how multimedia applications may be specified. Such specifications would be a most suitable search space, whether they are available from the design phase or reconstructed afterwards.

TECHNICAL REPORT No. TR99/09/06

The name of the game is abstraction. It is a fundamental human cognitive process and skill [27], and a basic mathematical and computer science tool for problem solving in general [17], [22] and for searching in particular [21]. Abstraction transforms the target objects by shedding some of their properties, namely those deemed irrelevant to the task at hand. Such a transformation may be so drastic that it changes the domain of discourse. The step from land parcels to rectilinear two-dimensional figures is the classic abstraction better known as Euclidean geometry. Properties such as color, weight and substance become irrelevant, and objects of the real world are mapped into their equivalence classes. All this is discussed as relevant background research in Section 3.3.1.

Three sources make it clear that we need to abstract on the conceptual and presentational characteristics of multimedia applications at the same time. These are the example query, our work on the specification of multimedia titles in series [16], [37], [38], [53], and a wealth of research over the past few years [2], [7], [10], [12], [19], [29], [39], [55]. (Multimedia titles in series is a software engineering methodology developed to facilitate and automate the generation of classes of similar multimedia applications.) The conceptual structure can be neatly captured with classic semantic models. Entities, relationships and attributes are the basic tools, augmented with higher-level structuring concepts such as aggregation, grouping and classification; both theory and tools for these are reasonably well developed [46], [9], [42]. The presentational structure of multimedia applications needs more, although a lot has been done [12], [19], [33], [34], [36], [54]. Our analysis (Sections 2.2.1 and 2.2.2) identifies its main aspects as media type, logical structure, temporal synchronization, spatial (on-screen) “synchronization” and interactive behavior.

Our contribution to this analysis is the concept of the Presentational Unit (PU). The PU is a structurally scalable unit and can take one of two forms. An elementary PU is just a media object positioned in time and place within a multimedia presentation, i.e. augmented with playout duration and screen position. A composite PU consists recursively of simpler PUs combined logically, synchronized temporally or put together on the screen. This is detailed in Section 2.2.3.

The main contribution of the paper is the analysis of abstractions in the multimedia domain. Semantic Multimedia Abstractions (SMAs) are qualitative abstract descriptions of a multimedia application in terms of its conceptual and presentational properties, at an adjustable level of abstraction (Section 2.3). For instance, a multimedia application needs absolute temporal durations for its media objects, while an SMA would only retain their relative temporal relationships. SMAs are metadata, and they form an abstract space well suited to searching. The base abstraction leads from representations of multimedia applications (in XML, for example) to SMAs. From there, hierarchies of abstract spaces can be created using SMA-to-SMA transformations. These move up the abstraction level by relaxing constraints and by wrapping temporal, spatial or logical structures. The admissible types of abstractions (with minor dependencies on the language used) are studied in detail in Sections 3.3 and 3.4. The SMA definition and query language is given in BNF in Table 1.

Although it is not the subject of this paper, a system has actually been developed to support the SMA model. The SMA model is itself equivalent to OMT, suitably extended with compatible definitions of temporal and spatial aggregation and grouping. The system has been used to exemplify our ideas with XML as the base document language, and Section 3.5 concludes the paper with an illustrative example.

Some of the most interesting research takes place at the boundaries of traditional computer science areas. This work is a contribution at one such classically fertile boundary, between databases, artificial intelligence and software engineering [9].

2. Semantic Abstractions for specifying, designing and constraining multimedia applications

2.1 Automating the generation of multimedia applications in series: Motivation and the MULTIS systems

We started studying abstraction in the multimedia domain while approaching a software engineering problem. The problem was how to specify a set of thematically and structurally “similar” multimedia applications (a multimedia series), in order to design and build a special-purpose authoring environment that facilitates the development of each application in the series. To this end, a production methodology called MULTIS (Multimedia Titles In Series) [16] was proposed, consisting of three steps:

1. Domain knowledge providers and multimedia designers identify the desired common properties of all multimedia applications in the series. In terms of these properties, they produce a generic specification of the series, called the “Model Title Specification”.

2. Based on the Model Title Specification, computer engineers build a special-purpose authoring system, called a MULTIS system, that embodies these properties in its own structure.

3. Using the MULTIS system, end users “fill in” the particular properties of each personalized multimedia application and request the automatic generation of the application’s source code.

Hence, the generic specification of a multimedia series is a fundamental issue in the MULTIS approach. The Model Title Specification captures both the knowledge of the application domain (concepts and relations) and the presentational and behavioral properties of the multimedia applications. It does so in a way that is generic enough to represent the whole series, yet focused enough to result in an easy-to-use MULTIS system.

Figure 1: The MULTIS Layered Architecture (layers: Code Generator (Presentation Layer); Title Editor (Control Layer); Application Database (Application Layer); Multimedia Database (Data Layer); Model Title Specification (Specifications Layer)).

For each multimedia series, the corresponding MULTIS system has two parts. The first is a custom multimedia database for organizing and storing the domain-specific multimedia data. The second is an editing environment for the definition (instantiation), storage and automatic generation of each multimedia application in the series. From the database point of view, MULTIS systems are special-purpose database systems enhanced with code generation functionality. The Model Title Specification guides the development of two database schemata: the schema of the multimedia database and that of the application database. It also determines the design of an application-specific user interface for editing and instantiating each personalized multimedia application. Both databases are modeled with object-oriented models. Each multimedia application forms a network of objects that reflects its specific structure, behavior and data, and it is stored as an independent database.

In a MULTIS system, the knowledge about the presentational and structural characteristics of the applications in the series is embedded in each object class and used in the code generation process. Each object “knows how to present itself”: it produces its own code and propagates a pertinent message to the appropriate objects of the network. Figure 1 depicts the MULTIS layered architecture. Each layer communicates with the adjacent ones but operates independently, which separates the multimedia data from their presentation.
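The idea that each object “knows how to present itself” can be sketched as a recursive traversal of the object network. The Python sketch below is hypothetical throughout. The class `PresentationObject`, its `generate` method and the one-line-per-object pseudo-script it emits are illustrations only; they are not the actual MULTIS code generator or its target authoring language.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PresentationObject:
    """Hypothetical node of a MULTIS-style object network."""
    name: str
    kind: str  # illustrative type label, e.g. "scene", "video", "image"
    children: List["PresentationObject"] = field(default_factory=list)

    def generate(self, depth: int = 0) -> List[str]:
        # The object first emits its own (pseudo-)code line ...
        lines = [f"{'  ' * depth}{self.kind} {self.name}"]
        # ... then propagates the generation request to the objects it is linked to.
        for child in self.children:
            lines.extend(child.generate(depth + 1))
        return lines


intro = PresentationObject("intro", "scene", [
    PresentationObject("company_video", "video"),
    PresentationObject("org_chart", "image"),
])
print("\n".join(intro.generate()))
```

Because generation is driven by the objects rather than by a central generator, adding a new object class only requires giving it its own `generate` behavior.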

The MULTIS approach was validated in practice within the EEC-funded project “Valmmeth” [53], whose aim was to demonstrate the feasibility and benefits of publishing series of multimedia applications with this technology. Domain experts and multimedia designers provided the Model Title Specifications of four multimedia series: foreign language training applications, business presentations, point-of-information systems (POISs) and medical training applications. Based on these, the corresponding four MULTIS systems were developed and tested at pilot sites in Greece, Belgium and the UK.

2.2 Identifying the properties of multimedia applications in a semantic abstract representation

2.2.1 The specification of a multimedia series as an abstraction process

At the specification and design stage of a software system, the goal is to capture the desired “functionality” while ignoring implementation details. In the MULTIS example, the Model Title Specification specifies the functionality of a multimedia series. It captures only the desired common properties of the applications that the corresponding MULTIS system is able to produce, and ignores the properties in which these applications are allowed to differ. In other words, the Model Title Specification constrains the applications to be generated to those considered “identical” with respect to certain properties.

Hence, specifying a multimedia series is an abstraction process. The particular abstraction goal determines both the properties ignored at this stage and the choice of the “right abstraction level”. Note that the “functionality” of multimedia applications pertains to their conceptual and presentational structure and behavior. This includes the real-world objects and relationships involved, the spatio-temporal structure and synchronization during presentation, the control flow, and the behavior in response to various events.

For instance, suppose the temporal aspects of multimedia applications are of particular importance and should be specified in detail. The abstraction process may then ignore details such as the layout of the multimedia content and the text formatting, and keep only the properties relevant to the temporal dimension.

In the MULTIS example, the choice of the “right abstraction level” is guided mainly by the desired diversity (or degree of similarity) of the produced applications. It is a trade-off between the complexity of a MULTIS system and the range and diversity of the applications that the system can produce. Too high an abstraction level leads to overly open MULTIS systems, which tend to resemble general-purpose authoring tools. Conversely, the temporal properties of a multimedia scene may be specified using exact time instances and time distances from a specific time point. For example, a video starts at time 5 from the beginning of the presentation, and at time 3 of its playout an image appears for a duration of 5. Then only scenes that conform to these strict temporal constraints are considered “valid”. Hence, there is no diversity of scenes in terms of their temporal synchronization.

At a higher abstraction level, similar specifications could be given using temporal relations instead of time instances. This allows several multimedia scenes to “fall under” the same temporal constraints, e.g. “a video starts some time after the beginning of the scene while two images appear sequentially and in parallel with the video”.
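The difference between the two abstraction levels can be made concrete with a minimal Python sketch, which is not part of the MULTIS or SMA systems. It derives Allen's interval relations [3] from absolute time intervals. The concrete numbers are hypothetical, including the video's end time of 20, since the example above fixes only the video start, the image offset and the image duration. Two scenes with different absolute timings turn out to share the same qualitative description.

```python
from typing import Dict, Tuple

Interval = Tuple[float, float]  # (start, end), assuming start < end


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the one of Allen's 13 relations that holds from interval a to interval b."""
    (a1, a2), (b1, b2) = a, b
    if a2 < b1:
        return "before"
    if a2 == b1:
        return "meets"
    if b2 < a1:
        return "after"
    if b2 == a1:
        return "met-by"
    if (a1, a2) == (b1, b2):
        return "equals"
    if a1 == b1:
        return "starts" if a2 < b2 else "started-by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished-by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    # Remaining case: the intervals partially overlap.
    return "overlaps" if a1 < b1 else "overlapped-by"


def qualitative(scene: Dict[str, Interval]) -> Dict[Tuple[str, str], str]:
    """Abstract absolute timings away, keeping only pairwise Allen relations."""
    names = sorted(scene)
    return {(x, y): allen_relation(scene[x], scene[y])
            for i, x in enumerate(names) for y in names[i + 1:]}


# Exact specification: the video starts at 5; the image appears at time 3 of the video, for 5.
scene_a = {"video": (5, 20), "image": (8, 13)}
# A different scene with other absolute timings.
scene_b = {"video": (2, 40), "image": (10, 25)}

print(qualitative(scene_a))  # {('image', 'video'): 'during'}
assert qualitative(scene_a) == qualitative(scene_b)
```

Both scenes satisfy “the image appears during the video”, which is the kind of constraint a higher abstraction level retains.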

2.2.2 The Model Title Specification as a Semantic Abstract Description of a set of multimedia applications

In the MULTIS approach the Model Title Specification captures the following:

a. The conceptual structure of multimedia applications (application layer). It consists of the “real-world” objects of the application domain, together with their attributes and relationships. These are objects that exist in the real, outside world; we call them conceptual structure objects or conceptual units. For instance, in a multimedia series of tourist guides, a city, a hotel or a museum are conceptual structure objects, and a relationship could be “each city has one or more hotels”.

b. The presentational structure of multimedia applications (presentation layer). It consists of the presentational objects, together with their attributes and relationships. These are objects that appear “on the screen” during the execution of the multimedia application; we call them presentational units. The data types of multimedia content (called media objects) by which each real-world object “is presented” are the basic objects for building presentational units. The presentational structure reflects how the conceptual structure is mapped onto a multimedia application. For instance, suppose the conceptual structure includes that “a company consists of a number of departments”. One possible presentational structure is “a company is presented by a screen whose background is an organizational chart with a number of departments; each department is presented by one introductory screen, accessible through active hotspots on the organizational chart”. Note that the same conceptual structure can be mapped onto many different presentational structures. The mapping depends on how the multimedia content of the conceptual objects is assembled and structured in the application.

2.2.3 On the Presentational Structure of Multimedia Applications

To define the properties of multimedia applications captured by the presentational structure, we first define the concept of the Presentational Unit (PU).

Definition 2.1: An elementary Presentational Unit (PU) is a triplet $pu = (m, \tau, p)$, where:

$m$ is a media object;

$\tau$ is a time interval, called the “presentational duration” of $pu$ (possibly indefinite, e.g. for a web page that stays on the screen until an outside event occurs);

$p$ is a region on the screen, called the “presentational position” of $pu$ (possibly indefinite, e.g. for an object of type “audio”).

The “presentational duration” of an elementary PU pu, denoted pu.τ, is the temporal interval during which pu is active in an execution of a multimedia application (e.g. while it appears in a presentation). A temporal interval is defined by two end points, or time instances [3], [34].

The “presentational position” of an elementary PU pu, denoted pu.p, is the portion of the screen that pu occupies during an execution of a multimedia application. The domain of presentational position is the set of 2D polygons [13].
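Definition 2.1 maps naturally onto a small record type. The hypothetical Python sketch below makes two representation choices that are assumptions, not part of the definition. An indefinite presentational duration has a known start and an end point of `math.inf`, and an indefinite presentational position is `None`. The `MediaObject` fields are likewise illustrative.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class MediaObject:
    kind: str    # media type, e.g. "image", "video", "audio"
    source: str  # hypothetical locator of the content


@dataclass(frozen=True)
class ElementaryPU:
    """pu = (m, tau, p) as in Definition 2.1."""
    m: MediaObject
    tau: Tuple[float, float]        # presentational duration; end may be math.inf
    p: Optional[Tuple[Point, ...]]  # presentational position as polygon vertices; None if indefinite

    def __post_init__(self) -> None:
        start, end = self.tau
        if not start < end:
            raise ValueError("presentational duration needs start < end")
        if self.p is not None and len(self.p) < 3:
            raise ValueError("a 2D polygon needs at least three vertices")


screen = ((0, 0), (800, 0), (800, 600), (0, 600))
# A web page that stays on the screen until an outside event occurs.
page = ElementaryPU(MediaObject("web page", "index.xml"), (0.0, math.inf), screen)
# An audio object has no position on the screen.
music = ElementaryPU(MediaObject("audio", "theme.wav"), (0.0, 30.0), None)
```

Making the record immutable (`frozen=True`) keeps a PU hashable, so sets of PUs or positions can be formed later.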

Definition 2.2: A composite PU is defined inductively by combining PUs in three orthogonal dimensions or views: logically, temporally and spatially. The presentational duration of a composite PU is a set of temporal intervals representing the presentational durations of its constituent PUs. The presentational position of a composite PU is a set of screen regions representing the presentational positions of its constituent PUs.
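Following Definition 2.2, the presentational duration and position of a composite PU can be collected recursively from its constituents. In this hypothetical Python sketch, intervals are `(start, end)` tuples and regions are tuples of polygon vertices. The recursion is flattened down to the elementary constituents, which is our assumption about how nested composites contribute. The `view` label (logical, temporal or spatial) is recorded but not interpreted.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Tuple, Union

Interval = Tuple[float, float]             # (start, end)
Region = Tuple[Tuple[float, float], ...]   # polygon vertices


@dataclass(frozen=True)
class Elementary:
    media_kind: str
    tau: Interval
    p: Optional[Region] = None             # None: indefinite position (e.g. audio)


@dataclass
class Composite:
    view: str                              # "logical", "temporal" or "spatial"; recorded only
    parts: List[Union["Composite", Elementary]] = field(default_factory=list)

    def durations(self) -> FrozenSet[Interval]:
        """Presentational duration: the set of intervals of all elementary constituents."""
        result = set()
        for part in self.parts:
            if isinstance(part, Elementary):
                result.add(part.tau)
            else:
                result |= part.durations()
        return frozenset(result)

    def positions(self) -> FrozenSet[Region]:
        """Presentational position: the set of regions of all positioned constituents."""
        result = set()
        for part in self.parts:
            if isinstance(part, Elementary):
                if part.p is not None:
                    result.add(part.p)
            else:
                result |= part.positions()
        return frozenset(result)


box = ((0, 0), (100, 0), (100, 100), (0, 100))
slide_show = Composite("temporal", [Elementary("image", (0, 5), box),
                                    Elementary("image", (5, 10), box)])
scene = Composite("spatial", [slide_show, Elementary("audio", (0, 10))])
print(sorted(scene.durations()))  # [(0, 5), (0, 10), (5, 10)]
```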

The following properties are captured by the Presentational Structure of a PU:

i. The types of the constituent PUs (media objects and composite PUs), disregarding the specific content; e.g. two pictures (PUs) have the same type.

ii. The logical structure of the PU (including constituent PUs), disregarding specific instances; e.g. two slide shows, one of 10 slides and the other of 25, have the same logical structure.

iii. The temporal synchronization of the PU (including constituent PUs). Specific durations are disregarded, and only the qualitative temporal information that is significant and relevant to the specific abstraction goal is considered; e.g. two pieces of synchronized audio-video (PUs), one of duration 5 and the other of duration 10, have the same temporal synchronization. We will refer to this as the “Temporal Structure” of a PU.

iv. The spatial synchronization (relative on-screen positioning) of the PU (including constituent PUs). Details such as specific sizes are disregarded, and only the qualitative spatial information that is significant and relevant to the specific goal is taken into account; e.g. two pairs of non-overlapping photos (PUs) have the same spatial synchronization. We will refer to this as the “Spatial Structure” of a PU. Note that the spatial synchronization of a PU is meaningful only for visual PUs, e.g. sub-scenes, web pages, and PUs of type image or video.

v. The interactive behavior of the PU (including constituent PUs), disregarding specific events, conditions and actions and considering only their types (classes); e.g. two buttons have the same interactive behavior even if one is activated by the “mouse click” event and the other by the “mouse over” event.
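Properties i and ii can be illustrated by computing a structural signature that keeps media types and composition but drops content and repetition counts. In this hypothetical Python sketch, an elementary PU is represented by its media-type string and a composite PU by a `(kind, children)` tuple. One choice here is our assumption about how to disregard specific instances: runs of identical constituents collapse into a single “one or more” marker, which drops counts but preserves order.

```python
from itertools import groupby
from typing import Tuple, Union

# Hypothetical encoding: "image" for an elementary PU, ("slide show", (...)) for a composite PU.
PU = Union[str, Tuple[str, tuple]]


def logical_signature(pu: PU):
    """Abstract a PU to its logical structure, ignoring content and counts."""
    if isinstance(pu, str):
        return pu  # elementary PU: only the media type survives
    kind, children = pu
    child_sigs = [logical_signature(child) for child in children]
    # Collapse consecutive identical constituents into one "+" (one or more) entry.
    return (kind, tuple((sig, "+") for sig, _ in groupby(child_sigs)))


show_10 = ("slide show", ("image",) * 10)
show_25 = ("slide show", ("image",) * 25)
assert logical_signature(show_10) == logical_signature(show_25)
print(logical_signature(show_25))  # ('slide show', (('image', '+'),))
```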

Dropping or under-specifying one of the axes (logical, temporal, spatial) creates a Presentational View.


Hence, a PU is a structured composition of simpler PUs that is semantically meaningful under a Presentational View. Depending on the Presentational View, a PU can be characterized as a Logical, Temporal or Spatial PU.

Examples: a) Let the specification of a multimedia application for company presentations include that “the application starts with the company introductory video and, while the video plays, various images appear on the screen; when the video finishes, an image of a company organization chart appears”. The video duration (a temporal interval) can be considered to define a PU whose “meaning” is “introduction”. The duration for which the company organization chart stays on the screen defines another PU. The Macromedia Director [35] authoring paradigm is based on temporal PUs: a time frame (a temporal interval) in the score window, or a set of such frames, can be considered a temporal PU. A temporal PU is defined by a temporal interval within which its constituent PUs appear. b) A space-oriented specification of the above PUs may include: “a company introductory scene contains a video and a slide show of images; a second scene contains an organization chart”. The two scenes are two spatial PUs. A web page is another example of a spatial PU. c) A structured web document consisting of a header, one or more author names and a set of paragraphs is an example of a logical PU.

Definition 2.4: The Presentational Structure of a multimedia application consists of the set of its constituent PUs and their relationships during presentation. The relationships among PUs determine the control flow of the application. In many cases, a multimedia application is a single PU.

Examples: a) In the “company presentation” example given above, the introductory screen and the department screens are PUs linked together via active hotspots on the organizational chart. This can be characterized as a link-oriented relationship between PUs. Link-oriented relationships are also used in web-based applications that consist of a number of hyperlinked spatial PUs (web pages). b) A multimedia presentation that “plays” in automatic mode is considered a set of PUs with time-oriented relationships.

2.3 Semantic Multimedia Abstractions (SMAs) and the SMA model

Definition 2.5: A Semantic Multimedia Abstraction (SMA) is a qualitative abstract description of a multimedia application in terms of the properties captured by the conceptual and presentational structure of multimedia applications (defined in Sections 2.2.2 and 2.2.3). We call these the conceptual and presentational properties of multimedia applications at the semantic level.

A number of models for multimedia information management have been developed that address certain aspects of multimedia applications. Most of them focus on individual media (images and video) and follow various modeling approaches. One example is the knowledge-based semantic image model proposed in [10], a four-layered model that uses the hierarchical structure TAH (Type Abstraction Hierarchy) for approximate query answering by image feature and content. Models for multimedia documents address spatio-temporal synchronization and the structuring of multimedia documents. Time intervals and Allen’s temporal relationships [3], together with 2D spatial relationships [13], are extensively used in such models. They serve for modeling the spatio-temporal synchronization of monomedia data and of more complex multimedia structures, and for representing temporal and spatial semantics, e.g. [34], [10], [11], [54]. Language-based models such as SGML [49] and XML [14], as well as object-oriented approaches [29], [48], have also been developed for modeling multimedia documents.

To represent SMAs we need a “model” based on well-established concepts and techniques, able to capture in a uniform way the conceptual and presentational properties of multimedia applications at the semantic level. Al-Khatib et al. [2] review, categorize and compare recent semantic data models for multimedia data at different levels of granularity. According to this categorization, the model for representing SMAs should include features from both compositional and organizational models for multimedia documents. It should also emphasize multimedia databases and provide abstraction constructs for representing higher-level structures. For instance, consider a graph-based model for multimedia applications, where nodes represent multimedia objects (simple or composite) and arcs denote the execution flow. A model for SMAs could then be created by mapping a detailed graph that represents one multimedia application (one instance) onto a less detailed, generic graph. The nodes and arcs of the generic graph represent classes of the objects and relationships of the initial graph.
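The graph-based mapping just described can be sketched as a function that replaces each instance node by its class and keeps one class-level arc for every instance-level arc. This hypothetical Python sketch illustrates only that example; it is not the SMA model, which is introduced next. The node and class names are invented for illustration.

```python
from typing import Dict, Set, Tuple

Edge = Tuple[str, str]


def abstract_graph(node_class: Dict[str, str], edges: Set[Edge]) -> Tuple[Set[str], Set[Edge]]:
    """Map an instance-level execution-flow graph onto a generic class-level graph."""
    class_nodes = set(node_class.values())
    class_edges = {(node_class[u], node_class[v]) for u, v in edges}
    return class_nodes, class_edges


# Two company presentations with different numbers of department screens.
app_1 = ({"intro": "IntroScreen", "sales": "DepartmentScreen", "hr": "DepartmentScreen"},
         {("intro", "sales"), ("intro", "hr")})
app_2 = ({"intro": "IntroScreen", "rnd": "DepartmentScreen"},
         {("intro", "rnd")})

generic_1 = abstract_graph(*app_1)
generic_2 = abstract_graph(*app_2)
assert generic_1 == generic_2  # both abstract to the same generic graph
```

The example shows why such a mapping is an abstraction: applications that differ in the number of instances collapse onto one generic description.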

The proposed model, called the “SMA model”, is based on well-established semantic data models used in database conceptual modeling and knowledge representation [8], [9], [42]. These models provide structural concepts such as entities (objects), relationships and attributes. They also provide four forms of data abstraction for relating concepts [8], [9], [42]:

classification: grouping objects that share common characteristics into a class;

aggregation: treating a collection of component concepts as a single concept;

generalization: extracting a more general concept from a set of category concepts and suppressing the detailed differences;

association: treating a collection of similar member concepts as a single set concept.

Our building tools will be these classic forms of abstraction, extended to the temporal and spatial dimensions to capture the presentational properties of multimedia applications.

2.3.1 Representing conceptual properties of multimedia applications at the semantic level (SMA’s conceptual structure)

To represent the conceptual structure of SMAs, we use the structural concepts (entities, attributes and relationships) and the forms of abstraction (classification, generalization, aggregation, association) provided by semantic data models.

2.3.2 Representing presentational properties of multimedia applications (SMA’s presentational structure)

Semantic data models use two abstraction constructs to allow the recursive formation of complex objects from simpler ones: aggregation and grouping. For the SMA’s presentational structure, such models should encompass three notions:

the standard notion of “consists” for representing the logical structure of PUs (e.g. the PU “map” consists of an image and a number of buttons);

a “temporal consists” for representing the temporal structure of PUs (e.g. a PU “slide show” consists of a temporal sequence of slides);

a “spatial consists” for representing the spatial structure of PUs (e.g. a PU “scene” consists of two disjoint pictures and a text at the bottom of the screen).

Extending these abstraction constructs to the temporal and spatial dimensions defines temporal and spatial aggregation and grouping.

2.3.2.1 Representing Temporal Structure of multimedia applications at the semantic level (SMA’s temporal structure)

a. Temporal Abstraction Constructs

Let $U = \{ pu_i \mid pu_i \in PU,\ 1 \le i \le n \}$ and let $pu_i.\tau$ be the presentational duration of $pu_i$ for $i = 1, \ldots, n$. Let also $R_t(pu.\tau, pu'.\tau)$ denote a temporal relationship $R$ (such as “before” [3]) between pairs of presentational durations.

Temporal Aggregation

Aggregation is “the form of abstraction in which a relationship between component objects is considered as a higher level aggregate object. Every instance of an aggregate object class can be decomposed into instances of the component object classes, which establishes a part-of relationship between objects” [9]. E.g. a car is an aggregate of its parts.

Temporal Aggregation is the form of abstraction in which a collection of PUs $pu_i$, $i = 1, \ldots, n$, with presentational durations $pu_i.\tau$ forms a higher-level PU $pu$. The presentational duration $pu.\tau$ of this higher-level PU “temporally consists of” the presentational durations $pu_i.\tau$ of its constituents (see Example 2.1). The higher-level presentational unit $pu$ is called a temporal aggregate of the $pu_i$, and its presentational duration $pu.\tau$ equals the union of the presentational durations $pu_i.\tau$ of its constituents.

The important features of a temporal aggregate are:

a) it is also a PU, with a presentational duration attribute;

b) the presentational durations of its constituent PUs are “within” (or play during) the presentational duration of the temporal aggregate;

c) the presentational duration $pu.\tau$ of the aggregate PU does not start before the earliest start time, or end after the latest end time, of its constituent PUs;

d) $pu.\tau$ is a single temporal interval without “temporal” holes.

Definition 2.6: Let $pu = A(pu_1, pu_2, \ldots, pu_n)$ be an aggregation of component PUs $pu_1, pu_2, \ldots, pu_n$. Then $pu$ is a temporal aggregation of $pu_1, pu_2, \ldots, pu_n$, denoted $A_T$, with presentational duration $A_T.\tau$, if and only if:

$$A_T(pu) \iff \big(\forall\, pu_i \in A:\ During(pu_i.\tau,\ pu.\tau)\big) \wedge Equals\Big(pu.\tau,\ \bigcup_{i=1}^{n} pu_i.\tau\Big)$$

(During and Equals are temporal relationships [3]; see Figure 2.)

Example 2.1: Suppose a scene consists of three PUs of type image, video and audio, with presentational durations $pu_I.\tau$, $pu_V.\tau$ and $pu_A.\tau$ respectively, and $Scene = A_T(pu_I, pu_V, pu_A)$. Then:

$$During(pu_I.\tau,\ scene.\tau) \wedge During(pu_V.\tau,\ scene.\tau) \wedge During(pu_A.\tau,\ scene.\tau)$$

$$Equals(scene.\tau,\ pu_I.\tau \cup pu_V.\tau \cup pu_A.\tau)$$

[Figure: the presentational durations τscene, τaudio, τvideo and τimage]
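Definition 2.6 can be checked mechanically on concrete intervals. In the Python sketch below, `During` is read as non-strict containment, so a constituent may share the aggregate's start or end point. This reading is our assumption: Allen's strict “during” would conflict with the Equals condition, which requires some constituent to start exactly when the aggregate starts. The interval values used for Example 2.1 are hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end)


def union_intervals(intervals: List[Interval]) -> List[Interval]:
    """Merge overlapping or touching intervals into sorted, disjoint pieces."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def within(inner: Interval, outer: Interval) -> bool:
    """Non-strict containment: our reading of During in Definition 2.6."""
    return outer[0] <= inner[0] and inner[1] <= outer[1]


def is_temporal_aggregate(aggregate: Interval, parts: List[Interval]) -> bool:
    """All parts lie within the aggregate, and their union equals it (no temporal holes)."""
    if not parts:
        return False
    return all(within(p, aggregate) for p in parts) and union_intervals(parts) == [aggregate]


# Example 2.1 with hypothetical timings: image, video and audio of one scene.
image, video, audio = (0, 10), (2, 20), (0, 20)
assert is_temporal_aggregate((0, 20), [image, video, audio])
assert not is_temporal_aggregate((0, 20), [(0, 5), (7, 20)])   # temporal hole
assert not is_temporal_aggregate((0, 10), [(0, 10), (0, 12)])  # music plays beyond the scene
```

Strictly, the union condition already implies the containment condition; the sketch checks both only to mirror the two conjuncts of the definition.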


Examples of Temporal aggregations in common authoring paradigms

i. Macromedia Director [35] paradigm: here, a PU (e.g. a multimedia scene) is typically specified as a sequence of time frames. Each frame consists of several elementary PUs, i.e. media objects with presentational duration and presentational position. Such a PU is a temporal aggregate of its constituent PUs, with presentational duration equal to the temporal interval defined by the set of time frames, if and only if two conditions hold. First, all the constituent media objects “play within” this temporal interval. Second, each time frame has at least one active media object, so there are no “temporal” holes. If a constituent PU, e.g. background music, continues to play into the succeeding time frames, the PU is not a temporal aggregate. In this paradigm, a multimedia application is typically a set of inter-linked temporal aggregations.

ii. HTML / web authoring paradigm: a PU is usually determined by the media objects in a web page; such a PU can be characterized as a temporal aggregate if none of its constituent media objects plays outside the temporal interval during which the web page is active (e.g. by extending into the previous or the next page).

Temporal Grouping

Grouping or Association “is a form of abstraction in which a relationship between member objects is considered

as a higher level set object. An instance of a set object class can be decomposed into a set of instances of the

member object classes and this establishes a member-of relationship between a member object and a set object”

[9].

Temporal-grouping is a form of abstraction in which a collection (group) of similar PUs $pu_i$, $i = 1, \ldots, n$ (i.e. PUs with the same presentational structure) with presentational durations $pu_i.\tau$, temporally related with the same (or a similar) temporal relationship $R_t$, form a higher level PU $pu$ whose presentational duration $pu.\tau$ “is a temporal group of” the member presentational durations $pu_i.\tau$ (see Example 2.2). The higher level PU $pu$ is called a temporal group of the $pu_i$ with temporal relation $R_t$, and its presentational duration $pu.\tau$ equals the minimal cover of the $pu_i.\tau$. $R_t$ is a temporal constraint on the set.

A group of PUs temporally related by “similar” temporal relationships can be considered a temporal grouping if a more generic temporal relationship is used instead. For instance, a group of PUs in which either “meets” or “before” holds between successive members can be considered a temporal grouping in which the temporal relationship “sequential” holds for all members. A temporal grouping without temporal constraints emphasizes the similarity of the temporal relationships within the set and ignores the exact relationship (abstraction transformations on abstraction constructs and temporal relations are discussed in section 3.4).

Definition 2.7: Let $pu = G(pu_1, pu_2, \ldots, pu_n)$ be a grouping of similar PUs $pu_i$, $i = 1, \ldots, n$, and let $R_t$ be a temporal relationship. Then $pu$ is a temporal grouping of the $pu_i$, noted $G_T$, with presentational duration $G_T.\tau$, if and only if $R_t$ holds between all pairs $(pu_i.\tau, pu_{i+1}.\tau)$:

$$G_T(pu) \Leftrightarrow \forall pu_i, pu_{i+1} \in G,\ R_t(pu_i.\tau, pu_{i+1}.\tau)$$


Example 2.2: A sequence of slides where the temporal relationship overlaps holds between every pair of successive slides is a temporal grouping if:

$$SlideShow = G_T(slide_1, slide_2, \ldots, slide_n)\{Overlaps\} \Leftrightarrow \forall slide_i, slide_{i+1},\ Overlaps(slide_i.\tau, slide_{i+1}.\tau)$$

$$SlideShow.\tau = [slide_1.\tau.start,\ slide_n.\tau.end]$$

[Figure: overlapping durations τslide1, τslide2, …, τslide n spanning τslide show]

Notice that, for this to be presentationally meaningful, suitable spatial relationships must hold between successive slides.
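Definition 2.7 and Example 2.2 reduce to a check over successive pairs plus a minimal cover. In the Python sketch below, durations are assumed to be (start, end) pairs with start < end, overlaps and meets follow Allen's definitions, and the function names are hypothetical. For an overlaps chain, the minimal cover coincides with $[slide_1.\tau.start,\ slide_n.\tau.end]$, as stated in the example.

```python
from typing import Callable, List, Tuple

Interval = Tuple[float, float]  # (start, end), assumed start < end


def overlaps(a: Interval, b: Interval) -> bool:
    """Allen's overlaps: a starts first, b starts before a ends, a ends first."""
    return a[0] < b[0] < a[1] < b[1]


def meets(a: Interval, b: Interval) -> bool:
    """Allen's meets: a ends exactly when b starts."""
    return a[1] == b[0]


def is_temporal_grouping(members: List[Interval],
                         relation: Callable[[Interval, Interval], bool]) -> bool:
    """Definition 2.7: the relation R_t holds for every pair of successive members."""
    return all(relation(a, b) for a, b in zip(members, members[1:]))


def minimal_cover(members: List[Interval]) -> Interval:
    """Presentational duration of the group: smallest interval covering all members."""
    return (min(s for s, _ in members), max(e for _, e in members))


slides = [(0, 10), (8, 18), (16, 26)]  # each slide fades into the next
print(is_temporal_grouping(slides, overlaps))  # True
print(minimal_cover(slides))                   # (0, 26)
print(is_temporal_grouping(slides, meets))     # False
```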

b. Representing Temporal Relations of multimedia applications at the semantic level

Temporal Aggregation and Grouping, defined above, are two abstraction constructs posing certain temporal constraints. However, temporal synchronization information of PUs also refers to temporal constraints on such abstraction constructs, both within the constituent PUs of a PU and among PUs. Allen’s 13 temporal relationships between time intervals [3], namely before, meets, overlaps, during, starts, finishes and equals, together with the inverses of the first six, form the basic “vocabulary” for temporal constraints at the semantic level. The SMA model handles these relationships and their combinations, formed with logical operators such as not ($\neg$), and ($\wedge$) and or ($\vee$) (e.g. $before \vee meets$), as temporal integrity constraints. Quantitative values of presentational durations (such as concrete start/end time instances of presentational durations, as well as lengths of presentational durations, e.g. the actual duration of a video) are ignored and abstracted to the corresponding qualitative information. A generalization hierarchy of temporal relations (e.g. the hierarchy in Figure 2) allows variable precision at this level: less information is given by limiting the set of temporal relationships to sequential and parallel, while more information is provided if qualitative distances (near, far etc.) are captured as well [11].

Figure 2: A hierarchy of temporal constraints (an is-a hierarchy relating the generic constraints parallel and sequential to the relations overlaps, before, during, starts, finishes, meets and equal)
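Abstracting quantitative durations into qualitative relations, and then moving up a generalization hierarchy, can be sketched as follows. allen_relation returns which of Allen's 13 relations holds between two (start, end) intervals, using the relation names of the SMA-L grammar; generalize maps a relation to sequential or parallel. That grouping (before, after, meets and met-by as sequential, everything else as parallel) is our reading of Figure 2 and of the temporal grouping example above, not a definition taken from the report.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (start, end), assumed start < end

# Assumed reading of Figure 2: before/meets and their inverses are "sequential";
# every other Allen relation means the two intervals share time ("parallel").
SEQUENTIAL = {"before", "after", "meets", "met-by"}


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the single Allen relation that holds between intervals a and b."""
    (s1, e1), (s2, e2) = a, b
    if s1 == s2 and e1 == e2:
        return "equal"
    if e1 < s2:
        return "before"
    if e2 < s1:
        return "after"
    if e1 == s2:
        return "meets"
    if e2 == s1:
        return "met-by"
    if s1 == s2:
        return "starts" if e1 < e2 else "started-by"
    if e1 == e2:
        return "finishes" if s1 > s2 else "finished-by"
    if s2 < s1 and e1 < e2:
        return "during"
    if s1 < s2 and e2 < e1:
        return "contains"
    # Remaining case: proper partial overlap
    return "overlaps" if s1 < s2 else "overlapped-by"


def generalize(relation: str) -> str:
    """One level up the assumed hierarchy: keep only sequential vs parallel."""
    return "sequential" if relation in SEQUENTIAL else "parallel"


for a, b in [((0, 5), (7, 9)), ((0, 5), (5, 9)), ((0, 5), (3, 9)), ((2, 4), (0, 9))]:
    r = allen_relation(a, b)
    print(a, b, r, generalize(r))
```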

2.3.2.2 Representing Spatial Structure of multimedia applications at the semantic level (SMA’s spatial structure)

a. Spatial Abstraction Constructs

In [52] it is noted that when dealing with spatial objects, i.e. those whose position in space matters to the information system, it is often the case that if objects A, B and C constitute object X, then the positions of A, B and C form a subset of the position of X. Thus spatial aggregation and spatial grouping were introduced as simple extensions to modeling primitives for conveying this extra piece of information. In [37] we identified the particular interpretation of spatial aggregation and grouping in the multimedia domain and defined the corresponding abstraction constructs.


Let $U = \{pu_i \mid pu_i \in PU,\ 1 \le i \le n\}$ and let $pu_i.p$ be the presentational position of $pu_i$ for $i = 1, \ldots, n$. Let also $R_s(pu.p, pu'.p)$ denote a spatial relationship $R$ (such as “disjoint” [13]) between pairs of presentational positions.

Spatial Aggregation

Spatial Aggregation is the form of abstraction in which a collection of PUs $pu_i$, $i = 1, \ldots, n$ with presentational positions $pu_i.p$ form a higher level PU $pu$ whose presentational position $pu.p$ “spatially consists of” the presentational positions $pu_i.p$ of its constituents (see Example 2.3). The higher level presentational unit $pu$ is called a spatial aggregate of the $pu_i$, and its presentational position $pu.p$ equals the union of the $pu_i.p$ of its constituents.

The important features of a spatial aggregate are: a) it is also a PU with a presentational position attribute, b) the presentational positions of the constituent PUs are “within” (or appear inside) the presentational position of the spatial aggregate, c) the presentational position $pu.p$ of the aggregate PU does not extend beyond the space occupied by its constituent PUs, d) $pu.p$ is a region without “spatial” holes.

Definition 2.8: Let $pu = A(pu_1, pu_2, \ldots, pu_n)$ be an aggregation of component PUs $pu_1, pu_2, \ldots, pu_n$. Then $pu$ is a spatial aggregation of $pu_1, pu_2, \ldots, pu_n$, noted $A_S$, with presentational position $A_S.p$, if and only if:

$$A_S(pu) \Leftrightarrow \forall pu_i \in A,\ Covers(pu_i.p, pu.p) \wedge Equal\Big(pu.p, \bigcup_{i=1}^{n} pu_i.p\Big)$$

(Covers and Equal are spatial relationships [13], see Figure 3).

Example 2.3: If a multimedia scene consists of three visual PUs of type image, video and text with presentational positions $pu_I.p$, $pu_V.p$ and $pu_T.p$ respectively, then $Scene = A_S(pu_I, pu_V, pu_T)$ holds if and only if:

$$Covers(pu_I.p, scene.p) \wedge Covers(pu_V.p, scene.p) \wedge Covers(pu_T.p, scene.p) \wedge Equal(scene.p, pu_I.p \cup pu_V.p \cup pu_T.p)$$

[Figure: scene layout with a background image, a video and text areas]
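Definition 2.8 can likewise be checked on a concrete screen layout, e.g. when abstracting an existing application into an SMA. The sketch below simplifies under stated assumptions: positions are axis-aligned rectangles in integer pixel coordinates, the aggregate's position is given as a rectangle, and the union is computed by rasterizing to pixels, which is only practical for small examples. It checks the prose properties b) and d) (constituents appear inside the aggregate, and the aggregate is exactly their union, without spatial holes); the function names are hypothetical.

```python
from typing import List, Set, Tuple

Rect = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels, x1 < x2, y1 < y2


def cells(r: Rect) -> Set[Tuple[int, int]]:
    """The set of unit pixels covered by a rectangle (x2 and y2 exclusive)."""
    x1, y1, x2, y2 = r
    return {(x, y) for x in range(x1, x2) for y in range(y1, y2)}


def inside_or_on(inner: Rect, outer: Rect) -> bool:
    """inner lies within outer; boundaries may touch."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def is_spatial_aggregate(aggregate: Rect, parts: List[Rect]) -> bool:
    """Every part appears inside the aggregate, and the union of the parts
    is exactly the aggregate region (no spatial holes)."""
    if not parts or not all(inside_or_on(p, aggregate) for p in parts):
        return False
    covered: Set[Tuple[int, int]] = set()
    for p in parts:
        covered |= cells(p)
    return covered == cells(aggregate)


# Example 2.3 on a 64x48 screen: a background image fills the scene,
# with a video and a text box placed on top of it.
scene = (0, 0, 64, 48)
print(is_spatial_aggregate(scene, [(0, 0, 64, 48), (4, 4, 36, 28), (40, 4, 60, 44)]))  # True
# Without the background image, part of the scene is left uncovered (a hole)
print(is_spatial_aggregate(scene, [(4, 4, 36, 28), (40, 4, 60, 44)]))  # False
```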

Examples of Spatial aggregations in common authoring paradigms

i. Macromedia Director [35] paradigm: within a temporal interval, the visual PUs (e.g. visual media objects) that appear simultaneously on a screen portion form a spatial aggregate; hence, each time frame in the score window defines a spatial aggregate of the PUs present in the score channels.

ii. HTML / web authoring paradigm: a web page forms a spatial aggregate of its constituent PUs.

Spatial Grouping

Spatial-grouping is a form of abstraction in which a collection (group) of similar PUs $pu_i$, $i = 1, \ldots, n$ with presentational positions $pu_i.p$, spatially related with the same spatial relationship $R_s$, form a higher level PU $pu$ whose presentational position $pu.p$ “is a spatial group of” the member presentational positions $pu_i.p$ (see Example 2.4). The higher level PU $pu$ is called a spatial group of the $pu_i$ with spatial relation $R_s$, and its presentational position $pu.p$ equals the minimal cover of the $pu_i.p$. $R_s$ is a spatial constraint on the set.

Definition 2.9: Let $pu = G(pu_1, pu_2, \ldots, pu_n)$ be a grouping of similar PUs $pu_i$, $i = 1, \ldots, n$, and let $R_s$ be a spatial relationship. Then $pu$ is a spatial grouping of the $pu_i$, noted $G_S$, with presentational position $G_S.p$, if and only if $R_s$ holds between all pairs $(pu_i.p, pu_{i+1}.p)$:

$$G_S(pu) \Leftrightarrow \forall pu_i, pu_{i+1} \in G,\ R_s(pu_i.p, pu_{i+1}.p)$$

Example 2.4: A group of buttons ($button_1$, $button_2$, …, $button_n$ placed side by side) where the spatial relationship meets holds between every pair of successive buttons is considered a spatial grouping if:

$$ButtonBar = G_S(button_1, button_2, \ldots, button_n)\{Meets\} \Leftrightarrow \forall button_i, button_{i+1},\ Meets(button_i.p, button_{i+1}.p)$$

$$ButtonBar.p = [button_1.p.(x_1, y_1),\ button_n.p.(x_n, y_n)]$$
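A sketch of Definition 2.9 for Example 2.4, assuming button positions are closed axis-aligned rectangles (x1, y1, x2, y2). Meets is implemented as the usual topological meet (boundaries touch, interiors do not overlap), and the group's position is the bounding box, i.e. the minimal cover. For a left-to-right bar this runs from the first button's top-left corner to the last button's bottom-right corner, which is how we read ButtonBar.p in the example. The function names are hypothetical.

```python
from typing import Callable, List, Tuple

Rect = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x1 < x2, y1 < y2


def meets(a: Rect, b: Rect) -> bool:
    """Topological meet for closed rectangles: boundaries touch, interiors do not overlap."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    touching = ix1 <= ix2 and iy1 <= iy2   # the closed rectangles intersect
    zero_area = ix1 == ix2 or iy1 == iy2   # ...but only along an edge or corner
    return touching and zero_area


def is_spatial_grouping(members: List[Rect],
                        relation: Callable[[Rect, Rect], bool]) -> bool:
    """Definition 2.9: R_s holds between every pair of successive members."""
    return all(relation(a, b) for a, b in zip(members, members[1:]))


def minimal_cover(members: List[Rect]) -> Rect:
    """Presentational position of the group: bounding box of all members."""
    return (min(r[0] for r in members), min(r[1] for r in members),
            max(r[2] for r in members), max(r[3] for r in members))


# Example 2.4: three 20x10 buttons laid edge to edge
bar = [(0, 0, 20, 10), (20, 0, 40, 10), (40, 0, 60, 10)]
print(is_spatial_grouping(bar, meets))  # True
print(minimal_cover(bar))               # (0, 0, 60, 10)
```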

b. Representing Spatial Relations of multimedia applications at the semantic level

Similarly to the temporal dimension, the sixteen 2D topological relations [13], namely disjoint, meets, overlaps, inside, covered_by, covers and equal, form the basic “vocabulary” for spatial constraints of multimedia applications at the semantic level. A generalization hierarchy of 2D topological relations (e.g. Figure 3) allows variable precision at this level: less information is given by limiting the set of topological relationships to general overlap and disjoint, while more information is provided if qualitative distances (near, far etc.) are captured as well [11].

Figure 3: A hierarchy of 2D spatial constraints (an is-a hierarchy relating the generic constraints general overlap, within, boundary_overlap, boundary_disjoint and boundary_meets to the relations disjoint(x,y), meets(x,y), overlaps(x,y), inside(x,y), covered_by(x,y), covers(x,y) and equal(x,y))
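To illustrate how a generalization hierarchy lowers spatial precision, the sketch below classifies the relation between two axis-aligned rectangles into eight region relations (disjoint, meet, overlap, inside, covered_by, contains, covers, equal) and then maps it to a coarse two-way split. Restricting positions to rectangles is a simplification, and the coarse mapping is an assumption: we read “general overlap” as “the interiors share points”, so meet falls on the disjoint side. Figure 3 may draw this boundary differently.

```python
from typing import Tuple

Rect = Tuple[float, float, float, float]  # closed (x1, y1, x2, y2), x1 < x2, y1 < y2


def within(a: Rect, b: Rect) -> bool:
    """a lies inside b; boundaries may touch."""
    return b[0] <= a[0] and b[1] <= a[1] and a[2] <= b[2] and a[3] <= b[3]


def strictly_inside(a: Rect, b: Rect) -> bool:
    """a lies in the interior of b, with no boundary contact."""
    return b[0] < a[0] and b[1] < a[1] and a[2] < b[2] and a[3] < b[3]


def topological_relation(a: Rect, b: Rect) -> str:
    """Region relation between rectangles a and b."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    if ix1 > ix2 or iy1 > iy2:
        return "disjoint"            # no common point at all
    if ix1 == ix2 or iy1 == iy2:
        return "meet"                # only boundary points in common
    if a == b:
        return "equal"
    if within(a, b):
        return "inside" if strictly_inside(a, b) else "covered_by"
    if within(b, a):
        return "contains" if strictly_inside(b, a) else "covers"
    return "overlap"


def coarse(relation: str) -> str:
    """Assumed top level of the hierarchy: do the interiors share points?"""
    return "disjoint" if relation in {"disjoint", "meet"} else "g_overlap"


print(topological_relation((2, 2, 4, 4), (0, 0, 10, 10)), coarse("meet"))
```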

2.3.2.3 Representing multimedia content at the semantic level

In conceptual modeling, Classification, a form of abstraction in which a collection of objects is considered a higher

level object class, is used to classify and describe objects in terms of object classes; hence, it is natural in the SMA

model to represent media objects by their corresponding classes (data types of multimedia content). Specific

properties of media objects are ignored at this stage. Abstraction hierarchies of multimedia data classes allow a

variable precision at this level. For instance, the content data type SELECTOR is a generalization of data types

MENU, EVENTER and BUTTON.
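A classification hierarchy of content data types can be represented as simple parent links. In the sketch below only the SELECTOR to MENU, EVENTER and BUTTON links come from the text; the VISUAL_OBJECT and CONTENT links are hypothetical placeholders suggested by the data type names of Table 1, not the report's actual hierarchy. is_a lets a query for a general type match a more specific stored type, and generalize lowers the precision of a stored type.

```python
from typing import Dict, Optional

# Parent links (child -> parent) of a content data type hierarchy.
# SELECTOR -> MENU / EVENTER / BUTTON is taken from the text; the
# VISUAL_OBJECT and CONTENT links are hypothetical placeholders.
PARENT: Dict[str, Optional[str]] = {
    "CONTENT": None,
    "VISUAL_OBJECT": "CONTENT",
    "IMAGE": "VISUAL_OBJECT",
    "VIDEO": "VISUAL_OBJECT",
    "SELECTOR": "CONTENT",
    "MENU": "SELECTOR",
    "EVENTER": "SELECTOR",
    "BUTTON": "SELECTOR",
}


def is_a(data_type: str, ancestor: str) -> bool:
    """True if data_type equals ancestor or lies below it in the hierarchy."""
    current: Optional[str] = data_type
    while current is not None:
        if current == ancestor:
            return True
        current = PARENT.get(current)
    return False


def generalize(data_type: str, levels: int = 1) -> str:
    """Replace data_type by its ancestor `levels` steps up, stopping at the root."""
    for _ in range(levels):
        parent = PARENT.get(data_type)
        if parent is None:
            break
        data_type = parent
    return data_type


print(is_a("BUTTON", "SELECTOR"))  # True: a stored BUTTON satisfies a query for SELECTOR
print(generalize("IMAGE"))         # VISUAL_OBJECT
```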


2.4 The SMA model graphical notation (extended-OMT model notation) and the corresponding SMA Definition and Query Language (SMA-L)

2.4.1 The Extended-OMT model graphical notation

The abstraction constructs proposed for representing SMA’s presentational structure are generic and can be used with any semantic model that has the minimal functionality of allowing the construction of complex objects from simpler ones. We illustrate this with the Object Modeling Technique (OMT) [46], resulting in an Extended-OMT model.

Figure 4: Extensions to OMT Object Model graphical notation (extensions to the OMT aggregation construct: Temporal Aggregation, annotated T{<Temporal Constraints>}, and Spatial Aggregation, annotated S{<Spatial Constraints>}; extensions to the OMT association construct: Temporal Grouping, annotated T{<Temporal Constraints>}, and Spatial Grouping, annotated S{<Spatial Constraints>})

2.4.2 Semantic Multimedia Abstraction (SMA) Definition and Query Language (SMA-L)

For the representation and manipulation of SMAs, the Semantic Multimedia Abstraction Definition and Query Language (SMA-L) has been defined; its formal syntax is given in BNF (Table 1). SMA-L was built on the Extended-OMT model, and thus any SMA modeled using the Extended-OMT model can be represented in SMA-L. SMA-L is a declarative object-oriented language which:

• allows the representation of the conceptual and presentational structure of SMAs (c_units and p_units represent conceptual and presentational units respectively);

• contains predicates corresponding to the temporal and spatial abstraction constructs (aggregation and grouping) defined for SMAs for forming PUs, as well as to the presentational views of PUs (logical, temporal and spatial), allowing users to formulate queries on complex structures of multimedia applications;

• provides a way of defining PUs, and consequently SMAs, at various abstraction levels in terms of their conceptual and presentational properties at the semantic level, through abstraction hierarchies on abstraction constructs, constraints and multimedia data types.

2.4.2.1 Syntax of SMA-L

The BNF notation of the SMA-L syntax is given in Table 1. Words in <italics> denote non-terminal elements of

the language. Clauses in [ ] are optional arguments. Bold is used to denote reserved words.


Table 1: Semantic Multimedia Abstractions Definition and Query Language (SMA-L)

<SMA> : <unit> | <unit> <SMA>   ; an SMA is a sequence of conceptual and/or presentational units
<unit> : <c_unit> | <p_unit>
<c_unit> : C_UNIT <unit_name> [TYPE <c_unit_types>] [PRESENTED_BY <p_unit_list>]   ; conceptual unit
<unit_name> : identifier
<c_unit_types> : <c_unit_type> | <c_unit_type>, <c_unit_type>
<c_unit_type> : <simple_c_unit> | <composite_c_unit>
<simple_c_unit> : identifier | ABSTRACT | LINK (<source_unit_list>) (<target_unit_list>)
<composite_c_unit> : <abstraction construct> [<{constraint}>]
<p_unit> : P_UNIT <unit_name> [TYPE <p_unit_types>]   ; presentational unit
<p_unit_types> : <p_unit_type> | <p_unit_type>, <p_unit_type>
<p_unit_type> : <simple_p_unit> | <composite_p_unit>
<simple_p_unit> : <content data type> | ABSTRACT | LINK (<source_p_unit_list>) (<target_p_unit_list>)
<content data type> : CONTENT | MULTIPLEXED_CONTENT | COMPOSITE | VISUAL_OBJECT | INPUT
    | OUTPUT | IMAGE | VIDEO | AUDIO | ANIMATION | TEXT | GRAPHICS
    | PICKER | HOTSPOT | SELECTABLE_CONTENT | STRING | VALUATOR
    | SELECTOR | MENU | EVENTER | BUTTON
    | SLIDE_SHOW | INTERACTIVE_IMAGE   ; content data types can be extended to new types
<composite_p_unit> : <abstraction construct> [ : <abstraction view> ] [<{constraint}>]
<abstraction construct> : GROUP_OF (<member_unit>)
    | AGGREGATION_OF (<component_unit_list>)
    | GENERIC (<category_unit_list>)
<abstraction view> : [<temporal>] [<spatial>] [<logical>]   ; the "view" of presentational units
<temporal> : [T] [<{temporal constraint}>]   ; temporal view
<spatial> : [S] [<{spatial constraint}>]   ; spatial view
<logical> : [<{constraint}>]   ; logical view
<p_unit_list> : <p_unit reference> | <p_unit_list>, <p_unit reference>
<member_unit> : <unit reference>
<component_unit_list> : <unit reference> | <component_unit_list>, <unit reference>
<category_unit_list> : <unit reference> | <category_unit_list>, <unit reference>
<source_p_unit_list> : <p_unit reference> [ : <condition>]
    | <source_p_unit_list>, <p_unit reference> [ : <condition>]
<target_p_unit_list> : <p_unit reference> [ : <action>]
    | <target_p_unit_list>, <p_unit reference> [ : <action>]
<unit reference> : <c_unit reference> | <p_unit reference>
<c_unit reference> : <unit_name> | <c_unit_type>
<p_unit reference> : <unit_name> | <p_unit_type>
<constraint> : <statement> | not <constraint>
    | <constraint> and <constraint>
    | <constraint> or <constraint> | (<constraint>)
<condition> : <statement> | not <condition>
    | <condition> and <condition>
    | <condition> or <condition> | (<condition>)
<action> : <statement>
<statement> : string | function
<temporal constraint> : <temporal relation> | not <temporal relation>
    | <temporal constraint> and <temporal constraint>
    | <temporal constraint> or <temporal constraint>
    | (<temporal constraint>)
<temporal relation> : meets | met-by | before | after | during | contains | overlaps | overlapped-by
    | starts | started-by | finishes | finished-by | equal
    | sequential | parallel
<spatial constraint> : <spatial relation> | not <spatial relation>
    | <spatial constraint> and <spatial constraint>
    | <spatial constraint> or <spatial constraint>
    | (<spatial constraint>)
<spatial relation> : disjoint | meet | overlap | covered_by | covers | inside | contains | equal
    | g_overlap | within | b_disjoint | b_meets | b_overlap
<query definition> : SELECT <semantic mm abstraction name> <match statement>
<match statement> : MATCH (<semantic mm abstraction>)
    | <match statement> and <match statement>
    | <match statement> or <match statement>
<semantic mm abstraction name> : identifier
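As an illustration of how the <temporal constraint> productions of Table 1 might be interpreted, the following Python sketch parses a constraint such as before or meets and evaluates it against the Allen relation observed between two PUs. The grammar does not fix operator precedence, so we assume and binds tighter than or; sequential and parallel are resolved with an assumed grouping (before, after, meets and met-by are sequential, all other relations parallel). This is a hypothetical interpreter, not an SMA-L implementation from the report.

```python
import re
from typing import Callable, List

# Assumed grouping of Allen's relations under the generic constraints.
SEQUENTIAL = {"before", "after", "meets", "met-by"}
RELATIONS = SEQUENTIAL | {"during", "contains", "overlaps", "overlapped-by", "starts",
                          "started-by", "finishes", "finished-by", "equal"}
GENERIC = {"sequential", "parallel"}

Predicate = Callable[[str], bool]  # applied to the observed Allen relation name


def atom(name: str) -> Predicate:
    """A single <temporal relation>; generic names match via the assumed grouping."""
    if name not in RELATIONS | GENERIC:
        raise ValueError(f"unknown temporal relation: {name!r}")

    def holds(observed: str) -> bool:
        generic = "sequential" if observed in SEQUENTIAL else "parallel"
        return name in (observed, generic)
    return holds


def both(p: Predicate, q: Predicate) -> Predicate:
    return lambda r: p(r) and q(r)


def either(p: Predicate, q: Predicate) -> Predicate:
    return lambda r: p(r) or q(r)


def parse(text: str) -> Predicate:
    """Recursive-descent parser for <temporal constraint>; 'and' binds tighter than 'or'."""
    tokens: List[str] = re.findall(r"[A-Za-z][A-Za-z_-]*|[()]", text)
    pos = 0

    def peek() -> str:
        return tokens[pos] if pos < len(tokens) else ""

    def take() -> str:
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of constraint")
        pos += 1
        return tokens[pos - 1]

    def primary() -> Predicate:
        if peek() == "(":
            take()
            inner = disjunction()
            if take() != ")":
                raise ValueError("expected ')'")
            return inner
        if peek() == "not":  # as in the grammar, 'not' negates a single relation
            take()
            negated = atom(take())
            return lambda r: not negated(r)
        return atom(take())

    def conjunction() -> Predicate:
        left = primary()
        while peek() == "and":
            take()
            left = both(left, primary())
        return left

    def disjunction() -> Predicate:
        left = conjunction()
        while peek() == "or":
            take()
            left = either(left, conjunction())
        return left

    result = disjunction()
    if pos != len(tokens):
        raise ValueError(f"unexpected token: {peek()!r}")
    return result


before_or_meets = parse("before or meets")
print(before_or_meets("meets"), before_or_meets("overlaps"))  # True False
print(parse("parallel and not equal")("during"))              # True
```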


2.5 Related Work

The M Model by Dionisio and Cardenas [12] and the ZYX model by Boll and Klas [7] follow a modeling approach similar to the SMA model. The M Model is a synthesis of the Extended ER and Object-Oriented data models, integrating spatial and temporal semantics with general database constructs; its basic construct is the “stream”, an ordered finite sequence of entities or values, while substreams and multistreams (aggregations of streams that create new, more complex streams) are the other two basic constructs of the model. In the SMA model, streams, substreams and multistreams are modeled with temporal groupings and temporal aggregations, which are generic extensions of the classic aggregation and grouping constructs. However, the M Model and its MQuery language can also be used for modeling and querying SMAs. The ZYX model introduces new constructs for multimedia document modeling; the model uses a hierarchical organization for the document structure, an extension of Allen’s model (which supports intervals of unknown duration) for modeling temporal synchronization, and a point-based description for modeling spatial layout. The ZYX model is a tree-based model where nodes represent “presentation elements” (a concept similar to our notion of presentational unit) and each node has a binding point that connects it to other elements. Spatio-temporal synchronization and interactivity are modeled with temporal, spatial or interaction elements (par, seq, loop, temporal-p and spatial-p, link, menu etc.); in the SMA model such relationships are modeled as constraints on presentational units (including temporal/spatial aggregation and grouping), which allow the conceptual and presentational structure to be modeled in a uniform way. As the ZYX model uses a structure similar to language-based models (XML, SMIL), the abstraction process from these models to ZYX is straightforward, and abstraction transformations (discussed in 3.4) can transform ZYX representations into SMA-L ones.

Conceptual modeling has been proposed for document information retrieval in [39], where principles from the database area are used to enhance the retrieval of multimedia documents; the model focuses on multimedia documents and is restricted to their logical, layout and conceptual views. Other object-oriented multimedia query languages could, with the appropriate extensions or modifications, be used for the same purpose as SMA-L, such as: the Multimedia Query Specification Language, along with the object-oriented data model for multimedia databases proposed by Hirzalla et al. [19], which allows the description of multimedia segments to be retrieved from a database containing information on media and on the spatial and temporal relationships between these media; the query language of the TIGUKAT object management system [43]; and the general purpose multimedia query language MOQL [33], which includes constructs to capture the temporal and spatial relations in multimedia data.

2.6 Application: Validation of the MULTIS production approach

Figure 5 depicts a part of the conceptual database schema of the MULTIS system for a series of Point of

Information Systems (POIs), modeled using the extended-OMT model. The corresponding statements in SMA-L

are also given.


C_UNIT POIS
    TYPE AGGREGATION_OF (GeographicArea)
C_UNIT GeographicArea
    TYPE AGGREGATION_OF (GROUP_OF (GeographicArea)),
         AGGREGATION_OF (GROUP_OF (Place), GROUP_OF (AreaView))
…………..
C_UNIT Landmark
    TYPE GENERIC (Museum, …. , Hotel)
……………….
C_UNIT Hotel
    PRESENTED_BY (AGGREGATION_OF (SubScene, Video))
P_UNIT SubScene
    TYPE AGGREGATION_OF (
        GROUP_OF (IMAGE) : T {meets}, S {equal},
        TEXT,
        ButtonList) : T {equal}, S {disjoint}
P_UNIT ButtonList
    TYPE GROUP_OF (Button) : T {equal}, S {meets}

Figure 5: Extended-OMT model of MULTIS POIS (classes POIS, Interactive Map, Geographic Area, HotSpot, Place, Image, Area View, Landmark, Museum, Castle and Hotel; presentational units sub-scene, Image, Video, Text, ButtonList and Button, annotated with the temporal and spatial constraints T{meets}, S{equal}, T{equals}, S{disjoint} and S{meets})

3. Semantic Multimedia Abstractions for Querying Large Multimedia Repositories

3.1 The opportunity of abstraction in multimedia information retrieval

Organized units of interactive multimedia material are rapidly becoming available beyond their original format, namely Compact Discs; the advent of the Web and the appearance of digital libraries enlarge the habitat of such multimedia units, which can now reside anywhere on the Internet, be distributed across local or global networks, or even have a transient and virtual existence: a net-surfing session on the Web is a multimedia application of this kind. Although such collections of applications are not organized as proper databases, they are very large repositories of multimedia information. For large collections of such applications, browsers and query mechanisms that address the multimedia data alone, while reasonably well developed, are inadequate: we lack techniques for efficient generic retrieval of structured multimedia information. To really tap this information resource we need a different approach to querying and navigating these repositories, one that resembles our own way of recalling information, i.e. human remembering [27].

Consider the following query: find multimedia electronic books explaining grammar phenomena of the English language, where phrasal verbs are explained through a page with a synchronized video and a piece of text in two languages; the video covers half of the screen and, when it is clicked, a translation text appears. This is an abstract specification of (possibly a part of) a multimedia application; it concerns the application's conceptual structure (a book has pages with phrasal verbs), its presentational structure, including its spatio-temporal synchronization, and its interactivity. It is exactly with respect to these characteristics that we would like to be able to query and navigate through a multimedia repository.


3.1.1 Principles of Human Remembering and the Need for mixed granularity levels

According to cognitive science, we think and remember using abstractions [27]; we build abstraction models of

varying granularity that depend on the task at hand as well as on the state of our knowledge in a domain.

Moreover, the process of changing representation levels and the multilevel representation of knowledge are

fundamental in common sense reasoning [44]. Starting at a high abstraction level -coarse granularity- and moving

towards a more detailed one -fine granularity- is a common approach in solving a problem [40]. The technique

used in AI to imitate this process is the use of hierarchies of abstraction models, each one in a different abstraction

level, and the definition of the relations between the different models in a hierarchy [20], [47], [25].

However, when we think and recall information, we normally mix granularity levels in a single representation. For instance, when recalling a place we visited, a description may include “an island, where in the harbor there is a castle and a 16th-century church, and there is a small village named ‘Sigri’”. To answer such a query using maps (a mature form of symbolic representation for complex information), we would need a multi-resolution map with only names for some large cities but with details such as street names and museums for others. Consequently, to allow information retrieval congruent with human remembering, we need techniques that support multilevel knowledge representation using various abstraction levels, as well as mixed abstraction levels or resolutions within the same representation (considered either as granularity levels at the same abstraction level or as hierarchies of different abstraction levels).

In multimedia applications, a number of factors affect the choice of abstraction level in both the design and the retrieval of applications, and an abstract representation may be “more detailed” for one part of an application and “more abstract” for another. When specifying MULTIS systems [53] we identified the following factors:

• the user view: conceptual, logical, temporal, spatial, interactivity or content. E.g. if the temporal synchronization matters most, the abstraction level of the other dimensions is kept high.

• the user’s knowledge and recollection of the multimedia application in any presentational view. E.g. when looking for an application with a slide show, one might or might not remember (or deem important) the slide synchronization.

• the tightness of a constraint in each dimension, implied by the significance of the behavior under consideration.

• the temporal scope, determined by the temporal interval over which the behavior of the application is analyzed. E.g. is one interested in the behavior over the whole application or over a few seconds of it? If a query focuses on the temporal interval of a slide show in an application, the slide synchronization may be considered important and specified in detail.

• the spatial scope, determined by the area of the screen over which the behavior is analyzed. E.g. is one interested in the behavior over the whole scene or over a small part of it?


• the temporal and spatial grain size: the degree of temporal and spatial precision required in the answer. E.g. is one interested in the exact temporal or spatial synchronization between the presentational units of a scene, or just in their temporal and spatial relationships?

• the content grain size: the degree of precision for content types required in the answer. E.g. image, or just a visual object?

• the grain size of the presentational structure.

3.1.2 Reducing complexity in search

Abstraction is used to decrease complexity in various problems, including search; speeding up search by using abstraction techniques is a common and widely studied approach in the field of AI [21]. Research aims to find methods for creating and using abstract spaces to improve the efficiency of classical search techniques such as heuristic search (especially state-space search). The basic idea behind these approaches is that, instead of solving a problem directly in the original search space, the problem is mapped onto and solved in an abstract search space; the abstract solution is then used to guide the search for a solution in the original space (guided search) [21].

In the multimedia domain, reducing the complexity of the search space, and hence improving information retrieval, is a “quantitative” objective for introducing abstraction. A repository of SMAs forms an abstract search space, of which we can have a hierarchy (see Sect. 3.4). An abstract answer to a query can be found by searching in the hierarchy of abstract spaces, in principle a computationally easier task. A method for using the abstract answer to guide the search can then be followed, for instance using the length of the abstract solution as a heuristic estimate of the distance to the goal [45], or using the abstract solution as a skeleton in the search process (the “refinement method” [47] or other variants such as path-marking and alternating opportunism [21]).
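The idea of answering a query in an abstract space first and then refining can be sketched with a much simpler scheme than the heuristic and refinement methods cited above: a filter-and-refine index. In this hypothetical sketch, each application is summarized by the sequence of Allen relations between its successive PUs, and its abstract signature replaces each relation by sequential or parallel (the same assumed grouping as before). A query is first answered in the abstract space, and only the applications in the matching abstract bucket are compared exactly. The signature format and the class name are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

SEQUENTIAL = {"before", "after", "meets", "met-by"}  # assumed grouping

Signature = Tuple[str, ...]  # Allen relations between successive PUs (hypothetical format)


def abstract(signature: Signature) -> Signature:
    """Map each concrete relation to its generic parent (sequential/parallel)."""
    return tuple("sequential" if r in SEQUENTIAL else "parallel" for r in signature)


class AbstractIndex:
    """Filter-and-refine: search the abstract space first, then refine the survivors."""

    def __init__(self) -> None:
        self.buckets: Dict[Signature, List[Tuple[str, Signature]]] = defaultdict(list)

    def add(self, app_id: str, signature: Signature) -> None:
        self.buckets[abstract(signature)].append((app_id, signature))

    def search(self, query: Signature) -> List[str]:
        candidates = self.buckets.get(abstract(query), [])       # abstract answer
        return [app for app, sig in candidates if sig == query]  # exact refinement


index = AbstractIndex()
index.add("tour", ("meets", "meets", "overlaps"))
index.add("lesson", ("before", "meets", "during"))
index.add("quiz", ("overlaps", "equal"))
print(index.search(("meets", "meets", "overlaps")))  # ['tour']
```

Only "tour" and "lesson" share the abstract bucket, so the exact comparison runs on two candidates instead of the whole repository.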

3.1.3 Approximate match retrievals - Filtering large multimedia repositories

There are many cases where queries aim to filter out interesting parts of large repositories; in such cases information retrieval is based on the similarity of the repository’s data to the user’s query, and approximate matching techniques are used for query evaluation [26], [1]. In order to filter large multimedia repositories in terms of the conceptual and presentational properties of multimedia applications, the user should be able to pose approximate queries and get a set of approximate answers that match the given query to a certain degree of similarity. Here, the similarity measure should also agree with human perception of similarity in multimedia applications.

Abstraction and abstraction hierarchies seem to have a significant role in filtering large multimedia repositories. An approximate query has the form of an SMA and, given an abstract search space of SMAs, approximate query evaluation is performed as a “normal” search process in a simplified abstract search space. Abstraction hierarchies are used as a basis for the query evaluation process and for the relaxation of queries (see Sect. 3.4).
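Query relaxation through an abstraction hierarchy can be sketched as a graded match: each position of a query matches fully when the relation is identical, and partially when the two relations only agree one level up the (assumed) temporal hierarchy. The weights 1 and 0.5 and the 0.5 threshold are hypothetical choices rather than a similarity measure from the report; as noted above, a real measure would need to agree with human perception of similarity.

```python
from typing import Dict, List, Tuple

SEQUENTIAL = {"before", "after", "meets", "met-by"}  # assumed grouping

Signature = Tuple[str, ...]  # Allen relations between successive PUs (hypothetical format)


def parent(relation: str) -> str:
    """Generic parent of a relation in the assumed hierarchy."""
    return "sequential" if relation in SEQUENTIAL else "parallel"


def similarity(query: Signature, candidate: Signature) -> float:
    """1 per exact match, 0.5 per match only after relaxing to the parent, else 0."""
    if len(query) != len(candidate):
        return 0.0
    if not query:
        return 1.0
    score = 0.0
    for q, c in zip(query, candidate):
        if q == c:
            score += 1.0
        elif parent(q) == parent(c):
            score += 0.5
    return score / len(query)


def approximate_search(query: Signature, repository: Dict[str, Signature],
                       threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return (application, degree) pairs at or above the threshold, best first."""
    scored = [(app, similarity(query, sig)) for app, sig in repository.items()]
    return sorted((s for s in scored if s[1] >= threshold), key=lambda s: -s[1])


repository = {
    "tour": ("meets", "meets", "overlaps"),
    "lesson": ("before", "meets", "during"),
    "quiz": ("overlaps", "equal", "during"),
}
for app, degree in approximate_search(("meets", "meets", "overlaps"), repository):
    print(app, round(degree, 2))
```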


3.1.4 Semantic Multimedia Abstractions and Existing Types of Metadata

One way to query the conceptual and presentational properties of multimedia applications is to capture these properties using metadata. The most significant works on metadata for digital media are presented in [30]. In [6], the metadata used for multimedia documents are classified according to the type of information captured. The categories include content-descriptive metadata, metadata for the representation of media types, and metadata for document composition; composition-specific metadata capture knowledge about the semantics of the logical components of multimedia documents, their role as part of a document and the relationships among these components. Metadata in SGML [49] and XML [14] documents are organized in document type definitions (DTDs), which are themselves part of the metadata and contain “element types” of metadata. Additionally, there exist metadata for collections of multimedia documents (DFR standard) [24]. Statistical metadata and metadata for the logical structure of documents are expected to optimize query processing on multimedia documents. In [28] a three-level architecture consisting of the ontology, metadata and data levels is presented to support queries that require the correlation of heterogeneous types of information; in this approach, metadata are information about the data in the individual databases and can be seen as an extension of the database schema.

Metadata are usually stored either as external (text) files or along with the original information, while object-relational database systems could also be employed to manage them. In [18], Grosky et al. propose content-based metadata that capture information about a media object from which information regarding its content can be inferred, and use these metadata to browse intelligently through a collection of media objects; image and video objects are used as surrogates of real-world objects, and metadata are modeled as specific classes that are part-of an image/video class.

SMAs are a type of metadata that capture the conceptual and presentational properties of multimedia applications at the semantic level. Building on existing types of metadata and extending them (e.g. extending the content-based metadata described in [18] from image and video surrogates of real-world objects to PUs), SMAs can be viewed as metadata on PUs, capturing both semantic information about the real-world objects that a PU represents and information about the presentational properties of the PU. Modeling metadata by introducing a new class as part-of every PU would require representing the conceptual and presentational structure as ordinary class attributes in a metadatabase schema, which seems rather cumbersome. The DTD approach of SGML and XML seems quite appropriate, since it captures the structural information of multimedia documents, and the XML-based recommendation SMIL [50] would allow information on the synchronization of media objects to be captured. However, there is no link between objects (or element types) in an XML DTD and media objects in SMIL documents; consequently, querying the spatio-temporal synchronization of the presentation of a real-world object requires correlating these two sources. Finally, query optimization would require higher-level metadata (statistical metadata, metadata for collections of applications, etc.) [18].
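To make the idea of SMAs as metadata on PUs more concrete, here is a minimal Python sketch of a PU record that carries both kinds of information. It assumes the PU structure outlined in the abstract (elementary PUs with a media object, play duration and screen position; composite PUs built recursively from other PUs) and adds a `concept` field for the real-world entity a PU presents. All class and field names (`ElementaryPU`, `CompositePU`, `concept`, `temporal`, `spatial`) are hypothetical and are not the SMA model or SMA-L.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List, Optional, Set, Tuple, Union

@dataclass
class ElementaryPU:
    name: str
    media_type: str                              # e.g. "IMAGE", "AUDIO", "VIDEO", "TEXT"
    duration: Optional[float] = None             # play duration in seconds
    position: Optional[Tuple[int, int]] = None   # screen position (x, y)
    concept: Optional[str] = None                # real-world entity presented (conceptual part)

@dataclass
class CompositePU:
    name: str
    parts: List[PU]
    temporal: Optional[str] = None   # qualitative temporal relation, e.g. "equals"
    spatial: Optional[str] = None    # qualitative spatial relation, e.g. "disjoint"
    concept: Optional[str] = None

PU = Union[ElementaryPU, CompositePU]

def media_types(pu: PU) -> Set[str]:
    """Presentational metadata: media types used anywhere inside a PU."""
    if isinstance(pu, ElementaryPU):
        return {pu.media_type}
    return set().union(*(media_types(p) for p in pu.parts))

def concepts(pu: PU) -> Set[str]:
    """Conceptual metadata: real-world entities presented anywhere inside a PU."""
    own = {pu.concept} if pu.concept else set()
    if isinstance(pu, ElementaryPU):
        return own
    return own.union(*(concepts(p) for p in pu.parts))
```

For example, a page whose video clip and caption are shown simultaneously and side by side could be recorded as `CompositePU("page", [clip, caption], temporal="equals", spatial="disjoint")`, so that a single record answers both conceptual and presentational questions.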


SMAs are modeled as a database schema using extended semantic models and can be stored either in a metadatabase containing the SMA representations or along with each PU of the multimedia applications, forming a distributed metadatabase. Thus, queries on the conceptual and presentational structure of multimedia applications are queries on such a metadatabase. Hierarchies of SMAs allow the definition of higher-level metadata (discussed in detail in Section 3.3).

3.2 On the Abstract Multimedia Space

Abstraction has been defined [17] as a mapping between two representations of a problem that preserves certain properties. The set of concrete, original representations is the Ground space, while an Abstract space is the set of their abstract representations.

Definition 3.1: A “Ground Multimedia Space” is a set of concrete representations of multimedia applications expressed in various models and languages; e.g. a set of HTML documents, a set of XML-based documents [14], or a set of Macromedia Director applications [35] each forms a Ground Multimedia Space.

Definition 3.2: An Abstract Multimedia Space is a repository of Semantic Multimedia Abstractions (SMAs); we call this repository a Semantic Multimedia Abstractions Database, or simply an SMA space.

Without loss of generality, we use the Extended-OMT model / SMA-L to represent the “content” of the SMA space. Hence, an SMA space is a repository of Extended-OMT object models or of sets of statements in SMA-L.

The SMA space is a set of metadata of multimedia applications; if the metadata are stored along with the multimedia applications of the ground multimedia space, it is a distributed repository of SMAs. Figure 6 shows the two different types of existing repositories: Multimedia Repositories (Ground Multimedia Spaces), which contain multimedia applications too loosely organized to be called a database, and the SMA space (Abstract Multimedia Space), whose instances are SMAs, each representing several multimedia applications.

[Figure 6: Ground and Abstract Multimedia Spaces. Ground Multimedia Space (repository of multimedia applications): databases of multimedia applications with a multimedia database schema, HTML/XML documents, SMIL documents, generic specifications (MTS). Abstract Multimedia Space (the SMA space): SMAs.]

Note that a number of concrete multimedia applications may correspond to the same SMA (Figure 7). Moreover, given the hierarchies of abstraction constructs and constraints (defined in Section 3.4), a specific multimedia representation can have many SMAs at various levels of abstraction.
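Both observations can be reproduced with a small Python sketch that derives SMAs at two levels from concrete play intervals. It assumes each PU is given as a `(start, end)` interval in seconds, computes Allen's qualitative relation between consecutive PUs as the first level, and, as a hypothetical second level, maps any relation in which the intervals share time to `parallel` and `before`/`meets` to `sequential`. This mapping echoes the relation names of Figure 7 but is our assumption, not the paper's definition. The interval values in the test are illustrative and are not taken from the figure.

```python
def allen(a, b):
    """Allen relation of interval a to interval b; intervals are (start, end)."""
    (a0, a1), (b0, b1) = a, b
    if a0 >= a1 or b0 >= b1:
        raise ValueError("intervals must have start < end")
    if a1 < b0:
        return "before"
    if a1 == b0:
        return "meets"
    if (a0, a1) == (b0, b1):
        return "equals"
    if a0 == b0 and a1 < b1:
        return "starts"
    if a1 == b1 and a0 > b0:
        return "finishes"
    if b0 < a0 and a1 < b1:
        return "during"
    if a0 < b0 < a1 < b1:
        return "overlaps"
    # The six remaining Allen relations are inverses of the ones above.
    return allen(b, a) + "_inverse"

def coarse(rel):
    """Hypothetical higher abstraction level over Allen relations."""
    return "sequential" if rel.split("_")[0] in ("before", "meets") else "parallel"

def smas(pus):
    """Two SMAs (tuples of relations between consecutive PUs) for one application."""
    fine = tuple(allen(x, y) for x, y in zip(pus, pus[1:]))
    return fine, tuple(coarse(r) for r in fine)
```

Two applications with different durations can share both SMAs, while a third may differ at the first level and still coincide at the second, which is exactly the many-to-one and one-to-many correspondence described above.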


3.2.1 Querying the Abstract Multimedia Space: Requirements for Query Languages

A query on the conceptual and presentational structure of multimedia applications is an SMA and serves as the key in the query evaluation process. The search space is the SMA space. The output of the query is the set of SMAs that “match” this key SMA. “Matching” the key SMA means that each retrieved SMA contains a fragment which “can be abstracted to the key SMA” if abstraction transformations are applied to it. Hence, the query language should have at least the expressive power of the language used to represent the SMA space.

[Figure 7: Semantic Multimedia Abstractions from a concrete multimedia application. A concrete multimedia application (scenes with Image1 and Image2 and their play durations) is abstracted to Semantic Multimedia Abstraction 1 (relationships T{starts}, S{g_overlap} and T{meets}) and to Semantic Multimedia Abstraction 2 (relationships T{parallel}, S and T{sequential}).]
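The matching condition can be sketched in Python under simplifying assumptions. Here an SMA is flattened into a set of `(media_type, relation, media_type)` triples, and a stored SMA matches a key if, for every key triple, it contains a triple with the same media types whose relation equals the key relation or generalizes to it. The small hierarchy is hypothetical: it borrows `within` as a generalization of `covered_by`/`inside` from Example 3.1 below and `parallel`/`sequential` from Figure 7. Names such as `can_abstract_to` and `query` are illustrative only.

```python
# Hypothetical abstraction hierarchy: child relation -> more general parent.
PARENT = {
    "starts": "parallel", "equals": "parallel",
    "meets": "sequential", "before": "sequential",
    "covered_by": "within", "inside": "within",
}

def can_abstract_to(concrete, key):
    """True if `concrete` equals `key` or generalizes to it through PARENT."""
    while concrete is not None:
        if concrete == key:
            return True
        concrete = PARENT.get(concrete)
    return False

def matches(key_sma, stored_sma):
    """Every key triple must be covered by some stored triple with the same
    media types and a relation that can be abstracted to the key relation."""
    return all(
        any(a == sa and b == sb and can_abstract_to(sr, r)
            for sa, sr, sb in stored_sma)
        for a, r, b in key_sma
    )

def query(key_sma, sma_space):
    """Names of the stored SMAs that match the key SMA."""
    return [name for name, sma in sma_space.items() if matches(key_sma, sma)]
```

A real SMA also carries nesting and PU identity, which this flat encoding ignores; it only illustrates the “can be abstracted to” test.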

Among the requirements for the query language are:

i. to support queries on combinations of conceptual and presentational properties of multimedia applications and to allow queries on complex structures of multimedia applications (by providing predicates corresponding to abstraction constructs);

ii. to support queries on the temporal and spatial synchronization of multimedia applications at various levels of abstraction;

iii. to allow mixing abstraction levels in a single query, as well as logical combinations of queries;

iv. given that the structure of the Abstract Multimedia Space being queried is unknown to the user, to provide mechanisms for efficiently filtering multimedia applications by fuzzy and incomplete queries, which, together with techniques for partial matching in query evaluation and for query relaxation, would improve the efficiency of the query evaluation process.
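Requirement iv can be illustrated with a minimal Python sketch of partial matching for fuzzy and incomplete queries. It assumes, hypothetically, that an SMA is a set of `(media_type, relation, media_type)` triples, that an incomplete query marks unknown parts with a wildcard `"*"`, and that a stored SMA is scored by the fraction of query constraints it satisfies; results above a threshold are returned best first. The wildcard, the scoring rule and the default threshold are our assumptions, not part of SMA-L.

```python
WILDCARD = "*"  # hypothetical marker for an unspecified part of a constraint

def satisfied(constraint, stored):
    """A stored triple satisfies a constraint if every non-wildcard part is equal."""
    return all(c == WILDCARD or c == s for c, s in zip(constraint, stored))

def score(key_sma, stored_sma):
    """Fraction of the key's constraints satisfied by at least one stored triple."""
    if not key_sma:
        return 1.0
    hits = sum(any(satisfied(c, s) for s in stored_sma) for c in key_sma)
    return hits / len(key_sma)

def ranked(key_sma, sma_space, threshold=0.5):
    """(score, name) pairs at or above the threshold, best first, ties by name."""
    scored = [(score(key_sma, sma), name) for name, sma in sma_space.items()]
    return sorted((p for p in scored if p[0] >= threshold),
                  key=lambda p: (-p[0], p[1]))
```

Lowering the threshold trades precision for recall, which is one simple way a partial-matching evaluator can cope with a user who does not know how the SMA space is structured.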

Based on the proposed SMA model, a query can be defined a) by a set of statements in SMA-L, b) graphically, by drawing the Extended-OMT model schema that represents the query, or c) visually, by an approximate description of the presentational structure of the application.

Following this visual approach, a software tool has been developed, initially for the purpose of partially specifying the presentational structure of MULTIS systems (part of the MTS). The tool is customizable per application domain; it allows the WYSIWYG definition of spatial PUs and produces the corresponding statements in SMA-L.


3.2.1.1 Example Queries in the SMA model / SMA-L

To illustrate how the SMA model and SMA-L meet the above requirements, we give a number of example queries

expressed in SMA-L or with the Extended-OMT model (our SMA model).

i. Queries on the presentational spatial structure of PUs

Example 3.1: “Get multimedia applications containing scenes with two photos, where the first is either covered_by the second or within it.” The spatial constraint here can be expressed either by a combination of two simple spatial relationships or by a generalized relationship such as “within”.

[Figure: two alternative representations of the query: a scene aggregating Image1 and Image2 with the spatial relationship S{covered_by inside}, OR a scene aggregating Image1 and Image2 with the generalized relationship S{within}.]
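How a generalized relationship such as “within” subsumes the two simple ones can be sketched in Python for axis-aligned screen rectangles. The sketch assumes rectangles given as `(x0, y0, x1, y1)` and uses common topological definitions (`inside`: contained without touching the boundary; `covered_by`: contained and touching it); relations not needed here are lumped into `other`. These definitions and the `GENERALIZED` table are our assumptions, not the paper's formal semantics of S{...}.

```python
from typing import Tuple

# Axis-aligned screen rectangle (x0, y0, x1, y1) with x0 < x1 and y0 < y1.
Rect = Tuple[float, float, float, float]

def spatial_relation(a: Rect, b: Rect) -> str:
    """Name the relation of rectangle a to rectangle b (small subset only)."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    if a == b:
        return "equal"
    if ax1 < bx0 or bx1 < ax0 or ay1 < by0 or by1 < ay0:
        return "disjoint"  # no common point, not even on the boundary
    if bx0 <= ax0 and ax1 <= bx1 and by0 <= ay0 and ay1 <= by1:
        # a lies in b: "inside" if it stays clear of b's boundary
        if bx0 < ax0 and ax1 < bx1 and by0 < ay0 and ay1 < by1:
            return "inside"
        return "covered_by"
    return "other"  # meet, overlap, contains, covers: not modeled here

# Hypothetical generalization: "within" stands for covered_by OR inside.
GENERALIZED = {"within": {"covered_by", "inside"}}

def satisfies(a: Rect, b: Rect, relation: str) -> bool:
    """True if a stands in `relation` to b, directly or via a generalization."""
    rel = spatial_relation(a, b)
    return rel == relation or rel in GENERALIZED.get(relation, set())
```

With `image2 = (0, 0, 100, 100)`, an `image1` at `(10, 10, 50, 50)` is `inside` and one at `(0, 10, 50, 50)` is `covered_by`; both satisfy the single generalized query `within`.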

ii. Queries on the presentational temporal structure of PUs

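Example 3.2: “Get multimedia applications containing a slide-show where each slide is synchronized with a piece of audio.”

[Figure: time marks τ1, τ2, τ3, τ4 and the Extended-OMT model of the query: a SlideShow groups sync slide_audio PUs related by T{meets}; each sync slide_audio aggregates an Image and an Audio related by T{equals}.]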

SELECT mm_applications
MATCH (P_UNIT SlideShow)

P_UNIT SlideShow
    TYPE = GROUP_OF (sync slide_audio): T{meets}

P_UNIT sync slide_audio
    TYPE = AGGREGATION_OF (IMAGE, AUDIO): T{equals}
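One way to read the two SMA-L statements above is as checks on concrete play intervals, sketched here in Python. The sketch assumes each slide is given as a pair of `(start, end)` intervals for its image and its audio. AGGREGATION_OF (IMAGE, AUDIO): T{equals} becomes “the two intervals coincide” and GROUP_OF (sync slide_audio): T{meets} becomes “each group ends exactly when the next begins”. This reading and the function names are our assumptions, not the SMA-L evaluation semantics.

```python
def equals(a, b):
    """T{equals}: both intervals start and end together."""
    return a == b

def meets(a, b):
    """T{meets}: interval a ends exactly when interval b starts."""
    return a[1] == b[0]

def is_synced_slideshow(slides):
    """Check a candidate against the Example 3.2 pattern.

    `slides` is a list of (image_interval, audio_interval) pairs, each
    interval a (start, end) tuple in seconds.
    """
    if not slides:
        return False
    # AGGREGATION_OF (IMAGE, AUDIO): T{equals}
    if not all(equals(img, aud) for img, aud in slides):
        return False
    # GROUP_OF (sync slide_audio): T{meets}; since image and audio coincide,
    # the image interval stands for the whole sync slide_audio PU.
    return all(meets(prev[0], nxt[0]) for prev, nxt in zip(slides, slides[1:]))
```

A slide whose audio outlasts its image, or a pause between consecutive slides, makes the candidate fail the query.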

iii. Queries on a combination of conceptual structure, data types and presentational structure of multimedia applications

Example 3.3: The Extended-OMT model and SMA-L statements of the example query given in Section 3.1 are:

[Figure: Extended-OMT model of the query of Example 3.3, with the labels English Grammar, Phrasal Verb, Phr_V_page, Video_mode, Text_mode, sync v-t (Video, Text) and sync t-t, annotated with the relationships T{meets}, T{equals} and S{disjoint}.]