Class 5 notes: Feb. 8, 2006

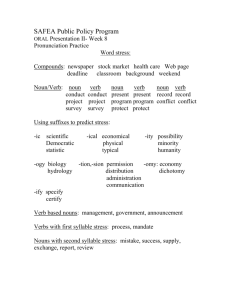

More work on the lexicon

Notice that grammars are not written with 40+ parts of speech; that would not be practical. Lexical categories used in grammars (roughly):

    Pronoun
    ProperNoun
    Noun
    Aux
    Modal
    Verb
    Adjective
    Adverb
    Preposition
    Determiner
    Conjunction
    ’s or ’ (possessive ending)

Everything else is represented using features.

Here is a better format for your lexicons. A lexical entry:

    CAT: the part of speech
    BASE: base or “root” form of the word
    Features: an attribute value list
    LEX: the word itself

Features:

    For nouns: NUMBER = MASS, SG, PL
    For verbs: FORM = BASE PRES PAST PPRT ING 3PS
               COMP = DOBJ IOBJ TO-VP THAT-S FOR-ING FOR-TO
    For pronouns, determiners and adverbs: WH
    For pronouns: REFLexive, SUBjective, ACCusative, POSSessive
    For adjectives and adverbs: BASE, COMParative, SUPerlative

Re-cast your lexicon as shown above, and encode a set of words to be provided on the class web site very soon. (All of these words will appear in the 2000-word sample of the Brown corpus, where you can find at least some of their Brown tags, which can help you.)

Continue discussion of parsing

Function TOP-DOWN-PARSE (list of words, grammar)   // returns a parse tree
    // only returns the first parse found
    Push([Initial S tree, list of words], agenda)   // a state = [tree, unconsumed-words]
    While agenda not empty loop
        current-state = Pop(agenda)
        if successful-parse?(current-state)   // no non-terminal leaves and no more input
            return tree
        if Node-to-expand(current-state) is a terminal (a POS or base form of a word)
            if instance-of(Next-word(current-state), CAT(Node-to-expand …))
                // Next-word must return empty so instance-of fails if no more input
                Push([Attach-word(Node-to-expand . . ), Rest-of-input], agenda)
            else continue loop
        else Push-all(Apply-rules(current-state, grammar), agenda)
    end loop
    return reject

    S → VP | Aux NP VP | NP VP
    NP → Pronoun | Proper-Noun | Det Nominal ADJP | Det Nominal
    Nominal → Noun | Noun Nominal
    VP → Verb | Verb PP | Verb NP | Verb NP PP
    PP → Preposition NP
    ADJP → ADJ | VP

Example 1: Harry disappeared.

Each step shows the agenda (first state on top) and the action taken on the first state.

1. Action: Apply-rules
    [S, Harry disappeared]

2. Action: Apply-rules
    [S VP, Harry disappeared]
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

3. Action: Continue
    [S <VP <verb>>, Harry disappeared]
    [S <VP <verb PP>>, Harry disappeared]
    [S <VP <verb NP>>, Harry disappeared]
    [S <VP <verb NP PP>>, Harry disappeared]
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

4. Action: Continue
    [S <VP <verb PP>>, Harry disappeared]
    [S <VP <verb NP>>, Harry disappeared]
    [S <VP <verb NP PP>>, Harry disappeared]
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

5. Action: Continue
    [S <VP <verb NP>>, Harry disappeared]
    [S <VP <verb NP PP>>, Harry disappeared]
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

6. Action: Continue
    [S <VP <verb NP PP>>, Harry disappeared]
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

7. Action: Continue
    [S aux NP VP, Harry disappeared]
    [S NP VP, Harry disappeared]

8. Action: Apply-rules
    [S NP VP, Harry disappeared]

9. Action: Continue
    [S <NP <Pronoun>> VP, Harry disappeared]
    [S <NP <Proper-noun>> VP, Harry disappeared]
    [S <NP <Det Nominal ADJP>> VP, Harry disappeared]
    [S <NP <Det Nominal>> VP, Harry disappeared]

10. Action: Attach-word
    [S <NP <Proper-noun>> VP, Harry disappeared]
    [S <NP <Det Nominal ADJP>> VP, Harry disappeared]
    [S <NP <Det Nominal>> VP, Harry disappeared]

11. Action: Apply-rules
    [S <NP <Proper-noun Harry>> VP, disappeared]
    [S <NP <Det Nominal ADJP>> VP, Harry disappeared]
    [S <NP <Det Nominal>> VP, Harry disappeared]

12. Action: Attach-word
    [S <NP <Proper-noun Harry>> <VP <verb>>, disappeared]
    [S <NP <Proper-noun Harry>> <VP <verb PP>>, disappeared]
    [S <NP <Proper-noun Harry>> <VP <verb NP>>, disappeared]
    [S <NP <Proper-noun Harry>> <VP <verb NP PP>>, disappeared]
    [S <NP <Det Nominal ADJP>> VP, Harry disappeared]
    [S <NP <Det Nominal>> VP, Harry disappeared]

13. SUCCESS!!
    [S <NP <Proper-noun Harry>> <VP <verb disappeared>>, ]
    [S <NP <Proper-noun Harry>> <VP <verb PP>>, disappeared]
    [S <NP <Proper-noun Harry>> <VP <verb NP>>, disappeared]
    [S <NP <Proper-noun Harry>> <VP <verb NP PP>>, disappeared]
    [S <NP <Det Nominal ADJP>> VP, Harry disappeared]
    [S <NP <Det Nominal>> VP, Harry disappeared]

Illustrates the benefit of Bottom Up Filtering:

Top down parsing with bottom up filtering

A table LC maps each non-terminal to its possible “left corner” terminals (POS) that can begin a constituent of that type.
Apply-rules filters the new search-states it creates to exclude rules where the LC of the first symbol of the RHS is incompatible with the current input.

Example 2: Are many students studying at Northeastern (assume bottom up filtering is being used)

1. Action: Apply-rules S
    [S, Are many students studying at NU]

2. Action: Attach-word aux
    [S aux NP VP, Are many students studying at NU]

3. Action: Apply-rules NP
    [S <aux Are> NP VP, many students studying at NU]

4*. Action: Attach-word Det
    [S <aux Are> <NP <Det Nominal ADJP>> VP, many students studying at NU]
    [S <aux Are> <NP <Det Nominal>> VP, many students studying at NU]
    (ignore for the moment the fact that “many” can be a pronoun)

5. Action: Apply-rules Nominal
    [S <aux Are> <NP <<Det many> Nominal ADJP>> VP, students studying at NU]
    [S <aux Are> <NP <Det Nominal>> VP, many students studying at NU]

6. Action: Attach-word Noun
    [S <aux Are> <NP <<Det many> <Nominal <Noun>> ADJP>> VP, students studying at NU]
    *[S <aux Are> <NP <<Det many> <Nominal <Noun Nominal>> ADJP>> VP, students studying at NU]
    [S <aux Are> <NP <Det Nominal>> VP, many students studying at NU]

7. Action: Apply-rules ADJP *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> ADJP>> VP, studying at NU]

8. Action: Apply-rules VP *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP>>> VP, studying at NU]

9. Action: Attach-word verb *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb PP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP PP>>>> VP, studying at NU]

10. Action: Apply-rules VP (none pass filter) *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb studying>>>> VP, at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb PP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP PP>>>> VP, studying at NU]

11. Action: Attach-word verb *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb PP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP PP>>>> VP, studying at NU]

12. Action: Apply-rules PP *
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <<verb studying> PP>>>> VP, at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP>>>> VP, studying at NU]
    [S <aux Are> <NP <<Det many> <Nominal <Noun students>> <ADJP <VP <verb NP PP>>>> VP, studying at NU]

“at NU” parses as a PP, thus satisfying the expansion of the subject NP as NP → Det Nominal ADJP. However, there is still a VP in the tree, with no more input. Therefore BACKTRACKING will occur, to Step 4, where the rule NP → Det Nominal will be selected. All the work we did since then is discarded. Parsing of “students” as a Nominal, and of “studying at NU” as a VP, must be done all over again (exactly the same way). Only the higher level NP and S structures are different.

Remaining problems with top-down parsing:

    Building and discarding the same structure many times over (when it is parsed in the wrong attachment context)
    Infinite loop if the grammar contains left-recursive rules

The Earley Algorithm (also known as Chart Parsing)

Instead of building a parse tree, create a chart:

    Each NODE represents a place between words.
    Each EDGE represents a word or constituent.

    N0 fish N1 head N2 up N3 the N4 river N5 in N6 spring N7

We add edges to the chart representing possible constituents. Every edge has a LABEL, with a CAT component, whose value is a terminal or non-terminal symbol from the grammar.

The goal is to get one or more S edges going from N0 to N7. This method naturally produces all possible parses. (Unfortunately, frequently several hundred.)
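As a rough illustration of the chart just described, the sketch below stores nodes as integer positions between words and edges as labeled spans, and tests the goal condition (an S edge from N0 to N7). The `Edge` class, the helper names `word_edges` and `has_full_parse`, and the hand-added NP edge are assumptions made for illustration; they are not taken from the class materials or from any particular toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    start: int  # node where the edge begins (N<start>)
    end: int    # node where the edge ends (N<end>)
    cat: str    # CAT component of the edge's label


words = "fish head up the river in spring".split()
# Node i is the place just before words[i]; 7 words give nodes N0..N7.
nodes = list(range(len(words) + 1))


def word_edges(words):
    """One edge per word, connecting adjacent nodes, labeled with the word."""
    return {Edge(i, i + 1, w) for i, w in enumerate(words)}


def has_full_parse(chart, n_words):
    """Goal test: is there an S edge going from N0 to the last node?"""
    return any(e.cat == "S" and e.start == 0 and e.end == n_words for e in chart)


chart = word_edges(words)
chart.add(Edge(3, 5, "NP"))  # hand-added constituent edge for "the river"
print(len(nodes), has_full_parse(chart, len(words)))  # 8 False
```

Using a set of frozen dataclass instances means that adding the same edge twice has no effect, which matches the idea that a constituent found once does not need to be rebuilt.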
The shortest edges are “lexical edges” connecting adjacent nodes, whose labels contain the information from the lexicon for a word. If a word has more than one POS, there will be several lexical edges representing that word, one for each POS.

Example: see what edges can be built.

Edges can be complete (producers) or incomplete (consumers).

There is a queue of complete edges waiting to be added to the chart. Initially it contains all the lexical edges with the leftmost word first.

There is a queue of incomplete edges waiting to be added to the chart. 0-length incomplete edges (loops) are initially created from the grammar rules, and then longer incomplete edges are generated as matching RHS constituents are consumed.

Whenever an edge is added to the chart, it triggers a procedure.

    When a new incomplete edge is added, it represents a partially fulfilled rule. The parser must look to the right for the next constituent from the RHS of the rule.
    When a new complete edge is added, it represents a found constituent. The parser must look to the left to see if anyone is waiting for this constituent.

These two processes are instantiations of the “fundamental rule” of chart parsing.

The control schemes for a chart parser can be top-down, bottom up, depth first or breadth first with only slight changes in the algorithm. It is easy to parameterize this. (Also: right to left can easily replace left to right, but we will not consider that.)

Just as in tree search, depth first always adds new edges to the front of the queue; breadth first adds them to the end.

We will look at top down and bottom up chart parsing using the tutorial from the NLTK.

Natural Language Semantics

What could it mean to say a computer “understands” NL?
FOPC as a representation language:

    truth-functional semantics with sound and complete inference algorithm
    canonical form relative to synonyms and simple syntactic variations
    ambiguity is exposed

Limitations of expressiveness:

    vagueness is ignored
    statements about mental states create major problems

Relation to syntax of simple declaratives:

    main verb defines a “predicate” with some expected arguments
    related NP’s provide values for the arguments
    attempt to define a set of standard semantic roles (thematic roles, case roles) such as agent, patient, instrument, direction
    the Verb’s subcategorization frame(s) map syntactic roles such as subject, direct object, with-object into semantic case roles
    selectional restrictions define what types of objects can fill the semantic roles
    therefore define a “semantic grammar” based on world knowledge taxonomy

    John broke the window.
    The window broke.
    John broke the window with a hammer.
    The window was broken by John (passive transformation)
    Selectional restrictions: A hammer broke the window. (?)

Set-theoretic semantics of FOPC

Use of FOPC for modeling NL meanings:

    1. Individuals and categories
    2. Events
    3. Ambiguity
    4. Propositional attitudes (mental states)

1. Individuals and categories

Old approach (from philosophy): constants & variables refer to individuals; categories and properties are represented as predicates.

All men are mortal; Socrates is a man; therefore Socrates is mortal (modus ponens)

    AX [man(X) → mortal(X)]
    man(socrates)
    mortal(socrates)

    student(jun), wizard(harry), green(ball1) . . .

Lack of expressive power: ball1, ball2: can’t express that they are the same color unless:

    green(ball1) ^ green(ball2) || red(ball1) ^ red(ball2) || …..

Answer: reification, that is, making a concept into an object about which things can be asserted (in FOPC, a constant instead of a predicate).

Now let all categories be constants. Individuals by convention are represented with numeric suffixes (not part of the logical formalism, but for understandability).
    isa(socrates1, man)    isa(sappho2, woman)
    ako(man, human)        ako(woman, human)
    AX (isa(X, human) → mortal(X))

“Inheritance rule”:

    AXYZ [(ako(X, Y) ^ isa(Z, X)) → isa(Z, Y)]

Property values also become objects; can express many more things.

    color(ball1, green)    color(ball2, green)
    AX [color(ball1, X) → color(ball2, X)]
    AXY [color(X, Y) → =(Y, green) || =(Y, red) || ….]

2. Events

A similar evolution occurred.

Old way: John ate a pizza:

    EX [isa(X, pizza) ^ eat(John1, X)]

can’t say he ate it quickly, or that he ate it after noon, etc. Need an object to represent the event of his eating. Reification.

New way:

    EXW [isa(W, eating-event) ^ eater(W, John1) ^ eaten(W, X) ^ isa(X, pizza)]

This representation derives from the use of “frames” or “schemas” in AI, which is a precursor of object oriented programming. eater, eaten, etc. are the arguments of the eat predicate, but the predicate has been reified.

Benefits:

    after(W, 12:00-noon) or on(W, Wednesday)
    maybe we don’t know what John ate: John got sick from eating
    EXWV [isa(W, eating-event) ^ eater(W, John1) ^ isa(V, getsick-event) ^ patient(V, John1) ^ cause(W, V)]

3. Ambiguity

Famous example: Every man loves a woman. This has two interpretations:

    AX [isa(X, man) → EY [isa(Y, woman) ^ loves(X, Y)]]
    (for every man, there is some woman whom he loves)

    EY [isa(Y, woman) ^ AX [isa(X, man) → loves(X, Y)]]
    (there is a woman whom every man loves)

4. Propositional attitudes

believe/know, want, fear, doubt, hope (BDI semantics: belief/desire/intention)

Mary believes that Sam arrived.

No way to express this without also asserting that Sam arrived:

    EVW [isa(V, belief-event) ^ believer(V, Mary1) ^ believed(V, W) ^ isa(W, arrival-event) ^ arriver(W, Sam2)]

We want to reify the arrival event:

    EV [isa(V, belief-event) ^ believer(V, Mary1) ^ believed(V, EW [isa(W, arrival-event) ^ arriver(W, Sam2)])]

Problem: Reification won’t work here: we can’t make a complete proposition into an object and stay within FOPC.

One answer: modal logic. Believe becomes an operator.
Problem: We lose the clear truth-functional semantics and sound & complete inference algorithms. Specifically: the interaction of modal operators with quantifiers, negation and inference rules such as substitution of equals is unclear.

Another famous example:

    John believes the evening star is visible.
    The evening star is the planet Venus
    ?????????????????????????????????????
    John believes the planet Venus is visible

Referentially opaque meaning is true; Referentially transparent meaning is false.

Another example:

    Oedipus wanted to marry Jocasta
    Jocasta was his mother
    Oedipus wanted to marry his mother

Why this only works in restricted domains and environments.
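
Returning to the reified isa/ako representation from the section on individuals and categories: the inheritance rule can be tried out with a small forward-chaining sketch. The tuple encoding of facts and the function names `apply_inheritance` and `mortal` are assumptions made for illustration; the facts themselves are the ones given in the notes.

```python
# Facts from the notes, encoded as (predicate, arg1, arg2) tuples.
facts = {
    ("isa", "socrates1", "man"),
    ("isa", "sappho2", "woman"),
    ("ako", "man", "human"),
    ("ako", "woman", "human"),
}


def apply_inheritance(facts):
    """Close the facts under: (ako(X, Y) ^ isa(Z, X)) -> isa(Z, Y)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        # Snapshot the current facts so the set is not modified while iterating.
        akos = [(x, y) for (p, x, y) in derived if p == "ako"]
        isas = [(z, c) for (p, z, c) in derived if p == "isa"]
        for x, y in akos:
            for z, c in isas:
                if c == x and ("isa", z, y) not in derived:
                    derived.add(("isa", z, y))
                    changed = True
    return derived


def mortal(individual, facts):
    """AX (isa(X, human) -> mortal(X)), checked after inheritance is applied."""
    return ("isa", individual, "human") in apply_inheritance(facts)


print(mortal("socrates1", facts))  # True
```

Because categories are now constants rather than predicates, a single rule covers every category in the taxonomy; with the old predicate encoding, a separate implication would be needed for each pair of categories.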