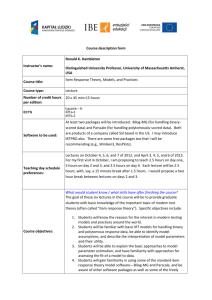

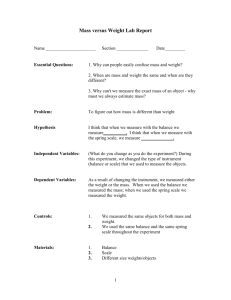

ITEM RESPONSE THEORY (IRT) AS A MEASUREMENT MODEL TO ASSESS STUDENTS’ CHARACTERISTICS IN BROAD SCALE: A STUDY ON MULTIPLE INTELLIGENCE INSTRUMENT

ABSTRACT

The measurement model used so far to assess students’ characteristics is the classical test theory model. Its weakness in analyzing a characteristic instrument is that the results are bound to the sample. Item Response Theory (IRT) addresses this weakness: because it is not sample bound, it can be used in a population or on a broad scale. This study aims to develop an IRT measurement model for a multiple intelligences (MI) instrument so that the instrument can be used on a broader scale. The MI instrument was developed in the following steps: determining the construct and specification of the instrument, writing items, trying out the instrument, and analyzing the items using IRT. The item analysis estimated the item difficulty index, item discrimination, and item model fit. The parameters were estimated using the graded response model (GRM) with the PARSCALE program. The parameter estimates were then used to estimate the ability parameter (θ) and the item information function. The data analysis yielded the difficulty index, the item discrimination, and the model fit of each item. The item difficulty indices were in general at the medium level, and the item discrimination indices were acceptable. Nevertheless, not all items fit the graded response model. In addition, the average item information function was 2.816 at an ability scale score of 1.273. Thus, the MI items that meet the model-fit requirement can be used to measure the characteristics of students’ intelligences on a broad scale.

Objectives or Purposes

Measurement is the assignment of scores or numbers to learning outcomes and student ability.
There are two models of measurement theory: the classical and the modern. Classical theory is often used to assess learning outcomes and to identify students' abilities. However, it has a weakness: the scores do not take the characteristics of the items into account, and they depend on the sample. Item response theory (IRT) addresses this weakness. It holds that the characteristics of an instrument do not depend on the characteristics of a particular group or sample, so the instrument can be used across a population, because the theory estimates parameters for every person and every item. Thus, the modern approach is considered more powerful than the classical one.

Multiple intelligences (MI) is Gardner's theory of intelligence, which assumes that each person has a different form of intelligence. He divides intelligence into 9 forms, namely linguistic, logical-mathematical, musical, kinesthetic, spatial, interpersonal, intrapersonal, naturalistic, and existential. Several experts have developed MI instruments to determine students' intelligence profiles. Because MI instruments can be used by students in various places, IRT analysis is needed so that they can be used more effectively. The purpose of this study was to develop an MI instrument and analyze it with IRT.

Perspective(s) or Theoretical framework

Item response theory (IRT) was popularized by Hambleton and Swaminathan in 1985. IRT models rest on the mathematical concept that the probability of a subject answering an item correctly depends on the subject's ability and on the item's characteristics. A person with a high level of the ability or latent trait will therefore respond at a different point than a person with a low level. With respect to item characteristics, IRT analysis is invariant: the item parameters do not depend on the ability of the sample, and the ability estimates do not depend on the particular items. A subject's score will thus not change whether the subject is placed in a high-ability or a low-ability group.
IRT models can be grouped into two types: dichotomous and polytomous. A dichotomous model is used when the test taker's response is dichotomous data (scores of 1 and 0): a score of 1 if the participant answers correctly, and 0 if the participant answers incorrectly. Scores of 1 and 0 can also represent the participant's response more generally, where 1 indicates a higher response than 0. Many kinds of instruments use dichotomous scoring, including true-false, agree-disagree, appropriate-inappropriate, and yes-no formats. Dichotomous item response theory was developed using three logistic models: the one-parameter, two-parameter, and three-parameter logistic models. The one-parameter logistic model uses a single item parameter, the item difficulty index. It is the well-known Rasch model developed by Georg Rasch, which provides unbiased, efficient, and consistent estimates in person and item calibration. The two-parameter logistic model uses two parameters: the item difficulty index and item discrimination. The three-parameter logistic model adds a guessing (pseudo-guessing) parameter to item difficulty and discrimination.

Several IRT models are used for polytomous scoring, including the graded response model (GRM), the modified graded response model (M-GRM), the partial credit model (PCM), the generalized partial credit model (G-PCM), the rating scale model (RSM), and the nominal response model (NRM) (Embretson & Reise, 2000:95). GRM and M-GRM are based on the two-parameter logistic model. PCM is based on the Rasch (one-parameter) model, and G-PCM generalizes PCM by adding an item discrimination parameter. RSM uses Andrich's location-scale model, and NRM is used for unordered response categories.
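The three dichotomous logistic models described above differ only in which item parameters are free, so they can be illustrated with a single function. The following is a minimal sketch, not code from the study: the function name `irt_probability` and all numeric values are hypothetical, and the optional scaling constant D = 1.7 that some texts include is omitted.

```python
import math

def irt_probability(theta, b, a=1.0, c=0.0):
    """Probability of a score of 1 under the logistic IRT family.

    theta: person ability
    b:     item difficulty
    a:     item discrimination (left at 1 for the 1PL/Rasch model)
    c:     pseudo-guessing lower asymptote (0 for the 1PL and 2PL models)
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical person and item values, chosen only for illustration.
theta, b = 0.5, 0.0
p_1pl = irt_probability(theta, b)                # difficulty only
p_2pl = irt_probability(theta, b, a=1.5)         # adds discrimination
p_3pl = irt_probability(theta, b, a=1.5, c=0.2)  # adds pseudo-guessing
print(round(p_1pl, 3), round(p_2pl, 3), round(p_3pl, 3))
```

When ability equals difficulty, the 1PL and 2PL probabilities are exactly 0.5. Under the 3PL model the same point sits halfway between the guessing floor c and 1.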
One polytomous IRT model that can be used with Likert-scale data is the graded response model (GRM). This model was developed by Samejima in 1969 (Ostini, 2006: 61; van der Linden & Hambleton, 1997: 86). The graded response model is used when responses to an item are scored in ordered categories, with scores that increase sequentially.

Methods, Techniques or Modes of Inquiry

The MI instrument was developed in the following steps: determining the construct and specification of the instrument, writing items, trying out the instrument, and analyzing the items using IRT. The item analysis estimated the item difficulty index, item discrimination, and item model fit. The parameters were estimated using the graded response model (GRM) with the PARSCALE program. The parameter estimates were then used to estimate the ability parameter (θ) and the item information function.

Data sources or evidence

The research data are the responses of 443 students to the multiple intelligences instrument.

Results and/or conclusions/points of view

The IRT model used to analyze this instrument is the graded response model (GRM). The model provides estimates of the item parameters and of item fit. Each item on this instrument has four between-category threshold parameters b, which move from very easy to difficult. Parameter b1 was below -2 for all items, b2 mostly ranged from -2 to 0, b3 ranged from 0 to 2, and b4 was above 2. Overall, the average item difficulty was -0.956. The averages of the category thresholds were b1 = -3.481, b2 = -1.042, b3 = 1.088, and b4 = 2.902. In general, the instrument has a moderate level of item difficulty. The discrimination parameters of all items were above 0.2, so the items of the instrument can distinguish between people with high and low ability. The item fit test against the estimated item characteristic curves (ICC) showed that 37 of the 72 items written fit the model.
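To make the role of the four thresholds concrete, the sketch below computes category probabilities and item information under Samejima's graded response model for a single illustrative item. It is not the PARSCALE analysis used in the study. It reads the reported threshold averages as decimals (b1 = -3.481, b2 = -1.042, b3 = 1.088, b4 = 2.902) and treats them as the thresholds of one hypothetical item. The discrimination value `a_assumed = 1.0` and the function names are assumptions, since per-item estimates are not reported here. The sketch is therefore not expected to reproduce the reported average information of 2.816.

```python
import math

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def cumulative_probs(theta, a, thresholds):
    """P*(X >= k) for each boundary, padded with 1 below and 0 above."""
    return [1.0] + [logistic(a * (theta - b)) for b in thresholds] + [0.0]

def grm_category_probs(theta, a, thresholds):
    """Category probabilities: differences of adjacent cumulative curves.

    thresholds must be strictly increasing; m thresholds give m + 1 categories.
    """
    cum = cumulative_probs(theta, a, thresholds)
    return [cum[k] - cum[k + 1] for k in range(len(thresholds) + 1)]

def grm_item_information(theta, a, thresholds):
    """Item information: sum over categories of (dP_k/dtheta)^2 / P_k."""
    cum = cumulative_probs(theta, a, thresholds)
    # Slope of each cumulative curve: a * P* * (1 - P*); zero for the padding.
    dcum = [a * p * (1.0 - p) for p in cum]
    info = 0.0
    for k in range(len(thresholds) + 1):
        p_k = cum[k] - cum[k + 1]
        dp_k = dcum[k] - dcum[k + 1]
        info += dp_k ** 2 / p_k
    return info

# Reported threshold averages used as one hypothetical item (assumption).
avg_thresholds = [-3.481, -1.042, 1.088, 2.902]
a_assumed = 1.0  # hypothetical discrimination, not a value from the study

probs = grm_category_probs(0.0, a_assumed, avg_thresholds)
print([round(p, 3) for p in probs])
print(round(grm_item_information(1.273, a_assumed, avg_thresholds), 3))
```

Four thresholds imply five ordered response categories, and the five probabilities sum to 1 at every value of θ. With a single threshold the information formula reduces to the familiar two-parameter form a²P(1 − P).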
The information function, which indicates measurement accuracy, showed an average item information of 2.816 at θ = 1.273.

Educational importance of this study for theory, practice, and/or policy

IRT analysis is useful for studying instruments that are used on a wide scale and with a large number of subjects. This follows from the invariance property of IRT analysis, under which the resulting scores do not depend on the characteristics of the particular items. As a result, the analysis does not have to be repeated when the test is used elsewhere. Standardized measurements that are required in various areas, such as the UN tests, TPA tests, and psychological tests, are therefore well suited to IRT analysis.

Connection to the themes of the congress

This study is relevant to the following themes: Educational standards, equity and quality in education; Assessment and evaluation in standards-based education.