
advertisement

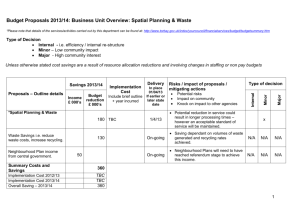

Rethinking Spatial Thinking: an empirical case study and some implications for GI-based learning

Thomas Jekel, Maria-Lena Pernkopf & Daniel Hölbling

1. Introduction

Early GI-based learning content focused on the extraction of data and its use in learning processes. In the last few years two major advances have emerged. First, the volume ‘Learning to Think Spatially’ (Downs et al. 2006) has provided an in-depth theory of spatial thinking. Although criticised for its purely absolute space approach, this book was the first to propose a clear, competence-oriented agenda for research and development in GI-based learning environments. Second, a revived interest in empirical studies has sprung up from these theoretical foundations to justify or disprove some basic assumptions on learning to think spatially. Research activities were directed to the development of concepts of space in education (see Huynh 2008) as well as the development of minimal GIS for school use (Marsh et al. 2007). An increased interest in empirical studies can also be traced via recent HERODOT-supported conferences, namely Learning with GI (Jekel, Koller & Donert (eds.) 2008).

Still, most of the contributions are satisfied with spatial learning and spatial thinking rather than everyday problem solving. This paper looks into the role of spatial preconceptions for a problem of spatial citizenship. Participative spatial planning is of course rooted in a wide range of spatialities. We try to exemplify the need for a wider conception of space in citizenship education as well as in spatial problem solving. Based on an extensive research design for a specific learning environment, three basic assumptions of ‘Learning to Think Spatially’ are challenged, namely:

1) Concepts of (absolute) space are a prerequisite for spatial problem solving.
2) Through the use of GI(S) in learning processes, the development of finer concepts of space is fostered.
3) The knowledge of tools for the representation of space is essential for problem solving.

We think that empirical data concerning these assumptions have implications for the development of further GI-based learning environments. This paper provides preliminary results on learning processes evaluated within a participative planning and learning environment. Besides the questions above, it focuses on the motivation factor of GI-based learning environments, which was discussed earlier by Jekel, Pree & Kraxberger (2007) on the basis of the purely quantitative indicator of participation. Here, the results of a new cohort of students have been complemented with the results of a motivation test (QAM) administered twice, looking at the development of motivation over the course of the treatment.

2. Treatment and research design

The empirical research design followed a classical pre-test – treatment – post-test method. The treatment consisted of a free and open-to-vision collaborative planning environment for the Old Town of Salzburg. Learners were asked to pin down their visions of central Salzburg within a collaborative learning environment in a contest of planning strategies. Collaboration was based on .kml files and was handled via Blackboard, an instructional design similar to the learning environments proposed by Jekel (2007) and Jekel, Pree & Kraxberger (2007). Each learner had to provide at least two contributions in the discussion forum and at least one step of visualisation on a digital globe. In this learning environment Google Earth (free version) was used. The LMS Blackboard was used to store all contributions to group discussion as well as to visualisation. It is therefore possible to individualize results and link individual contributions to each learner and his/her pre-test/post-test results for analytical purposes.
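To make the exchange format more concrete: a contribution saved from a digital globe is, at its simplest, a KML file holding a single placemark. The following minimal Python sketch builds such a file with the standard library only. The function name `make_placemark_kml`, the sample text and the approximate coordinates for central Salzburg are illustrative assumptions, not material from the study.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"  # namespace of KML 2.2
ET.register_namespace("", KML_NS)          # serialize without a prefix


def make_placemark_kml(name, description, lon, lat):
    """Return a KML document (as text) containing one point placemark.

    Hypothetical helper for illustration; KML expects coordinates in
    the order longitude,latitude,altitude.
    """
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    placemark = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
    ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
    ET.SubElement(placemark, f"{{{KML_NS}}}description").text = description
    point = ET.SubElement(placemark, f"{{{KML_NS}}}Point")
    ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = f"{lon},{lat},0"
    return ET.tostring(kml, encoding="unicode")


# Invented example contribution; coordinates are only approximate.
kml_text = make_placemark_kml(
    name="Proposed pedestrian zone",
    description="Vision for the Old Town (example text)",
    lon=13.045,
    lat=47.798,
)
print(kml_text)
```

In the course itself such files would more plausibly have been drawn and saved directly in Google Earth than scripted; the sketch only shows how little structure a shareable contribution needs.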
Pre-test and post-test included the same three groups of items: a) an IQ cube test to obtain a measure of absolute concepts of space; b) a mental map projection of a path in the city known to all; and c) a questionnaire of actual motivation (QAM). These were given to the students before and after the treatment with exactly the same ‘problems’.

Fig. 1: Overall research design

The first evaluation of the learning environment under this research design took place in the winter term 2007/08 with students distributed across ten groups. Groups were formed based on the ranking of the individual IQ cube test results, which measure abilities in the dimension of absolute space: students with similar cube test results were grouped together, giving a ranking of groups with similar abilities. Alongside these, a further group with random IQ cube test results was formed for organizational reasons. Students were not aware of the logic of group formation. Of all students, 36 took part in both pre-test and post-test. None of them had previously used digital globes to actively visualize, communicate and collaborate. Participants were students at the beginning of their first term at university. The resulting data are therefore expected to be reasonably comparable to those of high school students.

3. Results

This contribution only presents preliminary results from the overall research project. We therefore concentrate on results for the following dimensions: 1) absolute space and problem solving capacity, 2) learning effects for subjective space / topography learning, and 3) motivation and participation.

3.1 Absolute conceptions of space and problem solving capacity

No direct correlation could be established between the absolute conception of space and the capacity to solve problems. The quality of city planning was evaluated by both the participating students and the teacher.
Groups with the highest spatial abilities in terms of absolute space were not rated significantly higher than others (see Table 1).

| Group | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 (c) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Cube test average (correct answers) | 1.4 | 4.2 | 5.2 | 6.25 | 7.2 | 8.25 | 9.75 | 11.4 | 13.8 | n.a. |
| Points (student assessment) | 14 | 11 | 10 | 25 | 19 | 6 | 12 | 4 | 14 | 8 |

Table 1: Absolute conceptions of space (cube test) and quality of planning results as assessed by students

This hints at a need to diversify our concepts of spatial problem solving; the treatment clearly included a socially constructed, political version of the concept of space. If the aim of GI-based learning is ‘spatial citizenship’ rather than spatial thinking, our didactics should therefore pay attention to the fact that pushing the individual conceptions of absolute space may not contribute to problem solving abilities.

3.2 Learning effects for subjective space / topography learning

To determine the effects of the treatment on topography learning, mental maps were evaluated before and after the treatment. Two different measures were applied: the first takes into account the position of three prominent points of interest; the second evaluates line error. For the error in points, no significant learning effect could be determined (see Fig. 2). A calculated mean vector between the actual and the estimated points shows that the effects are minimal. The results at both t1 and t2 show a systematic distortion in nearly the same direction, while the length of the deviation remains the same. This systematic distortion is consistent with Lloyd & Heivly (1987), who worked on systematic clockwise distortions of mental maps in American cities. The distortion was hardly affected by the treatment.

Fig. 2: Learning effects: Topography learning based on points (n = 36 at both t1 and t2)

Line error was tested via a newly developed method to determine error, the evenly distributed sample points method (EDSP).
This is based on comparing pairs of sample points which are evenly distributed on the ‘true’ and the estimated line (see Hölbling et al. 2008). A mean vector for each sample point was calculated, indicating the mean directional deviation of the estimations as well as the average distance. Figure 3 shows these mean vectors for both points in time (t1, t2). The start points of the vectors lie on the reference line, whereas the endpoints on the estimated line indicate the deviation. To make t1 and t2 easier to compare, these endpoints have been linked. As for the learning effects, we observe that on the basis of line error a small but significant learning effect takes place when working on digital globes. Here, this can be considered a positive side effect of the learning environment on city planning.

Fig. 3: Learning effects: Topography learning based on line error. Mean deviation at t1 (pre-test) and t2 (post-test) (n = 36 each)

Combining the results from topography learning, we observe a slight improvement in the perception of space as shown by the evaluation of mental maps. Topography learning is discussed exhaustively elsewhere, and this slight improvement is probably an insufficient argument for the inclusion of GIS at school level.

| | Sum (all vectors) | Mean (per sketch) | Std. deviation (per sketch) | Median (per sketch) |
| --- | --- | --- | --- | --- |
| EDSP (t1) | 123,219.02 | 171.14 | 80.14 | 154.90 |
| EDSP (t2) | 104,750.71 | 145.49 | 74.01 | 129.93 |
| Change (m) | -18,468.31 | -25.65 | -6.12 | -24.97 |

Table 2: Learning effects: Topography learning. General outline of the EDSP method applied to 36 participants at t1 and t2

3.3 Motivation and participation

Motivation and participation, however, have been the great advantage of the GI-based learning environment. As in earlier studies (Jekel, Pree & Kraxberger 2007), participation in purely quantitative terms has been much higher than anticipated by the teacher, reaching close to 300% of the minimum participation.
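Returning briefly to the line-error measure of section 3.2: the description of EDSP given there (pairs of sample points evenly distributed on the reference line and on the estimated line) can be read as the following minimal Python sketch. It is one possible reading of that description, not the published implementation (see Hölbling et al. 2008 for the actual method). The function names, the resampling by arc length and the default of 20 sample points are assumptions made for illustration.

```python
import math


def resample(line, k):
    """Return k points evenly spaced by arc length along a polyline.

    `line` is a list of (x, y) tuples with at least two vertices; k >= 2.
    """
    # Cumulative length at each vertex.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(line, line[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]

    points = []
    seg = 0  # index of the segment currently containing the target
    for i in range(k):
        target = total * i / (k - 1)
        while seg < len(line) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = line[seg], line[seg + 1]
        points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return points


def edsp_vectors(reference, estimate, k=20):
    """Deviation vectors from reference to estimate at k paired samples.

    k=20 is an assumed default, not a value taken from the paper.
    """
    ref_pts = resample(reference, k)
    est_pts = resample(estimate, k)
    return [(ex - rx, ey - ry) for (rx, ry), (ex, ey) in zip(ref_pts, est_pts)]


def mean_deviation(vectors):
    """Average length of the deviation vectors of one sketch."""
    return sum(math.hypot(dx, dy) for dx, dy in vectors) / len(vectors)


# Toy example: the estimated line runs parallel to the reference line,
# shifted by 10 units.
reference_line = [(0, 0), (100, 0)]
estimated_line = [(0, 10), (100, 10)]
vectors = edsp_vectors(reference_line, estimated_line, k=5)
print(vectors)
print(mean_deviation(vectors))
```

For the two parallel lines in the toy example, every deviation vector is (0, 10) and the mean deviation is 10 units. Averaging the vectors at each sample index over all sketches would then give per-sample mean vectors of the kind described for Fig. 3.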
One of the main positives of the open learning environment has been the inclusion of subjective knowledge in the problem-solving process, thus widening the base of hypotheses for problem solving. More than 50% of all contributions in the discussion board referred to knowledge and data from outside the learning environment. The questionnaire of actual motivation has also established that motivation was reasonably high right from the beginning of the treatment and grew from pre-test to post-test. This hints at a positive overall atmosphere within the course, but also at increasing mastery of techniques and growing self-esteem. It may also be an indicator of students liking open and self-directed learning environments. This is supported by the verbal evaluation of the learning environment. Participants here reported high motivation after overcoming initial technical difficulties, as well as high usability for the classroom.

4. Conclusion: The problem with spatial problems

For future applications of similar learning environments we argue that a much clearer picture of what we consider to be a spatial problem is needed. Most authentic problems such as city planning, nature conservation or migration and integration are not easily transformed into a simple absolute space problem. The idea of Learning to Think Spatially (Downs et al. 2006) sidesteps this issue by defining problems that are, of course, to be solved with an absolute conception of space in hand. However, the empirical results shown above demonstrate that a clear conception of absolute space did not contribute to solving political ‘spatial’ problems that are rooted in subjective spatialities. For these types of problems we suggest more input from modern theory of geovisualization (e.g. MacEachren et al. 2004). Here it is argued that geospatial visualization may act as a catalyst for socio-spatial problem solving through its support of hypothesis development.
We do have some support for this idea from data of the evaluated learning environment, and this is also true for more subtle personal feedback given by students after the treatment.

It may also be questioned whether problems in absolute space should be considered central to school curricula. If we argue for spatial citizenship, participation in planning processes and communication of spatial visions come to the fore (see for example Strobl 2008, Jekel 2007). If we keep in mind the idea of the geospatial citizen (Strobl 2008), we need further research and development in at least the following three areas: a) the competences required by the geospatial citizen; b) the role of collaborative geovisualization in group decision making based on support of hypothesis development; and c) the inclusion of subjective spatialities in GI-based learning environments, as these are the starting point for learning processes linked to everyday problem solving.

References

Downs, R. et al. (2006), Learning to Think Spatially. GIS as a Support System in the K-12 Curriculum. Washington DC.

Hölbling, D., Pernkopf, M.-L., Jekel, T. & Albrecht, F. (2008), An Exploratory Comparison of Metrics for Line Error Measurement. In: Car, A., Griesebner, G. & Strobl, J., GI-Crossroads@GI-Forum. Heidelberg: Wichmann, pp. 134 - 139.

Huynh, N.-T. (2008), Measuring and Developing Spatial Thinking Skills in Students. In: Jekel, T., Koller, A. & Donert, K. (2008), Learning with Geoinformation III. Heidelberg: Wichmann, pp. 116 - 125.

Jekel, T., Koller, A. & Donert, K. (eds.) (2008), Learning with Geoinformation III. Heidelberg: Wichmann.

Jekel, T. (2007), "What you all want is GIS 2.0!" - Collaborative GI based learning environments for spatial planning and education. In: Car, A., Griesebner, G. & Strobl, J., GI-Crossroads@GI-Forum. Heidelberg: Wichmann, pp. 84 - 89.

Jekel, T., Pree, J. & Kraxberger, V. (2007), Kollaborative Lernumgebungen mit digitalen Globen - eine explorative Evaluation.
In: Jekel/Koller/Strobl, Lernen mit Geoinformation II. Heidelberg: Wichmann, pp. 116 - 126.

Lloyd, R. & Heivly, C. (1987), Systematic Distortions in Urban Cognitive Maps. In: Annals of the Association of American Geographers, 77 (2), pp. 191 - 207.

MacEachren, A. M., Gahegan, M., Pike, W., Brewer, I., Cai, G. & Lengerich, E. (2004), Geovisualization for Knowledge Construction and Decision Support. In: IEEE Computer Graphics and Applications, pp. 13 - 17.

Marsh, M., Golledge, R. & Battersby, S. E. (2007), Geospatial Concept Understanding and Recognition in G6-College Students: A Preliminary Argument for Minimal GIS. In: Annals of the Association of American Geographers, 97 (4), pp. 696 - 712.

Strobl, J. (2008, in press), Digital Earth Brainware. A Framework for Education and Qualification Requirements.