To appear in Common Sense, Reasoning and Rationality

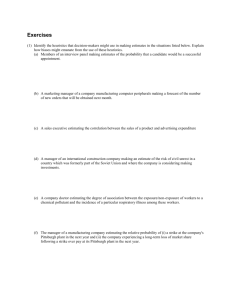
