advertisement

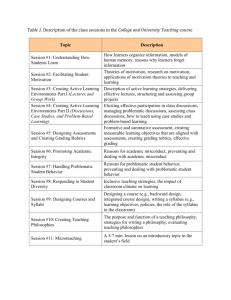

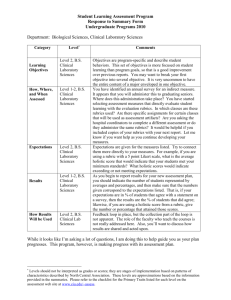

An Analysis of Online Discussions Assessment

Wei-Ying Hsiao, Ed.D.
Associate Professor
University of Alaska Anchorage
USA
whsiao@uaa.alaska.edu

Abstract: Online discussion has been used as a crucial assessment in online courses in higher education. The purpose of this study was to investigate the elements/criteria of grading rubrics for assessing online discussion in both synchronous and asynchronous courses across different discipline areas in higher education. This study analyzed 69 online discussion grading rubrics. Across the 69 grading rubrics, four elements were used most frequently in assessing online discussions: content/focus on topic (68%), stylistics/writing (67%), reflection/connections (64%), and frequency (61%). Three elements were used often: timeliness (46%), references or resources citations (46%), and initial posting (42%). The other nine elements used in assessing online discussions were interaction/response (38%), new ideas/uniqueness (36%), critical thinking (35%), clarity (28%), tone/positive/encouragement (23%), quality (16%), length (15%), organization (9%), and integrity (1%).

Introduction

The demand for eLearning (electronic learning), defined as “learning and teaching online through network technologies” (Hrastinski, 2008, p. 51), has been growing in higher education (Wagner, Hassanein, & Head, 2008). Online delivery is widely used in higher education for a number of reasons, including increasing demand by learners and institutional budget cuts (New Media Consortium, 2007). However, the assessment of online learners has become a concern (Speck, 2002). Online asynchronous or synchronous discussions are clearly an essential component of online learning (Liang & Alderman, 2007). Online discussions allow students to practice active thinking and provide opportunities to interact with others (Salmon, 2005). However, assessing online discussions can be challenging.

When assessing online discussions, simply counting the frequency of postings by online learners does not produce a good-quality assessment of learning (Meyer, 2004). Wishart and Guy (2009) indicated that many course designers and instructors were aware of the value of online discussions; however, keeping the online discussion “lively and informative is a challenge” (p. 130). Levenburg and Major (2000) discussed the importance of assessing online discussions in order to recognize students’ time commitment and encourage students’ efforts. Assessment criteria should guide students in their learning and should be used to evaluate students’ learning outcomes (Celentin, 2007). Therefore, it is important to find ways to assess online discussions. The purpose of this study was to analyze the elements/criteria of grading rubrics for assessing online discussions.

Method

Participants

A total of 69 grading rubrics across different discipline areas were analyzed in this research. Sixty-seven rubrics were from the USA and two were from the UK. The grading rubrics were selected from both asynchronous and synchronous online courses, at both the graduate and undergraduate levels, at different universities.

Data Collection Procedures

All grading rubrics were selected from online resources. The researcher searched for grading rubrics from online courses and also drew some rubrics from research papers. Sixty-nine rubrics were selected for this research. The rubrics came from different discipline areas such as Education, Health Education, Engineering, Science, Psychology, and so on. Sixty-five percent of the grading rubrics were from graduate courses and thirty-five percent were from undergraduate courses.

Data Analysis

Microsoft Excel (Office 2010) was used to analyze the data. Descriptive statistics were used to analyze how frequently each grading element was used.

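The study carried out this tally in Excel. Purely as an illustration, the same kind of descriptive frequency count could be sketched in Python as shown here. The function name `element_frequencies`, the short element labels, and the four toy rubrics are hypothetical and are not data from the study; the sketch assumes each rubric has already been coded as the set of elements it contains.

```python
from collections import Counter


def element_frequencies(rubrics):
    """Return {element: percent of rubrics that include it}, as whole percents.

    `rubrics` is a list in which each item is the collection of grading
    elements coded for one rubric (hypothetical coding, for illustration).
    """
    total = len(rubrics)
    if total == 0:
        return {}
    counts = Counter()
    for elements in rubrics:
        # Use a set so an element is counted at most once per rubric.
        counts.update(set(elements))
    # Most frequently used elements first.
    return {name: round(100 * n / total) for name, n in counts.most_common()}


# Hypothetical toy data: four coded rubrics, NOT the 69 rubrics in the study.
toy_rubrics = [
    {"content", "frequency", "timeliness"},
    {"content", "stylistics"},
    {"content", "frequency"},
    {"stylistics", "frequency"},
]

percentages = element_frequencies(toy_rubrics)
for name, pct in percentages.items():
    print(f"{name}: {pct}%")
```

With real data, each of the 69 rubrics would contribute one set of coded elements, and the resulting percentages would correspond to the kind of figures reported in the Results.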
The researcher examined the elements (criteria) of all 69 grading rubrics and consolidated them into a final set of 16 elements.

Results

A total of sixteen grading elements (criteria) were found in these online discussion grading rubrics. These grading elements were content/focus on topic (68%), reflection/connections (64%), critical thinking (35%), length (15%), new ideas/uniqueness (36%), timeliness (46%), stylistics/writing (67%), resources (46%), interaction/response (38%), organization (9%), frequency (61%), initial posting (42%), clarity (28%), tone/positive words (23%), quality (16%), and integrity (1%). See Figure 1 for these 16 elements and their percentages. See Table 1 for the 16 elements and a summary of indicators.

Figure 1. The Sixteen Elements of the Online Discussions Grading Rubrics

Table 1. Sixteen elements of the grading rubric and indicators

| Elements | % | Indicators |
| --- | --- | --- |
| Content/Focus on Topic | 68% | understanding of the course materials and the underlying concept being discussed |
| Reflection/Connections | 64% | reflective statements, reflect on professional experiences |
| Critical Thinking | 35% | consistently analytical, thoughtful, perceptive, & provide new insights related to topic addressed, refutes bias |
| Length of Posting | 15% | at least one paragraph, at least 250 words |
| New Ideas/Uniqueness | 36% | a new way of thinking about the topic, depth and detail |
| Timeliness | 46% | meet deadlines, before due date, complete all required postings |
| Stylistics/Writing | 67% | free of grammatical, spelling, & punctuation errors, facilitate communication, well written |
| Resources | 46% | connections to other resources, cite research papers, using references to support comments |
| Interaction/Response | 38% | interacting with a variety of participants |
| Organization | 9% | information is organized |
| Frequency/Follow-up postings | 61% | 5-6 postings/week; 4-5 times/week; analysis of others’ posts & extending meaningful discussion |
| Initial Posting | 42% | posts well developed; fully address all aspects of the task |
| Clarity | 28% | clear, concise comments, formatted, easy to read, clear logic, beliefs & thoughts |
| Tone/Positive/Manners | 23% | following online protocols, positive attitude, encouraging, appropriate in nature |
| Quality | 16% | excellent understanding of the questions through well-reasoned and thoughtful reflections |
| Integrity | 1% | learners’ own idea, posting by learners |

References

Celentin (2007). Online education: Analysis of interaction and knowledge building patterns among foreign language teachers. Journal of Distance Education, 21(3), 39-58.

Hrastinski, S. (2008). A study of asynchronous and synchronous e-learning methods discovered that each supports different purposes. Educause Quarterly, 31(4), 51-55.

Levenburg, N., & Major, H. (2000). Motivating the Online Learner: The Effect of Frequency of Online Postings and Time Spent Online on Achievement of Learning Goals and Objectives. Proceedings of the International Online Conference on Teaching Online in Higher Education, 13-14 November 2000. Indiana University-Purdue University: Fort Wayne.

Liang, X., & Alderman, K. (2007). Asynchronous Discussions and Assessment in Online Learning. Journal of Research on Technology in Education, 39(3), 309-328.

Meyer, K. (2004). Evaluating Online Discussions: Four Different Frames of Analysis. JALN, 8(2), 101-114.

National Center for Educational Statistics (2008, December). Distance education at degree-granting postsecondary institutions: 2006-07. Retrieved Oct 12, 2011, from http://www.nmc.org/pdf/2007_Horizon_Report.pdf

Salmon, G. (2005). E-tivities. Great Britain: Routledge Falmer.

Speck, B. W. (2002). Learning-teaching-assessment paradigms and the online classroom. New Directions for Teaching and Learning, 91, 5-18.

Wagner, N., Hassanein, K., & Head, M. (2008). Who is responsible for E-Learning Success in Higher Education? A Stakeholders’ Analysis. Educational Technology & Society, 11(3), 26-36.

Wishart, C., & Guy, R. (2009). Analyzing Responses, Moves, and Roles in Online Discussions. Interdisciplinary Journal of E-Learning and Learning Objects, 5, 129-144.