Online and Computer-based Assessment Tools

Presented by Jennifer Fager

For

University of Wisconsin-Superior

Enhancement Day

1/19/2011

Advice for Assessing Student Learning in an Online Environment

Multiple Choice and Short Answer Questions

Not always the best technique in either online or ground formats

Lend themselves to cheating more readily online

Whether used on ground or online, questions must be well written

The “test” has been overused and overrelied upon; it works well only when the instructor is skilled at writing good test items

Advice continued

Use multiple forms of assessment

Provides corroborating evidence of student achievement

More personalized assessments reduce the likelihood of plagiarism

“Term paper” vs. application paper using personal examples

General research paper vs. a focus on selected topics

Use assessments that are more “authentic” in format

Consider problems of the field

Engage in “conversations” online

Advice continued

Security options

Lockdown browser

Students can’t go to other websites during the test

NOTE: Most students now have multiple devices, so they can turn to their iPhones, BlackBerrys, laptops, iPads, etc. to research the “answers”

Advice continued

Set test time limits

Even if students get help, the time limit restricts how much

Can penalize slower readers/processors

What do you want in terms of meaningful, thoughtful responses?

Advice continued

Assume any online test is, by definition, open book

And perhaps open notes and open neighbors

Don’t try to make it otherwise

Advice continued

Quiz/test software products are available

Can generate multiple versions of tests

Each student can receive a “different” test

http://www.fau.edu/irm/instructional/respondus.php

Have students explain their answers

E.g., 25% of students explain their ‘A’ answers, 25% their ‘B’ answers, etc.

Advice continued
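The idea of giving each student a “different” test can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Respondus or any other quiz product works internally: it assumes a hypothetical question bank of dictionaries with `prompt`, `choices`, and `answer` keys, and it seeds a random generator with a hypothetical course salt plus the student ID, so each student gets a reproducible draw of questions and a shuffled order of choices.

```python
import random

def make_test_version(question_bank, student_id, n_questions, salt="course-salt"):
    """Draw and shuffle a per-student test version.

    question_bank: list of dicts with hypothetical keys "prompt", "choices", "answer".
    salt: hypothetical course-specific string so versions differ between courses.
    """
    # A string seed makes the draw reproducible: the same student always gets the same version.
    rng = random.Random(f"{salt}:{student_id}")
    # Pick a subset of questions without replacement, in random order.
    selected = rng.sample(question_bank, n_questions)
    version = []
    for q in selected:
        choices = list(q["choices"])  # copy so the bank itself is not reordered
        rng.shuffle(choices)
        version.append({"prompt": q["prompt"], "choices": choices, "answer": q["answer"]})
    return version

if __name__ == "__main__":
    # Hypothetical 10-question bank; each answer is stored as the choice text.
    bank = [{"prompt": f"Question {i}", "choices": ["w", "x", "y", "z"], "answer": "x"}
            for i in range(10)]
    for sid in ("student-1", "student-2"):
        print(sid, [q["prompt"] for q in make_test_version(bank, sid, 3)])
```

Storing the correct answer as the choice text rather than as a letter position keeps the answer key valid after the choices are shuffled.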

Other security ideas

“Thumb” (fingerprint) identification

Webcams

Face-to-face testing at a testing center

Online Assessment Resources

Commercial Assessment Data Management Tools

E.g., TracDat, WeaveOnline, Chalk & Wire

Curriculum Design Tools

E.g., WIDS: links outcomes to Performance Assessment Tasks

Survey Instruments

SurveyMonkey

Zoomerang

Portfolios

Course Management Systems Quiz and Analysis Functions

Blackboard and others

Resources Continued

Google Docs

Jing

Reflective Instruments

Leadership

Personality Style

Teamwork

MERLOT (has a content template builder)

Clickers

Adobe Connect with polling features

Lockdown browsers for online testing

Fundamental Questions

What evidence do you have that students achieve your stated learning outcomes?

How have you articulated these outcomes to students and adjunct faculty?

In what ways do you analyze and use evidence of student learning?

Assessment Process

The same process is used for online programs and courses as for ground (traditional):

Needs Assessment

Needs of the profession

Needs of the learners

Articulate your expectations

Measure achievement of expectations

Collect and analyze data

Use evidence to improve learning

Assess effectiveness of improvement

Measuring Online Learning

Direct Methods

Mostly the same as ground

Can use any student work products that can be saved in an electronic form

Some challenges exist with lab work, oral performances, etc.

Indirect Methods

Again, same as ground

Survey response rates are notoriously low

Advantages of Online Assessment

Artifact collection can be automated through the course management software

Can work for ground as well

Assignments and rubrics can be standardized and mapped to specific competencies/outcomes

Again, can work for ground as well

New Opportunities with Online

Assess the quality of discussions and group work

Particularly effective with asynchronous discussions

Assess individual learning in more depth

Student advising can be more robust

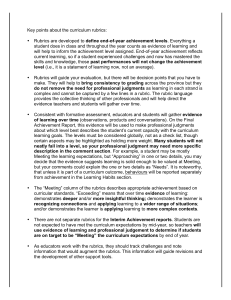

Use of standardized rubrics can result in greater consistency in grading

Particularly effective when combined with periodic instructor norming and use of anchor samples

Discussion & Group Work

Faculty can isolate and organize contributions to a threaded discussion and give feedback on how students can improve

Can archive individual contributions and group discussions

Formative and summative

Course, program, and institutional levels

Create a record of instructor effectiveness

Useful for annual reviews and tenure decisions

Individual Student Learning

Individual learning/achievements can be tracked across the educational experience

The electronic format allows for wide dissemination of collected data

Current software packages provide various levels of data collection & dissemination

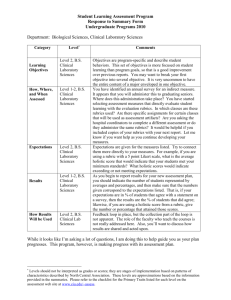

Rubrics

Clear articulation of expectations that are linked to specific course & program outcomes

A means of ensuring that online and ground courses are equally rigorous (alignment)

Increases consistency in grading across sections, courses, programs, even colleges

Holistic vs. Analytic
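To make the holistic/analytic distinction concrete: a holistic rubric assigns one overall score, while an analytic rubric scores each criterion separately. The sketch below assumes a hypothetical analytic rubric whose criteria are each tagged with an outcome ID, so a single set of ratings yields both a total grade and per-outcome subtotals that can be reported against course and program outcomes. The criterion names, outcome IDs, and point values are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str
    outcome: str      # hypothetical outcome ID this criterion is mapped to
    max_points: int

def score_analytic(criteria, ratings):
    """Score one artifact against an analytic rubric.

    ratings: dict mapping criterion name -> points awarded.
    Returns (total points, {outcome ID: (points earned, points possible)}).
    """
    total = 0
    by_outcome = {}
    for c in criteria:
        pts = ratings[c.name]
        if not 0 <= pts <= c.max_points:
            raise ValueError(f"{c.name}: {pts} is outside 0..{c.max_points}")
        total += pts
        earned, possible = by_outcome.get(c.outcome, (0, 0))
        by_outcome[c.outcome] = (earned + pts, possible + c.max_points)
    return total, by_outcome

if __name__ == "__main__":
    # Hypothetical three-criterion rubric mapped to two outcomes.
    rubric = [Criterion("Thesis", "O1", 4),
              Criterion("Evidence", "O2", 4),
              Criterion("Mechanics", "O2", 2)]
    print(score_analytic(rubric, {"Thesis": 3, "Evidence": 4, "Mechanics": 1}))
```

A holistic rubric would collapse this to a single rating per artifact, which is faster to apply but cannot be broken down by outcome afterward.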

Example of a Successful Online Assessment Strategy

Develop course/program maps which articulate learning outcomes and link them to specific courses and course assignments

Collect and archive artifacts through the course management software

Post rubrics through course management software for student and faculty use
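A course/program map is essentially a table linking assignments to the outcomes they assess. The sketch below inverts a hypothetical map (the course names, assignment names, and outcome IDs are invented for illustration) to list where each outcome is assessed and to flag any program outcome that no assignment covers. It is a plain-Python illustration, not a feature of any particular course management system.

```python
def build_outcome_coverage(assignment_map):
    """Invert {(course, assignment): set of outcome IDs} into
    {outcome ID: [(course, assignment), ...]}."""
    coverage = {}
    for (course, assignment), outcomes in assignment_map.items():
        for outcome in sorted(outcomes):
            coverage.setdefault(outcome, []).append((course, assignment))
    return coverage

def uncovered_outcomes(program_outcomes, coverage):
    """Return program outcomes that no mapped assignment assesses."""
    return sorted(o for o in program_outcomes if o not in coverage)

if __name__ == "__main__":
    # Hypothetical program map: each assignment lists the outcomes it assesses.
    program_map = {
        ("COURSE 101", "Reflection paper"): {"O1", "O2"},
        ("COURSE 210", "Case study"): {"O2"},
    }
    coverage = build_outcome_coverage(program_map)
    print(coverage)
    print("Not yet assessed:", uncovered_outcomes(["O1", "O2", "O3"], coverage))
```

Running a check like this whenever assignments change helps keep the map and the archived artifacts aligned with the stated outcomes.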

Ensure Validity and Reliability

Tracking inter-rater reliability helps achieve consistency in grading

Post rubrics and anchor samples for 24/7 faculty access

Full-time/core faculty should formulate competencies, achievement standards & interpret results
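The slide does not name a particular inter-rater reliability statistic. One common choice, assumed here, for two raters scoring the same artifacts on the same rubric is Cohen's kappa, which corrects raw percent agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the chance agreement implied by each rater's score distribution. The ratings below are hypothetical.

```python
from collections import Counter

def percent_agreement(rater_a, rater_b):
    """Fraction of artifacts on which both raters gave the same score."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same artifacts."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)
    # Chance agreement: probability both raters independently pick the same score.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical score for everything; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)

if __name__ == "__main__":
    # Hypothetical rubric scores (1-3) from two faculty members on six artifacts.
    a = [3, 2, 3, 1, 2, 3]
    b = [3, 2, 2, 1, 2, 3]
    print(f"{percent_agreement(a, b):.2f} {cohens_kappa(a, b):.2f}")  # prints "0.83 0.74"
```

Kappa is lower than raw agreement here because some matches would be expected even if the raters scored at random from their own score distributions; tracking it across norming sessions shows whether anchor samples are actually tightening consistency.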

Sample Assessments

Review the Video Gaming Assignment included at the end of this packet.

Determine how this assignment could be adapted for online learning

General Education Outcomes

Example

The ability and inclination to think and make connections across academic disciplines

How would you assess this outcome in ground or online courses?

Websites and Resources

http://fod.msu.edu/oir/TeachWithTech/onlinecourses.asp

http://www.uwstout.edu/soe/profdev/assess.cfm

http://www.fgcu.edu/onlinedesign/index.html

http://www.pvc.maricopa.edu/~lsche/resources/onlineteaching.htm

http://sloanconsortium.org/

Questions and Concerns

What issues have you encountered when assessing online learning?

What are your concerns regarding the use of online assessment techniques?

What policies and/or procedures need to be in place to reduce or alleviate these concerns?

Questions Still Burning?

What else do you need to know?

The Bottom Line?

Assessment in online learning can be the same as assessment on ground: the artifacts and tools can be the same even if the medium is different!