Psychometric problems in the context of genetic association

advertisement

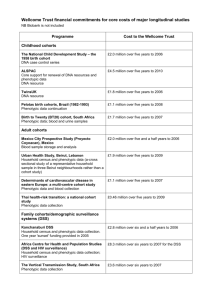

Phenotyping in GWAS 1 SUPPLEMENTAL MATERIAL Power in GWAS: Lifting the curse of the clinical cut-off Sophie van der Sluis1 Danielle Posthuma1 Michel G. Nivard2 Matthijs Verhage1 Conor V. Dolan2,3 1 Complex Trait Genetics, Dept. Functional Genomics & Dept. Clinical Genetics, Center for Neurogenomics and Cognitive Research (CNCR), FALW-VUA, Neuroscience Campus Amsterdam, VU University medical center (VUmc). Email: s.vander.sluis@vu.nl 2 Biological Psychology, VU University Amsterdam. 3 Department of Psychology, FMG, University of Amsterdam, Roeterstraat 15, 1018 WB Amsterdam, The Netherlands Phenotyping in GWAS 2 The simulation Conditional on the gene-effects, we assumed a normally distributed (N~(0,1)) underlying latent trait (i.e., the trait of interest that we wish to measure and for which we wish to identify the genetic background), and we randomly generated latent trait scores for Nsubj=5000 unrelated subjects. Ten causal variants were then simulated with minor allele frequency (MAF) of .2 (genotype groups coded 1/2/3 in the phenotype-creating simulation, with 1 corresponding to the homozygous minor allele genotype), which explained .2 to 2% of the variance in the latent trait score. Adding the gene-effects to the standard normal conditional latent trait score resulted in an approximately normally distributed unconditional latent trait score with a mean of ~1 and a variance of ~1.09. We then simulated 30 extreme items (Figure S1a) and 30 items that covered the entire phenotypic range (Figure S1b). The 30 extreme items mainly distinguish between cases and controls, i.e., between people with and without an extreme phenotype, while the 30 items that cover the entire phenotypic range also distinguish between subjects whose phenotype falls well within the normal range. Figure S1 S1a: 30 extreme items were simulated, which distinguish between cases (subjects scoring on the high end of the phenotypic distribution) and controls. S1b. 30 items were simulated that distinguish between subjects with widely varying phenotypic scores, ranging from very low (left on the scale), to average (middle) to very high (right of the scale, i.e., cases) phenotypic scores. Phenotyping in GWAS 3 The reliability of these items, defined in the context of a latent factor model, ranged from 0.01 to 0.25 (corresponding to factor loadings ranging from .1 to .5, respectively). In terms of the internal consistency (Cronbach’s alpha), this resulted in a 30-item instrument (either consisting of 30 extreme items, or 30 items covering the entire phenotypic range) with a reliability of .84, which is realistic for depression questionnaires (Beck, Steer & Garbin, 1988; Radloff, 1991, 1977) and scales that measure behavioural problems like ADHD (e.g. for Conners’ behavioural rating scales: Sparrow, 2010). We simulated 3-point scale items (e.g. “0: does not apply”, “1: applies somewhat”, “2: does certainly apply”), with endorsement rates of the three answer categories depending on what is called “the difficulty of the item” in the context of Item response Modelling (IRT). In short, easy items (located at the left of the phenotypic scale, Figure S1b) are endorsed by almost everybody (i.e., most subjects score 2 on these items), while difficult items (or extreme items: on the right hand of the phenotypic scale, Figure S1a) are endorsed by only a small percentage of the general population (i.e., most subjects score 0 on these items). So for the 30 extreme items, 95-98% of the subjects in a normal population would score 0, 1.5-4 % would score 1, and only .5-1% of subjects in a general population would score 2 on these items. For the 30 items covering the entire phenotypic range, the endorsement rates of the three categories varied widely. Figure S2 plots the endorsement rates for the 30 extreme (Figure S2a) and the 30 items covering the entire phenotypic range (Figure S2b). In fact, for every individual, continuous item scores were created first, which were subsequently categorized. The score on item j for subject i was calculated as yij = λj*θi + εij , where λj denotes the factor loading of item j, θi the latent trait score of subject i, and εij is the individual-specific residual of the item (i.e., the part of the item score that is not related to the subject’s latent trait score). The resulting continuous (normally distributed) item scores were subsequently categorized into 3 scores (0, 1, 2) depending on the endorsement rates illustrated in Figure S2. Phenotyping in GWAS 4 Figure S2 Endorsement rates of all the items, which are sorted by “difficulty”, with easy items on the left, and difficult items on the right hand of the scale. In green the endorsement rate of score 0, in red the endorsement rate of score 1, and in blue the endorsement rate of score 2 for each of the 30 extreme items (S2a) and each of the 30 items covering the entire phenotypic range (S2b). For these latter items, it can be seen that the easiest items (left of the scale) are endorsed by almost everyone (score 2 has highest endorsement rate: statement applies to almost everyone), while the more difficult items (right of the scale) are endorsed by almost no-one (score 0 has the highest endorsement rate: these statements apply to only a few people). The categorized item scores of the 30 extreme items and the 30 items covering the entire phenotypic scale, were subsequently summed to get the individuals’ overall test scores: sum_skew (based on the 30 extreme items) and sum_tot (based on the 30 items across the scale). Using a 2-parameter IRT model, we also calculated the individual subjects’ expected factor scores, either using their scores on the 30 categorized extreme items, or the 30 categorized items covering the entire phenotypic range. In addition, the sum score based on the 30 extreme items (sum_skew) was dichotomized in two ways: we either used a “clinical” cut-off criterion such that the 12% subjects with the highest sum_skew scores were coded as 1, and the remainder of the sample as 0, or we coded the highest 50% scoring subjects as 1, and the lowest 50% as 0. We also categorized the sum_skew score into 3 categories, each covering approximately Phenotyping in GWAS 5 33% of the sample. Finally, the sum_skew scores were subjected to a square-root or a normal scores transformation. Note that these latter two transformations are often recommended before conducting an analysis like regression (which assumes normally distributed dependent variables) when the dependent variable of interest (in our case the sum_skew score) is not normally distributed. The distributions of all 10 discussed phenotypic operationalisations are illustrated in Figure S3. Phenotyping in GWAS 6 Figure S3 Based on Nsubj=5000 subjects, the distributions of: A: the simulated latent trait score θ, B: sum of 30 items covering the entire phenotypic scale, C: latent factor scores based on the 30 items covering the entire scale, D: sum of 30 extreme items “sum_skew”, E: square root transformation of sum_skew, F: Normal scores transformation of sum_skew, G: latent factor scores based on the 30 extreme items, H: dichotomized sum_skew (50%-50%), I: dichotomized sum_skew (88%12%), J: categorized sum_skew (33%-33%-33%). Phenotyping in GWAS 7 All 10 phenotypic operationalizations were subsequently regressed on the 10 causal variants. In these regression analyses, the homozygous major allele genotype was coded 0 (i.e., carrying 0 minor alleles), the heterozygous genotype was coded 1, and the homozygous minor allele genotype was coded 2. We also included 1 genetic variant which was not related to the phenotype so that we could examine the type-I error (false positive) rate. This entire data simulation + analysis was repeated Nsim=2000 times, and for each simulated genetic variant we counted the number of times that it was picked up in the regression given a genome-wide criterion α of 1e-07 (i.e., the number of times out of Nsim=2000 that the observed p-value in the regression was < 1e-07). The results (in percentages) are shown in Table S1, and plotted in Figure S4. (Note that Figure S4 is similar to the published figure in the manuscript, except that a) it also includes the results for other operationalisations of the phenotype and b) the numbering of the models is different). In short, the results show that the skewed sum score (2) performs worse than the latent trait score (1) and the normally distributed sum score (7), and the power decreases dramatically when the skewed sum score is categorized (3-5), especially when a clinical cutoff criterion is used (3). Clearly, when the trait is polygenic (rather than Mendelian), and cases and controls differ quantitatively, as stipulated in the common-trait common-variant hypothesis underlying GWAS, the test statistic associated with the correlation between the genotype and the case-control phenotype, is generally smaller compared to the test statistics associated with the other phenotypic measures. This is mainly due to the larger standard error of the estimate. Consequently, the power to detect the causal locus drops dramatically. Phenotyping in GWAS 8 Figure S4 Power plot for simulations using factor loadings ranging from .1-.5, with on the x-axis the effect sizes of the 10 simulated causal genetic variants with MAF=.2 each, and on the y-axis the power to detect these causal variants for the following phenotypic operationalisations: 1: latent trait, 2: sum_skew, 3: 88-12 dichotomization of sum_skew, 4: 50-50 dichotomization of sum_skew, 5: categorization into 3 equal groups of sum_skew (33-33-33), 6: factor score based on the 30 extreme, categorized items, 7: sum based on 30 categorized items covering the entire phenotypic scale, 8: factor score based on the 30 categorized items covering the entire phenotypic scale, 9: square root transformation of sum_skew, 0: normal score transformation of sum_skew . Results of all 10 scenarios are based on Nsubj=5000 subjects and Nsim=2000 simulations. Phenotyping in GWAS 9 Table S1: Power in percentages (for MAF=.2 and factor loadings ranging from .1-.5) Phenotypic operationalisations Causal variant effect size (%variance explained) 0.2% 0.4% 0.6% 0.8% 1% 1.2% 1.4% 1.6% 1.8% 2% Check: 0 % Latent trait (1) 0.75 10.45 34.20 64.30 85.90 96.10 98.65 99.70 100.00 100.00 5.65 Sum extreme items (2) 0.10 1.15 3.40 11.65 24.80 42.00 56.30 72.20 84.40 90.85 5.50 Dich (88% - 12%) (3) 0.00 0.00 0.05 0.10 0.35 1.05 1.85 3.50 5.90 9.15 5.40 Dich (50%-50%) (4) 0.00 0.15 1.00 3.65 6.20 16.10 25.35 36.00 49.25 59.65 5.25 Categorized (33%/33%33%) (5) 0.00 0.65 2.50 7.20 17.00 31.20 44.90 56.80 71.90 79.85 5.30 Factor score extreme items (6) 0.05 1.15 5.50 17.00 33.55 53.55 68.20 82.40 90.80 95.60 5.55 Sqrt transformation (9) 0.10 1.05 4.15 13.35 27.35 46.05 58.95 74.60 86.30 92.20 5.50 Normal score transformation (0) 0.10 0.95 4.35 14.00 28.20 48.05 61.40 75.85 87.20 93.10 5.65 Sum items covering entire phenotypic range (7) 0.15 2.60 12.55 31.20 53.90 75.50 86.20 93.95 97.95 99.25 4.95 Factor score items covering entire phenotypic range(8) 0.20 3.30 14.20 35.35 57.60 79.70 89.35 95.40 98.80 99.45 5.35 Note: Power in percentages for simulations using factor loadings ranging from .1-.5: the percentage of Nsim=2000 simulations that picked up the causal variants with MAF=.2 and with effect size varying from .2 to 2% (i.e., variance explained in the latent trait score). Between brackets the numbers corresponding to Figure S4. The last column shows the false positive rate given α=.05 (none of the false positive rates deviate significantly from the expected 5%) 1. Nsubj=5000 is all 10 scenarios. 1 Given a nominal α of .05, the percentage of false positive hits is expected to be close to 5%. Note that the standard error of the ML-estimator of the p-value in the simulations is calculated as sqrt(p*(1 - p)/N), where p denotes the percentage of significant tests observed in the simulations (nominal p-value) given a chosen α, and N the total number of simulations. The 95% confidence interval for a correct nominal p-value of .05 (given α = .05) and N = 2000 thus corresponds to CI-95 = (p - 1.96 * SE, p + 1.96 * SE), and thus equals .04–.06. This implies that, given α = .05, any observed nominal p-value outside the .04–.06 range should be considered incorrect. Phenotyping in GWAS 10 To assure generalizability of the simulation results to scenarios in which the MAF of the causal variants are not .2, we repeated the study with exactly the same simulation setting except changing minor allele frequencies to MAF=.05 (Figure S5 and Table S2) or to MAF=.5 (Figure S6 and Table S3). The main results remain the same: dichotomizing a skewed sum score using a clinical cut-off is very deleterious for the statistical power to detect causal variants. The difference in power between a skewed sum score and a sum score based on items covering the entire trait range is less dramatic when MAF is really low (.05 versus .2 and .5). In addition, we repeated the simulations with MAF=.2, but changed the settings for the factor loadings. In the original simulation, the factor loadings ranged between .1 and .5, which corresponds to inter-item correlations ranging between .01 and .25, which is rather low but results in a 30-item instrument with a realistic reliability of .84. We added two simulations: a) factor loadings ranging between .3 and .6 (inter-item correlations between .09 and .36, and reliability of the 30-item instrument of .91: Table S4 and Figure S7), and b) factor loadings ranging between .3 and .9 (inter-item correlations between .09 and .81, and reliability of the 30-item instrument of .98: Table S5 and Figure S8). Again, the main results remained the same: the power to detect a trait-associated SNP diminishes dramatically if categorization, and especially a clinical cut-off criterion, is used to dichotomize a skewed but continuous trait-measure before analysis. In addition, the power of the sum score based on items covering the entire trait range remains considerably higher compared to the sum score based on extreme items only. Phenotyping in GWAS 11 The power of future GWAS studies could thus improve considerably if researchers would use phenotypic instruments that resolve individual difference in cases as well as controls, i.e., across the entire trait range. Practically, there are at least two ways in which current instruments could be adjusted. 1) One could complement the current extreme items with easy items and items of medium difficulty. This is, however, easier said than done. For example, suppose an attention deficit /hyperactivity scale like the Child Behavior Check List (CBCL, Achenbach, 1991) including items like “Often fails to pay close attention or makes careless mistakes”, “Often does not seem to listen when spoken to directly”, and “Often has difficulty organizing tasks and activities”. One could add items stating almost the opposite, e.g., “Pays meticulous attention” and “Listens carefully when spoken to”, but items of medium difficulty level are difficult to compose. 2) Rather than adding easy/medium items, one could adjust the items’ answer categories. For instance, answer categories for the attention deficit/hyperactivity items mentioned above are: “this item describes a particular child not at all / just a little / quite a bit / very much”. Swanson et al. (2006) suggested to change the reference of the scale and to rather ask: “compared to other children, does this child display the following behaviour far below average / below average / slightly below average / average / slightly above average / above average / far above average”. These authors developed the SWAN (Strengths and Weaknesses of ADHD symptoms and Normal behaviour scale), an instrument very much like the CBCL but due to the different rating scale, overall scores on the SWAN are approximately normally distributed, while the original CBCL scores are very skewed (see e.g. Polderman et al., 2007 for an illustration). That is, in asking teachers/parents to compare a child’s behaviour to the average of other children’s behaviour, and offering not only the option to display certain behaviour much more often, but also to display it much less often than average, overall scores on the SWAN are normally distributed in a general population sample. For many traits/instruments, changing the answer option and frame of reference, rather than the actual items, is probably easier to implement in practice. Also, changing the rating scale is directly applicable to many types of instruments. For instance, the Phenotyping in GWAS 12 answer option of depression items like “I felt sad”, “I felt lonely”, “I felt my life was a failure”, and “I felt people disliked me” are usually something like “Rarely / sometimes / occasionally / often”. Changing the answer options to “compared to other people, did you feel […] far less often/ less often/ slightly less often/ about as often/ slightly more often/ more often/ far more often?” is easy to implement. This resulting scale will still allow distinction between cases and controls, but also distinguishes between controls: some people do have feelings of loneliness or sadness but only as often as anyone, while other really hardly ever experience loneliness or sadness. A drawback of this rescaling, however, could be that participants are not only asked to evaluate their own behaviour, but to also compare their behaviour to that of others. This, of course, requires some insight / knowledge about “average behaviour” and what is considered “average” may differ from person to person. However, answer option like “rarely” and “occasionally” are also open to subjective evaluation. Self-report instruments often suffer from this “frame-of-reference” dependency. Important to note is that simple rescaling will not always result in normally distributed scores. For instance, schizophrenia symptoms like odd believes, unusual perceptual experiences, delusions, hallucinations, apathy, and catatonic behaviour are simply quite extreme and may not be very suited to evaluation on a “gradual” scale. Whatever strategy one chooses to obtain more normally distributed test scores, the newly developed instruments of course require careful validation, standardization, and especially: close comparison to the original instruments for which valuable information is already available. Phenotyping in GWAS 13 Figure S5 Power plot for simulations using factor loadings ranging from .1-.5, with on the x-axis the effect sizes of the 10 simulated causal genetic variants with MAF=.05 each, and on the y-axis the power to detect these causal variants for the following phenotypic operationalisations: 1: latent trait, 2: sum_skew, 3: 88-12 dichotomization of sum_skew, 4: 50-50 dichotomization of sum_skew, 5: categorization into 3 equal groups of sum_skew (33-33-33), 6: factor score based on the 30 extreme, categorized items, 7: sum based on 30 categorized items covering the entire phenotypic scale, 8: factor score based on the 30 categorized items covering the entire phenotypic scale, 9: square root transformation of sum_skew, 0: normal score transformation of sum_skew . Results of all 10 scenarios are based on Nsubj=5000 subjects and Nsim=2000 simulations. Phenotyping in GWAS 14 Figure S6 Power plot for simulations using factor loadings ranging from .1-.5, with on the x-axis the effect sizes of the 10 simulated causal genetic variants with MAF=.5 each, and on the y-axis the power to detect these causal variants for the following phenotypic operationalisations: 1: latent trait, 2: sum_skew, 3: 88-12 dichotomization of sum_skew, 4: 50-50 dichotomization of sum_skew, 5: categorization into 3 equal groups of sum_skew (33-33-33), 6: factor score based on the 30 extreme, categorized items, 7: sum based on 30 categorized items covering the entire phenotypic scale, 8: factor score based on the 30 categorized items covering the entire phenotypic scale, 9: square root transformation of sum_skew, 0: normal score transformation of sum_skew . Results of all 10 scenarios are based on Nsubj=5000 subjects and Nsim=2000 simulations. Phenotyping in GWAS 15 Figure S7 Power plot for simulations using factor loadings ranging from .3-.6,with on the x-axis the effect sizes of the 10 simulated causal genetic variants with MAF=.2 each, and on the y-axis the power to detect these causal variants for the following phenotypic operationalisations: 1: latent trait, 2: sum_skew, 3: 88-12 dichotomization of sum_skew, 4: 50-50 dichotomization of sum_skew, 5: categorization into 3 equal groups of sum_skew (33-33-33), 6: factor score based on the 30 extreme, categorized items, 7: sum based on 30 categorized items covering the entire phenotypic scale, 8: factor score based on the 30 categorized items covering the entire phenotypic scale, 9: square root transformation of sum_skew, 0: normal score transformation of sum_skew . Results of all 10 scenarios are based on Nsubj=5000 subjects and Nsim=2000 simulations. Phenotyping in GWAS 16 Figure S8: .3-.9 Power plot for simulations using factor loadings ranging from .3-.9, with on the x-axis the effect sizes of the 10 simulated causal genetic variants with MAF=.2 each, and on the y-axis the power to detect these causal variants for the following phenotypic operationalisations: 1: latent trait, 2: sum_skew, 3: 88-12 dichotomization of sum_skew, 4: 50-50 dichotomization of sum_skew, 5: categorization into 3 equal groups of sum_skew (33-33-33), 6: factor score based on the 30 extreme, categorized items, 7: sum based on 30 categorized items covering the entire phenotypic scale, 8: factor score based on the 30 categorized items covering the entire phenotypic scale, 9: square root transformation of sum_skew, 0: normal score transformation of sum_skew . Results of all 10 scenarios are based on Nsubj=5000 subjects and Nsim=2000 simulations. Phenotyping in GWAS 17 Table S2: Power in percentages (for MAF=.05 and factor loadings ranging from .1-.5) Phenotypic operationalisations Causal variant effect size (%variance explained) 0.2% 0.4% 0.6% 0.8% 1% 1.2% 1.4% 1.6% 1.8% 2% Latent trait (1) 0.80 9.45 36.80 64.25 85.90 94.65 98.85 99.40 99.95 100.00 Sum extreme items (2) 0.00 1.75 9.90 23.80 46.55 65.25 81.90 91.05 96.60 99.00 Dich (88% - 12%) (3) 0.00 0.00 0.00 0.00 0.00 0.05 0.10 0.10 0.30 0.85 Dich (50%-50%) (4) 0.00 0.45 2.15 6.60 14.40 24.35 36.00 52.45 69.50 78.70 Categorized (33%/33%33%) (5) 0.00 0.70 4.50 12.30 26.55 43.15 58.00 71.65 84.85 91.65 Factor score extreme items (6) 0.00 2.50 14.40 31.70 55.20 73.30 88.20 93.95 97.85 99.50 Sqrt transformation (9) 0.10 2.40 13.25 31.05 53.00 71.50 86.55 93.00 97.90 99.30 Normal score transformation (0) 0.05 2.30 12.85 30.60 52.70 70.60 86.65 92.85 97.75 99.35 Sum items covering entire phenotypic range (7) 0.20 2.60 14.45 31.25 53.55 73.70 87.25 93.30 97.75 99.60 Factor score items covering entire phenotypic range(8) 0.30 3.15 15.80 34.75 57.25 77.00 89.90 94.80 98.75 99.70 Note: Power in percentages: the percentage of Nsim=2000 simulations that picked up the causal variants with MAF=.05 and with effect size varying from .2 to 2% (i.e., variance explained in the latent trait score). Between brackets the numbers corresponding to Figure S5. Nsubj=5000 is all 10 scenarios. Phenotyping in GWAS 18 Table S3: Power in percentages (for MAF=.5 and factor loadings ranging from .1-.5) Phenotypic operationalisations Causal variant effect size (%variance explained) 0.2% 0.4% 0.6% 0.8% 1% 1.2% 1.4% 1.6% 1.8% 2% Latent trait (1) 0.90 9.05 34.80 65.60 85.90 95.10 98.75 99.70 100.00 99.95 Sum extreme items (2) 0.00 0.30 1.85 5.05 13.00 22.95 36.15 47.60 60.90 71.60 Dich (88% - 12%) (3) 0.00 0.00 0.00 0.55 0.60 1.55 2.75 3.85 7.20 10.90 Dich (50%-50%) (4) 0.00 0.15 0.50 1.30 3.35 6.55 10.95 16.95 25.35 31.85 Categorized (33%/33%33%) (5) 0.00 0.30 1.10 2.45 6.75 12.20 19.40 28.95 40.55 50.90 Factor score extreme items (6) 0.00 0.50 3.15 7.85 19.10 32.60 46.00 60.75 73.25 83.10 Sqrt transformation (9) 0.00 0.30 1.95 4.60 11.65 21.25 33.25 44.10 58.30 69.60 Normal score transformation (0) 0.00 0.45 2.05 5.20 13.00 24.10 36.75 48.30 62.20 72.60 Sum items covering entire phenotypic range (7) 0.25 2.50 11.80 29.75 52.05 70.70 85.60 91.75 97.10 98.95 Factor score items covering entire phenotypic range(8) 0.25 2.75 13.80 33.35 58.00 75.50 89.00 94.60 98.35 99.35 Note: Power in percentages: the percentage of Nsim=2000 simulations that picked up the causal variants with MAF=.5 and with effect size varying from .2 to 2% (i.e., variance explained in the latent trait score). Between brackets the numbers corresponding to Figure S6. Nsubj=5000 is all 10 scenarios. Phenotyping in GWAS 19 Table S4: Power in percentages (for MAF=.2 and factor loadings ranging from .3-.6) Phenotypic operationalisations Causal variant effect size (%variance explained) 0.2% 0.4% 0.6% 0.8% 1% 1.2% 1.4% 1.6% 1.8% 2% Latent trait (1) 0.45 9.80 35.65 65.50 85.80 95.65 99.30 99.70 100.00 100.00 Sum extreme items (2) 0.05 1.60 7.95 20.20 41.60 61.45 76.15 87.25 94.85 97.55 Dich (88% - 12%) (3) 0.00 0.00 0.30 1.05 1.00 3.75 5.15 10.40 15.65 22.15 Dich (50%-50%) (4) 0.05 0.45 2.65 8.75 16.85 31.15 46.15 61.35 74.90 83.05 Categorized (33%/33%33%) (5) 0.05 1.05 7.50 17.10 34.35 53.25 71.30 81.75 90.20 94.95 Factor score extreme items (6) 0.10 2.95 13.45 30.10 53.95 74.90 86.85 94.60 98.00 99.20 Sqrt transformation (9) 0.10 3.00 12.00 28.75 51.80 71.65 85.05 93.10 97.50 99.20 Normal score transformation (0) 0.10 3.10 12.35 29.05 52.05 72.10 85.60 93.25 97.80 99.25 Sum items covering entire phenotypic range (7) 0.20 5.25 22.40 45.60 69.85 86.55 94.30 98.15 99.70 99.80 Factor score items covering entire phenotypic range(8) 0.15 4.90 22.55 47.40 71.45 87.35 95.10 98.30 99.55 99.95 Note: Power in percentages: the percentage of Nsim=2000 simulations that picked up the causal variants with MAF=.5 and with effect size varying from .2 to 2% (i.e., variance explained in the latent trait score). Between brackets the numbers corresponding to Figure S6. Nsubj=5000 is all 10 scenarios. Phenotyping in GWAS 20 Table S5: Power in percentages (for MAF=.2 and factor loadings ranging from .3-.9) Phenotypic operationalisations Causal variant effect size (%variance explained) 0.2% 0.4% 0.6% 0.8% 1% 1.2% 1.4% 1.6% 1.8% 2% Latent trait (1) 0.55 10.10 32.50 64.95 85.70 96.70 98.65 99.80 100.00 100.00 Sum extreme items (2) 0.10 2.20 8.70 25.20 49.10 68.45 83.15 92.25 96.45 98.50 Dich (88% - 12%) (3) 0.00 0.05 0.30 0.95 2.30 6.25 11.00 16.60 23.30 31.85 Dich (50%-50%) (4) 0.10 0.65 4.55 14.90 28.90 49.55 64.05 77.50 87.30 93.35 Categorized (33%/33%33%) (5) 0.20 2.35 9.25 25.25 46.80 68.95 81.60 90.90 95.70 98.60 Factor score extreme items (6) 0.30 4.35 17.75 41.95 67.50 86.65 94.60 98.00 99.30 99.85 Sqrt transformation (9) 0.25 4.20 16.40 39.30 63.35 84.10 93.40 97.15 99.15 99.65 Normal score transformation (0) 0.25 4.20 16.40 39.75 64.20 83.85 93.20 97.30 99.20 99.70 Sum items covering entire phenotypic range (7) 0.60 7.80 26.90 56.05 79.95 93.10 97.80 99.60 100.00 99.95 Factor score items covering entire phenotypic range(8) 0.50 7.80 27.45 56.95 81.15 94.15 97.85 99.60 100.00 99.95 Note: Power in percentages: the percentage of Nsim=2000 simulations that picked up the causal variants with MAF=.5 and with effect size varying from .2 to 2% (i.e., variance explained in the latent trait score). Between brackets the numbers corresponding to Figure S6. Nsubj=5000 is all 10 scenarios. Phenotyping in GWAS 21 References: Achenbach, T.M. (1991). Manual for the Child Behavior Checklist/4–18. Burlington, VT: University of Vermont, Department of Psychiatry. Beck, A.T., Steer, R.A., & Garbin, M.G. (1988). Psychometric properties of the Beck Depression Inventory: Twenty-five years of evaluation. Clinical Psychology Review, 8, 77-100. Polderman, T.J.C., Derks, E.M., Hudziak, J.J., Verhulst, F.C., Posthuma, D., & Boomsma, D.I. (2007). Across the continuum of attention skills: a twin study of the SWAN ADHD rating scale. Journal of Child Psychology and Psychiatry, 48(11), 1080-1087. Radloff, L.S. (1977). The CES-D Scale : A Self-Report Depression Scale for Research in the General population. Applied Psychological Measurement, 1, 385-401. Radloff, L.S. (1991). The use of the Center for Epidemiologic Studies Depression Scale in adolecscents and young adults. Journal of Youth and Adolescence, 20(2), 149-166. Sparrow, E.P. (2010). Essentials of Conners’ Behavior Assessments. John Wiley & Sons, Inc., Hoboken, New Jersey. Swanson, J.M., Schuck, S., Mann, M., Carlson, C., Hartman, K., Sergeant, J.A., Clevinger, W.,Wasdell, M., & McCleary, R. (2006). Categorical and dimensional definitions and evaluations of symptoms of ADHD: The SNAP and SWAN Rating Scales. Retrieved May 2006 from http://www.ADHD.net. Acknowledgement Sophie van der Sluis (VENI-451-08-025), Danielle Posthuma (VIDI-016-065-318), and Michel G. Nivard (912-100-20) are financially supported by the Netherlands Scientific Organization (Nederlandse Organisatie voor Wetenschappelijk Onderzoek, gebied Maatschappij-en Gedragswetenschappen: NWO/MaGW). Michel G. Nivard is also supported by the Neuroscience Campus Amsterdam (NCA). Simulations were carried out on the Genetic Cluster Computer which is financially supported by an NWO Medium Investment grant (480-05-003), by the VU University, Amsterdam, The Netherlands, and by the Dutch Brain Foundation. The R-simulation code is available from the following website: http://ctglab.nl/people/sophie_van_der_sluis