Notes

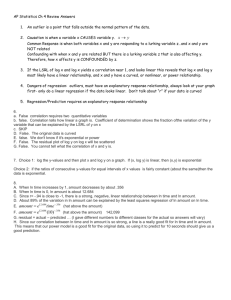

REGRESSION ANALYSIS

Primary business application -- FORECASTING.

For forecasting to be possible, the future must, in some way, be like the past. Forecasting methods seek to identify relationships in the past and use them to make predictions. The assumption (and the hope) is that the past relationships will persist.

VARIABLES IN REGRESSION

- Dependent variable -- the one being predicted.
- Independent variable(s) -- the one(s) used to make the prediction.

TYPES OF REGRESSION

Based on the type of regression line:

- Linear -- straight regression line: y' = a + b(x)
  - a = vertical or y-intercept
  - b = slope
- Non-linear -- curved regression line
  - Exponential -- y' = a(b^x)
    - a = vertical or y-intercept
    - b = growth factor (1 + growth rate)
  - Logarithmic
  - Polynomial
  - Trigonometric

Over a restricted range (relevant range) a curve can be approximated with a straight line.

Based on the number of independent variables:

- Simple -- one independent (predictor) variable to predict the dependent variable.
- Multiple -- two or more independent (predictor) variables to predict the dependent variable: y' = a + bx₁ + cx₂ + dx₃ + . . .

Based on the nature of the independent variable:

- Causal regression
- Time-series -- time is used as a substitute for the true causative factors.

CORRELATION

r = sample coefficient of correlation, or correlation coefficient.
r² = sample coefficient of determination.

- No correlation: r = 0; r² = 0
- Positive correlation: 0 < r ≤ 1; 0 < r² ≤ 1
- Negative correlation: -1 ≤ r < 0; 0 < r² ≤ 1

Note that the coefficient of determination (r²) is never negative.

ρ (rho) and ρ² are the population parameters corresponding to r and r².

THE LINEAR REGRESSION LINE: y' = a + b(x)

- a: sample intercept -- the vertical or y-intercept of the regression line -- the predicted value of y when x = 0.
- b: sample slope -- slope of the regression line -- the change in the y-prediction for a one-unit change in x.

α and β are the population parameters corresponding to a and b.
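As a minimal sketch of how a, b, r, and r² can be computed for the linear regression line above, the following Python applies the standard least-squares formulas to a small made-up data set. The function name `fit_line` and the data values are illustrative assumptions, not part of these notes.

```python
from math import sqrt

def fit_line(xs, ys):
    """Return (a, b, r) for the least-squares line y' = a + b(x).

    Hypothetical helper for illustration only.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sums of squared deviations and cross-products about the means
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx              # sample slope
    a = y_bar - b * x_bar      # sample intercept
    r = sxy / sqrt(sxx * syy)  # sample correlation coefficient
    return a, b, r

# Made-up data, chosen only to keep the arithmetic easy to check by hand
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

a, b, r = fit_line(xs, ys)
print(f"a = {a:.4f}")       # a = 2.2000
print(f"b = {b:.4f}")       # b = 0.6000
print(f"r = {r:.4f}")       # r = 0.7746
print(f"r2 = {r * r:.4f}")  # r2 = 0.6000
```

With these values the prediction equation is y' = 2.2 + 0.6(x), and r² = 0.6 is positive, as it must be for any non-zero correlation.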
TYPES OF VARIATION IN REGRESSION

- INITIAL or ORIGINAL VARIATION -- the sum of the squared deviations between the data points and the mean of the data y-values: Σ(y - ȳ)²
- RESIDUAL VARIATION -- the sum of the squared deviations between the data points and the regression line (measured vertically): Σ(y - y')²
- REMOVED or EXPLAINED VARIATION -- initial variation minus residual variation.

Sample coefficient of non-determination (k²) is the ratio of residual variation to initial variation.
Sample coefficient of determination (r²) is the ratio of removed variation to initial variation (r² = 1 - k²).

The LEAST-SQUARES regression line is the line that produces the minimum residual variation.

UNCERTAINTY IN REGRESSION

Uncertainties exist with respect to the intercept and the slope. Confidence intervals may be computed for each.

Uncertainties also exist with respect to the predictions of y. Confidence intervals may be computed for y-predictions.

Predictions have the least uncertainty when x is near the average x in the data. A time-series forecast is made for a future time value, which lies beyond the data and therefore away from the average x. Time-series forecasting, therefore, has inherent uncertainties not necessarily present in causal models.

Time series -- two reasons for using less rather than more data:

- The future is more likely to be like the recent past than the more distant past.
- The x being forecast is closer to the average x when less data are used.

HYPOTHESIS TESTING IN REGRESSION

H₀: no correlation (relationship) between x and y; ρ = 0; ρ² = 0; β = 0

One-sided or two-sided alternate hypotheses are possible.

Reject H₀ if t_c ≥ t_t (small n) or z_c ≥ z_t (large n). The t_t is based on (n - 2) degrees of freedom.

Reject H₀ if p ≤ α.

EXPONENTIAL REGRESSION

Theory underlying the computational procedure: if y is an exponential function of x, then ln y (or log y) is a linear function of x.

Procedure:

1. Transform the y-values to ln y (or log y).
2. Perform linear regression using the ln y-values in place of the original y-values (page 2 of computer printout).
3. The result is a linear equation for the ln of the predicted y: ln y' = a + b(x) (nos. 16 & 15 on page 2).
4. Obtain the intercept and growth factor for the exponential prediction equation y' = a(b^x) by taking the inverse ln's of the a and b values on page 2 (that is, e raised to each value). The results are the exponential intercept and growth factor on page 3: y' = a(b^x) (nos. 16 & 15 on page 3).

THE EXPONENTIAL REGRESSION LINE: y' = a(b^x)

- a: sample intercept -- the vertical or y-intercept of the regression line -- the predicted value of y when x = 0.
- b: sample growth factor = (1 + growth rate). Growth rate (r) is the % change in the y-prediction for a one-unit change in x. (r = b - 1) and (b = 1 + r).

CORRELATION AND CAUSATION

The presence of correlation does not, in itself, prove causation. To prove causation, three things are needed:

1. Statistically significant correlation must be present between the alleged cause and the effect.
2. The alleged cause must be present before or at the same time as the effect.
3. There must be an explanation as to how the alleged cause produces the effect.

TWO-POINT REGRESSION

Simple extrapolation of a trend determined by two observations.

Example: Property taxes in 1995: $2,000. Property taxes in 2000: $2,800. Make predictions for 2003. Here x is the number of years since 1995, so 1995 is x = 0, 2000 is x = 5, and 2003 is x = 8.

Linear model:

- b-value: ($2,800 - $2,000) / 5 = $160 annual increase
- a-value: $2,000
- 2003 linear prediction: $2,000 + $160(8) = $3,280

Exponential model:

- b-value: ($2,800 / $2,000)^(1/5) = 1.069610. The annual growth rate is 6.961% (r = b - 1).
- a-value: $2,000
- 2003 exponential prediction: ($2,000)(1.069610)^8 = $3,426
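The two-point example above can be checked with a short Python sketch. The function names `two_point_linear` and `two_point_exponential` are hypothetical helpers introduced here for illustration; the figures are the property-tax values from the example.

```python
def two_point_linear(x0, y0, x1, y1, x):
    """Extrapolate the straight line through (x0, y0) and (x1, y1) to x."""
    b = (y1 - y0) / (x1 - x0)  # slope: change in y per one-unit change in x
    return y0 + b * (x - x0)   # a = y0, measured from x0

def two_point_exponential(x0, y0, x1, y1, x):
    """Extrapolate the exponential curve through (x0, y0) and (x1, y1) to x."""
    b = (y1 / y0) ** (1 / (x1 - x0))  # growth factor per one-unit change in x
    return y0 * b ** (x - x0)         # a = y0, measured from x0

# Property taxes: $2,000 in 1995 and $2,800 in 2000; predict 2003
growth_factor = (2800 / 2000) ** (1 / 5)
linear = two_point_linear(1995, 2000, 2000, 2800, 2003)
exponential = two_point_exponential(1995, 2000, 2000, 2800, 2003)

print(f"growth factor: {growth_factor:.6f}")         # growth factor: 1.069610
print(f"linear prediction: {linear:.0f}")            # linear prediction: 3280
print(f"exponential prediction: {exponential:.0f}")  # exponential prediction: 3426
```

Both functions measure x from the first observation, which is why the a-value equals the 1995 figure in each model.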