
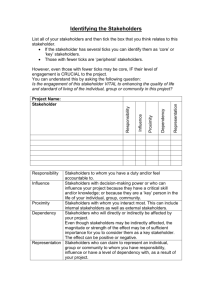

Analysis of Stakeholder/ Value Dependency Patterns and Process Implications: A Controlled Experiment

Di Wu1, Qi Li1, Mei He2, Barry Boehm1, Ye Yang2, Supannika Koolmanojwong1

1 University of Southern California, 941 W. 37th Place Los Angeles, CA 90089-0781

2 Institute of Software, Chinese Academy of Sciences, Beijing 100080, China

{diwu, qli1, boehm, koolmano}@usc.edu, {hemei, ye}@itechs.iscas.ac.cn

Abstract

Different classes of information system

stakeholders depend on different values to be

successful. Understanding stakeholders’ value

dependencies is critical for developing software

intensive systems. However, there is no universal

one-size-fits-all stakeholder/ value metric that can be

applied for a given system. This paper presents an

analysis of major classes of stakeholders’ value

priorities using the win-win prioritization results

from 16 real-client graduate software engineering

course projects. Findings from this controlled experiment further verify and extend the hypothesis that “different stakeholders have different value propositions”, bridge the value understanding gaps among different stakeholders, and are beneficial for further reasoning about stakeholders’ utility functions and for providing process guidance for software projects involving various classes of stakeholders.

1. Introduction

A major challenge in the development of software

intensive systems is to define requirements to satisfy

the needs of its multiple inter-dependent stakeholders

[1, 25, 26]. The 2005 Standish Group research report

[2] reported 53% of the projects were challenged due

to lack of user input. Developing the wrong

functionalities and developing the wrong user

interface are among the top software risk items [4].

Incorrect assumptions about different classes of users, and incompatible needs among these users, have led to many product disasters [5].

This indicates that software developers need to

better understand the dependability of the software

they produce. By dependability, we mean “to what

extent does the system satisfy the value propositions

that its stakeholders are depending on” [6]? The USC

2004 NASA high dependability computing study [6]

provides a good example of the resulting

dependability conflicts across different classes of

stakeholders, and provides high level guidance for

reasoning about stakeholders’ desired levels of

service. A key finding from the study is that different

stakeholders have different value dependencies, but

most of the relationships are hypotheses yet to be

tested. Moreover, it is even more challenging for

practitioners to come up with a win-win set of desired

functional needs because functional dependencies

must be considered within an operational context,

which varies from system to system. However, we

have had the opportunity to test the hypotheses in [6]

and extend it to functional needs in the context of a

graduate team-project software engineering course,

since most projects are web-based applications for

community service organizations and small

businesses. We collected major classes of

stakeholders’ priority ratings on their win-win

agreements, and applied the analysis approach

suggested in [6]. During the win-win prioritization,

some students were instructed to role play as client,

user, and maintainer. The role-play clients’ ratings were then compared with the real clients’ ratings to assess the confidence of using role-players in this activity. The results from the controlled experiment quantitatively verify the hypothesis that “different stakeholders have different value propositions” and indicate that, by means of mutual learning processes and experiences, role-play stakeholders can represent missing real stakeholders to some extent.

The remainder of the paper is structured as

follows: section 2 introduces related work; section 3

describes the experiment design step by step; section

4 presents results from the experiment; section 5 discusses threats to validity; and sections 6 and 7 conclude the paper with discussions on process implications and future work.

2. Related work

2.1. VBSSE “4+1” Theory - The underlying theory

This paper aims to empirically verify the

dependency theory from the “4+1” Value-Based

Systems and Software Engineering (VBSSE) theory

[7, 8]. The center of the theory is the success-critical

stakeholder (SCS) win-win Theory W, which

addresses what values are important and how success

is assured for a given software engineering

organization. The four supporting theories that it

draws upon are dependency theory (Identifying all of

the success-critical stakeholders), utility theory

(Understanding how the success-critical stakeholders

want to win), decision theory (Having the success-critical stakeholders negotiate win-win product and

process plans) and control theory (Adaptively

controlling progress toward a success-critical

stakeholder win-win outcome). This process has been

integrated with the spiral model of system and

software development and evolution [7] and its next-generation system and software engineering

successor, the Incremental Commitment Model [8].

Thus, the approach can be used for non-software

intensive systems, but our experiment here was

performed in the context of software-intensive

systems.

2.2. Top-Level Stakeholder/ Value Dependency Framework - The hypotheses to be verified and extended

The USC 2004 NASA high dependability

computing study [6] provides a good example of the

resulting dependability conflicts across different

classes of stakeholders, and provides high level

guidance for reasoning about stakeholders’ desired

levels of service. Table 1 shows the top-level

stakeholder/ value metric in the framework identified

in [6]. The key elements in the framework are: A

definition of major classes of success critical

stakeholders; a classification of dependability

attributes; and dependency strength on these

attributes for each class of stakeholders.

Table 1. Top-level stakeholder/ value metric

The dependability attributes in Table 1 are

essentially the same as the attributes frequently

associated with software quality. In this paper, we

will continue to refer to them as dependability

attributes.

As already mentioned in section 1, the main

finding from this study is that different stakeholders

have different value propositions of level of services,

but most of the relationships are hypotheses yet to be

tested quantitatively. Moreover, it is even more

challenging for practitioners to come up with a win-win set of desired functional needs because

functional dependencies must be considered within

an operational context, which varies from system to

system. In this paper, we try to empirically test the

hypotheses in Table 1 and extend the dependability

attributes to functional requirements based on 16

projects.

2.3. Other Related Work

It is generally accepted that requirements need to consider multiple viewpoints and perspectives. Darke and Shanks [9] pointed out that viewpoints “represent a particular perspective or set of perceptions of the problem domain”. The range of these perspectives is not limited to stakeholders. It also includes organizational and domain sources, such as databases, policies, and documents [10]. A number of requirements methods have been developed for defining different viewpoints. Such work includes Easterbrook’s work [11] on identifying user viewpoints during requirements acquisition, the work of Finkelstein et al. [12] and Niskier et al. [13] on identifying developer viewpoints during requirements modeling, and Kotonya and Sommerville’s [14] object-oriented approach to viewpoint analysis. Several researchers have also proposed techniques for identifying conflicting viewpoints [15, 16]. Our approach differs from these works in that we use the stakeholder WinWin negotiation approach to elicit and reconcile viewpoints. In addition, the prioritization step during negotiation enables stakeholders to identify value priorities from their viewpoints. Existing methods often lack this direct input from stakeholders.

Early research on the characteristics of software

quality and dependability were the 1973 TRW-NBS

characteristics of software quality study [17] and the

1977 GE-RADC factors in software quality study

[18]. Subsequent significant contributions were

Gilb’s techniques of defining quantitative quality

goals [19], Grady’s quality management metrics used

at Hewlett-Packard [20, 21], Kitchenham’s

COQUAMO estimation model [22], the SEI’s

architecture tradeoff analysis method [23, 24], the U.

of Toronto non-functional requirements method [25],

and the Fraunhofer-Maryland survey of software

dependability properties [3]. Links between these and

stakeholder value propositions are addressed in the

Basili et al. Goal Question Metric Paradigm [26], the

stakeholder win-win related research [5, 6, 27], Al-Said’s study of stakeholder value model clashes [5],

and several chapters in the Value-Based Software

Engineering book [28]. These works provided the

basics for defining and analyzing the dependability

attributes used in this study. However, the main shortcoming of these works is a lack of quantitative evidence. In this paper, we design a controlled experiment to collect and analyze data to quantitatively verify the hypothesis that “different stakeholders have different value propositions”, so that this study can complement the current research in this field.

3. Controlled Experiment Methodology

3.1. Experiment Objective and Background

As mentioned in Section 1, the objective of this

study is to identify stakeholder/ value dependency patterns using real-client projects developed in the USC graduate software engineering course. More

specifically, we want to 1) identify the primary

dependability attributes in terms of functional tasks,

level of service, and project related activities; 2)

evaluate the hypotheses of dependency relationships

shown in Table 1.

Of the 16 projects in fall 2008, six belonged to a

category called multi-media information services.

The media included art gallery items, cinema

artifacts, writing essays, theater scripts, housing

information, and clothing and garment information.

Five involved community services such as youth

services, thrift stores, financial literacy education,

rivers and parks, and housing services. The other five were miscellaneous applications such as neighborhood small businesses, anthropology research data management, and social networking.

Project teams were formed by first year graduate

students taking the course. The project clients came

from USC-neighborhood small business, non-profit,

and campus organizations needing improved e-services applications.

3.2. Experiment Design

Preparation: Prior to the experiment, all teams

conducted win-win negotiations with real client

representatives and agreements were reached. To keep the experiment under control, we also gave students two tutorials: one on how to role-play stakeholders in step 1, and the other on how to rate meaningfully in step 3.

Step 1: Define major classes of success critical

stakeholders (SCSs) and identify role players: We

focused on three main types of stakeholders in the

software engineering process: client, user, and

maintainer. All three types of stakeholders prioritize

from the perspective of business importance.

Developer prioritization was not included in this

study because it was done from a different value

perspective: ease of implementation.

As happens with many software projects in

practice, we often just had real clients and did not

have real user and real maintainer representatives. As

an alternative, students were instructed to role play

client, user, and maintainer, which created an

opportunity to assess the effectiveness of using role

players to represent actual stakeholders. We will

compare the results between real-client and role-play

client. If the results show a strong correlation, it will

provide some confidence for accepting results from

role-play users and role-play maintainers.

To make sure that the experiment was under control, in this step we taught students how to role-play. A challenge in this experiment is to identify role players who are able to prioritize from the perspective of other stakeholders. We suggested that teams identify role players based on who best understands the type of stakeholder. For example, the person who works on the operational concept could be a good candidate to role-play the user. We also provided some value proposition hints for the role players to consider. For example, the role-play client may consider whether the system is delivered on time and within budget, and whether its functions are correctly implemented and interoperable with the existing system.

Step 2: Extend the classification of

dependability attributes: In order to analyze the

dependency relationship, we extended attributes from

Table 1, and defined three sets of attributes: level of

service goals, functional tasks, and project activities,

as shown in Table 2. The level of service attributes were compatible with the corresponding dependability attributes in [6]. Functional task attributes were added according to the 16 projects’ specific operational contexts. Project activities were extended from cost and schedule to more project-related activities, such as transition and deployment requirements. The meanings and examples of these attributes can be found in [27].

Table 2. Classification of Dependability Attributes

| 1. Functional tasks | 2. Level of services | 3. Project Activities |
|---|---|---|
| Administration support | Correctness | User interface standards |
| Financial support | Availability | Hardware interface |
| Query | Security | Communications interface |
| Display | Privacy | Other software interface |
| Update | Interoperability | Course required process & tools |
| Storage | Usability | Other development tools |
| Tracking | Performance | Language |
| Scheduling | Evolvability | Computer hardware |
| Notification | Reusability | Computer software |
| Automation | | Computer communication |
| Social networking | | Deployment |
| Scan and conversion | | Transition |
| Status message | | Support environment |
| Usage analysis | | Budget |
| Others | | Schedule |

Step 3: Rate dependency strength on these

attributes: The WikiWinWin tool [30] has the

capability of providing a rating page for each

participant. The rating page displays all the win-win

agreements to be rated as shown in Figure 1. All role

players and real-client representatives rated on

business importance on a scale of 1 to 9:

1-Not Worth Having, 3-Want to Have,

5-Could Have, 7-Should Have, 9-Must Have

Use of intermediate values between two adjacent

judgments (2, 4, 6, 8) was allowed. A participant

might bypass rating on an agreement if he/ she felt it

did not have direct significance. Participants were encouraged to provide comments on their ratings.

In this step, we taught students how to rate meaningfully. A participant might simply treat every agreement as equally important, e.g. rating all of them as “Must Have”. To minimize such instances, we encouraged participants to consider the following factors when giving their priority ratings:

- Whether this is a core function: a basic function that a system must have, e.g. user administration is a must-have function for most of the projects.
- Whether this function depends on any other functions: if a function is rated highly, the functions that it strongly depends on should be rated at least that high.
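The second rating factor can be read as a simple consistency rule over a participant's ratings. The following is a minimal sketch of such a check, not part of the WikiWinWin tool; the function name, the dictionary layout, and the example ratings and dependencies are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple


def find_dependency_violations(
    ratings: Dict[str, int],
    depends_on: Dict[str, List[str]],
) -> List[Tuple[str, str]]:
    """Return (function, prerequisite) pairs where the prerequisite is rated lower.

    ratings: hypothetical 1-9 business importance rating per function.
    depends_on: hypothetical map from a function to the functions it
    strongly depends on.
    """
    violations = []
    for function, prerequisites in depends_on.items():
        if function not in ratings:
            continue  # the participant bypassed rating this item
        for prerequisite in prerequisites:
            # A prerequisite should be rated at least as high as its dependent.
            if prerequisite in ratings and ratings[prerequisite] < ratings[function]:
                violations.append((function, prerequisite))
    return violations


# Hypothetical example: notification is assumed to depend on administration support.
example_ratings = {"Notification": 9, "Administration support": 7, "Query": 8}
example_dependencies = {"Notification": ["Administration support"], "Query": []}
print(find_dependency_violations(example_ratings, example_dependencies))
```

A check like this only flags candidate inconsistencies; whether a flagged pair is a real problem would still need to be discussed with the participant.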

Figure 1. An Example of Rating Using WikiWinWin

Step 4: Data collection and consolidation: After

all participants submitted their ratings, the results

were reviewed for completeness. Projects that did not have any priority ratings from a real client representative were excluded. After this step, 10 projects remained for the study (6: multi-media, 2: community service, 2: other).

Next, every rated agreement was classified based on the list of dependability attributes in Table 2. If duplicate ratings were given on one agreement, they were counted as one rating. We calculated the following for each attribute:

a). Mean rating from each participant: the average of all of the participant’s ratings belonging to that attribute.

b). Mean rating from each project: for participants playing the same role, the average of their results from a).

c). Mean rating from each domain: for projects belonging to the same domain, the average of the results from b).

At the end of step 4, we had the dependency ratings for each stakeholder class and domain.
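The three averaging levels a) to c) can be sketched as a nested aggregation. This is an illustrative sketch only: the record layout, the function name, and the sample values are assumptions, and duplicate ratings on the same agreement are assumed to have been removed beforehand.

```python
from collections import defaultdict
from statistics import mean


def aggregate_ratings(records):
    """Average ratings per participant, then per project, then per domain.

    records: iterable of hypothetical tuples
    (domain, project, role, participant, attribute, rating).
    Returns a dict keyed by (domain, role, attribute).
    """
    # a) Mean rating from each participant for each attribute.
    per_participant = defaultdict(list)
    for domain, project, role, participant, attribute, rating in records:
        per_participant[(domain, project, role, participant, attribute)].append(rating)

    # b) Mean over participants playing the same role in the same project.
    per_project = defaultdict(list)
    for (domain, project, role, _participant, attribute), values in per_participant.items():
        per_project[(domain, project, role, attribute)].append(mean(values))

    # c) Mean over projects belonging to the same domain.
    per_domain = defaultdict(list)
    for (domain, _project, role, attribute), values in per_project.items():
        per_domain[(domain, role, attribute)].append(mean(values))

    return {key: mean(values) for key, values in per_domain.items()}


# Hypothetical ratings for one attribute across two projects.
sample_records = [
    ("multi-media", "P1", "client", "c1", "Security", 9),
    ("multi-media", "P1", "client", "c1", "Security", 7),
    ("multi-media", "P1", "client", "c2", "Security", 6),
    ("multi-media", "P2", "client", "c3", "Security", 9),
]
domain_means = aggregate_ratings(sample_records)
print(domain_means[("multi-media", "client", "Security")])
```

Averaging level by level, rather than pooling all ratings at once, keeps a participant or project with many rated agreements from dominating the domain mean.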

4. Experiment Results

Here we will present and discuss the results from

the multi-media projects, but only summarize results

for community services and other projects due to

their small numbers of projects that can be used.

4.1 Analysis of Stakeholder/ Value Dependency Patterns in 6 Multi-Media Projects

For this part, we have done a thorough analysis of the 6 multi-media projects, including the correlation analysis among different stakeholders and the respective value dependency patterns for different stakeholders.

4.1.1 Correlation among Different Stakeholders in 6 Multi-Media Projects

From Table 3, we can see that the average ratings from different stakeholders are all around 7, which means “Should Have”. One reason is that the six projects are all new projects, and the initial requirements are generally the core requirements with few gold-plating requirements, so the average ratings for all requirements are “Should Have”.

Another consideration is that the rating given by a participant is generally subject to his/her own interpretation of the rating criteria. This might cause a non-uniform understanding of the rating criteria, which could threaten the validity of the experiment. However, in our experiment, similar average ratings with small standard deviations from different stakeholders might imply a uniform understanding of the rating criteria, which means that different stakeholders understand the ratings “Must Have”, “Should Have”, “Want to Have”, and “Not Worth Having” in the same way. This is also the result we expected from giving students tutorials on how to rate meaningfully.

Table 3. Average Ratings from Different Stakeholders

| Stakeholders | *User | *Maintainer | Client | *Client |
|---|---|---|---|---|
| Average Ratings | 7.16 | 6.75 | 7.22 | 6.74 |
| Standard Deviation | 1.53 | 1.35 | 1.44 | 1.37 |

*-role play stakeholders

However, having a uniform understanding of rating criteria doesn’t mean that different stakeholders have the same value dependency pattern. We did a correlation analysis on the stakeholders’ ratings of the 39 attributes listed in Table 2, and the result is shown in Table 4. We can see that, except for the correlation between the client and the role-play client, which is the highest (0.53), correlations among the other stakeholders are low. In particular, the correlation between the role-play maintainer and the real client is as low as 0.05, which implies that different stakeholders have different value dependency patterns. Another interesting finding is that the client and the role-play user have a moderate correlation (0.44). This might imply that the client’s rating sometimes included the user perspective, since the clients may also be users themselves.

Table 4. Correlation among Different Stakeholders

| | *User | *Maintainer | Client | *Client |
|---|---|---|---|---|
| *User | 1.00 | | | |
| *Maintainer | 0.32 | 1.00 | | |
| Client | 0.44 | 0.05 | 1.00 | |
| *Client | 0.29 | 0.33 | 0.53 | 1.00 |

*-role play stakeholders

To explore the value dependency patterns for each type of stakeholder, we sort the average ratings on attributes from each type of stakeholder, and list the 10 attributes with the highest ratings and the 10 attributes with the lowest ratings for each type of stakeholder in Table 5. The number 1, 2, or 3 before each attribute indicates whether the attribute belongs to Functional Tasks, Level of Services, or Project Activities. We explain each type of stakeholder’s value dependency pattern in detail in the following three sections.

Table 5. Stakeholder/ Value Dependency Patterns - Top 10 and Last 10 Attributes for Every Type of Stakeholders

| *User Top 10 | *User Last 10 | *Maintainer Top 10 | *Maintainer Last 10 | Client Top 10 | Client Last 10 | *Client Top 10 | *Client Last 10 |
|---|---|---|---|---|---|---|---|
| 1. Others | 3. Deployment | 2. Security | 2. Performance | 2. Security | 3. Computer hardware | 1. Scheduling | 3. User interface standards |
| 3. Support environment | 3. Computer software | 3. Transition | 1. Others | 2. Correctness | 3. Computer communication | 2. Security | 1. Others |
| 1. Query | 2. Security | 2. Availability | 2. Usability | 3. Support environment | 1. Display | 2. Correctness | 3. Support environment |
| 2. Availability | 3. Other software interface | 1. Query | 2. Interoperability | 1. Usage analysis | 3. Budget | 3. Budget | 3. Computer communication |
| 1. Automation | 3. Schedule | 2. Correctness | 1. Notification | 1. Administration support | 3. Deployment | 1. Administration support | 3. Computer software |
| 3. Computer hardware | 2. Interoperability | 3. Computer software | 3. Course required process & tools | 1. Notification | 1. Scheduling | 3. Schedule | 3. Deployment |
| 2. Correctness | 1. Notification | 3. Deployment | 1. Usage analysis | 1. Query | 3. Computer software | 1. Usage analysis | 3. Computer hardware |
| 2. Performance | 3. Language | 3. Language | 3. Computer communication | 1. Others | 3. Course required process & tools | 2. Availability | 3. Course required process & tools |
| 1. Update | 3. Budget | 3. Schedule | 3. Budget | 1. Automation | 3. User interface standards | 1. Storage | 3. Other software interface |
| 3. Transition | 3. Course required process & tools | 3. Computer hardware | 3. Other software interface | 3. Transition | 3. Language | 2. Usability | 3. Language |

*: role-play stakeholders

1: this attribute belongs to Functional Tasks

2: this attribute belongs to Level of Services

3: this attribute belongs to Project Activities

The ratings decrease downwards: for the Top 10 part, the first one is the most important; for the Last 10 part, the last one is the least important.
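The correlation analysis behind Table 4 compares, for each pair of stakeholder classes, their mean ratings over the same list of attributes. The sketch below shows one way to compute such a pairwise matrix. It assumes the Pearson coefficient, which the text does not name explicitly, and the function names and sample ratings are hypothetical rather than the experiment's data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length rating lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant ratings")
    return covariance / sqrt(spread_x * spread_y)


def correlation_matrix(ratings_by_stakeholder):
    """Pairwise correlations; every list must cover the same attributes in the same order."""
    names = list(ratings_by_stakeholder)
    return {
        (a, b): pearson(ratings_by_stakeholder[a], ratings_by_stakeholder[b])
        for a in names
        for b in names
    }


# Hypothetical mean ratings on five attributes (not the experiment's data).
sample = {
    "Client": [9.0, 8.0, 7.0, 5.0, 4.0],
    "*Client": [8.0, 8.5, 6.0, 6.0, 5.0],
    "*Maintainer": [6.0, 7.0, 8.0, 7.5, 6.5],
}
matrix = correlation_matrix(sample)
for (a, b), value in matrix.items():
    if a < b:  # print each unordered pair once
        print(a, b, round(value, 2))
```

Attributes that a stakeholder class did not rate would need to be dropped from both lists before calling `pearson`, since the coefficient is only defined over paired values.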

4.1.2. Role-play user value dependency pattern

From Table 5, we can see that among the top 10 attributes of the role-play user, 4 are functional attributes, and 3 each are level of service and project activity attributes. Users are more concerned about query, automation, update and other functional attributes. They also care about some levels of service, such as availability, correctness and performance. Performance was valued to some extent, because a system that is too slow would disturb users, but a super powerful system was not required. Ratings on project activities depend on whether the activities are related to users. For example, users need to know whether end-user programming is necessary, whether there is an instructional user manual or not (Support environment), whether a minimum of 512MB memory, 1GB hard-drive space and a Pentium 700MHz are enough for the system (Computer hardware), and whether there is user training time or not (Transition). So these user-related project activities are rated higher. However, users tend not to care at all about user-independent project activities, such as which process (agile or disciplined) the development team will adopt, which development languages (Java or C#) and software tools (Eclipse or .NET) are used, whether the budget for the system is sufficient, and whether the schedule for developing the system is reasonable.

4.1.3. Role-play maintainer value dependency

pattern

For role-play maintainers, the most important level of service attributes are information security, availability, and correctness. Development software and hardware tools, languages, transition tasks (training, testing, and documentation) and deployment were major concerns for ease of

maintenance. And

4.1.4. Real client value dependency pattern

For real clients, 6 out of Top 10 are functional

attributes. The most important functional attributes

are administration support, usage analysis, and email

notifications. Since most clients are also

administrative users, capabilities included in the

administration support such as user and access

management, are essential to know who has what

access to the system. Usage analysis, including various report statistics, provides much benefit to clients. Notification is very important because users must be notified via email or message once an appointment is scheduled or a request is approved. Automation was rated high in this case, because administrative users prefer having the computer do such tedious but necessary tasks, which is usually the reason why clients invest in the system.

The most important levels of service attributes are

security and correctness. This indicates that clients are concerned about the risks associated with loss of

information and incorrect results from system

operations. Since most of the systems are web-based,

it is essential to be accessible via the internet, but

some downtime is acceptable. When considering

online viewing and accessing digital assets, security

measures are important for keeping these assets

secure. For online systems such as the online art

gallery and film archives, clients consider usability a

key factor for retaining their online shoppers.

The most important project attributes are support

environment and transition. These reflect clients’

concerns that the development teams will not be

generally available for life-cycle support.

Some further observations and explanations of anomalies are as follows. Clients generally do not consider development tools and languages important to business outcomes, as long as the system works. User interface standards, including having online forms replicate the paper forms exactly, were considered less important by clients because they believe users can learn how to use a new system. It is also interesting that in this experiment clients rated budget as less important. This is because clients do not pay students to complete the systems in these course projects, so budget is not a sensitive factor for them in this case.

4.2 Role Player Representativeness Checking

In this experiment, due to the unavailability of real users and maintainers, we trained students to role-play different stakeholders. Table 4 shows that the correlation between real clients and role-play clients is the largest (0.53) among all stakeholders. It can also be seen that, for the most part, the role-players’ priority ratings could have been used as representative client ratings, but with some significant exceptions.

However, a correlation coefficient of 0.53 only shows that real clients’ and role-play clients’ ratings are moderately correlated overall. To further check the representativeness of role players, we went back to each project to get the respective correlations between real clients and role-play clients in the 6 multi-media projects. The correlation values in Table 6 show that three of the six projects have correlations above 0.6 for all ratings, and five of the six have correlations of 0.6 or above when the top two differences from each project are excluded. For projects 2, 3, and 4 in particular, all three correlations are then above 0.8, which means highly correlated. On the other hand, this also points out the need to follow up with role-playing stakeholders on questionable ratings via interviews or other means.

Table 6. Correlation between Real Clients and Role-play Clients in 6 Multi-Media Projects

| Project | P1 | P2 | P3 | P4 | P5 | P6 |
|---|---|---|---|---|---|---|
| Correlation | 0.48 | 0.92 | 0.78 | 0.64 | 0.36 | 0.40 |
| Correlation without top 2 differences | 0.60 | 0.92 | 0.94 | 0.84 | 0.63 | 0.45 |
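The second row of Table 6 recomputes each project's correlation after excluding the two largest rating differences. A minimal sketch of that recomputation follows. It assumes that "top two differences" means the two attributes with the largest absolute gap between the real client's and the role-play client's ratings, and that the Pearson coefficient is used; neither detail is spelled out in the text, and the function names and sample ratings are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length rating lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


def correlation_without_top_differences(real, role_play, drop=2):
    """Correlation after removing the `drop` attributes with the largest rating gaps."""
    # Attribute positions ordered by absolute rating gap, largest first.
    by_gap = sorted(
        range(len(real)),
        key=lambda i: abs(real[i] - role_play[i]),
        reverse=True,
    )
    dropped = set(by_gap[:drop])
    kept = [i for i in range(len(real)) if i not in dropped]
    return pearson([real[i] for i in kept], [role_play[i] for i in kept])


# Hypothetical ratings: the last two attributes are rated very differently.
real_client = [1, 2, 3, 4, 5, 6]
role_play_client = [1, 2, 3, 4, 9, 1]
full = pearson(real_client, role_play_client)
trimmed = correlation_without_top_differences(real_client, role_play_client)
print(round(full, 2), round(trimmed, 2))
```

The attributes removed this way are also the natural candidates for follow-up interviews with the role-players, since they are where the two sets of ratings disagree most.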

One explanation of the strong correlations is that

the clients and developers have participated in several

mutual-learning activities before and during the win-win negotiations. These include goal-setting

exercises, visits to clients’ work sites, and

development of operational scenarios, use cases, and

benefits chains linking initiatives to desired

outcomes. Without these, the correlations would

likely have been considerably lower. Overall, the

role-players’ ratings tend to be somewhat lower, with

some exceptions. Across all six projects and all

attributes, the average rating for clients is 7.22 and

6.74 for client role-player as shown in Table 3.

Some further observations of role-players’ ratings are as follows. Clients’ major concerns, such as secure information, correct results, and availability, were well identified, but there were some understanding problems on interoperability with the existing system and interfacing with related databases. The most obvious difference from the real client is that the role-play client rates budget higher. This might be explained by the fact that students are taught to think from the client’s perspective, in which budget is usually a concern, but neglect the real situation for these projects, which is that clients do not pay students for the course projects.

4.3 Community Services Projects Results

Most of the community services projects share

common functions such as administration of

community services, tracking of volunteers, donors,

and grants, payment processing, event tracking and

management, and community communications

involving web sites, e-newsletters, and social

networking capabilities. Due to client availabilities,

only two of the five projects had well-formed

negotiation records. But these two had consistent top

priorities of administrative support, query, and

usability, and also high priority for availability/

backup-restore, data correctness, performance and

transition and deployment.

The consistency between real and role-playing

clients was generally quite high, with the exception

of two wide differences on the second project in

which the role-players undervalued backup and

restore, treating it just as data storage; and schedule, for which the client had some under-expressed early-availability needs. For the first project, the ratings correlation was 0.67. For the second project, the ratings correlation was 0.00 with the two wide differences, and 0.59 without them. This points out the need to follow up with role-playing stakeholders on questionable ratings.

The consistency between clients and role-playing

users and maintainers was lower, as with the multi-media projects. The corresponding correlations were

0.28 for users, and 0.37 for maintainers. Again, in the

second project, role play user had a radically lower

schedule rating; without this, the correlation was

0.34.

4.4 Other Projects Results

As discussed earlier, the other projects were a

mixed bag of neighborhood small businesses,

research support, and social networking applications.

Still, they had some consistency in top-ranked priorities: availability/backup-recovery and administration support. Also, the correlations with real clients’ ratings were higher for role-playing clients (0.64) than for users (0.51) and maintainers (0.32).

5. Limitations and Threats to Validity

Non-representativeness of projects. The number

of projects included in this study is fairly small.

Although five projects were available for

representing community service type, only two had

sufficiently consistent and complete ratings to be

used. Also, the projects are small and are performed

by graduate level computer science students.

However, the off-campus team members were

working professionals, and the clients were from

real-world organizations needing the resulting

applications. The findings obtained in this study may

not represent other projects in a broader population.

Multiple perspectives. In this study, we

experimented with using students to role play non-development stakeholders. It was unknown whether a

role-player’s rating was mixed with developer’s

perspective. It was noticed during the analysis that

client’s rating sometimes included the user

perspective since the client may also be the users

themselves.

Attribute classification. Generalized

classification of dependability attributes may bring

some risks to the dependability strength. For

example, end users may only be concerned with the

training relevant to their own work responsibilities, and

place less emphasis on training provided to maintainers.

When these two training requirements were classified

under the same attribute (training), the averaged priority

rating tended to underestimate a particular

stakeholder’s priority on the requirement of concern to that stakeholder.
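The averaging effect can be made concrete with a small numeric sketch. The requirement names, the ratings, and the helper function below are hypothetical, chosen only to illustrate how one stakeholder's high rating on one requirement is diluted when two requirements share a single attribute.

```python
from statistics import mean


def attribute_priority(requirement_ratings):
    """Average one stakeholder's ratings over all requirements under one attribute."""
    return mean(requirement_ratings.values())


# Hypothetical ratings given by an end user to two requirements that were
# both classified under the single attribute "training".
user_ratings = {
    "end-user training": 9,     # the requirement this stakeholder cares about
    "maintainer training": 3,   # of little concern to an end user
}

averaged = attribute_priority(user_ratings)
own_concern = user_ratings["end-user training"]

print("averaged 'training' priority:", averaged)
print("priority on the user's own requirement:", own_concern)
```

A finer-grained attribute (for example, separate training attributes per stakeholder class) would avoid this dilution, at the cost of more attributes to rate.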

6. Conclusions and Process Implications

In this paper, a role-play experiment was

conducted during win-win prioritization to verify

the hypothesis that “Different stakeholders have

different value propositions” and at the same time to

assess how confidently role-players can stand in for

missing stakeholders. The results indicate that

attributes such as correctness, availability, budget and

schedule are generally high priorities for

acquirers/clients; usability, performance, and

interoperability are reasonably important for less-than-critical application information

consumers/users. For administrators, functional

capabilities such as administration support,

notification, and information storage/query/update

are generally high priorities. Maintainers have

reasonably high priorities on development tools and

transition attributes. Due to similar stakeholder

profiles across projects and the multi-dimensional

nature of stakeholder value propositions, there are

some similarities among stakeholders’ priorities. The

results also show that, with mutual-learning activities

and experiences, there is generally high

consistency between client representatives’

priorities and role-players’ priorities.

6.1 Quantitative Extension of Table 1

One of the main objectives of the study has been

to obtain more quantitative information than Table 1

in section 2.2 about the relative priority of various

classes of system stakeholders with respect to various

system dependability attributes. The study has some

limitations in that the clients prioritizing the

dependability attributes show some aspects of being

information consumers, administrators, and acquirers.

For the main class of six multi-media archive

applications for which we have data, we would say

that their applications are not mission-critical in

terms of safety, but we would encounter a challenge

with respect to their Maslow need hierarchy [31].

The challenge is that lower-level needs in the Maslow

hierarchy, such as safety and security of one’s job or

enterprise, are high priority until they reach a given

level of satisfaction, but are not high priority

thereafter. Thus, we see that the clients had high

priorities for availability and backup/recovery up to

a basic level, but did not need the more extreme levels

needed in such mission-critical domains as

passenger aircraft and nuclear power plants. From a

process standpoint, this implies that the use of Table

1 to baseline the attributes of interest to stakeholder

classes needs to reflect whether their value

dependencies are hygiene factors vs. mission-critical

factors.
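One way to picture a hygiene factor is as a utility function that saturates once a basic level is reached. The sketch below is only an illustration under assumptions: the linear-then-flat shape, the function name, and the availability figures are hypothetical and are not derived from the experiment's data.

```python
def hygiene_utility(achieved, satisfaction_level):
    """Utility in [0, 1]: rises linearly, then stays flat once the need is satisfied."""
    if satisfaction_level <= 0:
        raise ValueError("satisfaction_level must be positive")
    return min(achieved, satisfaction_level) / satisfaction_level


# Hypothetical availability levels (fraction of time the system is up),
# with an assumed basic satisfaction level of 0.99 for an archive client.
below = hygiene_utility(0.90, satisfaction_level=0.99)
basic = hygiene_utility(0.99, satisfaction_level=0.99)
extreme = hygiene_utility(0.99999, satisfaction_level=0.99)

print("below the basic level:", round(below, 3))
print("at the basic level:", basic)
print("at an extreme level:", extreme)
```

Under this assumed shape, pushing availability beyond the basic level adds no further value for the client, whereas a mission-critical stakeholder would need a much higher satisfaction level before the curve flattens.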

We can see from Table 5 that attributes such as

correctness, availability/ backup-recovery, budget,

and schedule are generally high priorities from an

acquirer standpoint. From an administrator standpoint, functional

capabilities such as administration support,

notification, and information storage/query/update

are generally high priorities. From the standpoint of

representatives of less-than-critical information

consumers, usability, performance, and

interoperability are reasonably important, but not as

high as most of the others. However, the multi-dimensional nature of the clients makes it more

difficult to allocate priorities to stakeholder classes.

As the other client classes were similar in their

stakeholder profiles, it is not surprising that their

priority profiles were generally similar. From a

process standpoint, these conclusions imply that

stakeholder negotiation process agendas need to

consider multi-role stakeholders.

6.2 Representativeness of Role-Playing Stakeholders

Even though the role-playing developers were

initially inexperienced in their clients’ domains, they

had gone through a sufficiently extensive set of

mutual learning processes and experiences (site visits,

goal-setting, prototypes, etc.) to make their

priorities comparable with those of the clients

(correlations generally above 0.6 with some

exceptions, and as high as 0.92). Without the mutual

learning processes and experiences (as with role-playing as users or maintainers), there was generally

a positive correlation, but below 0.6. However, there

were occasional wide differences, indicating the

process implication of following up on questionable

role-player ratings.

6.3 Future Work

For the upcoming fall 2009 semester, we plan to

run replicate experiments to collect more data to

further quantitatively validate the hypothesis that

“Different stakeholders have different value

propositions”.

Lessons learned from this experiment could

provide us with refined guidelines to improve the

experiment design and control. These would

include how to teach students to role-play different

stakeholders, how to teach students to rate

requirements’ importance meaningfully, how to

make the dependability attributes’ granularity

worthwhile and unambiguous for stakeholders’

rating, and, when non-negligible differences arise,

how to follow up on the questionable ratings.

We also plan to extend the classes of

stakeholders beyond client, user, and maintainer

to include tester, IIV&Ver, and developer, to see

their respective value dependency patterns.

Based on the repeated experiments, we plan to

analyze the interaction among different

attributes, further reason about stakeholders’

utility functions, and provide process guidance

for software projects involving various classes of

stakeholders.

Acknowledgements

This work is cooperatively supported by the National

Natural Science Foundation of China under Grant

Nos. 60573082, 60873072, and 60803023; the

National Hi-Tech R&D Plan of China under Grant

Nos. 2006AA01Z182 and 2007AA010303; and the

National Basic Research Program (973 program)

under Grant No. 2007CB310802.

References

[1] B. Boehm, H. In, “Identifying Quality-Requirement

Conflicts”, IEEE Software, 1996.

[2] J. Johnson, “My Life Is Failure”, Standish Group, Inc.,

2006.

[3] I. Rus, et al., “Software Dependability Properties: A

Survey of Definitions, Measures and Techniques”,

Fraunhofer technical Report, 2003.

[4] B. Boehm, “Software Risk Management”, IEEE CS

Press, 1989.

[5] M. Al-Said, “Identifying, Analyzing, and Avoiding

Software Model Clashes”, USC CSSE Technical

Report, 2003.

[6] B. Boehm, et al., “The Nature of Information System

Dependability: A Stakeholder/Value Approach”,

USC-CSSE Technical Report, 2004.

[7] B. Boehm, et al., “Guidelines for Lean Model-Based

(System) Architecting and Software Engineering

(Lean MBASE)”, USC-CSSE, 2007.

[8] B. Boehm, J. Lane, “Using the Incremental

Commitment Model to Integrate System Acquisition,

Systems Engineering, and Software Engineering”,

CrossTalk, 2007.

[9] P. Darke, G. Shanks, “Stakeholder Viewpoints in

Requirements Definition: A Framework for

Understanding Viewpoint Development Approaches”,

Requirements Engineering, 1(2): 88-105.

[10] I. Sommerville, P. Sawyer, “Viewpoints: principles,

problems and a practical approach to requirements

engineering”, Ann. Softw. Eng., 1997.

[11] S. Easterbrook, “Handling conflict between domain

descriptions with computer-supported negotiation”,

Knowledge Acquisition, 1991.

[12] A. Finkelstein, et al., “Viewpoints: a framework for

integrating multiple perspectives in system

development”, Int J Software Knowledge Eng, 1992.

[13] B. Nuseibeh, J. Kramer, A. Finkelstein, “A framework

for expressing the relationships between multiple

views in requirements specification”, IEEE Trans

Software Eng, 1994.

[14] G. Kotonya, I. Sommerville, “Viewpoints for

requirements definition”, IEE Software Eng J, 1992.

[15] WN. Robinson, “Integrating Multiple Specifications

Using Domain Goals”, Proc. IWSSD-5 - 5th Intl.

Workshop on Software Specification and Design,

IEEE, 1989.

[16] S. Easterbrook, “Resolving Requirements Conflicts

with Computer-Supported Negotiation”, In

Requirements Engineering: Social and Technical

Issues, 1994.

[17] B. Boehm, et al., “Characteristics of Software

Quality”, TRW Report to National Bureau of

Standards, 1973.

[18] J. McCall, et al., “Factors in Software Quality”, NTIS

AD-A049-014,015, 055, 1977.

[19] T. Gilb, “Principles of Software Engineering

Management”, Addison Wesley, 1988.

[20] R. Grady, D. Caswell, “Software Metrics: Establishing

A Company-wide Program”, Prentice Hall, 1992.

[21] R. Grady, “Practical Software Metrics for Project

Management and Process Improvement”, Prentice

Hall, 1992.

[22] B. Kitchenham, L. Pickard, “Towards a Constructive

Quality Model”, Software Engineering Journal, 1989.

[23] L. Bass, et al., “Software Architecture in Practice”,

Addison Wesley, 1998.

[24] P. Clements, et al., “Evaluating Software Architectures:

Methods and Case Studies”, Addison Wesley, 2002.

[25] L. Chung, et al., “Non-Functional Requirements in

Software Engineering”, Kluwer, 1999.

[26] VR. Basili, et al., “Goal Question Metric Paradigm”,

Encyclopedia of Software Engineering, 1994.

[27] B. Boehm, et al., “The ROI of Software

Dependability: The iDAVE Model”, IEEE Software,

2004.

[28] S. Biffl, et al., “Value-Based Software Engineering”,

Springer, 2005.

[29] USC Software Engineering Course, 2008,

http://greenbay.usc.edu/csci577/fall2008/site/

[30] D. Yang, et al., “WikiWinWin: A Wiki Based System

for Collaborative Requirements Negotiation”, HICSS

2008.

[31] AH. Maslow, “Motivation and Personality”, New

York, NY: Harper and Bros, 1954.