Title: Models and Uses of Peer Review – Some Challenges

advertisement

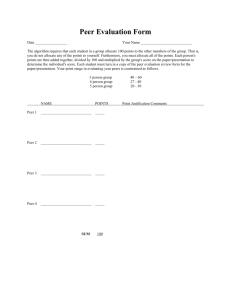

New Frontiers in (R&D) Evaluation Conference April 2006

Methods and Uses of Peer Review
Challenges and Lessons Learned From a Canadian Perspective

Jennifer Birta, National Research Council of Canada
jennifer.birta@nrc-cnrc.gc.ca

Flavia Leung, National Research Council of Canada
flavia.leung@nrc-cnrc.gc.ca

Abstract

Peer reviews have long been used as a means to determine the quality and level of scientific excellence of research. The term “peer review”, however, can have various interpretations and the process can take different forms. At the National Research Council of Canada (NRC), different methods of peer review have been applied for a variety of purposes over the years. Alone, or combined with other methods, peer reviews have been used to support the evaluation of project and program relevance and impact. In addition, reviews by peers have provided input into planning future research directions and related project selection. Based on NRC’s experiences and lessons learned, the organization is continuing to refine its methodology and is looking at possible new approaches to address some ongoing challenges related to its use of peer review processes. This paper explores the different uses of peer review and describes NRC’s practical experience relative to literature findings. The final section details a case study of an NRC horizontal initiative in which peer review was used as a means to support project selection as well as evaluate past performance.

1.0 Introduction

Peer review is a well-established, systematic quality control technique that has been in use since the 1800s. The term “peer review”, however, can have various interpretations and the process can take different forms. Most often when the term is mentioned, it refers to the process used prior to publishing research findings in a scientific journal.
However, peer review also has a long history of being employed as a means to determine the quality and level of scientific excellence of past and future research conducted within science-based organizations. In addition, peer review is seen as an effective method to determine past or potential impacts of research, either from a scientific or economic perspective, and the extent to which resources (i.e., financial, human and capital) have been or will be used effectively and efficiently to achieve stated objectives.

1.1 Definitions of Peer Review from the Literature

According to the Merriam-Webster Online Dictionary, a peer is “one that is of equal standing with another”. From a scientific perspective, the definition is more specific. The United States General Accounting Office (GAO) defines peers as “scientists or engineers who have qualifications and expertise equivalent to those of the researcher whose work they review” [1]. The GAO goes on to define peer review as: “a process that includes an independent assessment of the technical, scientific merit of research by peers who are scientists with knowledge and expertise equal to that of the researchers whose work they review.” An alternate but similar definition of peer review is “a documented, critical review performed by peers who are independent of the work being reviewed” [2].

1.2 Different Purposes of Peer Review

The scientific community uses peer review for three main purposes, all with the underlying aim of improving quality [1].

Journal manuscript peer review (retrospective) – review of research results for competence, significance and originality prior to publication [3].

Proposal peer review (prospective) – assessment of proposals to make future funding decisions.

Evaluation peer review (retrospective and prospective) – performance assessment of the level of excellence and impacts of past work.
The intent of the performance assessment is to highlight areas where adjustments are needed to improve the quality of future work. This type of review may be used to make funding reallocation decisions [4].

1.3 Methods for Conducting Peer Reviews

There are three main methods for conducting a peer review:

Paper-based or mail-in reviews – individuals are sent materials to assess and are asked to provide a written opinion on the work. The various reviewers never convene as a group. This type of review is typically used for journal manuscript review, but is also applied to proposal and retrospective evaluation reviews.

Panel of experts – individuals are specially selected for their particular expertise as it relates to the subject/entity under review. The group comes together only for the purpose of a single review. This approach is usually applied to proposal or evaluation peer review.

Standing committee – individuals are asked to sit on a committee for a set term, for example three years. During this timeframe they may be asked to participate in a number of reviews of different projects/programs. Standing committees tend to include individuals with a broad range of expertise covering many subject areas. This method is frequently used to review proposals or past performance.

1.4 Challenges Identified in the Literature

While peer review is a valued and accepted means to assess scientific quality, it is not without shortcomings. Most of the published literature focuses on journal manuscript and proposal review; nevertheless, some common challenges have been documented around the use of peer reviews, regardless of the purpose. It is broadly recognized that peer review, by its nature, is subjective. Bias is possible due to various factors, including the three “sins” to which peer reviewers are susceptible: envy, favouritism, and the temptation to plagiarize [5].
These are reflected in some areas of concern which have been identified around the use of peer reviews, including: leakage of ideas (deliberate or subconscious incorporation of ideas proposed in work being reviewed into the reviewer’s own work); conservatism (reduced likelihood of presenting radical ideas if it is believed that they will have less chance of being funded); neutrality (difficulty in assembling a review panel that is both knowledgeable and neutral); transparency (need to maintain integrity in the process); halo effect (prestigious applicants are automatically judged more favourably by lesser-known colleagues); and fraud (sometimes unpreventable) [5] [6] [7]. Given the difficulty associated with detection and measurement around such issues as plagiarism and fraud, these challenges are not always easy to identify and to address. Not surprisingly, then, interest has been given to the topic of ethics and peer reviews [5] [8].

Alternatives suggested in the literature as a means to address some of the bias and ethical issues include: open reviews (all names are known to all parties) [10] [7], fully anonymous (blind) reviewing [7], open publishing via the internet to allow multiple experts to comment [11] [7] and productivity-based formula systems that look at track record (e.g., publications, advanced degrees) [9].

NRC has experienced many of the challenges outlined in the literature first-hand and has developed several approaches to minimize their influence on the process. Described in further detail later in this paper are: subjective bias, confidentiality, conflict of interest, supporting materials required for reviews, level of commitment, timing, costs, and reviewer fatigue.

2.0 Peer Review at the National Research Council of Canada

2.1 The National Research Council of Canada (NRC)

NRC is one of 39 science-based departments and agencies within the Canadian government.
It is the principal scientific research body conducting intramural research within the federal system. Total expenditures for the organization in 2004 were $712M (Canadian). NRC comprises 18 research Institutes across the country. An Institute is a collection of programs that have a specific scientific or technology focus. NRC’s Institutes engage in leading-edge research in a broad range of scientific and technical areas. These include: nanotechnology, aerospace, biotechnology, information technology, molecular sciences, photonics, ocean engineering, and environmental technologies.

NRC’s activities span the innovation spectrum. It conducts basic research, creating new knowledge and developing technology platforms. NRC also supports Canadian industry through fee-for-service and collaborative research projects. NRC additionally plays a key role in supporting innovation in Canada through other means, including its role as a catalyst for the development and growth of technology clusters – dynamic regional economies/businesses built up around a core of highly skilled scientific expertise and infrastructure.

2.2 Uses of Peer Review at NRC

At NRC, different methods of peer review have been utilized for a variety of purposes in recent years. Peer reviews have ranged from simpler paper-based approaches to the more complex two-tiered process combining both mail-in reviews and panels of experts. NRC has used peer review at various levels of the organization, looking at Institute programs (a collection of projects) right down to individual projects. The peer review methodology has been used to determine the feasibility of future programs/projects as well as assess past performance of ongoing research. Peer review has been used on its own and in combination with other methods (e.g., literature review, interviews).

In 2005, NRC employed over 2000 researchers working in diverse areas ranging from astrophysics to biochemistry.
In spite of access to a large internal pool of specialized researchers, the organization draws solely on external expertise to conduct its peer reviews. The reasoning behind this decision is that it is considered inappropriate for researchers within the organization to comment on the work of their colleagues. Consequently, NRC recruits reviewers from other organizations within Canada in addition to entities abroad. Peers are drawn from academia, other government laboratories as well as the public sector. Attention is paid to the selection of well-qualified, credible reviewers who have reputations for objectivity and neutrality.

For NRC, as a science-based organization, peer review is a particularly important methodology because it provides external opinions on whether proposed or past research is considered “world class” or “cutting edge”. Peer review can improve both the technical quality of projects and the credibility of the decision-making [2]. NRC values the judgements of its research provided by peers and there is evidence that significant decisions relating to the reorientation of research areas have been made over the years by senior management based on recommendations resulting from peer reviews.

Over the past five years, NRC has observed increasing demands on scientists to participate in a growing number of reviews. In Canada, there is a move towards providing more accountability for the use of federal government funds and peer review is seen as the best method of providing an assessment of R&D activities. Canadian programs such as Genome Canada, the primary funding source relating to research in the areas of genomics and proteomics, and Canada Research Chairs, aimed at attracting and retaining some of the world's most accomplished researchers, have also adopted peer review as a key component in their decision-making processes [12].
Correspondingly, in 1993, the US Congress passed the Government Performance and Results Act, which requires federal agencies to engage in program and performance assessment. In order to meet the requirements of this Act, many science-based R&D organizations are relying on various forms of peer review to justify and evaluate their performance. Furthermore, a report by the Committee on Science, Engineering and Public Policy concluded that the most effective means of evaluating federally funded research programs is through the use of a peer review process [13]. Consequently, there has been a marked increase in the number of requests received by US experts to serve on various reviewing committees and panels.

As a result of these developments, NRC and others are finding it increasingly difficult to find peers willing and able to participate in reviews. NRC has been looking for ways to improve its processes and develop more streamlined approaches that make the most efficient use of the time of those involved, both the researchers performing the review and those being reviewed. Access to qualified experts, however, is not the only challenge facing the peer review methodology. Peer reviews have multiple facets that make them difficult to manage. The next section elaborates on other challenges that NRC has experienced in recent years.

3.0 Peer Review Challenges Encountered by the National Research Council of Canada

Some of the challenges that NRC has encountered, mainly in relation to peer review as an evaluation methodology, are highlighted in this section.

3.1 Subjective Bias

Subjective bias is inherent in peer review results because of the need to rely on human judgement [11]. Peer review results can be substantially affected by the level of knowledge or lack of independence of the peers that participate. Level of “excellence” is dependent on each individual’s background and past experiences [14].
NRC takes various actions to minimize the effect of subjective bias. Firstly, the organization tends not to use peer review in isolation as the sole approach for assessment. In evaluation peer reviews, it is usually combined with other lines of evidence, such as interviews and analysis of performance data, so that key findings are balanced with other sources of information. Secondly, citation statistics are occasionally included in the documentation sent to reviewers, as an objective measure to aid in their judgements (notwithstanding other challenges that come into play with citation measures, which are out of the scope of this paper). Thirdly, NRC recognizes the extreme importance of selecting individuals that are known to be objective and credible and do not have preconceived opinions about the research under review. NRC commits substantial effort to the identification of individuals with the appropriate credentials by consulting with upper management, requesting input from participants of previous reviews and searching various web sites (e.g., award/prize winners, editorial boards).

Subjective bias remains a difficult challenge to manage and one that cannot entirely be resolved. At some point it becomes necessary to trust scientists to deliver an objective review based on their desire not to diminish their own reputation as a credible and fair-minded reviewer.

3.2 Confidentiality Issues

One of the concerns often cited in the literature is the potential for peers to exploit knowledge gained during a review for their own benefit [5], [6]. A reviewer necessarily obtains information and insight about the research under review. Reports highlighting data and describing processes and techniques in use must be shared with reviewers in order for them to form an opinion about the work. The challenge is to keep the material shared with reviewers confidential and safe from being used inappropriately.
NRC is not aware of any profiting from access to its privileged information through peer review activities. Nevertheless, to guard against information presented as part of the review process being used by reviewers for other purposes, NRC requires its reviewers to sign confidentiality agreements. The agreement specifies that the information provided, as part of the review process, cannot be shared with others or used outside the confines of the review. NRC stipulates that the review materials must be returned or destroyed in a secure manner when the review is completed. By requiring reviewers to sign this document, the confidential nature of the information is explicitly brought to their attention. Unfortunately, this serves only as a precautionary measure as it is difficult to enforce. Legal opinion advises that the best defence for keeping particularly sensitive information confidential and ensuring that proprietary information is not misused is to not disclose it to reviewers.

3.3 Conflict of Interest

Peers must be able to act objectively, honestly and in good faith. Individuals should not participate in a review where their own interests conflict with their responsibilities as a reviewer. Reviewers are chosen because of their scientific knowledge of the research areas being assessed. It is often not possible to find peers that are both unbiased and sufficiently knowledgeable in the area under review [6]. To minimize the effects of conflict of interest, NRC sends all reviewers a form for their signature. This document requests declaration of any real, potential or apparent conflict of interest to the peer review coordinator prior to the review or at any time during the review.

A particular challenge for NRC is identifying appropriate industry representatives that can serve as peer reviewers and not be put in a conflict of interest situation.
Participation by members of the private sector is seen as increasingly important as the federal government moves to expand its links to the wealth creation sector of the economy. A large number of NRC’s Institutes work closely with industry through collaborative partnerships. This creates close ties between many of the Institutes and Canadian industry. These close working relationships with companies make it difficult to identify appropriate industry representatives that could serve as objective peer reviewers. This difficult position has regrettably led to minimal representation from industry on many of NRC’s review panels.

The source of reviewer names is another issue with which NRC wrestles. It is advantageous to use reviewers that have a certain level of knowledge and familiarity with the program under review. Those whose research is under review are the most familiar with experts in their field; however, asking these individuals to provide names of potential reviewers can place them in a conflict of interest situation. Researchers will tend to provide names of reviewers who are “friendly” or are known to be supportive of the projects being evaluated. To alleviate this challenge, it is best if names suggested by the scientists who will be reviewed are augmented with names from other sources.

In Canada, there is no central database that can be drawn upon to identify reviewers and their areas of expertise. The Canadian granting agencies (e.g., Natural Sciences and Engineering Research Council) do have databases containing contact and area of expertise information on each of their applicants; however, these are not public and NRC cannot access them directly. In the recent past, NRC has collaborated with two of these funding agencies with some degree of success. The organizations were able to provide NRC with the names of a number of prospective reviewers and the possibility remains to expand on this approach in the future.
Other sources of potential names that have been used by NRC are web sites of university professors working in similar areas, listings of recent top prize winners in related research areas and editorial boards of journals in which the scientists under review publish.

3.4 Supporting Material

A key component supporting the peer review process is the provision of relevant background documentation. Sufficient details about the project/program under review must be assembled and sent to reviewers to allow for an informed, valid assessment to be completed. The challenge is determining the appropriate type of materials and the quantity that should be sent. Existing documentation has usually been written with a certain audience in mind and is frequently of limited applicability to a peer review. To complete the assessment most efficiently, reviewers need customized information. They often find it frustrating when extraneous information about the program is included. In cases when information was not customized, NRC has received feedback from reviewers indicating they found it difficult to conduct the review because of the poor quality of the materials provided. To deal with these challenges, NRC attempts to tailor materials sent to reviewers whenever possible.

3.5 Level of Commitment

The level of commitment individuals make to a review is influenced by several factors, many of which are difficult to control. Firstly, different types of reviews require different levels of commitment. A paper-based, mail-in review can be completed without having to travel to the institution under review and without the same time constraints imposed by a site visit. Participating as a committee member requires individuals to factor in the time needed to travel to and from the organization, and to be available for a number of consecutive days.
There is, however, a sense of community that results from being part of a committee that does not exist if reviewers never assemble face-to-face. Reviewers who serve on a committee or panel style review have an obligation to each other, as well as to the entity under review, to come to meetings prepared, conduct themselves in an objective manner and provide neither overly negative nor overly positive comments. NRC has clearly observed the different levels of commitment from reviewers resulting from the type of review used. Unfortunately, the style of review is often dependent on factors such as timing and cost, so it is not always feasible to use a committee-type review to raise the level of reviewers’ dedication to a project.

Secondly, the concept of anonymity can be linked to level of commitment. It is commonplace to keep the identity of reviewers from those undergoing the review. If the identity of reviewers is not known, there is less motivation to meet the deadline and provide a quality review, as there is no possibility of damage to the individual’s reputation if a mediocre review is submitted. Conversely, if a reviewer’s identity is disclosed, there is less incentive to be frank, open and honest. NRC has used both approaches and has observed the consequences to the level of commitment. For mail-in reviews, historically NRC has kept the identity of reviewers confidential. In the case of panels or committees, the identity of individuals is automatically revealed through the interactions the members have with the researchers under review.

Thirdly, the reputation or status in the scientific community of the submitting institution influences the commitment of reviewers. The higher the visibility or profile of the program, the greater the interest will be in participating in the review. NRC has experienced this challenge first-hand. With some of its lesser-known programs it has had more difficulty securing individuals to participate in a review.
This is problematic, as an evaluation of lesser-known or newer programs is often more crucial than one of programs that have an established reputation. NRC has yet to develop a solution for this challenge.

Finally, a certain level of commitment from reviewers can potentially be obtained by providing them with an honorarium. Although many organizations pay a nominal amount of money to their reviewers, it is NRC’s policy not to provide compensation beyond covering travelling expenses. This is with the recognition that responsibility for peer review is a professional obligation and that the reviewer will, at some point, also need his/her work assessed by peers. This stance places NRC at a certain disadvantage, particularly if individuals receive other requests to participate in reviews for which a payment is provided. A possible resolution for NRC might be to revise this policy and offer honoraria to its reviewers in the future.

3.6 Timing

There are several challenges related to timing of peer review, both from the perspective of a coordinator and a reviewer. A significant amount of time is needed to plan and execute a peer review, regardless of the approach taken. In the planning stage, NRC’s experience has been that a minimum of four months is needed as lead-time between sending the invitation to participate and the site visit date. This upfront time is reduced to two months between securing individuals and sending out materials if a mail-in review is conducted.

Another aspect of timing is that it tends to be more difficult to attract individuals who are top in their field if a significant amount of time must be committed to the review. Paper-based reviews demand less time to complete than panel or committee style assessments, both from the standpoint of the reviewers and the entity under review.
However, mail-in reviews do not offer the opportunity for those under review to provide clarity, correct misunderstandings or rectify erroneous interpretations, as there is no direct interaction amongst the parties. Participating as a panel or committee member requires a greater time commitment. Panel members not only attend the site visit but also commit time before the meeting to review documentation and additional time after the visit has concluded to complete the report. It is NRC’s experience that it is becoming impractical to engage committee members in writing the report once they have returned to the pressures of their office. To deal with this, NRC has incorporated into its site visit agendas time not only for in-camera committee discussions but also for the committee to write the first draft of the report.

The timing of the review during the calendar year may also become a challenge. Often, it is desirable to conduct a review at a particular time of the year which may not necessarily be conducive to reviewers’ schedules. For instance, reviewers that are drawn from the university sector have predetermined commitments during the academic calendar (e.g., the beginning of the semester, examination time) which prevent them from taking on additional duties. The availability of scientific experts is also reduced at points during the year when granting agencies call for proposals. These times make it difficult for those either submitting or reviewing proposals to make a commitment to participate in another peer review project, for instance at NRC. To overcome these issues, NRC attempts to schedule its peer reviews at points during the year that are less likely to coincide with these other events.

3.7 Costs

There can be substantial costs associated with peer review, more so when convening a group of people for a site visit over several days.
The costs are somewhat dependent on the extent to which a panel has international representation and therefore the distance people are required to travel. As mentioned above, some organizations pay honoraria which need to be factored into the overall expenditures as well. In addition to these very real expenses, there are other costs involved in peer review if the process is looked at in its entirety. Besides travel, honoraria and hospitality charges to host a committee, there are the costs associated with lost productivity for each individual while they are away from their office participating in a site visit. In NRC’s case, typically $40K - $50K (Canadian) is budgeted for peer reviews to cover travel and hospitality expenses (as noted above, NRC does not pay honoraria to its reviewers).

3.8 Reviewer Fatigue

As discussed above, more and more S&T organizations around the world are looking to peer review to answer questions about quality, relevance and future directions. As the demands for reviewers increase exponentially, it becomes more and more difficult to find individuals in specialized fields who are willing and available to participate in reviews. NRC has yet to overcome this challenge but the organization is looking at possible ways in which Institute advisory boards might play a role in the peer review process. Presently these standing committees have a mandate to provide external guidance on the long-term directions of NRC's Institutes; however, there may be a possibility in the future to enlarge their role to become engaged in evaluation peer reviews.

4.0 Case study – Peer Review Used for Project Selection and Evaluation of Past Performance

4.1 Description of the Genomics and Health Initiative (GHI)

NRC launched the Genomics and Health Initiative (GHI) in 1999 to exploit advances in genomics and health, building on NRC's expertise in its biotechnology research Institutes and its regional innovation networks across Canada. GHI is a complex initiative comprising eight research programs cutting across 10 different NRC Institutes and involving approximately 400 research staff. Annual expenditures for the Initiative are in the order of $25M (Canadian). GHI research advances fundamental and applied technical research in areas such as the diagnosis of disease, aquaculture and agricultural crop enhancement. In addition to research collaborations, GHI focuses on transferring the knowledge developed at NRC in genomics and health to a variety of industry sectors.

The initiative falls within the responsibility of NRC’s Vice-President Life Sciences and is managed on a day-to-day basis by a coordination office. GHI also has a standing committee of external experts (referred to as the Expert Panel) that provides advice to NRC senior management on scientific and management issues relating to the initiative.

4.2 Description of How Peer Review Was Used

GHI is presently funded in phases that are three years in length. Research programs for each new phase are chosen through a competitive peer review process. In addition to the cyclical competitive process, as an agency of the federal government, NRC is required to conduct periodic evaluations of the initiative. These evaluations are conducted to provide NRC senior executives, and the federal government at large, with information on progress, quality, and management issues. Below is a more detailed description of how proposal and evaluation peer review were recently implemented.

4.3 Review of GHI Proposals

Since its inception, GHI has used a rigorous competitive process to select research programs. Programs are assessed against both scientific and market-relevant criteria (e.g., originality of the proposed research and the expected results; contribution to recruitment and retention of highly qualified personnel; and contribution to long-term economic growth and enhancement of productivity for firms operating in Canada). The competition is used to ensure that the programs that will provide the most value for Canada, both from a scientific and economic perspective, are selected.

The first step in the proposal process is the writing and submission of letters of intent (LOI) by principal investigators. These documents contain a summary of the proposed research, a description of the potential market applications and a listing of the planned research team. The LOIs are reviewed by members of the Expert Panel, who provide written comments regarding the feasibility and quality of the proposed work and a recommendation as to whether a full proposal should be submitted.

If it is determined that the proposed research should move on to the next stage, a full-length proposal is written and submitted for assessment. Each proposal is sent to a minimum of three external reviewers who are asked to provide written comments concentrating on the scientific merit and quality of the proposal. Once this feedback has been received, the Expert Panel meets to discuss the merits of each proposal in relation not only to scientific excellence but also to market potential. Applicants are given an opportunity to meet with the panel to respond to concerns and questions. At the completion of a two-day meeting, the panel provides recommendations regarding which proposals should be funded and what resources should be allocated.
This information is provided to NRC’s Vice Presidents, who are responsible for final program approval and funding allocation decisions. Figure 1 depicts the proposal process.

Figure 1 - GHI Proposal Process: Formal web posting of GHI guidelines and criteria; Letters of Intent (LOI) prepared and submitted by Principal Investigators (PIs); LOIs reviewed by Expert Panel (EP); Proposal preparation workshop; Proposals prepared by PIs; Proposals reviewed by external reviewers; EP reviews proposals and provides recommendations; VPs review EP recommendations and approve new programs.

This rigorous process, in which proposals are reviewed first by individuals with specialized, in-depth scientific knowledge of the research area and then by an expert panel with management and industry experience, results in the “best” science being funded, in terms of originality and commercial viability. Final decisions made by NRC senior executives ensure future projects are aligned with the priorities of the organization.

4.4 Evaluation of GHI Programs

The recent evaluation of GHI examined issues around ongoing relevance or rationale for the initiative; the extent to which the activities of GHI led to the achievement of the initiative’s objectives; whether the most appropriate and efficient means were used to achieve objectives; and the appropriateness and effectiveness of program delivery. The project applied several methodologies to gain insights into these evaluation issues, including a review of internal documents, published literature and performance data; impact studies; interviews; and an international comparison with other organizations. A paper-based, mail-in peer review was chosen to obtain external feedback on the progress made by the research programs.
A set of peers, external to NRC, were asked to provide their opinions and comments on the scientific progress made by the programs over the past three years in comparison to the original objectives, scope of work, deliverables and resources expended. The initial intent was to recruit a minimum of three external reviewers per GHI program (24 in total). Due to challenges in recruiting reviewers and difficulties in receiving completed reviews back, only one program out of the eight had three reviews returned. Fifty-five individuals were contacted to participate; of those, 21 agreed to act as reviewers and 14 reviews were returned to NRC.

Reviewers were sent two main documents to complete their assessment: the research program charter (which contained the original program objectives and planned timelines) and a document that summarized the results the program had achieved to date (based on existing annual performance reports). Once the individual reviews were received, they were sent to members of the same Expert Panel used in the proposal peer review, who summarized the results. Only the summaries for each program were used in the final evaluation report. The individual reviewers’ comments as well as the identity of the reviewers were kept confidential.

4.5 Challenges and Opportunities of this Approach

These two approaches highlight some of the challenges of peer review but also identify some opportunities for NRC. There were two key difficulties with this approach. The greatest challenge encountered was the short timeframe between the two peer reviews: the evaluation project followed the proposal review by only 12 months, making it difficult to recruit the required number of reviewers for the evaluation. Individuals most qualified to participate had already contributed as part of the earlier proposal review.
The evaluation team was therefore required to request the participation of experts who were not as familiar with GHI and whose expertise was on the periphery of the research under review. As highlighted above, both of these issues affect the level of commitment reviewers have for a project. It is believed the low review return rate for the evaluation (67%, or 14 of the 21 reviewers who agreed to participate) was a direct result of the low profile GHI had with the reviewers recruited. It is apparent from this experience that two different approaches to peer review on the same initiative should not be attempted within such a short timeframe. In cases where both proposals and past performance must be assessed, the two should be integrated to make optimum use of the review.

The second challenge related to the materials provided to reviewers for the evaluation. Both documents had been previously prepared to serve other purposes, making it difficult for reviewers to satisfactorily answer the questions asked of them. As a result, the completed reviews were not as detailed as originally expected.

One of the opportunities that became apparent from this approach is the benefit of having a standing committee in place. A standing committee establishes a familiarity and sense of obligation to the program that does not exist when individuals are contacted to participate in a single review. In the case of the GHI reviews, having access to these experts allowed more comprehensive reviews to be completed in a shorter timeframe because standing committee members had a high level of commitment to the initiative.

These two back-to-back reviews of GHI highlight how important the peer review approach is to a research-based organization such as NRC. Peer review can provide not only a judgement of the quality, feasibility and future applicability of research but also a credible retrospective assessment of past research that other methodologies cannot provide.
5.0 Conclusion

There is no refuting that the current peer review system faces a number of challenges. Although some have come forward with suggestions to modify the peer review system, few of these have been implemented to any great degree [7], [9], [11]. None of the proposed approaches helps to reduce the pressure on the system resulting from the growing volume of peer review requests. Despite the difficulties with the present system, peer review has the support of most researchers [6], [11]. It is still viewed as the most appropriate means to assess the scientific merits of past and future work.

For this reason, NRC will continue to use peer review for the foreseeable future. Peer review remains the most appropriate means to answer questions relating to quality and level of scientific excellence for project selection and assessment of past performance. Based on its experiences and lessons learned, NRC strives to refine its methodology and streamline its techniques in the context of the many challenges the peer review approach presents. Future considerations for NRC include formalizing its relationships with the science-based granting agencies to gain easier access to their expert databases, reconsidering its policy of not paying honoraria to reviewers, and incorporating standing committees into its peer review approaches.

References

[1] United States General Accounting Office. March 1999. Federal Research – Peer Review Practices at Federal Science Agencies Vary. Report to Congressional Requesters, GAO/RCED-99-99.
[2] National Research Council. 1998. Peer Review in Environmental Technology Development Programs. Committee on the Department of Energy – Office of Science and Technology’s Peer Review Program, Washington, D.C.: National Academy Press, http://www.nap.edu/catalog/6408.html (accessed 24 February 2006).
[3] Brown, T., Peer Review and the Acceptance of New Scientific Ideas – Discussion Paper from a Working Party on equipping the public with an understanding of peer review, November 2002-May 2004, Sense About Science, May 2004, http://www.senseaboutscience.org.uk/PDF/peerReview.pdf (accessed January 2006).
[4] Guston, D. H., The Expanding Role of Peer Review Processes in the United States, 4 Public Research, innovation and Technology Policies in the US, pp. 4-31-4-41.
[5] Abate, T., What’s the verdict on peer review?, Ethics in Research, 21stC, http://www.columbia.edu/cu/21stC/issue-1.1/peer.htm (accessed 08/12/2003).
[6] The Royal Society (UK). 1995. Peer Review – An assessment of recent developments, http://www.royalsoc.ac.uk/displaypagedoc.asp?id=11423 (accessed 3 January 2006).
[7] Kundzewicz, Z.W., Koutsoyiannis, D., Editorial – The Peer Review System: Prospects and Challenges, Hydrological Sciences Journal, Vol. 50, No. 4, 577-590.
[8] Armstrong, J.S., Peer Review for Journals: Evidence on Quality Control, Fairness, and Innovation, Science and Engineering Ethics, Vol. 3, 1997, 63-84.
[9] Kostoff, R. N., 1997b, Research Program Peer Review: Principles, Practices, Protocols, http://www.dtic.mil/dtic/kostoff/peerweb1l.html (accessed in July 2002).
[10] Walsh, E., Rooney, M., Appleby, L., Wilkinson, G., Open Peer Review: A Randomised Control Trial, British Journal of Psychiatry, Vol. 176, 2000, 47-51.
[11] McCook, A., Is Peer Review Broken?, The Scientist, Vol. 20, Issue 2, 2006, p. 26.
[12] Brzustowski, T., Is Peer-Review Fatigue Setting In?, NSERC Contact, Summer 2000, Vol. 25, No. 2, ISSN 1188-066X.
[13] National Academy of Sciences. February 1999. Evaluating Federal Research Programs: Research and the Government Performance and Results Act. Committee on Science, Engineering and Public Policy, Washington, D.C.: National Academy Press.
[14] Finn, C. E. Jr., The Limits of Peer Review, Education Week, May 8, 2002.