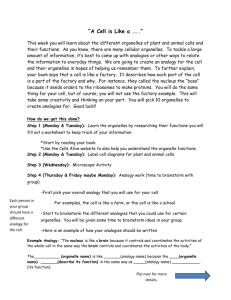

It's like, you know - American Statistical Association
