

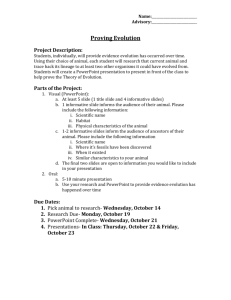

Informative Content Extraction By Using EIFCE [Effective Informative Content Extractor]

ABSTRACT

Internet web pages contain many items that cannot be classified as "informative content", e.g., search and filtering panels, navigation links, and advertisements. Most clients and end users look for the informative content and largely ignore the non-informative content. The need for Informative Content Extraction from web pages is therefore evident. Web Informative Content Extraction requires two steps: Web Page Segmentation and Informative Content Extraction. DOM-based Segmentation Approaches often cannot provide satisfactory results, and Vision-based Segmentation Approaches also have drawbacks. This paper therefore proposes the Effective Visual Block Extractor (EVBE) Algorithm to overcome the problems of DOM-based Approaches and reduce the drawbacks of previous work in Web Page Segmentation. It also proposes the Effective Informative Content Extractor (EIFCE) Algorithm to reduce the drawbacks of previous work in Web Informative Content Extraction. Web Page Indexing Systems, Web Page Classification and Clustering Systems, and Web Information Extraction Systems can achieve significant savings and satisfactory results by applying the Proposed Algorithms.

Existing System

• The problem of information overload:
  – Users have difficulty assimilating the knowledge they need from the overwhelming number of documents.
• The situation is even worse if the needed knowledge is related to a temporal incident:
  – The published documents must be considered together to understand how the incident developed.

Proposed System

For effective Informative Content Extraction, the web page must first be segmented correctly into semantic blocks. By applying the Proposed EVBE Algorithm, blocks such as BL3 and BL4 can be extracted easily.
However, the VIPS algorithm cannot segment BL3 and BL4 as separate blocks when the Permitted Degree of Coherence (PDoC) value is low; in that case it treats them as a single block. It separates them only when the PDoC value is high, but a high PDoC also splits the page into many small blocks, even where several of them should form a single block, which is unreasonable and inconvenient for further processing. BL3 contains the informative content of the web page while BL4 contains none, so the content of the two blocks differs in nature and they should be segmented as separate blocks. The rules of the EVBE Algorithm can reduce these drawbacks of previous work and help to obtain finer results in Web Page Segmentation.

Some earlier solutions proposed DOM-based Approaches to extract the informative content of a web page. Unfortunately, DOM tends to reveal presentation structure rather than content structure, and is often not accurate enough to extract the informative content of the web page. CE needs a learning phase for Informative Content Extraction from web pages, so it cannot extract the informative content from a single, arbitrary input web page. FE can identify the Informative Content Block of a web page only if the page has a dominant feature. The Proposed Approach therefore introduces the EIFCE Algorithm, which can extract informative content that is not necessarily the dominant content, without any learning phase, from a single arbitrary page. It simulates how a user understands the layout structure of a web page from its visual representation. Compared with DOM-based Informative Content Extraction Approaches, it uses visual cues to obtain a better extraction of the informative content of the web page at the semantic level.
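The PDoC trade-off described above can be illustrated with a toy model. This is not VIPS or EVBE: it assumes each block already carries a DoC value (on an assumed 1 to 10 scale, higher meaning more coherent) instead of computing it from visual cues, and the names `Block`, `segment`, and the `BL3+BL4` parent block with its DoC values are hypothetical. It only shows how the PDoC threshold decides where splitting stops.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    """A node in a toy vision-based content structure (hypothetical model)."""
    name: str
    doc: int  # Degree of Coherence, assumed given; higher means more coherent
    children: List["Block"] = field(default_factory=list)


def segment(block: Block, pdoc: int) -> List[str]:
    """Return the leaf blocks produced when splitting stops at the given PDoC.

    A block is kept whole once its DoC is not smaller than the PDoC,
    or when it has no finer sub-blocks to split into.
    """
    if block.doc >= pdoc or not block.children:
        return [block.name]
    leaves: List[str] = []
    for child in block.children:
        leaves.extend(segment(child, pdoc))
    return leaves


# Hypothetical page: BL3 (informative) and BL4 (non-informative) share a
# weakly coherent parent; the footer is coherent but made of tiny link blocks.
page = Block("Page", 2, [
    Block("Header", 7),
    Block("BL3+BL4", 5, [Block("BL3", 8), Block("BL4", 8)]),
    Block("Footer", 7, [Block("Link1", 10), Block("Link2", 10)]),
])

print(segment(page, 4))  # ['Header', 'BL3+BL4', 'Footer']
print(segment(page, 8))  # ['Header', 'BL3', 'BL4', 'Link1', 'Link2']
```

With the low PDoC, BL3 and BL4 come back as one merged block; with the high PDoC they are separated, but the footer is also broken into individual link blocks, which is the over-segmentation the text describes.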
The efficient rules of the Proposed EVBE Algorithm in the Web Page Segmentation Phase can help to obtain finer results in Web Informative Content Extraction.

MODULES

1. Text Segmentation
2. Text Summarization
3. Web Page Segmentation
4. Informative Content Extraction

Modules Description

1. Text Segmentation

The objective of text segmentation is to partition an input text into non-overlapping segments such that each segment is a subject-coherent unit and any two adjacent units represent different subjects. Depending on the type of input text, segmentation can be classified as story boundary detection or document subtopic identification. The input for story boundary detection is usually a text stream.

2. Text Summarization

Generic text summarization automatically creates a condensed version of one or more documents that captures their gist. Because a document's content may contain many themes, generic summarization methods concentrate on increasing the summary's diversity to provide wider coverage of the content.

3. Web Page Segmentation

Several methods have been explored to segment a web page into regions or blocks. In the DOM-based Segmentation Approach, an HTML document is represented as a DOM tree. Useful tags that may represent a block in a page include P (for paragraph), TABLE (for table), UL (for list), H1 to H6 (for heading), etc. DOM in general provides a useful structure for a web page, but tags such as TABLE and P are used not only for content organization but also for layout presentation. In many cases, DOM tends to reveal presentation structure rather than content structure, and is often not accurate enough to discriminate different semantic blocks in a web page. The drawback of this method is that such a layout template cannot fit all web pages. Furthermore, the segmentation is too coarse to exhibit semantic coherence.
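A minimal sketch of the DOM-based approach, using Python's standard `html.parser` module, treats each outermost P, TABLE, UL, or H1 to H6 element as one block. The class and function names are hypothetical, and the parser assumes well-formed markup with explicit closing tags. The second example shows the weakness described above: when TABLE is used for layout, navigation links and the main text fall into the same block.

```python
from html.parser import HTMLParser
from typing import List, Tuple

# Tags the text lists as candidate block delimiters (HTMLParser lowercases tag names).
BLOCK_TAGS = {"p", "table", "ul", "h1", "h2", "h3", "h4", "h5", "h6"}


class DomBlockSegmenter(HTMLParser):
    """Naive DOM-based segmenter: each outermost block tag becomes one block."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[Tuple[str, str]] = []  # (tag, text) pairs
        self._open: List[str] = []  # stack of currently open block tags
        self._text: List[str] = []  # text fragments of the current outermost block

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self._open.append(tag)

    def handle_endtag(self, tag):
        # Only pop when the closing tag matches; assumes well-formed markup.
        if tag in BLOCK_TAGS and self._open and self._open[-1] == tag:
            self._open.pop()
            if not self._open:  # the outermost block just closed
                self.blocks.append((tag, " ".join(self._text)))
                self._text = []

    def handle_data(self, data):
        if self._open and data.strip():
            self._text.append(data.strip())


def dom_blocks(html: str) -> List[Tuple[str, str]]:
    """Split an HTML string into (tag, text) blocks."""
    parser = DomBlockSegmenter()
    parser.feed(html)
    parser.close()
    return parser.blocks


flat_page = "<h1>News</h1><p>Main story text.</p><ul><li>Home</li><li>Sports</li></ul>"
layout_page = ("<table><tr><td><ul><li>Home</li></ul></td>"
               "<td><p>Main story text.</p></td></tr></table>")

print(dom_blocks(flat_page))    # [('h1', 'News'), ('p', 'Main story text.'), ('ul', 'Home Sports')]
print(dom_blocks(layout_page))  # [('table', 'Home Main story text.')]
```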
Compared with the above segmentation, Vision-based Page Segmentation (VIPS) excels in both an appropriate partition granularity and coherent semantic aggregation. By detecting useful visual cues based on the DOM structure, it obtains a tree-like, vision-based content structure of a web page. The granularity is controlled by the Degree of Coherence (DoC), which indicates how coherent each block is. VIPS can efficiently keep related content together while separating semantically different blocks from each other. Visual cues such as font, color, and size are used to detect blocks. Each block in VIPS is represented as a node in a tree: the root is the whole page; inner nodes are the top-level, coarser blocks; child nodes are obtained by partitioning the parent node into finer blocks; and all leaf nodes together form a flat segmentation of the web page with an appropriate degree of coherence. The VIPS algorithm stops according to the Permitted DoC (PDoC), which acts as a threshold indicating the finest granularity that is considered satisfactory. The segmentation stops only when the DoC of every block is not smaller than the PDoC.

4. Informative Content Extraction

Informative Content Extraction is the process of determining the parts of a web page that contain its main textual content. A human user performs some kind of Informative Content Extraction almost naturally when reading a web page, ignoring the parts with additional non-informative content, such as navigation, functional and design elements, or commercial banners (at least as long as they are not of interest). Although this is a relatively intuitive task for a human user, determining the main content of a document automatically turns out to be difficult. Several approaches address the problem under very different circumstances.
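As a point of comparison, a common generic heuristic (not the EIFCE Algorithm, whose rules are not given in this section) picks the block with the most text and the lowest link density. The sketch assumes the blocks come from an earlier segmentation step and that each block's anchor text is already known; `Segment`, `link_density`, `score`, and `informative_block` are hypothetical names, and the scoring formula (word count times one minus link density) is an illustrative assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """A block from the segmentation step (hypothetical structure)."""
    name: str
    text: str            # all visible text in the block
    link_text: str = ""  # the part of `text` that sits inside <a> elements


def link_density(seg: Segment) -> float:
    """Fraction of the block's characters that are anchor text (1.0 for empty blocks)."""
    total = len(seg.text)
    return len(seg.link_text) / total if total else 1.0


def score(seg: Segment) -> float:
    """Reward long text and penalize link-heavy blocks such as navigation menus."""
    return len(seg.text.split()) * (1.0 - link_density(seg))


def informative_block(segments: List[Segment]) -> Segment:
    """Return the highest-scoring block as the assumed informative content block."""
    return max(segments, key=score)


blocks = [
    Segment("nav", "Home News Sports Contact", "Home News Sports Contact"),
    Segment("article", "The council approved the new budget on Monday "
                       "after a long debate about school funding."),
    Segment("ad", "Buy now and save", "Buy now and save"),
]

print(informative_block(blocks).name)  # article
```

A heuristic like this fails in exactly the case the text attributes to FE: when the informative block is short or is not the dominant block, a length-based score will pick the wrong one.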
Informative Content Extraction is used extensively, for example, in applications that rewrite web pages for presentation on small-screen devices or for access via screen readers by visually impaired users. Some applications in the fields of Information Retrieval, Information Extraction, Web Mining, and Text Summarization use Informative Content Extraction to pre-process the raw data in order to improve accuracy. Under these circumstances, the extraction clearly has to be performed by a general approach rather than by a solution tailored to one particular set of HTML documents with a well-known structure.

System Configuration

H/W System Configuration:

• Processor: Pentium III
• Speed: 1.1 GHz
• RAM: 256 MB (min)
• Hard Disk: 20 GB
• Floppy Drive: 1.44 MB
• Keyboard: Standard Windows Keyboard
• Mouse: Two or Three Button Mouse
• Monitor: SVGA

S/W System Configuration:

• Operating System: Windows 95/98/2000/XP
• Application Server: Tomcat 5.0/6.X
• Front End: HTML, Java, JSP
• Scripts: JavaScript
• Server-side Script: Java Server Pages
• Database: MySQL
• Database Connectivity: JDBC

CONCLUSION

Web pages typically contain non-informative content, i.e., noise that can negatively affect the performance of Web Mining tasks. Automatically extracting the informative content of a page is an interesting problem. By applying the Proposed EVBE and EIFCE Algorithms, the informative content of the web page can be extracted effectively. Automatically extracting the Informative Content Block from web pages can help increase the performance of Web Mining tasks. An empirical evaluation of the Proposed Approach is planned as future work.