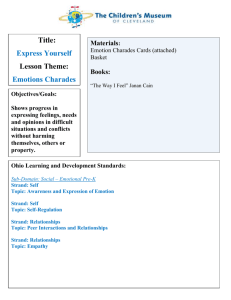

expression of emotions: cues for emotion recognition.


Chapter 3 EXPRESSION OF EMOTIONS: CUES FOR EMOTION RECOGNITION

3.1 Introduction

The purpose of this work is the classification of emotions through certain attributes of spoken communication. It is therefore worthwhile to give a short overview of how emotions are expressed in human communication and, in particular, how they are conveyed in oral expression.

3.1.1 Expressing emotions

There is a large literature on the signs that indicate emotion, both within the psychological tradition and beyond it. The vocal cue is one of the fundamental expressions of emotion, on a par with facial expression. Primates, dolphins, dogs and other mammals have emotions and can convey them through vocal cues. Humans can express their feelings by crying, laughing and shouting, and also through more subtle characteristics of speech. Consequently, different types of sources need to be considered when expressing and understanding emotions. Emotional manifestation also has a wide range of somatic correlates, including heart rate, skin resistivity, temperature, pupil diameter and muscle activity. These have been widely used to identify emotion-related states, for instance in lie detection. However, key studies suggest that signs of emotion are most clearly identified through facial expression [Rus94] and voice. Most work on the automatic recognition of emotions uses only a single modality, either speech or video, but some studies combine both sources to improve recognition performance (see [Che98, Cow01, Iwa95]). Most of the literature in this field is essentially based on the suggestion, stemming from Darwin, that signs of emotion are rooted in biology and are therefore universal [Dar65].

3.1.2 Different kinds of information within oral communication

In general, speech carries two broad types of information.
It carries linguistic information insofar as it identifies qualitative targets that the speaker has attained in a configuration that conforms to the rules of the language. Paralinguistic information, on the other hand, is carried by allowed variations in the way these qualitative linguistic targets are realised. This paralinguistic signal is non-linguistic and non-verbal, but it tells the interlocutor about the speaker's current affective, attitudinal or emotional state. It also includes the speaker's regional dialect and characteristic sociolect. Paralinguistic aspects also help to regulate turn-taking in conversation. Paralinguistic markers, however, are to some extent culturally determined, and their interpretation must be learned [Mar97]. This non-verbal information is primarily extracted from variations in pitch and intensity that have no linguistic function, and from voice quality, which is related to spectral properties that are not relevant to word identity.

[Diagram nodes: emotional expression; verbal expression; linguistic information; acoustic information (prosody, voice quality); facial expression; somatic cues (heart rate, skin resistivity, sweat, pupil diameter).]

Figure 3.1. Different channels in the communication of human emotions.

In terms of oral manifestation, emotion can be expressed through at least two means: linguistically (lexical, syntactic and semantic features) and acoustically. The semantic information of a word, i.e. its meaning, can be an important cue in emotion recognition, since some words tend to be used only in certain situations, e.g. swear words or some affect bursts (see [Scö00, Sch94]). In the listening tests carried out by Marc Schröder [Scö00], in which a database of affect bursts was presented to 20 subjects, the overall recognition rate of the uttered emotion reached 81%.
Since the recordings were presented audio-only and without context, affect bursts were found to be an effective means of expressing emotions. Nevertheless, this kind of linguistic indication is not enough to establish the emotional intention of the speaker because, in some languages, a swear word can also be said with a friendly meaning, and its connotation can only be detected from non-semantic information. As a result, the meaning of a spoken message depends not only on what is said, but also strongly on how it is said. In [Cow95] two channels are distinguished within human interaction: one transmits explicit messages and is more related to semantic issues; the other transmits implicit messages about the speakers themselves or the situation and is associated with the acoustic properties of speech (see figure 3.1). These two channels of human communication interact: the implicit channel tells people “how to take” what is transmitted through the explicit channel. Although not all studies of the voice-emotion correlation agree on which attributes of the speech signal are most useful for detecting the speaker's emotional state, all of them agree that emotion strongly influences the acoustic characteristics of speech. Furthermore, Branka Zei, a psychologist at the University Hospital of Geneva, refers to three clearly differentiated levels at which emotion influences speech:

- suprasegmental: overall pitch, energy, timing, intonation contour;
- segmental: articulation, timing;
- “intrasegmental”: voice quality.

Although segmental features and the content of the utterance themselves carry emotion, suprasegmental features play an important role in conveying it [Ban96].
Murray and Arnott conducted a literature review on human vocal emotion (table 3.1) and concluded that, in general, the correlations between the acoustic characteristics (both prosody and voice quality) and the speaker's emotional state are consistent across studies, with only minor differences [Mur93].

3.1.3 Vocal correlates of emotion and attitude

A major question in work on the acoustic correlates of emotion is to what extent recognition of the speaker's emotional state is due to purely acoustic cues, and to what extent it is due to surrounding factors such as the implicit message of the utterance, the context or social convention.

| | Anger | Happiness | Sadness | Fear | Disgust |
|---|---|---|---|---|---|
| Speech rate | Slightly faster | Faster or slower | Slightly slower | Much faster | Very much slower |
| Pitch average | Very much higher | Much higher | Slightly lower | Very much higher | Very much lower |
| Pitch range | Much wider | Much wider | Slightly narrower | Much wider | Slightly wider |
| Intensity | Higher | Higher | Lower | Normal | Lower |
| Voice quality | Breathy, chest tone | Breathy, blaring | Resonant | Irregular voicing | Grumbled chest tone |
| Pitch changes | Abrupt, on stressed syllables | Smooth, upward inflections | Downward inflections | Normal | Wide, downward terminal inflections |
| Articulation | Tense | Normal | Slurring | Precise | Normal |

Table 3.1. Emotions and speech parameters (from Murray and Arnott, 1993).

To examine how well emotions can be recognised from the acoustic properties of the speech signal, L. Yang and N. Campbell carried out the following listening experiment [Yan01]: 13 phonetically untrained native speakers of Chinese (5 females and 8 males) and 5 Americans (1 female and 4 males) with no knowledge of Chinese were asked to identify 21 examples of a variety of emotional speech samples uttered in Chinese. The results show that, despite some apparent discrepancies, there is a basic correspondence between sound and meaning.
For example, strong disgust and dismissive emphasis, as well as the degree of intensity, were clearly differentiated by both the Chinese and the American respondents, and both groups gave them a single unambiguous interpretation. The fact that the American respondents had no knowledge of Chinese and were unaware of the semantic content, yet were still able to differentiate the speaker's state purely on the basis of the sound pattern, further supports the idea of a sound-meaning link. Another revealing study of emotion recognition based only on acoustic cues was performed by A. Tickle [Tick00]. There, a comparison of the cross-cultural decoding accuracy of emotional vocalisations encoded by native English and native Japanese speakers yielded language-independent, and therefore message-independent, results: Japanese subjects decoded English vocalisations with greater accuracy than they decoded Japanese vocalisations. This may suggest that, despite possibly being exposed to less emotional expression, Japanese subjects are capable of decoding emotions whose acoustic correlates arise from psycho-biological response mechanisms. There is ample evidence that oral communication conveys some emotional information through the acoustic aspects of the speech signal alone. A large number of studies have tried to determine which acoustic attributes of oral expression are the best candidates for unequivocally detecting the speaker's emotional state. An important example is the study by Murray and Arnott [Mur93], whose results, giving the most informative acoustic attributes for detecting basic emotions, are presented in table 3.1. Although this study is one of the most frequently cited works on emotion recognition from the speech signal, it is not the only one.
In a review of research on the acoustic correlates of speaker states, Pittam and Scherer [Pit93] summarised the state of the literature as follows:

- Anger/Frustration: anger generally seems to be characterised by an increase in mean F0, F0 variability and mean energy. Further anger effects include increases in high-frequency energy and downward-directed F0 contours. The rate of articulation usually increases.
- Anxiety/Nervousness: high arousal levels would be expected with this emotion (see chapter 2), and this is supported by evidence showing increases in mean F0, in F0 range and in high-frequency energy. The rate of articulation is reported to be higher. An increase in mean F0 has also been found for milder forms of the emotion, such as worry or anxiety.
- Resignation/Sadness: a decrease in mean F0, F0 range and mean energy is usually found, as are downward-directed F0 contours. There is evidence that high-frequency energy and rate of articulation decrease.
- Contentment/Happiness: findings converge on increases in mean F0, F0 range, F0 variability and mean energy. There is some evidence for an increase in high-frequency energy and rate of articulation.

In summary, acoustic parameters such as F0, intensity and duration, which have seemingly clear relationships with the most prominent perceptual characteristics of speech (i.e. pitch, loudness and speech rate), have received the most attention. These parameters also tend to be the easiest to analyse. In comparison, the modulation of the spectral components of the speech signal, as revealed by the formant structure and the overall shape of the average spectrum, has been studied less. Such aspects of the speech signal, more closely related to voice quality, do not have such obvious perceptual correlates and are more difficult and time-consuming to analyse.
However, there is some evidence that adding voice quality features can provide discriminating power when distinguishing between emotions with otherwise similar prosodic profiles. In conclusion, both prosodic and voice quality features must be considered when analysing the emotional content of speech. Sections 3.2 and 3.3 introduce how these two acoustic profiles help speakers to express, and listeners to understand, emotions.

3.2 Prosody

Emotion is an integral component of human speech, and prosody is the principal conveyor of the speaker's state; it is therefore significant in recovering information that is fundamental to communication. Some studies have tried to demonstrate how subtly differentiated meanings are introduced into speech communication by prosodic variations, and how differences in contour shape communicate the degree of certainty or uncertainty of the speaker's knowledge state, specific emotional states, the intensity of emotion, and the effects of other co-occurring emotions (see [Yan01, Pae00]).

3.2.1 Classification of prosody

Prosody is a general term for those aspects of speech that span groups of syllables or words [Par86]. These properties are not attributes of individual speech sounds, but are characteristic of longer stretches of speech. Prosody conveys information between a speaker and a listener on several layers. In [Kie96] two main categories of prosodic features are distinguished: acoustic and linguistic prosodic features. Acoustic prosodic features are signal-based attributes that usually span speech units larger than phonemes (syllables, words, turns, etc.). Within this group, two types can be further distinguished.

Basic prosodic features are extracted from the pure signal without any explicit segmentation into prosodic units. These include the frame-based extraction of fundamental frequency (F0) and energy, as detailed in section 7.1.
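As a minimal sketch of frame-based extraction of a basic prosodic feature, the Python code below computes a short-time energy contour (sum of squared samples per frame) and the kind of sentence-level statistics discussed in section 3.2.2. The 16 kHz sampling rate, 400-sample (25 ms) frames and 160-sample (10 ms) hop are hypothetical settings, not those of section 7.1, and plain energy is used rather than a logarithmic or loudness-weighted measure.

```python
import math

# Hypothetical analysis settings (the Thesis's own settings are in section 7.1):
SAMPLE_RATE = 16000  # Hz
FRAME_LEN = 400      # samples, 25 ms at 16 kHz
HOP = 160            # samples, 10 ms at 16 kHz


def frame_energy(signal, frame_len=FRAME_LEN, hop=HOP):
    """Short-time energy contour: one value (sum of squares) per frame.

    No segmentation into prosodic units is needed. Trailing samples that do
    not fill a whole frame are ignored.
    """
    return [
        sum(x * x for x in signal[start:start + frame_len])
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]


def energy_statistics(contour):
    """Global (sentence-level) statistics derived from an energy contour."""
    if not contour:
        raise ValueError("empty energy contour")
    n = len(contour)
    mean = sum(contour) / n
    variance = sum((e - mean) ** 2 for e in contour) / n
    return {
        "mean": mean,
        "max": max(contour),
        "min": min(contour),
        "std": math.sqrt(variance),
    }


if __name__ == "__main__":
    # Toy one-second "utterance": a 200 Hz tone, quiet first half, loud second half.
    tone = [math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
    utterance = [0.2 * x for x in tone[:8000]] + [0.8 * x for x in tone[8000:]]
    print(energy_statistics(frame_energy(utterance)))
```

Computed per utterance and then averaged per emotion, the mean and maximum of such a contour would correspond to the kind of quantities plotted in figures 3.2 and 3.3.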
These features are not normally used directly for prosodic classification; instead, they are the basis for calculating more complex prosodic features.

Structured prosodic features can be seen as variations of basic prosodic attributes over time. Consequently, they are computed over a larger speech unit. Structured prosodic features can be derived from the basic prosodic features or can be based on segmental information provided, e.g., by the output of a word recognizer. The structured (compound) prosodic features used in this Thesis are detailed in section 7.2.

The second main category in [Kie96] comprises linguistic prosodic features, which can be extracted from other knowledge sources such as the lexicon, syntax or semantics, and which usually have an intensifying or inhibitory effect on the acoustic prosodic features. These linguistic properties are not considered in the framework of the present Thesis. In the following, the term “prosodic features” refers only to acoustic prosodic features.

As indicated in section 3.1.3, the principal prosodic variables of interest are energy, pitch and durational measurements such as speaking rate. Every prosodic feature has an acoustic correlate. However, this correspondence is not one-to-one, since prosodic attributes refer to perceived phenomena.

3.2.2 Energy as a prosodic cue for emotion detection

Energy is the acoustic correlate of loudness. Their relation is not linear, because perceived loudness strongly depends on the sensitivity of the human auditory system to different frequencies. Although loudness therefore depends on several factors, valuable conclusions can still be drawn from global statistics derived directly from the energy contour.

[Bar charts of the energy mean per emotion: angry, bored, happy, neutral, sad. Panels: (a) energy mean for male speaker; (b) energy mean for female speaker.]

Figure 3.2.
Energy mean of the sentences uttered by (a) a male speaker and (b) a female speaker.

In terms of global statistics, energy is found to be higher in emotions with high activation. Conversely, low energy levels are found in emotional states with a low activation value (see table 3.1). Figure 3.2 shows, for each emotion, the average over all utterances of the sentence-level energy mean, for (a) a male speaker and (b) a female speaker. Likewise, figure 3.3 shows the energy maximum under the same conditions.

[Bar charts of the energy maximum per emotion: angry, bored, happy, neutral, sad. Panels: (a) energy maximum for male speaker; (b) energy maximum for female speaker.]

Figure 3.3. Energy maximum of the sentences uttered by (a) a male speaker and (b) a female speaker.

Both statistics agree with the relationships proposed in [Mur93] and summarised in table 3.1: high energy levels are found in angry and happy utterances, while sadness and boredom yield lower intensity values. Energy is one of the most intuitive indicators of the voice-emotion relation since, as mentioned before, it is closely related to perceived loudness. Even without any expertise in this matter, it is easier to imagine an angry person shouting than gently whispering.

3.2.3 Pitch as a prosodic cue for emotion detection

The acoustic correlate of pitch is the fundamental frequency, or F0. Voice pitch is certainly a key parameter in the detection of emotion. Pitch variation over a sentence, also called intonation, is used in most languages to give shape to a sentence and indicate its structure, although the way in which this is done varies widely between languages. In some languages, pitch is also used to help indicate the meaning of words. Chinese, for instance, does not use a stress system like that of English. Moreover, Chinese words lack inflection, so word formation needs the help of prosody.
Prosodically formed words are the basic units of rhythmic construction in Chinese.

Pitch typically ranges between 80 and 160 Hz for male talkers and between 160 and 400 Hz for female talkers. Pitch can be characterised by its level or by its contour. In English, pitch is commonly described in terms of four levels (low, mid, high and extra high), and three terminal contours are distinguished: fading (decreasing pitch and amplitude), rising (increasing pitch, amplitude nearly constant until the end) and sustained (pitch and amplitude approximately constant). Fundamental frequency is considered to be one of the most important attributes in emotion expression and detection (see [Mon02, Abe01]).

[Bar charts of the F0 mean per emotion: angry, bored, happy, neutral, sad. Panels: (a) f0 mean for male speaker; (b) f0 mean for female speaker.]

Figure 3.4. Pitch mean of the sentences uttered by (a) a male speaker and (b) a female speaker.

Statistics extracted from the basic prosodic features contain relevant information about the uttered emotion. From the pitch contour of an utterance we extract the mean, maximum, minimum, variance and standard deviation, among other features listed in chapter 7. Figure 3.4 shows, for each emotion, the average over all utterances of the sentence-level pitch mean, for (a) a male speaker and (b) a female speaker. In accordance with [Mur93], the pitch mean clearly differs depending on the uttered emotion (see figure 3.4). For both speakers, apart from the difference in scale between the male and female voices, happiness and anger present a higher pitch average, while the means for boredom and sadness are slightly lower than that of the neutral emotion (absence of emotion). Pitch range, calculated for the same two speakers, also depends strongly on the emotion, as can be seen in figure 3.5, in line with the conclusions of Murray and Arnott [Mur93] summarised in table 3.1.
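The pitch statistics listed above can be sketched in Python as follows. F0 extraction itself is described in section 7.1 and is not reproduced here; as a stand-in, this sketch uses a plain autocorrelation estimator whose lag search is restricted to the 80-400 Hz range quoted above, with a hypothetical voicing threshold of 0.3 on the normalised autocorrelation peak. Unvoiced frames are marked with F0 = 0 and excluded from the statistics.

```python
import math


def estimate_f0(frame, sample_rate, f_min=80.0, f_max=400.0, voicing_threshold=0.3):
    """Autocorrelation F0 estimate (Hz) for one frame; 0.0 means unvoiced.

    The frame should span at least one period of f_min (e.g. 40 ms).
    voicing_threshold is a hypothetical value, not taken from the Thesis.
    """
    n = len(frame)
    mean = sum(frame) / n
    x = [v - mean for v in frame]
    r0 = sum(v * v for v in x)
    if r0 == 0.0:
        return 0.0  # silent frame
    lag_min = int(sample_rate / f_max)
    lag_max = min(int(sample_rate / f_min), n - 1)
    best_lag, best_r = 0, 0.0
    for lag in range(lag_min, lag_max + 1):
        r = sum(x[i] * x[i + lag] for i in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    if best_lag == 0 or best_r / r0 < voicing_threshold:
        return 0.0
    return sample_rate / best_lag


def pitch_statistics(f0_contour):
    """Global statistics over the voiced frames (F0 > 0) of one utterance."""
    voiced = [f for f in f0_contour if f > 0.0]
    if not voiced:
        raise ValueError("no voiced frames")
    n = len(voiced)
    mean = sum(voiced) / n
    variance = sum((f - mean) ** 2 for f in voiced) / n
    return {
        "mean": mean,
        "max": max(voiced),
        "min": min(voiced),
        "variance": variance,
        "std": math.sqrt(variance),
        "range": max(voiced) - min(voiced),
    }


if __name__ == "__main__":
    fs = 16000
    # 40 ms frame of a 200 Hz tone (a crude stand-in for a voiced frame).
    frame = [math.sin(2 * math.pi * 200 * k / fs) for k in range(640)]
    print(estimate_f0(frame, fs))  # best lag is 80 samples -> 200.0 Hz
    print(pitch_statistics([0.0, 110.0, 125.0, 0.0, 140.0]))
```

The "range" entry is the per-utterance quantity that figure 3.5 averages per emotion; a production system would use a more robust F0 tracker than this single-frame autocorrelation.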
Statistics for other prosodic features can be found in section 7.2.

Apart from global statistics of the fundamental frequency, pitch shape characteristics such as slope, concavity and convexity are very important features for differentiating intonational meaning. For instance, the concavity or convexity of the contour is critically related to the perceived degree of severity or softness of the utterance, and these shape characteristics reflect underlying expressive states that often arise from the discourse process itself. Therefore, not only global statistics of the pitch but also attributes of the pitch contour should be taken into consideration. The pitch contour can be modelled in different ways:

- Mathematical approximation, e.g. linear regression coefficients.
- Symbolic representation, e.g. stylisation into a concatenation of straight lines according to rules (local maxima and minima).
- Intonation models, e.g. the two-component model: fast pitch movements superimposed on a slowly declining line (baseline).

Although some sources claim that none of these models preserves the information necessary for emotional speech, various studies have stated the importance of intonation as a medium for expressing emotions (see [Wil72, Cos83, Cah90]). Accordingly, Sylvie J. L. Mozziconacci carried out a study of the expression of emotions in which pitch variation is represented within the theoretical framework of an intonation model [Moz00], and examined the power of existing intonation models for modelling expressiveness. She considered that studying speech variability (pitch level and range) through crude measures such as the pitch mean and standard deviation can obscure a substantial part of the variation present in the speech signal and does not provide any information about relevant deviations.
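The first two contour representations listed above can be sketched as follows. The regression line is an ordinary least-squares fit of F0 against time over the voiced frames; the stylisation keeps the end points and the local maxima and minima of the contour and joins them with straight lines, which is a deliberately simplified stand-in for the rule-based stylisations the text refers to. The two-component intonation model is not sketched, and the example contour values are invented.

```python
def regression_line(times, f0):
    """Least-squares fit f0 ~ slope * t + intercept (voiced frames only)."""
    n = len(times)
    if n < 2:
        raise ValueError("need at least two points")
    mean_t = sum(times) / n
    mean_f = sum(f0) / n
    s_tt = sum((t - mean_t) ** 2 for t in times)
    s_tf = sum((t - mean_t) * (f - mean_f) for t, f in zip(times, f0))
    slope = s_tf / s_tt
    return slope, mean_f - slope * mean_t


def stylise(times, f0):
    """Straight-line stylisation: keep end points plus local maxima and minima.

    Consecutive kept points are meant to be joined by straight lines.
    """
    points = [(times[0], f0[0])]
    for i in range(1, len(f0) - 1):
        is_peak = f0[i] > f0[i - 1] and f0[i] >= f0[i + 1]
        is_valley = f0[i] < f0[i - 1] and f0[i] <= f0[i + 1]
        if is_peak or is_valley:
            points.append((times[i], f0[i]))
    points.append((times[-1], f0[-1]))
    return points


if __name__ == "__main__":
    t = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]              # seconds (invented values)
    f = [180.0, 200.0, 220.0, 210.0, 170.0, 175.0]  # Hz (invented values)
    print(regression_line(t, f))
    print(stylise(t, f))
```

For the invented contour, the stylisation keeps the points at 0.0, 0.2, 0.4 and 0.5 s, and the sign of the regression slope summarises whether the contour rises or falls overall.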
Finally, Mozziconacci performed a cluster analysis of different emotional pitch contours and concluded that it is predominantly the final configuration of the contour that plays a role in conveying emotion.

Furthermore, many of the world's languages employ a structural parameter called stress. It is characteristic of these languages that certain parts are felt to be more prominent than others, whether in isolated polysyllabic words or in longer stretches of continuous speech [Slu95]. Such prominent parts stand out from their environment due to (among other things) increased vocal effort (intensity), more accurate articulation, longer duration and pitch changes; stress tends to raise pitch, and stress-induced pitch irregularities are superimposed on the pitch contour. Although stress is introduced in this section on pitch, it could equally be related to energy and duration, since each of these attributes influences the perception of stress. A related study by Paeschke, Kienast and Sendlmeier showed that the intensity of stressed syllables differentiated between the groups of excited and non-excited emotions.

[Bar charts of the F0 range per emotion: angry, bored, happy, neutral, sad. Panels: (a) f0 range for male speaker; (b) f0 range for female speaker.]

Figure 3.5. Pitch range of the sentences uttered by (a) a male speaker and (b) a female speaker.

3.2.4 Durational cues for emotion detection

Prosody also involves duration-related measurements. One of the most important durational measurements for discriminating among the speaker's emotional states is the speaking rate. An acoustic correlate of the speaking rate can be defined as the inverse of the average length of the voiced regions within a certain time interval. Pauses also contribute to prosody and can be divided into two classes: unfilled and filled pauses.
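The speaking-rate correlate defined above (the inverse of the mean voiced-region length) and a simple detector for unfilled pauses can be sketched as follows. The sketch assumes a per-frame voicing decision (e.g. F0 > 0) and a per-frame energy contour at a fixed hop; the energy threshold and the 200 ms minimum pause duration are hypothetical parameters. Filled pauses, which are speech rather than silence, are not detected by this energy criterion.

```python
def voiced_run_lengths(voiced_flags):
    """Lengths, in frames, of consecutive runs of voiced frames."""
    runs, current = [], 0
    for voiced in voiced_flags:
        if voiced:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs


def speaking_rate(voiced_flags, hop_s):
    """Inverse of the mean voiced-region length (1/s) within the interval."""
    runs = voiced_run_lengths(voiced_flags)
    if not runs:
        return 0.0
    mean_length_s = sum(runs) / len(runs) * hop_s
    return 1.0 / mean_length_s


def unfilled_pauses(energies, threshold, hop_s, min_duration_s=0.2):
    """(start_s, duration_s) of stretches whose energy stays below threshold."""
    pauses, start = [], None
    # A final sentinel above any threshold closes a pause at the end.
    for i, e in enumerate(list(energies) + [float("inf")]):
        if e < threshold:
            if start is None:
                start = i
        elif start is not None:
            duration = (i - start) * hop_s
            if duration >= min_duration_s:
                pauses.append((start * hop_s, duration))
            start = None
    return pauses


if __name__ == "__main__":
    flags = [1, 1, 0, 1, 1, 1, 1, 0, 0, 1, 1, 1]  # voiced runs of 2, 4, 3 frames
    print(speaking_rate(flags, 0.01))  # mean run 30 ms, so about 33.3 per second
```

Under this definition, longer voiced stretches give a lower rate, which is the direction table 3.1 associates with slower speech in sadness.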
An unfilled pause is simply silence, possibly containing breathing or background noise, whereas a filled pause is a relatively long speech segment with rather uniform spectral characteristics. During a filled pause a hesitation form is usually uttered, indicating that the speaker is about to start or to continue speaking: e.g. English [əː], [əm], etc.

In conclusion, because prosody communicates judgements, attitudes and the cognitive state of the speaker, it is essential for speech understanding applications such as emotion and intention tracking, and for the development of natural-sounding spoken language systems.

3.3 Voice Quality

Most studies of the past decades on the expression of emotions through the voice have investigated prosodic parameters, mainly fundamental frequency, intensity and duration characteristics. More recently, parameters describing the laryngeal processes that determine voice quality have also been taken into account (see [Kla00, Ban96]).

3.3.1 Voice quality perception

Voice quality is defined by Trask [Tra96] as the characteristic auditory colouring of an individual's voice, derived from a variety of laryngeal and supralaryngeal features and running continuously through the individual's speech. A wide range of phonetic variables contributes to the subjective impression of voice quality. The human voice possesses a wide range of variation: the natural and distinctive tone of the speech sounds produced by a particular person yields a particular voice, which is rarely constant in the course of a conversation. The reasons for these changes are diverse; voice quality is influenced by the social context and situation, and phonetic habits are also part of our personal style. It is possible to change our voice by modifying the phonetic settings, but several components of voice quality are innate and outside the speaker's control.
Voice quality is usually modified to strengthen the impression of emotions. As noted in section 3.1, prosody changes depending on the speaker's emotional state, but voice quality is an additional valuable phonetic cue for the listener. Definitions of voice quality, or phonation types, used for normal speech mainly come from studies of pathological speech (laryngeal settings), and it is hard to describe voice quality, especially variations within a normal voice. The term voice quality subsumes attributes concerning the overall, phone-independent spectral structure, for example shimmer, variation of formant frequencies or certain relative spectral energy measures. Since the notion of voice quality is closely connected to speech production theory, a short overview is given in section 3.3.2, where basic concepts are clarified in order to reach a better understanding of how the emotional state can influence the speaker's voice quality.

3.3.2 Speech production theory overview

The acoustic speech signal in humans is commonly considered to result from a source of sound energy (e.g. the larynx) modulated by a transfer (filter) function determined by the shape of the supralaryngeal vocal tract. This combination results in a shaped spectrum with broadband energy peaks. This model is often referred to as the "source-filter theory of speech production" and stems from the experiments of Johannes Müller (1848). The source-filter theory hypothesises that an acoustic speech signal can be seen as a source signal (the glottal source, or noise generated at a constriction in the vocal tract) filtered by the resonances of the cavities of the vocal tract downstream from the glottis or the constriction. Figure 3.6 shows a schema of this process for voiced speech (periodic source signal).

Figure 3.6.
Source-filter model of speech production.

According to the generalised speech production theory [Mar97], the source for voiced speech is generated by the vibration of the vocal folds. An air pressure difference is created across the closed vocal folds by contraction of the chest and/or diaphragm muscles. When the pressure difference becomes sufficiently large, the vocal folds are forced apart and air begins to flow through the glottis; this is the opening phase of the glottal cycle, as depicted in figure 3.7. When the pressure difference between the subglottal and supraglottal passages is sufficiently reduced, the airflow begins to decrease and the glottis begins to close. This is the closing phase of the glottal cycle. Closing occurs more rapidly than opening, apparently because the tension on the vocal folds is reinforced by the Bernoulli effect¹. The glottis closes quickly, resulting in the closed phase of the glottal cycle. These phenomena are repeated cyclically with period T0, yielding a periodic source signal. This periodic signal is the origin of voiced sounds. The source of unvoiced sounds, on the other hand, has no periodicity and is considered to be white noise.

¹ For a non-viscous, incompressible fluid in steady flow, the sum of pressure, potential and kinetic energies per unit volume is constant at any point:

$$p + \frac{1}{2}\rho v^{2} + \rho g y = \text{constant}$$

where p is the pressure, ρ the density, v the velocity and y the height in a gravitational field of strength g, all measured at the same point. This quantity is then constant throughout the fluid. In short: where the pressure is high, the velocity is low, and where the pressure is low, the velocity is high.

Legend of figure 3.7:

- T0: duration of the pitch period;
- t1: beginning of the airflow;
- t2: instant of maximum glottal flow (amplitude AV) through the glottis;
- t3: moment of glottal closure and maximum change of glottal flow;
- t4: instant of complete glottal closure.

Figure 3.7.
Description of the glottal waveform and its derivative.

An important parameter of the glottal flow is the Open Quotient (OQ), the ratio between the time during which the vocal folds are open and the whole pitch period. Another parameter used to characterise the glottal source is the Speed Quotient (also called skewness or rk), defined as the ratio between the rise time and the fall time of the glottal flow:

$$\mathrm{OQ} = \frac{t_4 - t_1}{T_0}, \qquad \mathrm{SQ} = \frac{t_2 - t_1}{t_4 - t_2}$$

These two parameters change depending on the voice quality, as discussed in section 3.3.3.

Voiced sounds consist of the fundamental frequency (F0) and its harmonic components, produced by the vocal folds (vocal cords). However, the source is not the only participant in speech generation: the vocal tract, which acts as a filter (see figure 3.8), modifies this excitation signal, producing formant (pole) and sometimes anti-formant (zero) frequencies [Wit82]. The human perceptual system translates patterns of formant frequencies into specific vowels. Each formant is also characterised by an amplitude and a bandwidth, and it may sometimes be difficult to determine some of these parameters correctly. The fundamental frequency and the formant frequencies are probably the most important concepts in speech processing in general.

With purely unvoiced sounds, there is no fundamental frequency in the excitation signal and therefore no harmonic structure either, and the excitation can be considered as white noise. The airflow is forced through a vocal tract constriction, which can occur in several places between the glottis and the mouth. Some sounds are produced with a complete stoppage of airflow followed by a sudden release, producing an impulsive turbulent excitation often followed by a more protracted turbulent excitation. Unvoiced sounds are also usually quieter and less steady than voiced ones.

Figure 3.8. Spectral manifestation of speech production.

3.3.3 Influence of the source on voice quality
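Since several of the phonation types described in this section differ mainly in their Open Quotient and Speed Quotient, a minimal Python sketch of these two measures may make the definitions of section 3.3.2 concrete. It computes OQ and SQ from the instants t1, t2 and t4 and the period T0 marked in figure 3.7; locating these instants in a real glottal flow estimate (e.g. after inverse filtering) is outside the scope of the sketch, and the numbers in the usage example are invented.

```python
from dataclasses import dataclass


@dataclass
class GlottalCycle:
    """Instants of one glottal cycle, as marked in figure 3.7 (any time unit)."""
    t1: float  # beginning of the airflow
    t2: float  # instant of maximum glottal flow
    t4: float  # instant of complete glottal closure
    T0: float  # duration of the pitch period

    def __post_init__(self):
        if not (self.t1 <= self.t2 < self.t4 <= self.t1 + self.T0):
            raise ValueError("expected t1 <= t2 < t4 <= t1 + T0")


def open_quotient(c: GlottalCycle) -> float:
    """OQ = (t4 - t1) / T0: fraction of the period with the glottis open."""
    return (c.t4 - c.t1) / c.T0


def speed_quotient(c: GlottalCycle) -> float:
    """SQ = (t2 - t1) / (t4 - t2): ratio of rise time to fall time."""
    return (c.t2 - c.t1) / (c.t4 - c.t2)


if __name__ == "__main__":
    cycle = GlottalCycle(t1=0.0, t2=3.0, t4=5.0, T0=8.0)  # milliseconds, invented
    print(open_quotient(cycle), speed_quotient(cycle))  # 0.625 1.5
```

Following the descriptions in this section, a breathier cycle would show a larger OQ and a more symmetrical pulse, while a tense voice would show a small OQ and a strongly skewed pulse.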
Phonation is the contribution that the larynx makes to speech. The phonation type strongly influences the properties of the generated sound. People may use different types of phonation in different situations, either consciously or unconsciously (e.g. whisper when telling a secret, breathy voice when excited, creaky voice during a hangover). A person's tendency to use various types of phonation, or combinations of them, is one of the most important things that make their voice "theirs". People who need to talk in two or more distinctly identifiable voices (e.g. puppeteers) usually use different phonation types for the different voices. Differences in phonation are caused by changes in the excitation pulse, so the glottal waveform differs for each phonation type. The specific characteristics of each phonation type are described below in comparison to modal (normal) phonation, drawing on different approaches:

- Breathy voice: this phonation is a combination of breath and voice, which occurs if the vocal cords do not close completely along their entire length while they vibrate; the air flowing through the remaining aperture adds whisper to the vocal cord vibrations. It is characterised by a longer fall phase and a more gradual flow cut-off; the glottal pulse is more symmetrical; high Open Quotient; high peak glottal flow and lower pitch.
- Whispery voice: air passes with turbulence through a small opening at the back of the glottis. It presents a high OQ, but lower than for breathy voice; pulses more skewed than for breathy voice, but more symmetrical than for normal voice; and a high peak glottal flow, but lower than for breathy voice, which implies that H1 (the amplitude of the first harmonic) is lower in the source spectrum.
- Creaky voice: in this kind of voicing, the vocal cords are stiffened, so that they are very rigid as they vibrate.
It presents an extremely low fundamental frequency; irregular pulses; a low OQ and a low glottal flow rate, resulting in weaker low-frequency components, particularly H1; and relatively symmetrical pulses with a relatively short rise time, which damps the low-frequency components.

Falsetto: The vocal folds are stretched tightly so that they become very thin. The resulting vibrations can have more than twice the frequency that a speaker can produce using modal voicing. It causes a very high pitch; a rather low peak glottal flow; often a slightly open glottis, so that the effect of turbulent flow is observed in the spectrum; and quite symmetrical pulses.

Tense voice: A sharp cut-off of the glottal flow, boosting the higher spectral components; very high skewness of the glottal pulse, following a longer rise time; a small Open Quotient; low-frequency components of the spectrum attenuated in comparison with the higher components.

Lax voice: Comparable to breathy voice; long rise time of the glottal pulse.

3.3.4 Influence of the filter on voice quality.

The vocal tract, which acts as the filter in the speech production mechanism, is a continuous tube extending from the glottis to the lips. Its length, from the vocal folds to the lips, is about 17 cm in adults. The shape of the vocal tract changes as we move the tongue, jaw, palate and lips (see figure 3.9). Depending on the position of these structures and the overall shape of the vocal tract, certain frequencies are amplified by the tract and others are damped (see figure 3.8). The amplified frequencies are called formants. In speech, the resonating cavities of the vocal tract "shape" the glottal tone according to the laws of acoustic resonance. Subtle changes in the position of the tongue, jaw, palate or lips can produce a significant change in the formant frequencies, and therefore a totally different vowel sound.
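A common first approximation, not taken from the source, treats the vocal tract as a uniform tube closed at the glottis and open at the lips, whose resonances are the odd quarter-wavelength frequencies F_n = (2n-1)c/(4L). With the 17 cm length given above and an assumed speed of sound of 340 m/s, this gives formants near 500, 1500 and 2500 Hz, and it shows directly why a longer tract lowers all formants in the same proportion. A minimal sketch (the function name is hypothetical):

```python
def tube_formants(length_m: float, n_formants: int = 3, c: float = 340.0) -> list[float]:
    """Resonances (Hz) of a uniform tube closed at one end and open at the other.

    F_n = (2n - 1) * c / (4 * L), with c the speed of sound in m/s (340 assumed).
    This ignores the real, non-uniform shape of the vocal tract.
    """
    if length_m <= 0:
        raise ValueError("length must be positive")
    return [(2 * n - 1) * c / (4.0 * length_m) for n in range(1, n_formants + 1)]


adult = tube_formants(0.17)          # approximately 500, 1500 and 2500 Hz
longer = tube_formants(0.17 * 1.10)  # a 10 % longer tract, e.g. lowered larynx
# Every formant drops by the same factor 1 / 1.10 (about 9 %).
ratios = [lo / hi for lo, hi in zip(longer, adult)]
```

Changing the effective length by about 10%, as larynx and lip adjustments allow, therefore scales all three formants by the same factor rather than moving one of them alone.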
The process of modifying the shape of the vocal tract to produce different sounds is called articulation.

Figure 3.9. Different resonant cavities affect the speech source.

Even when the same vowel is uttered, slight changes in the shape of the filter (tongue, jaw, palate or lips) cause variations in the formant structure. These changes might not be large enough to change the perceived vowel, but they can provide cues to particular non-semantic aspects of speech. Some consequences of variations in the filter shape on the formant structure are:

All formant frequencies decrease uniformly as the length of the vocal tract increases. This rule is good common sense: larger objects resonate at lower frequencies. For this reason, long organ pipes, long strings, large drums and big loudspeakers are used to produce low notes. However, humans cannot greatly change their vocal tract length. An increase or decrease of approximately 10% is possible by lowering or raising the larynx and by protruding or retracting the lips. This produces a comparable percentage shift in the formant frequencies, resulting in a darker (or brighter) colouring of the vowels.

All formant frequencies decrease uniformly with lip rounding and increase with lip spreading. Lip rounding is similar to partially covering the mouth: in both cases the effective (acoustic) tube length increases, which lowers all the resonant frequencies. The term "covered sound" probably originated from musicians, particularly brass players, covering the mouth ends of their instruments. In combination with larynx height adjustments, lip rounding or spreading can be very effective in darkening or brightening the vowels.

A mouth constriction lowers the first formant and raises the second formant. This creates a more diffuse vowel spectrum, with the acoustic energy spread over both low and high frequencies, as in the vowels [i] and [e].
A pharyngeal constriction raises the first formant and lowers the second formant. This makes the vowel spectrum more compact, as in the case of or [o].

3.3.5 Influence of emotions on voice quality.

Most studies of emotional expression have concentrated on prosody. Although this research has achieved successful emotional expression in synthetic speech by manipulating pitch and duration, the result still lacks expressiveness because voice quality is not taken into account. Besides its influence on prosodic parameters (F0, speech rate), the emotional state has been shown to affect the glottal pulse shape (e.g. the open-to-closed ratio of the vocal cords), the harmonics-to-noise ratio and the spectral energy distribution, and to correlate with certain voicing irregularities [Kla97]. In particular, [Alt99] hypothesises that parameters such as the Open Quotient and the Glottal Opening (see section 3.3.2) could reflect special properties of the vocal tract during the production of emotions, since the spectral content is closely related to voice quality parameters that correlate directly with the speaker's emotional state.

With the aim of detecting properties of the physiological processes involved in producing different emotional states, [Alt99] analyses voice source quality parameters for a subset of the acoustic signals covering three emotional states: happiness, neutral state and anger. The data indicate that the open quotient and the glottal opening tend to reflect differences between angry and neutral statements. First observations confirm that happiness induces more breathiness than negative or neutral emotional states. The authors therefore suppose that happy statements are produced with a higher subglottal pressure than angry or neutral ones, which should be reflected in the open quotient.
On the other hand, the amplitude of the first formant depends on the degree of glottal opening during the open phase of the cycle, so the intensity of this formant also depends on the Open Quotient. Both parameters are calculated from spectral measures, a method also employed in this Thesis and detailed in section 7.3.4. Breathiness and roughness are also used as emotion-related parameters; they are estimated through the harmonics-to-noise ratio (HNR). The authors report a significantly higher HNR in the sentences expressed with anger than in the neutral ones. However, [Pin90] found that HNR estimates are also influenced by other attributes, such as cycle-to-cycle frequency variations (jitter) and amplitude variations (shimmer).

In a different experimental study, M. Kienast and W.F. Sendlmeier [Kie00] showed that various characteristics of vowel quality, segmental reduction and energy distribution in voiceless fricatives were specific to each of five emotions: fear, sadness, boredom, anger and happiness. In particular, vowel formant analysis proved to be a useful tool for emotion discrimination. Sentences expressing fear, sadness or boredom are characterised by a shift of the formants towards a centralised position in all vowels. The formant values in angry sentences differ from those found for sadness, fear and boredom: in angry sentences, the vowels were displaced towards a more extreme part of the vowel chart in the speech samples of both male and female speakers. Overall, the vowel chart became larger and the different vowels became more distinct (see figure 3.10). In sentences with happy emotion, the analysis of the formant frequencies revealed an increase of both the first and the second formant. Following the influence rules of section 3.3.4, a general shortening of the vocal tract could explain this result.
The shortening could be caused by the lip spreading that occurs during smiling, as noted by Tartter [Tar80]. A raised larynx, which often accompanies a high fundamental frequency, may be a further contributing factor. A raised-larynx setting in happy speech is probable, because happy speech is generally reported to show a large increase in mean pitch. The finding that the first formant was more affected than the second can be explained by a greater degree of jaw (mandible) opening in happy speech.

Figure 3.10. Average formant values for (a) sadness, (b) boredom, (c) happiness and (d) anger (grey symbols) compared with neutral (black symbols). From experiments in [Kie00].

To test this emotion-formant relation empirically, the following experiment was performed with one male speaker from our own database (see Chapter 4): the mean first and second formant frequencies were calculated separately for each of the five emotions considered in this Thesis. The calculations were made over a selected voiced region judged most similar to an /a/ (see section 7.3), since spectral estimates can be calculated more accurately over open vowels. The results are presented in figure 3.11.

[Figure: panels "1st formant frequency" and "2nd formant frequency"; series Angry, Bored, Happy, Neutral and Sad.]

Figure 3.11. Variation of first and second formant frequencies across different emotions.

As expected, our data lead to the same conclusions: happy speech tends to raise both formant frequencies, as in the study by Kienast and Sendlmeier [Kie00].
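The procedure actually used in this Thesis is described in section 7.3. Purely as an illustration, a widely used alternative for estimating formant frequencies from a voiced frame is linear prediction: fit an all-pole model with the autocorrelation method (Levinson-Durbin recursion), take the roots of the prediction polynomial, and convert the angles of the narrow-bandwidth roots into frequencies. The sketch below assumes NumPy; the function names, the model-order rule of thumb (2 + fs/1000) and the 400 Hz bandwidth limit are illustrative choices, not values from the source. Averaging its output per emotion over the selected /a/-like frames would give means of the kind plotted in figure 3.11.

```python
import numpy as np


def lpc_coefficients(frame: np.ndarray, order: int) -> np.ndarray:
    """Prediction polynomial A(z) = 1 + a1 z^-1 + ... + ap z^-p (autocorrelation method)."""
    x = frame * np.hamming(len(frame))
    # Autocorrelation at lags 0..order (index len(x) - 1 of the full result is lag 0).
    full = np.correlate(x, x, mode="full")
    r = full[len(x) - 1 : len(x) + order]
    a = np.zeros(order + 1)
    a[0] = 1.0
    err = r[0]
    for i in range(1, order + 1):
        # Levinson-Durbin step: reflection coefficient k for model order i.
        acc = r[i] + np.dot(a[1:i], r[i - 1 : 0 : -1])
        k = -acc / err
        a[1:i] = a[1:i] + k * a[i - 1 : 0 : -1]
        a[i] = k
        err *= 1.0 - k * k
    return a


def estimate_formants(frame, fs, order=None, max_bandwidth=400.0):
    """Formant candidates (Hz, ascending) from the roots of the LPC polynomial."""
    if order is None:
        order = 2 + int(fs / 1000)  # rule of thumb (assumed)
    a = lpc_coefficients(np.asarray(frame, dtype=float), order)
    roots = np.roots(a)
    roots = roots[np.imag(roots) > 0]  # keep one root of each conjugate pair
    freqs = np.angle(roots) * fs / (2.0 * np.pi)
    bandwidths = -np.log(np.abs(roots)) * fs / np.pi
    # Discard near-DC roots and broad resonances, which are unlikely to be formants.
    keep = (freqs > 90.0) & (bandwidths < max_bandwidth)
    return np.sort(freqs[keep])
```

Real speech frames are usually pre-emphasised and limited to a few tens of milliseconds before such an analysis; the sketch omits pre-emphasis for brevity.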
Other studies explain this marked change in formant structure by arguing that happy expression usually comes with a slight smile, whose lip movements make rounded vowels sound unrounded. A comparison of different voice quality features across utterances with different emotional content is presented in section 7.2.

Another study on the expression of emotions, [Zet98], also concludes that voice quality, as well as pitch and speech rhythm, plays an important role in conveying the impression of different emotions. That study analyses a corpus recorded by one male actor. In angry utterances he uses a rather high pitch and a high intensity. The impression is strengthened by the slow speech rate and the very distinct articulation. The speaker's voice quality is breathy, as is usual in the expression of anxiety (see figure 3.13). This observation is based on the phonation properties introduced in section 3.3.3, where the general characteristics of each voice quality type were given. Figures 3.12, 3.13 and 3.14 show the time signal of the same sentence uttered in three emotional states, neutral (no emotion), anxiety and sadness, and the differences in voice quality become obvious.

Figure 3.12. Neutral voice.

Figure 3.13. Anxiety – breathy voice.

Sadness has a low pitch and intensity, and there is only a small pitch range. The speech rate is rather high, with a lax articulation. He uses a creaky voice quality (see figure 3.14), which strengthens the impression of the emotion. In the expression of joy, the recordings show variations in intonation and a rather high pitch compared with the neutral case.

Figure 3.14. Sadness – creaky voice.

In conclusion, both prosodic and voice quality features are found to influence the recognition of the speaker's emotional state.
As Murray asserted in [Mur93], features related to the pitch envelope are the most important parameters for differentiating between the basic emotions², whereas voice quality is important for differentiating between the secondary emotions. He also noted that the acoustic correlates of basic emotions are cross-cultural, whereas those of the secondary emotions are culturally specific. This last idea anticipates some of the problems of speaker-independent emotion detection: the difficulties of building an automatic emotion recognition system for one specific person increase greatly when the system must also ignore the specific properties of each speaker.

² Murray and Arnott [Mur96] describe the vocal effects of five basic emotions: fear, anger, sadness, happiness and disgust.

3.4 Non-speech related ways of expression and detection of emotions.

As figure 3.1 shows, emotions are also expressed through other human communication channels. Therefore, although information extracted from the speech signal seems capable of distinguishing certain emotions, there is also growing interest in recognizing emotion from facial expressions. These studies build on Darwin's pioneering work [Dar65] and are made possible by the extensive studies on face perception during the last two decades (see [Ekm73, Dav75, Sch84]). In contrast to speech, emotion recognition through face analysis can draw on a good deal of information, mainly from neurobiological research. Techniques such as non-invasive brain imaging have been applied to locate the regions of the human brain involved in face perception.
Nevertheless, the automatic recognition of emotions from facial expression is not an easy task:

“Analysis of the emotional expression of a human face requires a number of preprocessing steps which attempt to detect or track the face; locate characteristic facial regions such as eyes, mouth, and nose on it; extract and follow the movement of facial features, such as characteristic points in these regions; or model facial gestures using anatomic information about the face.” [Cow01]

Most of the information about facial expression can be extracted from the position of the eyebrows and of the corners of the mouth. With this aim, computational studies of facial expression recognition follow two main categories of approach:

Target-oriented: only a single image of a face at the apex of the expression is analysed.

Gesture-oriented: temporal facial information from a sequence of images of an emotional episode is used.

Whatever the approach, the first step is to perform face detection or tracking. Just as speech analysis offers many pitch estimation techniques, face tracking has been attempted with many different systems. The simplest methods segment regions by colour, based on the observation that the chrominance components of human faces occupy a very small region of the colour space [Gra96]. Other systems use template information and improve on the recognition achieved by colour-based methods, but they are generally computationally expensive. In all cases, estimating the pose of the face is necessary, since apparent changes in expression can be due to changes in the viewing angle. Although physiological studies of facial expression provide a consistent basis for developing automatic recognition systems, target-oriented approaches do not transfer easily to machine vision in real applications.
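The colour-based idea attributed above to [Gra96] can be illustrated with a very small pixel classifier: convert RGB to the chrominance components Cb and Cr (here with the full-range ITU-R BT.601 / JPEG coefficients) and keep the pixels whose chrominance falls inside a fixed box. The thresholds Cb in [77, 127] and Cr in [133, 173] are values often quoted in the face-detection literature and are assumptions here, not parameters from [Gra96]; the function names are hypothetical. It is a sketch of the principle only, without tracking, templates or pose estimation.

```python
import numpy as np


def rgb_to_cbcr(rgb: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Chrominance (Cb, Cr) of an H x W x 3 RGB image, full-range BT.601 (JPEG) coefficients."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def skin_mask(rgb, cb_range=(77.0, 127.0), cr_range=(133.0, 173.0)):
    """Boolean mask of pixels whose chrominance lies inside the (assumed) skin box."""
    cb, cr = rgb_to_cbcr(rgb)
    return (
        (cb >= cb_range[0]) & (cb <= cb_range[1])
        & (cr >= cr_range[0]) & (cr <= cr_range[1])
    )


def bounding_box(mask):
    """(top, bottom, left, right) of the True pixels, or None if there are none."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])
```

The bounding box of the largest skin-coloured region would only be a face candidate; the template-based methods and pose estimation mentioned above are what make such candidates usable for expression analysis.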
Facial-gesture tracking approaches, in contrast to target-oriented ones, have produced promising results, but their psychological basis needs further exploration.

Some approaches have also tried to combine different sources to facilitate the automatic recognition of emotions. Their proponents argue that machine recognition should improve when the acoustic source is combined with an optical source containing information from the facial region, such as gestures, expressions, head position, eyebrows, eyes, ears, mouth, teeth, tongue, cheeks, jaw, neck and hair. Recent approaches combine facial expression with emotional speech cues. These attempts are also supported by the idea that human speech production and facial expression are inherently linked by a synchrony phenomenon, in which changes in speech and in facial movements often occur simultaneously. For example, an eye blink may occur at the beginning or end of a word, while oral-cavity movements may cease at the end of a sentence.

According to an assessment by the MIT Media Lab, “research in computer recognition and expression of emotion is in its infancy”. Two of the current research efforts at the MIT Media Lab focus on the recognition of facial expression and on voice affect synthesis. These are not, of course, the only ways to recognize affective states; posture, gestures and physiological signs such as an increased breathing rate also provide valuable cues.

Finally, somatic changes are also being used for emotion recognition. IBM (Almaden computer science research) is working on a method and system for correlating physiological attributes, including pulse, temperature, general somatic activity (GSA) and galvanic skin response (GSR), with the emotion of someone touching a computer input device such as a mouse. The aim is that, simply from the touch of the mouse, the computer could determine a person's emotional state through the somatic channel of emotional communication (see figure 3.1).