Espace: electronic system for peer assessment and coaching

advertisement

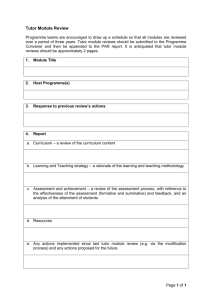

Espace: A new web-tool for peer assessment with in-built feedback quality system

Maurice De Volder (Open University Netherlands)
Marjo Rutjens (Open University Netherlands)
Aad Slootmaker (Open University Netherlands)
Hub Kurvers (Open University Netherlands)
Marlies Bitter (Open University Netherlands)
Rutger Kappe (Inholland University, Netherlands)
Henk Roossink (University of Twente, Netherlands)
Jan de Goeijen (University of Twente, Netherlands)
Hans Reitzema (Rotterdam University, Netherlands)

Abstract
In education and training, formative assessment and feedback by peers have a number of benefits for learners as well as teachers. A number of software systems for supporting peer assessment exist, but what all these applications lack is an in-built, easy-to-use quality system for monitoring and improving the quality of the peer assessment process. We therefore developed new software, called Espace, using Java, Apache and MySQL. Its main features are a free license, modifiable open source code, and a platform-independent web application with an in-built, easy-to-use quality system. Through the software settings, the teacher can set the level of quality control and, at the same time, the amount of time he spends on it. Student users found the tool easy to use, socially and intellectually stimulating, and ultimately leading to better assignment outcomes. Teacher users found that the tool saved them a lot of time.

Introduction
In the context of higher education, peer assessment refers to: (a) the critical evaluation by students of the products or performances of other students (peers), and (b) the giving and receiving of quantitative and qualitative feedback between students. Peer assessment can be summative or formative. Formative assessment is used to aid learning (learning-oriented assessment), while summative assessment is used for grading purposes (certification-oriented assessment).
The formative use of peer assessment increases student acceptance of the process because it is non-threatening and informal, as opposed to high-stakes formal assessment. Most students appreciate feedback that lets them improve their work before it is actually graded (Lim Yuen Lie, 2003; Bostock, 2004; Falchikov, 2004). According to Keppell et al. (2006), peer assessment should focus on formative assessment, because summative assessment changes the cooperative nature of the peer learning relationship. Boud, Cohen, and Sampson (2001), Race (2001), Bostock (2002) and Topping (2003) have described the potential benefits of peer assessment for students, both as assignment makers and as reviewers, and for teachers. As assignment makers, students get much more feedback than from the teacher alone, especially when the teacher has little time available and when multiple peers give feedback. While feedback from other students may not be as authoritative as feedback from an expert teacher, peer feedback can be available in greater volume and with greater immediacy. By using this feedback, assignment makers can improve their final product or performance. As reviewers, students feel responsible for their peers’ learning and are more motivated to study well so that they can give constructive feedback. Applying assessment criteria to essays, reports, presentations, etc., also results in deeper learning than just reading texts. Both as assignment makers and as reviewers, students learn the peer assessment skills that lifelong learners need, not only in their continuing learning but also in professional life, where teamwork and interpersonal skills are highly valued. For teachers, there are possible gains in cost effectiveness: teachers can manage peer assessment processes rather than give feedback to large numbers of students directly.
Peer assessment allows teachers to assess individual students less, but better, and to spend their scarce time on activities where teacher involvement is essential. Peer assessment can take place in different ways. Oral feedback is usually given during group meetings, but it has the drawback that no record remains. Even if it is audio- or videotaped, the recording is not easily stored and retrieved. Peer feedback can also be given in writing, on paper, but organizing this takes a lot of time and effort (Hanrahan & Isaacs, 2001). Peer assessment can be organized digitally with available software such as Blackboard and other Virtual Learning Environments (Keppell et al., 2006). However, such software has no special features for supporting peer feedback, so the teacher still has a lot of organizational work, such as sending messages to students and checking messages between students. Finally, it is possible to use applications tailored to peer feedback. In that case, one can use existing software such as Moodle Workshop and Turnitin Peer Review, or build one’s own tailored software in which all desired peer feedback processes are automated. We decided to build our own software, called Espace (an acronym for Electronic System for Peer Assessment and Coaching Efficiency), because of a number of advantages compared to existing software. Turnitin Peer Review is a commercial package, meaning it is expensive and not modifiable. Moodle Workshop is Open Source Software, but it is focused on quantitative feedback and summative assessment (grading). A number of systems with the same features as Moodle Workshop have been developed throughout the higher education sector (Davies, 2000; Bostock, 2001; Bhalerao & Ward, 2001; Lin, Liu, & Yuan, 2001; Sung et al., 2005). What all these applications lack is an in-built and easy-to-use quality system for monitoring and improving the quality of the peer feedback processes.
Therefore, from 2004 to 2006 the Espace software was developed (using Java, Apache and MySQL) and field-tested by the authors in a project of the Dutch Digital University. Its main features are a free license, modifiable open source code, and a platform-independent web application with an in-built, easy-to-use quality system.

User roles in Espace
Since Espace is a web application, users only need a browser to use Espace anywhere and on any device. Three user roles are distinguished. In chronological order, these are: administrators, tutors and students. The administrator imports student accounts (as XML files) and couples these accounts to courses. He is also responsible for correctly importing the assignments, assessment criteria and feedback instructions received from the teacher (tutor). Because the administrator role is not essential to the educational process, we will not discuss it further.

Before a particular run of a course with peer feedback starts, the tutor defines the many settings of that run: for instance, when the course starts, which students will get which assignments, how many versions of the assignment outcome are obligatory, and whether feedback is given anonymously. The tutor also has to decide on the settings of the quality system of Espace, for instance what percentage of students will get extra feedback from the tutor. Once the run has started, the tutor's home screen in Espace is a to-do-list that supports him in monitoring the quality of the learning taking place in the course. For instance, the in-built quality system of Espace will randomly select the students who will get extra tutor feedback, according to the preset percentage mentioned earlier. We give a more detailed description of the various aspects of the tutor role in the section on the in-built quality system.
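The random selection of students for extra tutor feedback can be pictured as drawing a preset share of the course's students without replacement. The Python sketch below is illustrative only: the function name `select_for_tutor_feedback`, the round-up rule and the seeded random generator are assumptions, not a description of how Espace (which is written in Java) actually implements the selection.

```python
import math
import random
from collections.abc import Sequence


def select_for_tutor_feedback(
    student_ids: Sequence[str],
    percentage: float,
    rng: random.Random,
) -> list[str]:
    """Randomly pick `percentage` percent of the students for extra tutor feedback.

    Hypothetical helper: rounding up (so that any non-zero percentage selects
    at least one student) is an assumption, not documented Espace behaviour.
    """
    if not 0 <= percentage <= 100:
        raise ValueError("percentage must be between 0 and 100")
    # Multiply before dividing to avoid needless floating-point error.
    k = math.ceil(len(student_ids) * percentage / 100)
    # random.Random.sample draws without replacement, so nobody is picked twice.
    return rng.sample(list(student_ids), k)


students = [f"s{i:02d}" for i in range(1, 26)]  # 25 hypothetical student ids
picked = select_for_tutor_feedback(students, 10, random.Random(42))
print(len(picked))  # 3 (10% of 25 is 2.5, rounded up)
```

Passing in a seeded `random.Random` keeps a run reproducible for testing. Rounding to the nearest integer instead of up would be an equally defensible design choice.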
The student who logs into Espace also sees a to-do-list, with items such as: do assignment, give feedback, react to feedback, make final version of the assignment, etc. The student role is described in more detail in this section. A student can be an assignment maker, a reviewer (feedback giver), or both, depending on what the tutor has decided beforehand (possibly in negotiation with students). This makes it possible not only for students in the same class to give feedback on assignments made by their classmates, but also for students from one class to give feedback on assignments made by another class, for instance a senior class to a junior class. Besides individual work, group work is also possible: a group of students makes the assignment and gets feedback from another group of students.

Figure 1 shows a student screen with some items in his to-do-list. Clicking on an item opens the related action screen. When the student has performed the action, the item disappears from his to-do-list. Possible student actions are:
- Do assignment: the assignment text appears, together with links to the assignment instruction and the assessment criteria. In a text box the student can type his assignment outcome, and he can also attach files. These files can have any format, allowing students to upload spreadsheets, graphics, audio and video. This way, students can upload not only static documents but also more dynamic performances such as presentations, as advocated by Trahasch (2004). When the student asks for feedback, Espace sends the assignment outcome to one or more feedback givers (reviewers).
- Give feedback: in the to-do-list of the reviewer, the assignment outcome appears, together with links to the assignment, the assignment instruction, the feedback instruction and the assessment criteria. In a text box the reviewer can type his feedback, and he can also attach files.
These files can have any format, allowing students to give feedback by means of saved questionnaire files, oral feedback on audio, or demonstrations on video. The reviewer also rates the global quality of the assignment outcome on a five-point scale from bad to very good. This global outcome rating is not for grading purposes; it feeds the in-built quality system of Espace, described in the following sections. A potential problem with peer assessment is the quality of the feedback: is this the blind leading the blind? To support student reviewers, it is important to provide specific criteria and instructions on how to use them. Wiggins (2001) and Miller (2003) have shown that specific criteria and descriptive feedback are more effective than evaluative feedback and unclear criteria.
- React to feedback: the student reads the received feedback, sees the global outcome rating, and rates the global quality of the received feedback on a five-point scale from bad to very good. Robinson (2002) found that peer feedback is often regarded as inadequate by those who receive it. According to Davies (2006), students should be judged on the quality they show in performing peer assessment. Bostock (2002) added assessment by the teacher of the peer assessments and feedback, as a way of policing their quality and valuing the effort that went into writing them. In our view, the quality of feedback is most efficiently monitored by the ‘consumer’ himself, by letting the student rate the usefulness of the received feedback (in effect, giving feedback on the feedback received). This global feedback rating feeds the in-built quality system of Espace. Finally, if the tutor settings allow it, the student can ask the reviewer to elaborate on the given feedback (give more feedback or explain unclear feedback).
- Elaborate on feedback: when asked, the reviewer can elaborate on the feedback already given.
The reviewer also sees the global feedback rating given to him by the feedback receiver. There is a great deal of evidence that students do not understand the feedback they are given (Chanock, 2000). Good feedback practice therefore encourages peer dialogue (Nicol & Milligan, 2006).
- Produce next version (or final version) of assignment outcome: using the elaborated feedback, the student can make version 2 (up to version 10) of the outcome and start a new loop in the feedback process (get feedback, ask for and get elaboration), until he produces a final version of the assignment outcome and the feedback loop ends. Allowing resubmission, and so providing opportunities to close the gap between current and desired performance, is one of the principles of good feedback practice (Nicol & Milligan, 2006).

Figure 1: Student screen with to-do-list

Besides the to-do-list, a student can choose from a number of menu options:
- Contact: in Espace, the student can only contact his tutor, not mail other students, so that feedback cannot bypass the proper channel.
- Inbox: in Espace, the student can only receive mail from his tutor.
- News: provides general information about the course, just in time.
- Help: leads to a user guide for students and a FAQ list (both are PDF files).
- Overview: enables the student to search and view all of his information in the Espace database, from active as well as archived courses. For instance: all versions of all assignment outcomes produced, all feedback given and received, all mails sent and received, and all assignment information (criteria, instructions, etc.).

In-built feedback quality system
Before peer assessment can start, the tutor has to set the stage. The underlying philosophy of Espace is that the software should be user-driven and adaptable. Espace supports the teacher in his teaching and must therefore be able to support many forms of peer feedback in many educational situations.
Depending on the educational situation, the teacher selects the options in the software, possibly in consultation with students. The main software settings are:
- Choose participants: decide who will participate in the course.
- Compose groups: if applicable, participants can be clustered into groups.
- Select assignment makers and feedback givers: decide which participants (or groups) will make which assignments, and which participants (or groups) will give feedback on which assignments.
- Write the assignments, the assessment criteria and the instructions (for doing the assignment, for giving feedback and for reacting to feedback). These texts are imported by the administrator and are reusable in other runs of the same course or in other courses.
- Select the number of feedback givers per assignment. The software then randomly allocates feedback givers to assignment makers for each assignment. The teacher can alter this allocation manually before and during the course as he sees fit, for instance to replace a student who has dropped out or to avoid conflicts between certain students.
- Select the number of versions of the outcome the assignment maker can or must produce.
- Activate anonymity of student interactions: when anonymity is activated, students do not see who made assignments and who gives feedback. Lejk and Wyvill (2001) and Davies (2003) found that the majority of students feel it is imperative that peer assessment and feedback remain anonymous. Few students are willing to sacrifice their ‘popularity’ for being ‘critical’ (Zhao, 1998).
- Activate student mail: to reduce his workload, the tutor can deactivate the mail option so that students cannot contact their tutor.
- Activate elaboration option: allows the feedback receiver to ask the reviewer to clarify his feedback.
- Set the alarm level of the outcome rating: below this minimum acceptable rating, the tutor is notified that a reviewer has given an assignment outcome a low rating.
- Set the alarm level of the outcome rating consensus: below this minimum acceptable consensus, the tutor is notified of low consensus between two or more reviewers who rated the same assignment outcome (only applicable when there are two or more feedback givers).
- Set the alarm level of the feedback rating: below this minimum acceptable rating, the tutor is notified that a feedback receiver has given the feedback a low rating.
- Set the alarm level of repeated low feedback ratings: above this maximum acceptable level, the tutor is notified that the same reviewer has repeatedly received low feedback ratings.
- Set the percentage of assignment outcomes that will receive feedback from the tutor (on top of the peer assessment).
- Set the percentage of students that will be randomly inspected by the tutor. Like an auditor checking the books, the tutor inspects the outcomes and feedback of a preset percentage of students. Espace supports this checking by randomly selecting students and presenting all their relevant information to the tutor.

Through these settings, the tutor sets the level of quality control and, at the same time, the amount of time he has to spend on it: the more quality control, the more time it costs. This trade-off between quality and efficiency can be changed during the course run whenever the teacher sees reason to do so, which gives the tutor greater flexibility. The in-built quality system of Espace supports the teacher in monitoring the quality of the peer assessment taking place. All settings have default values, which makes it easier and faster for the tutor to define a new run.
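To make the alarm settings concrete, the Python sketch below turns rating data into items for the tutor's to-do-list. It is a hypothetical illustration, not Espace's code: the class and function names, the default values, the convention that an alarm fires when a rating is strictly below its alarm level, and above all the consensus measure (one minus the spread of the reviewers' ratings, scaled to the five-point scale) are assumptions, since the text does not say how Espace computes consensus.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class QualitySettings:
    # Hypothetical default values; the real Espace defaults are not given in the text.
    min_outcome_rating: int = 2          # ratings run from 1 (bad) to 5 (very good)
    min_outcome_consensus: float = 0.5   # 0.0 = maximal disagreement, 1.0 = full agreement
    min_feedback_rating: int = 2
    max_low_feedback_ratings: int = 1    # low ratings tolerated per reviewer
    tutor_feedback_pct: float = 10.0     # used for random selection, not by the checks below
    inspection_pct: float = 5.0          # likewise


def consensus(ratings: list[int]) -> float:
    """Assumed measure: 1 minus the rating spread, scaled to the 1-5 range."""
    return 1 - (max(ratings) - min(ratings)) / 4


def tutor_todo(
    settings: QualitySettings,
    outcome_ratings: dict[str, list[int]],
    feedback_ratings: list[tuple[str, int]],
) -> list[tuple[str, str]]:
    """Return (to-do item, assignment outcome or reviewer id) pairs.

    outcome_ratings maps an assignment outcome to the ratings its reviewers gave;
    feedback_ratings lists (reviewer id, rating given by the feedback receiver).
    """
    todo: list[tuple[str, str]] = []
    for outcome, ratings in outcome_ratings.items():
        if min(ratings) < settings.min_outcome_rating:
            todo.append(("Low ratings of assignment outcome", outcome))
        # Consensus is only meaningful with two or more reviewers.
        if len(ratings) >= 2 and consensus(ratings) < settings.min_outcome_consensus:
            todo.append(("Low consensus of assignment outcome ratings", outcome))

    low_counts: Counter[str] = Counter()
    for reviewer, rating in feedback_ratings:
        if rating < settings.min_feedback_rating:
            todo.append(("Low feedback ratings", reviewer))
            low_counts[reviewer] += 1
    for reviewer, count in low_counts.items():
        if count > settings.max_low_feedback_ratings:
            todo.append(("Repeated low feedback ratings", reviewer))
    return todo


todo = tutor_todo(
    QualitySettings(),
    outcome_ratings={"a1": [4, 4], "a2": [1, 5], "a3": [3]},
    feedback_ratings=[("r1", 1), ("r1", 1), ("r2", 4)],
)
for item in todo:
    print(item)
```

With these hypothetical defaults, outcome a2 is flagged twice (one reviewer rated it 1, and the ratings 1 and 5 give a consensus of 0.0), reviewer r1's two low feedback ratings each produce an item, and a "Repeated low feedback ratings" item is added because two exceeds the one tolerated. Making the thresholds less strict produces fewer to-do items, which mirrors the trade-off between quality control and tutor time described above.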
When logging in to Espace, the tutor can find the following items in his to-do-list:
- Low ratings of assignment outcome
- Low consensus of assignment outcome ratings
- Low feedback ratings
- Repeated low feedback ratings
- Give tutor feedback
- Inspect students
- Read student messages

When the tutor opens an item in his to-do-list, all the relevant student information is presented to him. For instance, when there is low consensus of outcome ratings between reviewers, the assignments, criteria, instructions, outcomes and ratings of the students involved are presented to the tutor for evaluation. After evaluation, the tutor can select one or more of the following tutor actions:
1. Let feedback giver repeat his feedback. If the tutor feels the feedback giver can do a better job, he can put this item back on the feedback giver's to-do-list. The tutor can also send mails to the assignment maker and the feedback giver explaining why he has done so and how the feedback can be improved (see action 4).
2. Let other student give feedback. If the tutor feels the feedback giver cannot do a better job, he can put an extra item on the to-do-list of another student, instructing him to give feedback. The tutor can also send mails to the assignment maker and the feedback giver explaining why he has done so. Students should be informed before the start of the course that they may be required to give repeated or additional feedback.
3. Give feedback as tutor. The tutor may decide, for a number of reasons, that it is necessary to give feedback himself: for instance, when the assignment outcome is too complex or when no students are available to give feedback.
4. Send mail to student. In mails, the tutor can explain why he has taken certain actions and how assignment makers, and especially feedback givers, can improve their performance.
5. Put message on News bulletin board.
At the start of the course it is customary to welcome all students and give some starting tips. During the course, problems and solutions that are relevant to all students can be posted on the News board.
6. Let student restart with the assignment. This action is taken when an assignment maker has, by accident or intent, sent in an assignment outcome well below the quality that could reasonably be expected.
7. Remove item from to-do-list. This option allows the tutor to remove the item from his to-do-list when he feels he has dealt with it sufficiently by performing one or more tutor actions. This option can also be chosen immediately, without taking any other action: this is the case when the tutor's evaluation shows no need for action and he considers this particular warning a false alarm.

Besides the to-do-list, a tutor can choose from a number of menu options such as Mail, News, Help, and Overview (for a description of these options, see the student role). In this case, Help leads to a user guide for tutors. The tutor has one extra option: Statistics. This creates percentage tables showing the progress of students and the outcome and feedback ratings given. Students are categorized into the following progress statuses: not started yet, produce assignment outcome version 1, give feedback on version 1, react to received feedback on version 1, elaborate on given feedback on version 1, read elaboration on version 1 and (if applicable) produce version 2, (if applicable) give feedback on version 2, and so on, until the student works on the final version and closes the feedback loop by sending it in (status: ready with assignment).

Conclusions
Espace was tested during the Espace project in a number of field trials: two teacher education courses and one general writing course.
Student users found the tool easy to use, socially and intellectually stimulating, and ultimately leading to better assignment outcomes. One student remarked: “I was sceptical at first, but now I wish we had this tool available last year”. Teacher users found that the tool saved them a lot of time, although some preparation time was needed to get to know the tool, adjust the settings and introduce it to students. This means that the time savings are greatest when the number of student users is high. Of course, the less sensitive the tutor makes the quality system settings, the less time he needs to spend monitoring the peer feedback processes.

A number of considerations are important before and during the implementation of peer feedback. First of all, the assignment has to be such that peer feedback is useful. If there is a model answer for the assignment, students can compare their outcomes to this model and no peer feedback is needed. In other words, the assignment needs to lead to divergent answers, on which feedback can only be provided by a tutor or by peers. Also, the assignment should not be too difficult for peers to assess. Well-defined criteria are a must for peer assessment (Wiggins, 2001; Miller, 2003). Some students may not welcome the responsibility of assessing, or may not value the assessments of peers (Boud et al., 2001). For peer feedback to be acceptable to students, its educational purpose should be stressed so that students become intrinsically motivated (Davies, 2000): “Peer assessment must not be used as a means of purely reducing the tutor's marking load. There must be a positive educational benefit for the students, and they must be made aware of what that benefit is prior to the use of the system”. Since peer feedback increases the workload of students, this should be taken into account when calculating the total workload, and this additional load may need to be compensated by reducing the number of other activities.
To avoid peer feedback fatigue, peer feedback should be used in moderation and not concentrated in one time period. Finally, students should not have the opportunity to be free riders who skip assignments or miss feedback deadlines (Prins et al., 2005). It is therefore recommended that students get some extrinsic motivation to perform the peer assessment processes properly, either by earning marks for it or by making peer assessment a prerequisite for finishing the course.

References
Bhalerao, A. & Ward, A. (2001). Towards electronically assisted peer assessment: a case study. Association for Learning Technology Journal, 9(1), 26-37.
Bostock, S.J. (2001). Student peer assessment. A commissioned article for the ILT, now in the HE Academy resources database. Last accessed on July 3, 2006 at http://www.heacademy.ac.uk/resources.asp?process=full_record&section=generic&id=422
Bostock, S.J. (2002). Web Support for Student Peer Review. Keele University Innovation Project Report, July 2002. Last accessed on July 3, 2006 at http://www.keele.ac.uk/depts/aa/landt/lt/docs/peerreviewprojectreport2002.pdf
Bostock, S.J. (2004). Motivation and electronic assessment. In: Irons, A. & Alexander, S. (Eds.), Effective Learning and Teaching in Computing (pp. 86-99). London: RoutledgeFalmer.
Boud, D., Cohen, R. & Sampson, J. (2001). Peer learning and assessment. In: Boud, D., Cohen, R. & Sampson, J. (Eds.), Peer Learning in Higher Education (pp. 67-84). London: Kogan Page.
Chanock, K. (2000). Comments on essays: do students understand what tutors write? Teaching in Higher Education, 5(1), 95-105.
Davies, P. (2000). Computerized Peer Assessment. Innovations in Education and Training International, 37(4), 346-355.
Davies, P. (2003). Closing the Communications Loop on the Computerized Peer-Assessment of Essays. Association for Learning Technology Journal, 11(1), 41-54.
Davies, P. (2006). Peer-Assessment: Judging the quality of student work by the comments not the marks? Innovations in Education and Teaching International, 43(1), 69-82.
Dutch Digital University (DU). Last accessed on September 30, 2006 at http://www.du.nl/digiuni/index.cfm/site/Internet/pageid/4F0F2DBB-508B-67D05E339E86FC68ECF8/index.cfm
Falchikov, N. (2004). Involving students in assessment. Psychology Learning and Teaching, 3(2), 102-108.
Hanrahan, S.J. & Isaacs, G. (2001). Assessing self- and peer-assessment: The students’ views. Higher Education Research & Development, 20, 53-70.
Keppell, M., Au, E., Ma, A. & Chan, C. (2006). Peer learning and learning-oriented assessment in technology-enhanced environments. Assessment & Evaluation in Higher Education, 31(4), 453-464.
Lejk, M. & Wyvill, M. (2001). The effect of the inclusion of self-assessment with peer assessment of contributions to a group project: A quantitative study of secret and agreed assessments. Assessment & Evaluation in Higher Education, 26, 551-561.
Lim Yuen Lie, Lisa-Angelique (2003). Implementing Effective Peer Assessment. Assessment, 6(3). Last accessed on July 3, 2006 at http://www.cdtl.nus.edu.sg/brief/v6n3/sec4.htm
Lin, S.S.J., Liu, E.Z.F. & Yuan, S.M. (2001). Web-based peer assessment: feedback for students with various thinking-styles. Journal of Computer Assisted Learning, 17, 430-432.
Miller, P.J. (2003). The effect of scoring criteria specificity on peer and self assessment. Assessment & Evaluation in Higher Education, 28(4), 383-393.
Moodle Workshop. http://moodle.org
Nicol, D.J. & Milligan, C. (2006). Rethinking technology-supported assessment in terms of the seven principles of good feedback practice. In: Bryan, C. & Clegg, K. (Eds.), Innovative Assessment in Higher Education. London: Routledge.
Prins, F.J., Sluijsmans, D.M.A., Kirschner, P.A. & Strijbos, J.W. (2005). Formative peer assessment in a CSCL environment: a case study. Assessment & Evaluation in Higher Education, 30, 417-444.
Robinson, J. (2002). In Search of Fairness: an application of multi-reviewer anonymous peer review in a large class. Journal of Further and Higher Education, 26(2), 183-192.
Sung, Y., Chang, K., Chiou, S. & Hou, H. (2005). The design and application of a web-based self- and peer-assessment system. Computers & Education, 45(2), 187-202.
Topping, K. (2003). Self and peer assessment in school and university: reliability, validity and utility. In: Segers, M., Dochy, F. & Cascallar, E. (Eds.), Optimizing New Modes of Assessment: In Search of Qualities and Standards. Dordrecht: Kluwer Academic Publishers.
Trahasch, S. (2004). From Peer Assessment towards Collaborative Learning. Proceedings of the 34th ASEE/IEEE Frontiers in Education Conference (pp. F3F-16 - F3F-20). Savannah, USA: IEEE. Last accessed on July 4, 2006 at http://fie.engrng.pitt.edu/fie2004/papers/1256.pdf
Turnitin Peer Review. www.turnitin.com
Wiggins, G. (2001). Educative Assessment. San Francisco: Jossey-Bass.
Zhao, Y. (1998). The Effects of Anonymity on Computer-Mediated Peer Review. International Journal of Educational Telecommunications, 4(4), 311-345.

Contact author: Maurice De Volder, Associate Professor, Open University Netherlands, Valkenburgerweg 177, 6419 AT Heerlen, The Netherlands, e-mail maurice.devolder@ou.nl