Chapter 5: Boltzmann Machine

advertisement

5-1

Chapter 5: Boltzmann Machine

‧ Neural networks achieve purposes by

minimizing or maximizing some functions

Learning process - e.g., Adaline (LMS)

Backpropagation (MSE)

Recall process - e.g., BAM (Energy)

Hopfield model (Energy)

Methods: gradient descent, hill climbing

Difficulties: local extremes

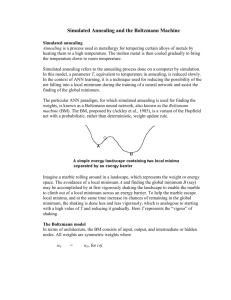

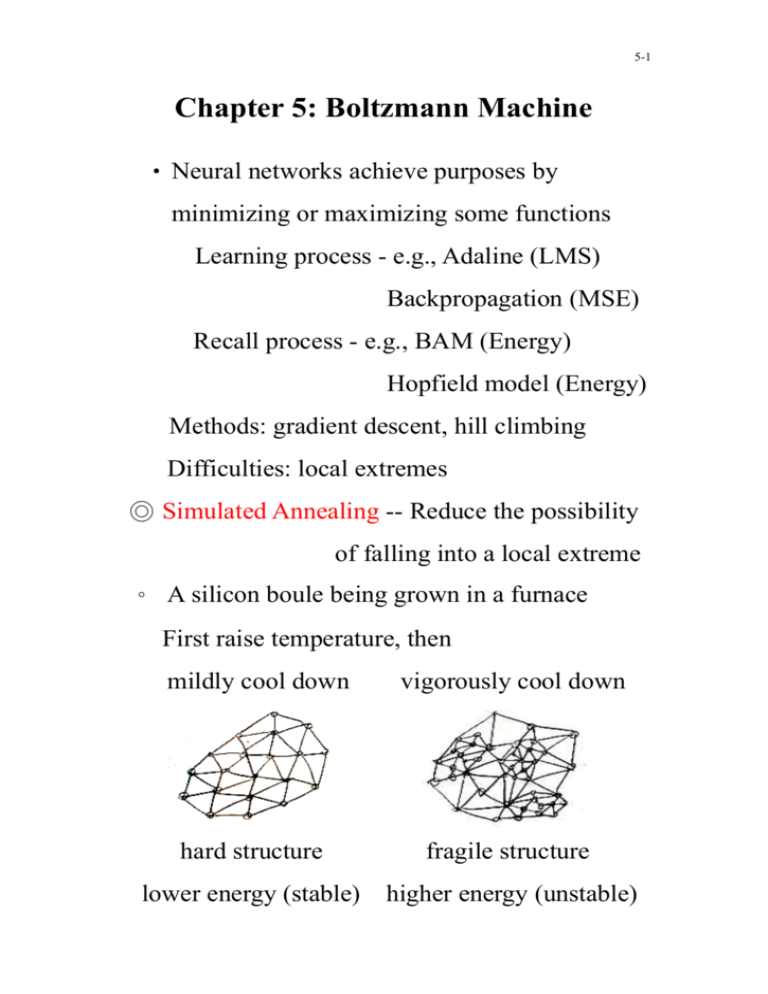

◎ Simulated Annealing -- Reduce the possibility

of falling into a local extreme

。 A silicon boule being grown in a furnace

First raise temperature, then

mildly cool down

vigorously cool down

hard structure

fragile structure

lower energy (stable) higher energy (unstable)

5-2

○ A thermal system that is initially at

temperature T0 is changed to T

。 The system reaches an equilibrium state after a

sufficient time period

However, particles interchange their energies

one another

Histogram

Probability

distribution

distribution

In an average sense, the system energy at the

N

equilibrium state is ET Ei PE

i i

i 1

The system energy actually fluctuates around ET

5-3

。 A particle system at temperature T whose

energy follows the Boltzmann distribution

p ce

E

where 1/ kT

k: Boltzmann constant

T: Kelvins (absolute) temperature

◎ Entropy - the average coarseness of particles

The coarseness of a particle with energy E

1

H log log p

p

Entropy: H <H i > = pi H i pi log pi

i

i

5-4

5.1. Information Theory and Statistical Mechanics

5.1.1. Information Theory

e : event ,

p(e) :probability of occurrence

if e occurs ,we receive

1

bits of information

I (e) log 2

p (e)

。 Bit: the amount of information receives when

any of two equally probable alternatives

is observed, i.e., p(e)

1

2

1

log 2 2 1 bit

1/ 2

Let p(e) 1 I (e)=log 21 0 bit

I (e) log 2

This means that if we know for sure that an

event will occur, its occurrence provides no

information at all

。 An information source

-- A sequence output of symbols from the

set S s1 , s2 ,..., sq with occurring

probabilities

p(s ), p(s ),..., p(s )

1

2

q

5-5

。 Zero-memory source: the probability of sending

each symbol is independent of symbols

previously sent.

The amount of information received from

1

each symbol is I ( si ) log 2

p( si )

Entropy: the average amount of information

received per symbol

q

q

i 1

i 1

H ( s) I p( si )I ( si ) p( si )log 2 p( si )

。 Entropy corresponding to a measure of disorder

in a system

The most disorderly system is the one whose

symbols occur with equal probability

Proof:

Two sources contain q symbols with

symbol probabilities

p1i , i 1,..., q s1 , p2 j , j 1,..., q s2

q

p

i 1

ri

1 ,

q

p

2j

j=1

1

5-6

The difference in entropy between the two

sources

q

H1 H 2 [ p1i log 2 p1i p2i log 2 p2i ]

i 1

q

[ p1i l o g2 p i 1 p i

i 1

1

l opg2 i 2

p1i log 2 p i2 p i log

p2 i ]

2

2

q

p1i

[ p1i l o g2

p( 1i p 2i ) l o2 pg i2

p

i 1

2i

q

p1i log 2

i 1

q

( p

i 1

1i

p1i

p2i

p2i )log 2 p2i ------ (5.6)

If s 2 is a source with equiprobable

symbols, then

1

p2i , log 2 p2i log 2 q c (constant)

q

q

( p

i 1

1i

q

q

i 1

i 1

p2i )c ( p1i p2i )c

(1 1)c 0

The second term in (5.6) is zero

]

5-7

q

q

p1i

p2i

H1 H 2 p1i log 2

p1i log 2

p

p1i

i 1

i 1

2i

q

p1i

where p1i log 2

: information gain

p

i 1

2i

(relative entropy or Kullback-Leibler

divergence) between the probability

functions p1i and p2i )

log x x 1

q

q

p2i

p

p1i log 2

p1i ( 2i 1)

p1i i 1

p1i

i 1

q

q

i 1

i 1

p2i p1i 1 1 0

H1 - H 2 0

5-8

5.1.2. Statistical Mechanics

-- Deal with a system containing a large number

of elements

e.g., thermodynamics, quantum mechanics

Overall properties are determined by the

average behavior of the elements

○ Thermodynamic System

The probability pr that a particle is at a

certain energy Er

pr ce Er (Boltzmann or Canonical

distribution)

p

r

r

1, c ( e

Er

r

e Er

) , pr

e Ek

-1

k

The statistical average of energy

e Er

E pr Er

Er

Ek

e

r

r

k

Er

e

Er

r

z

Entropy: s kB pr ln pr

r

5-9

○ Two systems with the respective entropies

s1 kB p1r ln p1r , s2 kB p2 r ln p2 r

r

r

Suppose they have the same average energy,

i.e., E1 p1r Er p2 r Er E2

r

r

Suppose p2r follows Boltzmann distribution

i.e., p2 r ce Er

The entropy difference between two systems

s1 s2 kB p1r ln p1r kB p2 r ln p2 r

r

r

p

p1r ln 1r ( p1r p2 r ) ln p2 r k B

p2 r

r

r

ln p2 r ln(ce Er ) ln c ln(e Er ) c Er

( p

1r

r

p2 r ) ln p2 r ( p1r p2 r )(c Er )

r

c p1r p1r Er c p2 r p2 r Er

r

r

r

c E1 c E2

( E1 E2 ) 0

s1 s2 ( p1r ln

r

p2 r

)k B

p1r

r

5-10

p1r ln

r

p2 r

0 ( ln x x 1)

p1r

s1 s2 0

* A system following the Boltzmann

distribution has the largest entropy

5.1.3. Annealing

-- A system at a high temperature has a higher

energy state than at a lower temperature

Rapid cooling can result in a local energy

minimum

。 An annealing process lowers temperature

slowly and finds the global energy minima

with a high probability.

At each temperature, sufficient time should be

given in order to allow the system to reach an

equilibrium following the Boltzmann

distribution p ( Er ) e

Er

k BT

5-11

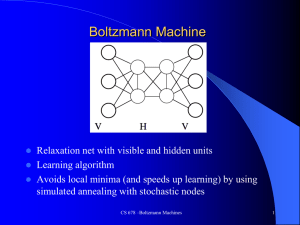

。 Hopfield network: looks for the nearest local

minimum by deterministically updating

outputs of nodes

Boltzmann Machine: lets the output value of

each node be determined stochastically

according to the Boltzmann distribution

5.2. Boltzmann Machine

○ Features:

1. The output of PEs is a stochastic function

of the inputs.

2. The production algorithm combines an

Energy minimization with an entropy

maximization

5-12

○ Two different types of architecture:

1. Boltzmann completion network

2. Boltzmann I/O network

5-13

◎ Boltzmann Completion Network

Objective: to learn a set of input patterns and

then to supply complete patterns if partial

or noisy patterns are presented.

Input patterns: binary vectors

1 n

。 The system energy E

2 i 1

n

j 1,i j

(no self-loop)

wij xi x j

n : # units (both hidden and visible)

xk : the output of the k th unit

。 Suppose the system has learned a set of binary

vectors encoded in a set of weights.

Given an incomplete pattern

x (0, u,1,0, u,1,0), where u: unknown

of a stored pattern x (0,1,1,0,0,1,0)

To recall x

1. Set the outputs of known visible units to

the values specified by x

2. Set outputs of unknown visible units and

hidden units to random values from 1,0

5-14

3. Set the initial temperature to a large T T0

4. Calculate the net input net k of randomly

selected unit xk ( hidden or visible node)

1

(sigmoid)

net k / T

1 e

Change xk if pk z

5. Calculate pk

z : a uniform random number from 0,1

6. Repeat 4 and 5 until all units have had

some probability of being selected for

update (one processing cycle)

7. Repeat 6 several cycles, until equilibrium

(having the maximal entropy) has been

reach at the given T

8. Lower T and repeat steps 4 though 7

Once T has been small enough, the network

stabilizes (having the minimal energy) and

the result is the outputs of the visible units

◎ Boltzmann I/O Network

The input vector is clamped at the visible

layer and is never updated

5-15

All hidden and output units are updated

according to simulated annealing

5.2.2. Learning -- Using simulated annealing

and gradient decent techniques

-- The learning algorithm attempts to cause the

network to form a probability model of the

population based on the given examples

-- There are often many different models that are

consistent with the examples. How to choose

among the various models?

-- Insist the model to lead to the most homogeneous

distribution of input patterns consistent with

the examples supplied.

。 Example:

3D vectors (_, _, _) in a population P,

whose components {1,0}

Suppose vectors of type (1, _, _) have 40%

in P. There are many choices, e.g.,

5-16

P(1, 0,1) 4%, P(1, 0, 0) 10%

40%

P(1,1, 0) 8%, P(1,1,1) 18%

The most homogeneous distribution is

P(1, 0,1) 10%, P(1, 0, 0) 10%

40%

P(1,1, 0) 10%, P(1,1,1) 10%

i.e., equal probabilities to each case.

。 In an information source, if symbols possessed

1

equal probability of occurrence, i.e., pi ,

q

the source has the maximum entropy.

。 In a physical system in equilibrium at some

temperature T, the system possessing the

Boltzmann distribution of state energy has

the maximum entropy.

。 Boltzmann distribution: pr ce E ce

r

Er

k BT

As T , pr c , i.e., equiprobable states

(most homogeneous)

5-17

。 In terms of maximum entropy,

a physical system with Boltzmann states

an information source with equiprobable

symbols

。 The information gain of S 2 w.r.t. S1 is

defined as G P1r log

r

P1r

P2 r

※ A learning algorithm that discovered

weights w that minimized G would lead

ij

the network from state S2 to state S1

。 The closest to the Boltzmann distribution,

the maximum entropy and minimum energy,

the most homogeneous distribution of input

patterns

。 Training the Boltzmann machine

1. Raise the temperature of the NN to some

high value.

2. Anneal the system until equilibrium is

reached at some low temperature value.

5-18

3. Adjust the weights of the network so that G

is reduced.

4. Repeat 1~3 until observed

Boltzmann

distributions.

This procedure combines gradient descent (3)

in G and simulated annealing (2)

。 Given a set of vectors {Va } for being learned,

Defined {Η b } as the set of all possible

vectors that may appear on the hidden units

Clamp the outputs of visible units to each Va

。 Let P (Va ) : the probability that visible units

are clamped to Va

P (Va Hb ) : the probability that Va is

clamped to the visible units and

H b appears on the hidden layer

P (Va )

P

(Va H b )

b

Let P (Va ) : the probability that Va appears

on the visible layer without

clamping visible units

5-19

P (Va Hb ) : the probability that Va appears

on the visible layer and H b

appears on the hidden layer

without clamping visible units

P (Va ) P (Va H b )

b

An unclamped (free-running) system in

equilibrium at some temperature, the

probabilities are the Boltzmann probabilities

e E

P (Va H b )

Z

ab

T

Eab wij xi ab x j ab

i j

, where Z e E

mn

T

m ,n

ab

visible (Va )

, x : hidden (H ) unit

b

P (Va ) P (Va H b )

b

e

Eab T

b

Z

P

(V )

Information gain: G P (Va )ln a

a

P (Va )

P (Va ) P (Va )

G

wij

wij

a P (Va )

( P (Va ) independent of wij

clamping)

5-20

e

P (Va )

wij

wij

b

Z

Z

wij

e

1

T

Eab T

b

Eab T

b

e Eab T

Z

Eab wij xi x j

Z eE

mn

e

b

ab

i j

Eab T

Z2

Eab

wij

ab

b

Z

wij

e Eab T Z

Z 2 wij

Eab

xi ab x j ab

wij

T

m ,n

Z

Emn

( e Emn T )

e

wij wij m ,n

m , n wij

1 Emn E T

e mn

T wij

m,n

1

xi mn x j mn e Emn T

T m ,n

T

5-21

P (Va )

1 e E

wij

T b Z

ab

T

Eab

e E T Z

wij

Z 2 wij

b

ab

1 e E T ab ab

xi x j

T b Z

e E T 1

mn

mn E T

x

x

b Z 2 T

i

j e

m ,n

1

P (Va H b ) xi ab x j ab

T b

b e E T 1 e E T mn mn

xi x j

Z

T m ,n Z

1

P (Va H b ) xi ab x j ab

T b

P (Va )

mn

mn

P

(

V

H

)

x

x

m

n

i

j

T m ,n

ab

ab

mn

ab

mn

P (Va )

G

1

P (Va H b ) xi ab x j ab

wij

T a ,b P (Va )

+

P

a (Va )

+

P (Vm H n ) xi mn x j mn

T

m,n

P (Va Hb ) P ( Hb Va ) P (Va )

Likewise, P (Va H b ) P ( H b Va ) P (Va )

5-22

P ( Hb Va ) P ( Hb Va )

i.e., if Va is on the visible layer, then the

probability that H b will occur on the

hidden layer should not depend on

whether Va got there by being clamped

to that layer or by free-running to that

layer.

P (Va H b ) P (Va )

P (Va H b ) P (Va )

P

(V )

P (Va Hb ) a P (Va Hb )

P (Va )

G 1

( P ij P ij ) , where

wij T

P ij P (Va H b ) xi ab x j ab

co-occurence

a ,b

ab ab probabilities

P ij P (Va H b ) xi x j

a ,b

Weight update:

wij c

G c

( Pij Pij ) ( Pij Pij )

wij T

5-23

Pij , Pij : compute the frequency that xi ab

ab

and x j are both active averaged

over all possible combinations of

Va and H b

。Expand the algorithm of training a Boltzmann

machine by incorporating simulated annealing

process

1. Clamp one training vector to the visible

units

2. Anneal the network until equilibrium at

the minimum temperature

3. Continue for several processing cycles

(steps 1,2). After each cycle, determine

the pairs of connected units which are on

simultaneously (i.e. co-occurrence).

4. Average the co-occurrence results

5. Repeat 1~4 for all training vectors

Average the co-occurrence results to

get an estimate of Pij .

5-24

6. Unclamped the visible units

Run through 2~5 for calculating Pij

7. Change weights according

wij ( Pij Pij )

8. Repeat 1~7 until Pij Pij is sufficiently

small.

※ Arbitrarily assign initial weights

5.2.3. Practical Considerations

。 The learning process is very slow.

Geman & Geman show that the temperature

must be reduced according to

T (tn )

T0

1 ln tn ,

where T0 : Starting temperature

tn : the nth processing cycle

◎ Cauchy machine

a. Based on Cauchy distribution (

1

)

2

(1 x )

rather than the Boltzmann distribution

5-25

b. Annealing temperature follows

T (tn )

T0

1 tn

5.3. Symptom-Diagonosis Application –

Diagnose why a car will not start

。 Symptoms:

1. Does nothing, 2. Solenoid, 3. Grinds,

4. Cranks, 5. No spank, 6. Cable hot,

7. No gas

。 Possible causes:

1. Battery, 2. Solenoid,

3. Stater,

4. Wires, 5. Distributor,

6. Fuel Pump

。 Relationships

5-26

。 Solution:

1. Boltzmann

I

Network

2. Determine hidden units

Arbitrarily select 14 PE

Add more PE if training can not converge

Fewer PE in order to improve

performance

3. Training data sets

6 training vector pairs

5-27