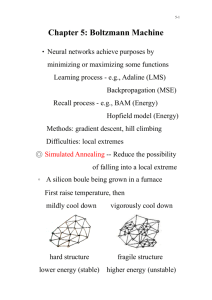

Simulated Annealing and the Boltzmann Machine

advertisement

Simulated Annealing and the Boltzmann Machine

Simulated annealing

Annealing is a process used in metallurgy for tempering certain alloys of metals by

heating them to a high temperature. The molten metal is then cooled gradually to bring

the temperature down to room temperature.

Simulated annealing refers to the annealing process done on a computer by simulation.

In this model, a parameter T, equivalent to temperature in annealing, is reduced slowly.

In the context of ANN learning, it is a technique used for reducing the possibility of the

net falling into a local minimum during the training of a neural network and assist the

finding of the global minimum.

The particular ANN paradigm, for which simulated annealing is used for finding the

weights, is known as a Boltzmann neural network, also known as the Boltzmann

machine (BM). The BM, proposed by (Ackley et al., 1985), is a variant of the Hopfield

net with a probabilistic, rather than deterministic, weight update rule.

Imagine a marble rolling around in a landscape, which represents the weight or energy

space. The avoidance of a local minimum A and finding the global minimum B (say)

may be accomplished by at first vigorously shaking the landscape to enable the marble

to climb out of a local minimum across an energy barrier. To help the marble escape

local minima, and at the same time increase its chances of remaining in the global

minimum, the shaking is done less and less vigorously, which is analogous to starting

with a high value of T and reducing it gradually. Here T represents the “vigour” of

shaking.

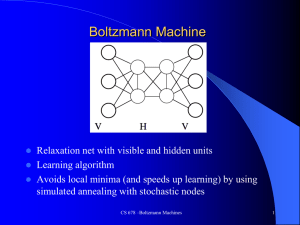

The Boltzmann model

In terms of architecture, the BM consists of input, output, and intermediate or hidden

nodes. All weights are symmetric weights where

wij

=

wji, for ij.

Outputs

Inputs

Thus Boltzmann networks are highly recurrent, and this recurrence eliminates any basic

difference between input and output nodes, which may be considered as either inputs or

outputs as convenient.

Node outputs in a BM take on discrete {1,0} values. Outputs are computed

probabilistically, and depend upon the temperature variable T. Activation, Si, of node i is

given by

n

Si

=

w u

j 0

ij

j

The new value of the node’s output ui is given by

ui

=

1 with probabilit y pi

0 with probabilit y 1 pi

(1)

where,

1

(2)

1 e Si / T

The new value of ui will be either 1 or 0 with equal probability 0.5 if either Si = 0 or T is

very large. On the other hand, if T is very small, the new activation is computed

deterministically, and the node behaves like a familiar discrete {1,0} cell.

pi

=

Input and output nodes can be either clamped or free-running. If they are clamped, then

their outputs, ui, are fixed and do not change. If they are free-running, then their outputs

change according to the equation for ui above. Intermediate nodes are always freerunning.

The BM begins with a high temperature T and follows a simulated annealing schedule

where T is gradually reduced as iterations proceed. At each iteration, we select a node ui

at random and, if it is not clamped, compute ui’s new output. When T becomes

sufficiently small, the net becomes deterministic like a Hopfield net and reaches

equilibrium. At this stage, no further output changes occur, and the node outputs are

read off.

The energy of the BM is computed using the same formula used in Hopfield nets:

E(t)

=

- ui (t )u j (t ) wij

i j

With T = 0 or close to 0, in Eq.(2) above, the net behaves as a Hopfield net, and its

energy either decreases (for a change in ui from 1 to 0), or remains unchanged (for a

change in ui from 0 to 1). Hence the system must stabilise by moving into a minimum

energy state if T = 0. To ensure that a global minimum will be reached with a high

probability, simulated annealing can be performed by lowering T gradually.

The network can settle into one of a large number of energy states, the distribution of

which is given by the Boltzmann distribution function

p = ke-E/T

Where p is the probability of the net finding itself in a state with energy E.

If P is the probability of a state with energy E , then

P /P = ( e-E/T)/( e-E/T)

= e-(E-E)/T

If E is a lower energy state than E then

therefore

e-(E-E)/T > 1

P /P

> 1,

so P > P

which means as the network approaches thermal equilibrium, lower energy states are

more probable, dependent only upon their relative energy.

Higher temperatures allow local minima states to be escaped via higher energy states,

but also allow transitions from lower to higher minima with almost equal probability. As

the temperature is lowered, however, the probability of escaping from a higher minima

to a lower one falls, but the probability of travelling in the opposite direction falls even

faster, and so more low energy states are reached. Eventually, the system settles down at

low temperature in thermal equilibrium.

Learning in Boltzmann Machines

The network is arbitrarily divided into input, output and hidden units.

Learning occurs in two phases.

Phase 1:

Input and output units are clamped to their correct values, and the

temperature set to some arbitrary high value. The net is then allowed to cycle through its

states with the temperature being gradually lowered until the hidden units reach thermal

equilibrium. Weights between pairs of "on" units are incremented.

wij

=

wij

+

if ui = 1 and uj = 1

= k(pij – p’ij)

where pij is the average probability of the two neurons i and j being on when the

network is clamped, and p’ij is the probability of them being on when the

network is not clamped, and k is a scaling factor (Ackley et al., 1985).

Phase 2:

Only the input units are clamped to their correct values, with hidden and

output units left free. The net is run until thermal equilibrium is reached. Weights

between on units are decremented.

wij

=

wij

if ui = 1 and uj = 1

The first phase reinforces connections that lead from input to the output, while the

second "unlearns" poor associations.

Limitations of the Boltzmann machine

There are a number of problems associated with Boltzmann machines. Decisions about

how much to adjust the weights, how long you have to collect the statistics to calculate

the probabilities, how many weights you change at a time, how you adjust the

temperature during simulated annealing, and how to decide when the network has

reached thermal equilibrium. The main drawback however is that Boltzmann learning is

significantly slower than backpropagation.

References:

Beale & Jackson, Neural Computing, Adam Hilger 1990.

Ackley, D.H., Hinton, G.E. and Sejnowski, T.J. (1985) A learning algorithm for

Boltzmann machines, Cognitive Science, 9, 147-69.