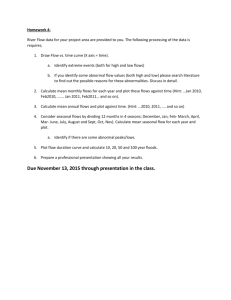

Chapter 2 JMP Handout


Ch 2. – Statistics Background for Forecasting – Computing in JMP

A typical JMP dataset is shown below. These data are the chemical process viscosity time series used in Chapter 2: Chemical Process Viscosity.JMP (Table B.3 in text).

To obtain basic univariate displays and summary statistics in JMP, we use Analyze > Distribution.


The Distribution dialog box is shown below.

The standard output is shown below.

In the output below I have added several features to the standard output.


Plotting Time Series in JMP

There are three options for plotting time series in JMP. A time series plot is essentially a scatterplot of the time series values versus time.

Method 1 - Fit Y by X

If there is a column in the spreadsheet for the time period (t), the preferred method is to use Analyze > Fit Y by X. In JMP, Fit Y by X is used when we wish to examine the relationship between two variables, generically labeled X and Y. Y is considered the response, which in the case of time series will always be the time series values $y_t$. X is considered the predictor or explanatory variable and will generally be the time period variable t. I have set up all time series datasets for this class to include a time (t) column.

Below is the default scatterplot returned by JMP.

Comments:


The Bivariate Fit pull-down menu…

Selecting Fit Each Value and Kernel Smoother…


Idea behind Kernel Smoothers:
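To make the idea concrete, here is a minimal sketch of one kernel smoother, the Nadaraya-Watson estimator with a Gaussian kernel: each smoothed value is a weighted average of all the observations, with weights that shrink as observations get farther away in time. This is an illustration of the general idea only; JMP's own Kernel Smoother option may use a different kernel, local fitting, and bandwidth parameterization, so its output will not match this code. The function name `kernel_smooth`, the `bandwidth` parameter, and the example series are hypothetical.

```python
import math


def kernel_smooth(t, y, bandwidth):
    """Nadaraya-Watson kernel smoother with a Gaussian kernel.

    Each fitted value is a weighted average of ALL the y values; the weight
    on y_i decays as the time point t_i moves away from the target time t0.
    A larger bandwidth spreads the weight out and gives a smoother curve.
    """
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    fitted = []
    for t0 in t:
        weights = [math.exp(-0.5 * ((ti - t0) / bandwidth) ** 2) for ti in t]
        total = sum(weights)
        fitted.append(sum(w * yi for w, yi in zip(weights, y)) / total)
    return fitted


# Hypothetical series: a straight line 2t plus alternating +1/-1 noise.
t = list(range(1, 21))
y = [2.0 * ti + (1 if ti % 2 else -1) for ti in t]
smooth = kernel_smooth(t, y, bandwidth=2.0)
print([round(v, 2) for v in smooth[8:12]])
```

With `bandwidth=2.0` the alternating noise is almost completely averaged away in the interior of the series, while a very small bandwidth would reproduce the raw data point for point.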


Method 2 – Graph > Overlay Plot

Another method for plotting a time series in JMP is to use the Graph > Overlay Plot option. This will only plot a time series versus time and does not allow for smoothing or the other analysis options that are available from the Bivariate Fit approach above. The only advantage of this approach is that it allows multiple time series to be plotted on the same graph. For example, consider the data in the file Global Mean Surface Temperature Anomaly and Global CO2 Concentration.JMP (Table B.6 in text) on the course website.

We might be interested in plotting both time series in the same graph to see how the two series are related to one another. However, notice that the two time series are in different units and on different scales! You clearly could not use the same Y-axis (vertical axis) to plot these time series. Using Graph > Overlay Plot, you can place one variable's Y-axis on the left and the other variable's Y-axis on the right. The resulting dialog box is shown on the following page.


Notice we have put both time series in the Y box and Year (time) in the X box. The arrows to the left of the Y variables specify on which side of the plot each Y-axis will be displayed. As these two variables have different units and scales, we need to place one of their Y-axes on the right. To do this, highlight the series to put on the right and click the Left Scale/Right Scale button.

Click OK and the graph below will be produced.

Comments:


Method 3 – Graph > Graph Builder

Graph Builder is a general-purpose graph-building sandbox in JMP. It allows for the creation of a variety of plot types in a dynamic and interactive fashion. Covering all of the possibilities would be impossible at this point; there are, in theory, infinitely many ways to plot data using this tool. I will demonstrate some options as they pertain to the material we are covering in this course, using the data file U.S. Beverage Manufacturer Product Shipments, Unadjusted.JMP (Table B.5 in text) on the course website.

Data types in JMP:


To change data types in JMP, you can right-click on the data type icon next to the variable at any time and change it to another type. For example, we might want to treat both Month and Year in the beverage sales data set as Ordinal, rather than as numeric (continuous) variables. The reasons for making these changes will be discussed later. Below I have right-clicked on Month to change its data type to Ordinal.

After changing Month & Year to Ordinal:

We will now use Graph > Graph Builder to construct the following plots:

1) A scatterplot of Sales vs. Time

2) Comparative boxplots to examine monthly differences in sales.

3) Comparative boxplots to examine yearly differences in sales.

The starting point in Graph Builder is shown on the following page.


To plot Sales vs. Time (or even Date) drag those variables to the appropriate axis.

Comments:


To examine monthly differences, we can drag Month to the X-axis and click the Comparative Boxplots icon.

Comments:


We use the same approach to look at annual changes in beverage sales.

Or do both simultaneously by dragging carefully.

Comments:


We can use Graph Builder to plot two (or even more) time series simultaneously, even when they are in different units and scales, as we did above using Overlay Plot. Again, by carefully dragging both time series to the Y-axis we can create the stacked effect you see below. The Overlay Plot approach may be better for seeing the relationship between the series.

Comments:


Comparative Boxplots using Fit Y by X

Another way to create the comparative boxplots of beverage sales vs. year and/or month that we made above using Graph Builder is to use the Fit Y by X option. Remember that Fit Y by X essentially means “I want to conduct an analysis to see how two variables X and Y relate to one another”.

Fit Y by X and Data Type


To construct comparative boxplots of beverage sales vs. month and year, select Fit Y by X and place both Month & Year in the X box and Sales in the Y box.

After clicking OK, the default plots are shown below. We can select numerous analyses and graphical enhancements to add to these plots from the Oneway Analysis pull-down menus above each plot.


This pull-down menu is shown below.

Quantiles – gives quantile summary statistics and adds comparative boxplots to the display.

Means and Std Dev – gives means and standard deviations by group and adds mean/SD lines to the plot.

Comments:

The Display Options and their effects are summarized below...

Box Plots – adds quantile boxplots to the display.

Mean Diamonds – adds mean diamonds to the plot.

Mean Lines – adds a horizontal line showing the mean for each group/population.

Mean CI Lines – adds lines depicting the 95% confidence interval for the population mean of each group to the plot.

Mean Error Bars – adds the means and standard errors (Ch. 6) to the plot.

Std Dev Lines – adds lines one standard deviation above and below the mean.

Connect Means – adds line segments connecting the individual means.

X-Axis Proportion – if checked, the space allocated to each group will be proportional to the sample size for that group.

Points Spread – staggers the points much more than jittering.

Points Jittered – “jitters” the points so individual observations are more easily seen.

Histograms – adds histograms.


The comparative boxplots and summary statistics for beverage sales vs. month and year are shown below.


Autocovariance and Autocorrelation in JMP

In your notes, one way to examine a time series for autocorrelation is to consider plots of $y_{t+k}$ vs. $y_t$, or equivalently $y_t$ vs. $y_{t-k}$, for $k = 1, 2, \ldots$. The series $y_{t-k}$ is called the lag-$k$ time series. In JMP there is a relatively easy way to construct the lag-$k$ version of a time series.

As an example, consider the chemical viscosity time series data, and suppose we want to examine the relationship between $y_t$ and $y_{t-1}$, i.e., we want to investigate the potential for lag-1 autocorrelation ($\rho_1$). To form the lag-1 time series, double-click to the right of the Viscosity column to add a new column to the spreadsheet. Then right-click at the top of this new column and select Formula from the pop-out menu as shown below.

This will open the JMP calculator which is shown below…


The lag operator in JMP (Lag) is found in the menu labeled Row in the JMP calculator, as shown below.

Notice there is also a function called Dif, which is another important operator that we use in time series analysis. We will examine this operator later in this handout for Chapter 2.

After selecting the Lag operator we will see the following in the JMP calculator.

To form the lagged time series of the viscosity readings ($y_{t-1}$), click the Viscosity variable so the calculator shows Lag(Viscosity,1). To form a series with a lag of k, simply change the 1 to the lag k you are interested in.
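The following minimal sketch mirrors what Lag(Viscosity, k) produces in the new column: the values shift down k rows and the first k cells are left empty. The function name `lag` and the readings below are hypothetical, and `None` stands in for JMP's missing-value cells.

```python
def lag(series, k=1):
    """Return the lag-k series y_{t-k}.

    Values are shifted DOWN k rows; the first k rows have no earlier value,
    so they are filled with None (JMP displays these cells as missing).
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    padded = [None] * k + list(series)
    return padded[: len(series)]


viscosity = [86.7, 85.2, 84.2, 85.6, 86.0]  # hypothetical readings
print(lag(viscosity, 1))
print(lag(viscosity, 2))
```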


After changing the column name to Visc Lag 1 our data table should look like:

Notice the lag-1 operator simply shifts the time series down by one row. We can now plot $y_t$ vs. $y_{t-1}$ using Fit Y by X with Y = Viscosity and X = Visc Lag 1. To add the correlation between $y_t$ and $y_{t-1}$ to the output, i.e., to estimate the lag-1 sample autocorrelation $r_1 = \hat{\rho}_1$, select Density Ellipse (.95) as shown below, with the results shown on the top of the next page.
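The correlation that Density Ellipse reports for this plot is an ordinary Pearson correlation computed over the rows where both $y_t$ and $y_{t-1}$ are present. A minimal sketch of that calculation follows, assuming Fit Y by X drops the first k rows because their lag value is missing; the function name `lagged_pair_correlation` is hypothetical.

```python
import math


def lagged_pair_correlation(series, k=1):
    """Pearson correlation between y_t and y_{t-k} over the T - k complete rows.

    The first k rows have no lag-k value, so they are dropped before
    computing the correlation.
    """
    if not 1 <= k < len(series) - 1:
        raise ValueError("need 1 <= k < T - 1")
    x = list(series[:-k])  # y_{t-k}: the lag column
    y = list(series[k:])   # y_t: the original column, same rows
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

Note that this uses separate means and standard deviations for the two columns of lagged pairs.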


Comments:

Clearly there appears to be a significant amount of correlation between $y_t$ and $y_{t-1}$. Using the rule of thumb that there is significant autocorrelation if

$$|r_k| \ge \frac{2}{\sqrt{T}} = \frac{2}{\sqrt{100}} = 0.20,$$

we can see this is the case here, as $r_1 \approx .789$. We can also see that this correlation is statistically significantly different from 0 by considering the p-value (< .0001).

What is a p-value? (review)


Now let’s repeat the process to examine a lag of k = 2.

And for k = 3 we have…


This process will get very tedious if we want to consider all lags up to the recommended

$$K = \frac{T}{4} = \frac{100}{4} = 25.$$

We can use JMP to produce the entire estimated ACF up to lag K = 25 by using the Analyze > Modeling > Time Series command.

The dialog box for this command is shown below:

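The resulting estimated ACF is shown below. The $r_k$ estimates differ slightly from the correlations computed above with Fit Y by X, because the ACF uses the overall series mean and overall variance for every lag, while each Fit Y by X correlation uses only the lagged pairs; the values are nonetheless very similar. We will discuss this plot and the different statistics associated with it in more detail in Chapter 5.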
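A minimal sketch of the estimated ACF, assuming the usual estimator $r_k = c_k / c_0$ with $c_k = \frac{1}{T}\sum_{t=1}^{T-k}(y_t - \bar{y})(y_{t+k} - \bar{y})$, together with the $2/\sqrt{T}$ rule of thumb and the default $K = T/4$ from above. JMP's report also includes other statistics not reproduced here; the function names `sample_acf` and `flag_significant` are hypothetical.

```python
import math


def sample_acf(series, max_lag=None):
    """Sample autocorrelations r_1, ..., r_K with r_k = c_k / c_0.

    One overall mean and one overall variance (c_0) are used for every lag,
    which is why these values differ slightly from lag-by-lag Pearson
    correlations. By default K = T // 4, the rule-of-thumb maximum lag.
    """
    T = len(series)
    if max_lag is None:
        max_lag = T // 4
    ybar = sum(series) / T
    dev = [v - ybar for v in series]
    c0 = sum(d * d for d in dev) / T
    acf = []
    for k in range(1, max_lag + 1):
        ck = sum(dev[t] * dev[t + k] for t in range(T - k)) / T
        acf.append(ck / c0)
    return acf


def flag_significant(acf, T):
    """Pair each r_k with whether |r_k| >= 2 / sqrt(T)."""
    limit = 2 / math.sqrt(T)
    return [(k, r, abs(r) >= limit) for k, r in enumerate(acf, start=1)]


# Hypothetical strongly alternating series with T = 100.
acf = sample_acf([1.0, -1.0] * 50)
print(flag_significant(acf, 100)[:3])
```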

Comments:


Time Series Plot of Chemical Viscosity Readings

Example 2: Pharmaceutical Product Sales

Below is a time series plot for pharmaceutical product sales (Table B.2 in text).

How does this series differ from the chemical viscosity series?

Does it appear to be stationary?


The autocorrelation function (ACF) for the pharmaceutical sales time series is shown below.

ACF Plot for Pharmaceutical Sales

Comments:


Example 3: Beverage Sales

While the ACF is only defined for stationary time series, we can still do the computations for a nonstationary time series. What does the ACF look like for a nonstationary time series? Let’s consider the beverage sales time series, which has both a long-term trend and strong seasonal/cyclical behavior.

Time Series Plot of Beverage Sales

ACF Plot for Beverage Sales

Comments:


Data Transformations (section 2.4.1 in text)

Data transformations of numeric data are an integral part of statistical analysis. The most common type of transformation in statistics is the power transformation. Suppose we have a numeric random variable y; the power transformation of y is defined as:

$$y^{(\lambda)} = \begin{cases} \dfrac{y^{\lambda} - 1}{\lambda}, & \lambda \neq 0 \\ \ln y, & \lambda = 0 \end{cases}$$

Essentially we are simply raising the random variable y to a power, and you should think of it that way. Power transformations are also used to improve the normality of y. For example, if the distribution of y is left-skewed, raising the power of y will generally improve normality, and if the distribution is right-skewed, lowering the power of y will generally improve normality. The most useful transformations in this class will be variance-stabilizing transformations ($\lambda = .5,\ 0\ (\ln y),\ -.5,\ -1$), and of these the natural log is the most common; we consider it in the example below.
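A minimal sketch of the power transformation defined above, applied one value at a time; the function name `power_transform` is hypothetical. Subtracting 1 and dividing by $\lambda$ only shifts and rescales $y^{\lambda}$ (and keeps the ordering of the values when $\lambda < 0$), which is why it is fair to think of the transformation as simply raising y to a power; the $-1$ also makes the $\lambda \neq 0$ branch approach $\ln y$ as $\lambda \to 0$.

```python
import math


def power_transform(y, lam):
    """Power transformation y^(lambda) as defined above (requires y > 0).

    lam != 0: (y**lam - 1) / lam
    lam == 0: ln(y)
    """
    if y <= 0:
        raise ValueError("power transformation requires y > 0")
    if lam == 0:
        return math.log(y)
    return (y ** lam - 1) / lam


# Variance-stabilizing choices mentioned in the text.
for lam in (0.5, 0, -0.5, -1):
    print(lam, round(power_transform(4.0, lam), 4))
```

For a whole column, such as hypothetical monthly sales values, one would apply it element-wise, e.g. `[power_transform(v, 0) for v in sales]` for the natural log.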

Example: U.S. Monthly Liquor Sales – January 1980 – December 2007

What features do we see in this time series?


One of the key features of this time series is that the variation in the monthly liquor sales appears to increase over time. This is a common phenomenon in time series analysis. Clearly this is not a stationary time series: there is an obvious long-term increasing trend in sales, and the variation also increases with time. To stabilize the variance in this time series, we consider taking the natural log of the monthly liquor sales and plotting the series in the log scale over time.

To form the natural log of sales in JMP, double-click to the right of the Liquor Sales column to add a new column to the spreadsheet. Change the name to ln(Sales), then right-click at the top of the column and select Formula from the menu that appears. This will open the JMP Calculator, which we use to specify that the contents of this new column will be the natural log of the monthly liquor sales. The natural log (ln) is an example of a transcendental function in mathematics, so we select the Transcendental option and click on Log as shown below, then click on Liquor Sales as the variable to be transformed, and finally click OK.


Time Series Plot of ln(Liquor Sales)

The variation now appears to be constant, so the variance has been stabilized by taking the natural logarithm of the monthly liquor sales.

Example 2: International Sunspots (see example in text pg. 35)


Trend and Seasonal Adjustments in JMP (section 2.4.2 in text)

Fitting a long-term trend using a linear or polynomial function of time in JMP can be done using Fit Y by X ($y_t$ vs. $t$) and options from the Bivariate Fit pull-down menu above the resulting scatterplot.

Example: Beverage Sales


Examining Residuals from Cubic Fit

What are residuals?


After saving the residuals to the data table, we can plot them vs. time as in the Diagnostic Plots above.

The time series of residuals after using a cubic polynomial to model the long-term trend in beverage sales appears to be stationary; however, there is clearly a seasonal/cyclic pattern in the residuals. To effectively model this time series for the purpose of making forecasts, we would need to model this important structure as well. As this series appears to be stationary, we could look at the estimated ACF for the residuals using Analyze > Modeling > Time Series and then set up the dialog box as shown below.


ACF plot of the residuals after removing long term trend using a cubic polynomial.

Comments:

Seasonal Decomposition of a Time Series – (seasonal + trend + error)

There are several different methods for extracting and graphically displaying the long-term and seasonal/cyclic trends in a time series. The basic idea is to break the time series into a seasonal/cyclic trend + long-term trend + random error, i.e.

$$y_t = S_t + L_t + e_t$$

assuming an additive structure. The structure could also be multiplicative, i.e.

$$y_t = S_t L_t e_t$$

in which case we could simply take the natural logarithm of the time series to achieve an additive structure instead, i.e.

$$\ln(y_t) = \ln(S_t L_t e_t) = \ln(S_t) + \ln(L_t) + \ln(e_t) = S_t^* + L_t^* + e_t^*$$

which is additive in the log scale!

There is not an automated way to estimate the long-term and seasonal trends in JMP (there is in R, which we will see later), although we can estimate the components using readily available basic modeling approaches. We examine this process using the U.S. beverage sales time series.

Example: Beverage Sales

We generally begin by estimating or extracting the long-term trend ($L_t$) first. As we have seen above, a cubic polynomial in time ($t$) does a reasonably good job of this. We can save the predicted values (i.e., the estimated $L_t$ values) and the residuals from this fit. The residuals from this first fit give

$$y_t - \hat{L}_t \approx S_t + e_t$$

We can then use the residuals from the long-term trend fit to estimate the seasonal trend ($S_t$). To save the fitted values (the $L_t$ estimates) and the residuals from fitting the long-term trend, select Save Predicted and Save Residuals from the Polynomial Fit pull-down menu below the time series plot, as shown below.
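What Save Predicted and Save Residuals store for the cubic fit can be sketched as an ordinary least-squares fit of $y_t$ on $1, t, t^2, t^3$. This is a minimal sketch under stated assumptions: the helper names `solve_linear_system` and `polynomial_trend` are hypothetical, and time is centered and rescaled purely for numerical stability. A cubic in the rescaled time is still a cubic in $t$, so the fitted values are the same as for the raw-$t$ fit.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def polynomial_trend(y, degree=3):
    """Least-squares fit of y_t on 1, t, ..., t^degree for t = 1, ..., T.

    Returns (fitted, residuals): fitted plays the role of L_hat_t and the
    residuals are y_t - L_hat_t.
    """
    T = len(y)
    n_coef = degree + 1
    if T <= n_coef:
        raise ValueError("need more observations than coefficients")
    centre, half_range = (T + 1) / 2, (T - 1) / 2
    u = [(t - centre) / half_range for t in range(1, T + 1)]  # rescaled time
    X = [[ui ** power for power in range(n_coef)] for ui in u]
    # Normal equations (X'X) beta = X'y.
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(n_coef)]
           for i in range(n_coef)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(n_coef)]
    beta = solve_linear_system(XtX, Xty)
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    return fitted, residuals
```

Usage, with a hypothetical list `sales`: `L_hat, y_minus_L = polynomial_trend(sales, degree=3)`.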

Comments:


Using the residuals from the long-term trend, which are now saved to the data table, we can estimate the seasonal trend ($S_t$) as follows. Select Analyze > Fit Model and put the residuals from the long-term trend in the Y box and Month (ordinal or nominal) in the Construct Model Effects box.

Note: I renamed the residuals from the long-term trend fit to yt – Lt and the predicted values to Lt.

You will get lots of output that we are not going to concern ourselves with at this time. All we are going to do is save the predicted values from this fit (which will be our estimate of the seasonal trend $S_t$) and the residual values (which will be our estimated error term $e_t$). This is done from the Fit Model output as shown below.
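With Month as the only (nominal or ordinal) effect, the least-squares predicted value for each row is just the average detrended value for that row's month, so the saved $\hat{S}_t$ and $\hat{e}_t$ columns can be sketched as below. The function name `seasonal_means` is hypothetical, and the input lists stand in for the yt – Lt and Month columns.

```python
from collections import defaultdict


def seasonal_means(detrended, months):
    """Estimate S_t as the mean detrended value for each month.

    Returns (s_hat, e_hat): s_hat[t] is the mean for month[t], and
    e_hat[t] = detrended[t] - s_hat[t] is the estimated error term.
    """
    groups = defaultdict(list)
    for value, month in zip(detrended, months):
        groups[month].append(value)
    means = {month: sum(vals) / len(vals) for month, vals in groups.items()}
    s_hat = [means[month] for month in months]
    e_hat = [value - s for value, s in zip(detrended, s_hat)]
    return s_hat, e_hat


# Hypothetical detrended values for two "years" of a 3-month cycle.
s_hat, e_hat = seasonal_means([1, 2, 3, 4, 5, 6], [1, 2, 3, 1, 2, 3])
print(s_hat, e_hat)
```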


In the final data table I named these columns St and et, respectively. A portion of the final spreadsheet is shown below.

We can then use Graph Builder to visualize our estimated 𝐿𝑡 , 𝑆𝑡 , and 𝑒𝑡 .


Adding our estimated long-term and seasonal trends together should match the original time series fairly well. You can use the JMP calculator to add Lt and St together and plot the result along with the original series, using Overlay Plot to compare them.

The time series and ACF plots for the error series ($e_t$) are shown below. Does the error series appear stationary?


Regression Models for Seasonal Time Series

(see Sec 2.4.2 pgs. 41-42)

Another approach is to incorporate into our regression model a seasonal term consisting of the trigonometric functions sine and cosine. For example, in the trend + season + error approach above we first used a cubic fit to remove the long-term trend. We could add a seasonal component to this regression model as follows, assuming a 12-month period for the seasonality of beverage sales.

$$E(y_t) = E(y \mid t) = \beta_0 + \beta_1 t + \beta_2 t^2 + \beta_3 t^3 + \beta_4 \sin\left(\frac{2\pi t}{12}\right) + \beta_5 \cos\left(\frac{2\pi t}{12}\right)$$

I think it is a bit premature to introduce this type of modeling, as this is a fairly complicated multiple regression model, so I will just show the resulting fit from this model and compare it to the original time series.
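To see what the model above asks the regression to use, here is a minimal sketch that builds the six predictor values for a single time point and evaluates the mean function for a given coefficient vector. The function names `harmonic_trend_row` and `mean_function` are hypothetical, and any coefficient values you pass in would have to come from an actual fit; none are given here.

```python
import math


def harmonic_trend_row(t, period=12):
    """Predictor values [1, t, t^2, t^3, sin(2*pi*t/period), cos(2*pi*t/period)]
    for the cubic-trend-plus-harmonic model at time t."""
    angle = 2 * math.pi * t / period
    return [1.0, t, t ** 2, t ** 3, math.sin(angle), math.cos(angle)]


def mean_function(beta, t, period=12):
    """E(y_t) = beta_0 + beta_1 t + ... + beta_5 cos(2*pi*t/period)."""
    return sum(b * x for b, x in zip(beta, harmonic_trend_row(t, period)))


print(harmonic_trend_row(3))
```

Because the sine and cosine columns repeat every 12 time periods, the fitted curve has the same seasonal shape in every year, while the cubic terms carry the long-term trend.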

The regression model with a cubic trend and harmonic terms based on the sine and cosine functions with period d = 12 months seems to fit the time series fairly well. We will examine regression models in more detail in Chapter 3!


Differencing a Time Series to Create Stationarity

(see section 2.4.2 – Example 2.6 found on pgs. 36 – 38)

Example 1: Blue and Gorgonzola Cheese Production

A time series plot for this series is shown below with a linear fit to the obvious long-term trend, i.e., $E(y_t) = E(y \mid t) = \beta_0 + \beta_1 t$.

Selecting Plot Residuals from the Linear Fit pull-down menu produces the plots below.

Comments:


Another approach to detrending a time series is differencing, which involves forming a new time series ($\nabla y_t$) by taking successive differences of the original time series,

$$\nabla y_t = y_t - y_{t-1}$$

where $\nabla$ is called the backward difference operator.

Another way to express this difference is to use the backshift operator, B, defined as

$$B y_t = y_{t-1}$$

so using this operator we define the differenced time series as follows:

$$\nabla y_t = (1 - B)y_t = y_t - y_{t-1}$$

Sometimes it is necessary to difference more than once to remove a trend from a time series. (Warning: the second difference involves a bit of algebra!)

$$\nabla^2 y_t = \nabla(\nabla y_t) = (1 - B)^2 y_t = (1 - 2B + B^2)y_t = y_t - 2y_{t-1} + y_{t-2}$$

In general, powers of the backshift operator (B) and the backward difference operator ($\nabla$) are defined as:

$$B^d y_t = y_{t-d}$$

$$\nabla^d y_t = (1 - B)^d y_t$$

Fortunately we rarely need to use d > 2 for either of these operators. One exception, however, is when a series has a strong seasonal monthly pattern (e.g., beverage sales). In such cases a difference of the form

$$y_t - y_{t-12}$$

might be needed to remove the seasonal pattern from the time series. For this purpose, we define the lag-d seasonal difference operator $\nabla_d$ (note: the d is a subscript!):

$$\nabla_d y_t = (1 - B^d)y_t = y_t - y_{t-d}$$

For example, to remove a monthly seasonal trend via differencing we could use

$$\nabla_{12} y_t = (1 - B^{12})y_t = y_t - y_{t-12}$$
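A minimal sketch of these operators on a plain list, playing the role of the Dif operator mentioned earlier: `lag=1` gives $(1 - B)y_t$, applying it twice gives $(1 - B)^2 y_t$, and `lag=12` gives $(1 - B^{12})y_t$. The function name `difference` is hypothetical, and `None` stands in for the empty cells at the top of a differenced column.

```python
def difference(series, lag=1):
    """Lag-d difference y_t - y_{t-d} (d = lag).

    Rows without a value d rows earlier, or involving a missing value,
    are returned as None, so the function can be applied repeatedly.
    """
    if lag < 1:
        raise ValueError("lag must be >= 1")
    out = []
    for t, value in enumerate(series):
        if t < lag or value is None or series[t - lag] is None:
            out.append(None)
        else:
            out.append(value - series[t - lag])
    return out


y = [1, 4, 9, 16, 25]                # a quadratic trend
print(difference(y))                 # first difference (1 - B)y_t
print(difference(difference(y)))     # second difference: y_t - 2y_{t-1} + y_{t-2}
```

The second difference of this quadratic is constant, matching the expansion $y_t - 2y_{t-1} + y_{t-2}$ above (for example, $9 - 2(4) + 1 = 2$).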

We now consider some examples of differencing to remove trend.


Example 1: Blue and Gorgonzola Cheese Production (cont’d)

This time series does not exhibit a repeating seasonal pattern, so we start with a first difference. To do this in JMP, we add a new column to our spreadsheet and specify that we wish to use a formula to define the contents of this column.

After renaming the new column using the backshift operator notation (1-B)yt and plotting the resulting first-differenced time series, we see the following.

Clearly the trend has been removed and the series appears to be stationary. We can also examine an ACF plot of the first-differenced series.


Comments:

Example 2: U.S. Beverage Sales (again)

When using differencing to remove both the seasonal pattern and the long-term trend evident in this time series, it is important to FIRST remove the seasonal trend using a lag-d seasonal difference operator, then remove the long-term trend using first (and possibly second) differences of the deseasonalized series. Taking the seasonal difference first lets us check whether it has already removed the trend, in which case a further first difference is unnecessary.

The monthly pattern within each year appears to be fairly consistent from year to year, so a lag-12 seasonal difference should remove the seasonality in this time series. To do this, add a new column and use Formula to specify a lag-12 difference as shown below.


The lag-12 seasonally differenced time series $(1 - B^{12})y_t$ is shown below.

Is the seasonal structure gone, i.e., has lag-12 seasonal differencing effectively deseasonalized the time series?

Does this series appear stationary?

Does it still seem to have some structure that might need to be removed using a first difference?


Next, we consider a first difference of the deseasonalized time series using the JMP calculator, as shown below.
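Written with the backshift operator, the seasonal (lag-12) difference followed by a first difference expands as follows, using $B^d y_t = y_{t-d}$:

$$(1 - B)(1 - B^{12})y_t = (1 - B - B^{12} + B^{13})y_t = y_t - y_{t-1} - y_{t-12} + y_{t-13}$$

Each value of the doubly differenced series therefore involves four original observations, which is why the first 13 rows of the new column are empty. The operator product is the same in either order; the reason for taking the seasonal difference first is to judge whether the extra first difference is needed at all.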

A time series plot of the seasonal (lag-12) and trend (first) differenced time series is shown below, along with an ACF plot.

Comments:


Which approach to detrending a time series is more appropriate?

In the cheese production example, is it better to fit a linear trend and examine a time series consisting of the residuals from this fit, OR is it better to examine the first-differenced time series $\nabla y_t = (1 - B)y_t = y_t - y_{t-1}$?

In the U.S. beverage sales example, is it better to use a cubic polynomial along with a monthly adjustment, as we did in the (trend + seasonal + error) approach, or to use differencing?


One-Step-Ahead Forecasts Example (text pgs. 54-57)

This is the analysis of the one-step-ahead forecast errors example (Table 2.3, pg. 54). The question is whether these one-step-ahead forecast errors are uncorrelated, have constant variance, and are normally distributed. The output below is from analyzing these errors using Analyze > Distribution. The errors certainly appear to have a normal distribution, and the Shapiro-Wilk goodness-of-fit test fails to find a significant departure from normality (p > .1974).

The Ljung-Box Q statistic is provided for all K up to 25. None of the p-values are significant (all p > .7289), suggesting that none of the autocorrelations ($\rho_k$) for k = 1, …, 25 are significantly different from zero.
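A minimal sketch of how the Ljung-Box statistic accumulates over lags, assuming the standard form $Q(K) = T(T+2)\sum_{k=1}^{K} r_k^2/(T-k)$, where $r_k$ are the sample autocorrelations of the forecast errors. Each $Q(K)$ is compared with a chi-square distribution with K degrees of freedom; computing the p-value needs a chi-square distribution function, which is omitted here. The function name `ljung_box_q` is hypothetical.

```python
def ljung_box_q(acf, T):
    """Ljung-Box statistics Q(K) for K = 1, ..., len(acf).

    acf[k - 1] holds the sample autocorrelation r_k of a series of length T.
    Q(K) = T (T + 2) * sum_{k=1}^{K} r_k^2 / (T - k)
    """
    q_values = []
    running = 0.0
    for k, r in enumerate(acf, start=1):
        running += r * r / (T - k)
        q_values.append(T * (T + 2) * running)
    return q_values


# Hypothetical small autocorrelations for a series of length 50.
print(ljung_box_q([0.1, 0.2], 50))
```

Because every squared autocorrelation adds to the running sum, $Q(K)$ never decreases as K grows; small values relative to the chi-square reference (large p-values) are what we expect from uncorrelated errors.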


Comparing Models – $R^2$, $R^2_{adj}$, and AIC

Consider again the regression model below that we fit to the U.S. beverage sales time series:

$$E(y_t) = E(y \mid t) = \beta_0 + \beta_1 t + \beta_2 t^2 + \beta_3 t^3 + \beta_4 \sin\left(\frac{2\pi t}{12}\right) + \beta_5 \cos\left(\frac{2\pi t}{12}\right)$$

The plot and output below contain visual and numeric information regarding the quality of this model's fit to the data.
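A minimal sketch of the three fit measures computed from the observed values, the fitted values, and the number of estimated parameters p (p = 6 for the model above). It assumes $R^2 = 1 - SSE/SST$, $R^2_{adj} = 1 - \frac{SSE/(T-p)}{SST/(T-1)}$, and the per-observation form $AIC = \ln(SSE/T) + 2p/T$, which we take to be the text's definition. JMP reports a likelihood-based AICc on a different scale, so only compare AIC values computed the same way. The function name `fit_summary` is hypothetical.

```python
import math


def fit_summary(y, fitted, p):
    """Return (R^2, adjusted R^2, AIC) for a model with p estimated parameters."""
    T = len(y)
    ybar = sum(y) / T
    sse = sum((obs - fit) ** 2 for obs, fit in zip(y, fitted))
    sst = sum((obs - ybar) ** 2 for obs in y)
    r2 = 1 - sse / sst
    r2_adj = 1 - (sse / (T - p)) / (sst / (T - 1))
    aic = math.log(sse / T) + 2 * p / T
    return r2, r2_adj, aic


# Hypothetical observed and fitted values from a 2-parameter model.
print(fit_summary([1, 2, 3, 4], [1.1, 1.9, 3.2, 3.8], p=2))
```

Adding parameters can never lower $R^2$, but $R^2_{adj}$ and AIC both charge for each extra parameter, which is why they are preferred for comparing models of different sizes.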

Comments:


Split-Sample Approach (brief example)

In this example I fit the regression model used above to all but the last two years of beverage sales and then used that model to predict the two years that were left out. Thus the sample has been split.

The plot below shows the forecasts for the last two years.

The table below contains the forecasts (X’s) and the forecast errors.


Using the column labeled et(1), we could compute the various measures of forecast error performance: ME, MAD, MSE, MPE, and MAPE.
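A minimal sketch of these five measures, assuming forecast errors $e_t = y_t - \hat{y}_t$ (the et(1) column) and relative errors $100\,e_t / y_t$ for MPE and MAPE. The function name `forecast_error_measures` and the example numbers are hypothetical.

```python
def forecast_error_measures(actual, forecast):
    """ME, MAD, MSE, MPE and MAPE for forecast errors e_t = y_t - yhat_t."""
    errors = [a - f for a, f in zip(actual, forecast)]
    n = len(errors)
    pct = [100 * e / a for e, a in zip(errors, actual)]  # relative errors in %
    return {
        "ME": sum(errors) / n,                    # mean error (bias)
        "MAD": sum(abs(e) for e in errors) / n,   # mean absolute deviation
        "MSE": sum(e * e for e in errors) / n,    # mean squared error
        "MPE": sum(pct) / n,                      # mean percent error
        "MAPE": sum(abs(p) for p in pct) / n,     # mean absolute percent error
    }


print(forecast_error_measures([100, 200], [90, 210]))
```

ME and MPE let positive and negative errors cancel, so they measure bias; MAD, MSE, and MAPE do not, so they measure the overall size of the errors.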

I have saved this spreadsheet to the course website as Beverage Sales (forecast errors).JMP. To view the formula used in a column, right-click at the top of the column and click on Formula. The Col Sum() function used in many of them is located in the Statistical menu in the JMP Calculator, and the absolute value function, e.g. |et(1)|, is found in the Numeric menu.
