p05-meganca

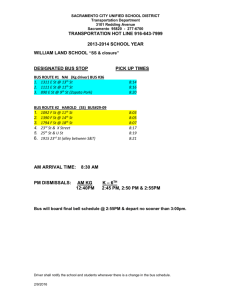

Uncertain Data for Bus Stop Identification

Meg Campbell

Blind users of public transit still face challenges finding bus stops in areas that they do not know well. We would like to extend OneBusAway to provide basic information about each stop that would allow blind users to locate it more readily (e.g., markers such as bus shelters, benches, a description of the bus stop pole, and other physical information about the stop that can be felt rather than seen).

Metro possesses some information about bus stops beyond what they make publicly available on their site. However, this information is incomplete, frequently out of date, and contains only some of the fields we need. We can supplement it with information obtained from crowdsourcing, where individual users provide feedback about the current state of a bus stop, giving us more up-to-date data that Metro lacks.

One approach to obtaining this data that we have been exploring is using Mechanical Turk (MTurk) to obtain responses. Bus stops are identified on Google Maps and then presented to the worker in Google Street View. The workers are asked to identify whether certain markers are present or not and to locate them on the screen, giving GPS coordinates for each labeled object.

Fig 1: The interface used by workers on MTurk to label stop landmarks

Responses from many workers are then aggregated to give probabilistic results (e.g., 75% of workers believe there is a bus shelter at a particular stop). This MTurk study was performed as a joint project between UW and the University of Maryland, covering a subset of bus stops in the DC and Seattle areas. It does not provide comprehensive data for every Seattle bus stop but serves as a preliminary data source to evaluate MTurk as a means of obtaining this information.

Data Collection

Because this project is a continuation of outside research, the early part of my work was focused on completing data collection.
We chose audit areas around UW, downtown Seattle, Washington DC, and College Park, Maryland to use as the initial test of the MTurk data collection method. An area of several square miles was chosen in advance for each. Then all positions marked on Google Maps as bus stops were used as data points and presented as panoramic views to the workers on MTurk. Additionally, we visited each stop on the list in person and took photos exhaustively documenting the current state of the bus stop.

There were two goals to this phase of the data collection. First, we wanted to evaluate how accurate the MTurk workers were at applying labels to the given Google Maps image. Second, we wanted to evaluate how accurately the Google data reflects the current state of the bus stops in those areas. To accomplish this, three sets of data were collected for each stop: the MTurk workers’ labels from the interface, the researchers’ labels from the same MTurk interface, and a “ground truth” dataset produced by the researchers manually counting bus stop attributes from current photos.

We found the labels produced by the MTurk workers to be largely accurate against the Google Maps data when enough workers labeled a single stop; this will be discussed in further detail below. On average, I found that about 17.6% of Seattle bus stops identified by Google Maps were false positives that were not present in Google Street View. Of the bus stops found in the physical survey, 93.7% were identified on Google Maps.

MTurk Data

Data collection on MTurk is conducted as individual assignments, for which workers are paid when the whole assignment is completed. A single “assignment” in the BusStopLabeler was defined as 14-16 randomly chosen labeling tasks, where each labeling task refers to completely labeling a single stop. The full schema of this data is provided in the appendix below.

Fig 2: Subset of the relevant tables and fields from the MTurk data tables

For the purposes of aggregating the data afterwards, the fields shown in Figure 2 are the most relevant ones. For each bus stop, the specific labeling task that produced data for it can be found by PanoramaID. These tasks are part of a larger assignment, which may or may not have been completed (meaning all tasks in the assignment were finished and submitted). For the purposes of our study we only considered labels from completed assignments, in order to avoid cases in which some labels had been placed but the worker had not finished the full task.

Each labeling task is associated with some number of labels, which contain both a GIS location and the type of label used. A label may also be flagged as deleted if the user placed the label but withdrew it prior to submission. Only undeleted labels are considered for the purpose of this study.

In order to produce a full table of the probabilistic traits of a bus stop, first all labels for each LabelingTask must be collected into a single row representing that specific task’s view of the bus stop. The null columns, meaning no labels were placed for a given attribute, must be replaced with explicit 0s in order to preserve the overall counts when aggregating over all rows. Once rows of data representing each labeling task have been generated, these can be grouped by the internal bus stop ID and processed into the overall probabilistic expectations for the stop.

At this step I kept only the most common guess for each trait. For example, if the beliefs stated that there was a 60% probability of 2 shelters and a 40% probability of 1 shelter, I kept only the most likely choice and its probability and discarded the rest of the distribution. Ties were broken arbitrarily by the max function.
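
The aggregation just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used in the study: the attribute names in `LABEL_TYPES`, the tuple layout of the input rows, and the function names are all hypothetical, and the input is assumed to be already filtered to undeleted labels from completed assignments.

```python
from collections import Counter, defaultdict

# Hypothetical attribute names; the real label types come from the MTurk schema.
LABEL_TYPES = ("shelter", "bench", "pole")

def task_rows(tasks, labels):
    """Build one row per labeling task, with explicit 0s for unlabeled attributes.

    tasks  -- iterable of (stop_id, task_id) for tasks in completed assignments
    labels -- iterable of (stop_id, task_id, label_type) for undeleted labels
    """
    rows = {task: dict.fromkeys(LABEL_TYPES, 0) for task in tasks}
    for stop_id, task_id, label_type in labels:
        rows[(stop_id, task_id)][label_type] += 1
    return rows

def stop_beliefs(rows):
    """Per stop and attribute: (most common count, share of tasks reporting it)."""
    by_stop = defaultdict(list)
    for (stop_id, _task_id), counts in rows.items():
        by_stop[stop_id].append(counts)
    beliefs = {}
    for stop_id, task_counts in by_stop.items():
        beliefs[stop_id] = {}
        for label_type in LABEL_TYPES:
            tally = Counter(counts[label_type] for counts in task_counts)
            value, votes = tally.most_common(1)[0]
            beliefs[stop_id][label_type] = (value, votes / len(task_counts))
    return beliefs

# Five tasks for one stop: three workers mark 2 shelters, two mark 1 shelter.
tasks = [("stop1", t) for t in range(5)]
labels = [("stop1", t, "shelter") for t in (0, 0, 1, 1, 2, 2, 3, 4)]
beliefs = stop_beliefs(task_rows(tasks, labels))
# beliefs["stop1"]["shelter"] is (2, 0.6); beliefs["stop1"]["bench"] is (0, 1.0)
```

The explicit zeros matter here: without them, the two tasks that placed no bench label would drop out of the tally instead of counting as votes for "0 benches". When two counts are equally common, `most_common` keeps the one seen first, which is one way of breaking ties arbitrarily.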
Such ties were very uncommon; with more than 15 respondents most bus stops tended towards pronounced trends of certainty, with exceptions discussed in more detail below.

Fig 3: MTurk probability table (subset of columns)

MTurk and Metro

With the table of MTurk probabilities assembled, the MTurk data was joined to the Metro data to combine the two into a single most probable deduction about the stop.

Join

For applications using geographical data the standard approach is to use a spatial database. Using the latitude and longitude coordinates, the tables were joined using a radius from the official (Metro) location of the stop (Mamoulis). The initial approach used a very strict radius because stops were frequently so close together. Using a 10 foot radius (0.00003 degrees latitude and 0.00004 degrees longitude), the first approach successfully joined only 4% of the MTurk data with the Metro database.

Ideally, the aim of the project would be to join all data obtained through crowdsourcing with all of the official Metro data. However, false positives would be an extremely bad outcome for this kind of work. Telling a user a patently false thing about a stop (e.g., it has 5 benches, when in actuality it has 2) is actually worse than providing no information, because it confuses the process of finding the stop. Therefore, the reliability of the information is crucial, and the join algorithm needs to err heavily on the side of omitting information rather than using more information but allowing false positives.

When the radius requirements were substantially relaxed (30 feet), the join still used only 42% of the MTurk data and also produced false positives. In order to cut down on the false positives, a variable joining method was suggested, which would use a stricter join radius in areas where bus stops were known to be very close together (such as downtown) and a wider radius in other areas.
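
A variable-radius join of this kind might look like the following sketch. Several pieces are assumptions made for illustration rather than details from the study: the feet-per-degree constant is an approximation, the bounding box standing in for "downtown" is hypothetical, 30 feet is assumed as the wider radius, and the rule that a match is kept only when exactly one Metro stop is in range is one conservative way to favor omission over false positives. A real deployment would more likely use a spatial database query.

```python
import math

FEET_PER_DEGREE_LAT = 364_000  # approximate value, assumed for this sketch

def within_radius(a, b, radius_ft):
    """True if points a and b, given as (lat, lon) in degrees, are within radius_ft.

    Uses a flat-earth approximation, which is adequate at bus-stop scale.
    """
    lat_a, lon_a = a
    lat_b, lon_b = b
    feet_per_degree_lon = FEET_PER_DEGREE_LAT * math.cos(math.radians(lat_a))
    dy = (lat_a - lat_b) * FEET_PER_DEGREE_LAT
    dx = (lon_a - lon_b) * feet_per_degree_lon
    return math.hypot(dx, dy) <= radius_ft

def join_stops(metro_stops, mturk_stops, radius_for):
    """Match each MTurk stop to a Metro stop only when exactly one is in range.

    radius_for(lat, lon) returns the join radius in feet around an official
    Metro location. Ambiguous or unmatched MTurk stops are dropped, not guessed.
    """
    matches = {}
    for mturk_id, point in mturk_stops.items():
        candidates = [
            metro_id
            for metro_id, official in metro_stops.items()
            if within_radius(official, point, radius_for(*official))
        ]
        if len(candidates) == 1:
            matches[mturk_id] = candidates[0]
    return matches

def radius_for(lat, lon):
    """Strict radius inside a hypothetical downtown box, wider elsewhere."""
    downtown = 47.600 <= lat <= 47.615 and -122.345 <= lon <= -122.325
    return 15 if downtown else 30
```

At Seattle's latitude this approximation gives roughly 0.00003 degrees of latitude and 0.00004 degrees of longitude per 10 feet, which agrees with the figures quoted above.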
Using this tuning, and specifically keeping the strict (15 foot) radius for downtown only, the join utilized 47% of the MTurk data without any false positives. Manual analysis of the joined bus stop data indicated that every stop was correctly joined, with 100% accuracy, even for bus stops as close as across the street from each other. However, a large amount of the MTurk data could not be used without compromising accuracy in this step.

Simple Stop Info

The resulting data from the join has probabilistic spatial information. The primary purpose of the application is to give information about each specific stop; therefore, the main focus of the research is on this step, generating a “most likely” description of a specific stop.

A simple algorithm was applied by assigning weights to the value of the information (Sarkar). The starting assumption is that the Metro data is a reliable source, but may be outdated. Metro data marked as having been updated this year received a likelihood weighting of 0.9; data from the previous year received 0.7; and anything earlier received 0.5. MTurk data was weighted according to the probability assigned to each feature. For features about which data exists in both the Metro and MTurk databases (such as shelters, benches, etc.), the probabilities of the two assignments were compared, and the more probable choice was stored along with the number of features as the “most likely” bus stop scenario.

Fig 4: Bus stop summary

The weights of this algorithm could be tuned further in order to weight the selection of information differently. However, in practice, the Metro data virtually never improved on the bus stop expectations generated by the MTurk data.
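
As a rough sketch of this selection rule, the comparison could be written as below. The function names and argument layout are hypothetical, and preferring the MTurk value when the two weights are equal is an assumption; the weights 0.9, 0.7, and 0.5 are the ones given above.

```python
def metro_weight(last_updated_year, current_year):
    """Likelihood weight for a Metro value, based on how recently it was updated."""
    age = current_year - last_updated_year
    if age <= 0:
        return 0.9  # updated this year
    if age == 1:
        return 0.7  # updated last year
    return 0.5      # anything earlier

def most_likely(metro_value, metro_year, mturk_value, mturk_prob, current_year):
    """Return (value, probability) from whichever source is more probable.

    A value of None means that source has no data for the feature.
    Equal weights fall to MTurk (an assumption of this sketch).
    """
    weight = metro_weight(metro_year, current_year)
    if metro_value is not None and (mturk_value is None or weight > mturk_prob):
        return metro_value, weight
    return mturk_value, mturk_prob

# Metro says 1 shelter (updated last year); 75% of workers say 2 shelters.
choice = most_likely(1, 2012, 2, 0.75, current_year=2013)
# choice is (2, 0.75), because 0.75 beats the Metro weight of 0.7
```

Changing the constants returned by `metro_weight` is all that is needed to experiment with a different weighting.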
The best use of these weights would be to set them to very low probabilities (e.g., 0.5, 0.3, and 0.2) so that they would only provide data when certainty was very low on MTurk; for example, for bus stops that could not be easily labeled in the Turk interface because the image was obscured by something such as a bus parked in front of the stop.

Path Search

The primary intent of the research was to provide a reliable description of the attributes of specific bus stops. However, once each stop is adequately characterized, it is possible to look at paths of bus stops in order to get an overall perspective on their accessibility.

Hua and Pei provide some alternative algorithms that describe probabilistic queries on paths. These are designed to provide greater accuracy than earlier approaches, which considered simply the average properties between two endpoints. However, unlike Hua’s examples, the paths generated by a Metro bus stop search are inherently discrete rather than continuous. Once a user is on the bus, all buses are effectively equal in terms of accessibility. The variances are between specific bus stops (and, beyond the scope of the available data, on the paths between bus stops). The probability of a single bus stop being accessible does not impact in any way the probability that the next stop on the route will be accessible.

Therefore, in practice, discretizing the algorithm in this way essentially results in an accessibility “score” for a route rather than an overall probability that the route is accessible. Partially this is because there is no meaningful way to provide a probability answer to this question. All stops are functionally accessible to blind Metro users in that they can be reached; we are simply trying to evaluate the degree to which they can be easily identified.
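
A minimal sketch of such a route score follows. It assumes each stop has already been summarized as a (feature count, strength of belief) pair, that a route's score is the sum of the products of those pairs, and that only the paths with the fewest stops are ranked against each other, as the following paragraphs define. The function names and data layout are hypothetical.

```python
def route_score(stops):
    """Sum of feature_count * belief over the stops on one path."""
    return sum(count * belief for count, belief in stops)

def rank_shortest_paths(paths):
    """Keep only the paths with the fewest stops and rank them, best score first.

    paths maps a path name to its list of (feature_count, belief) pairs.
    """
    fewest = min(len(stops) for stops in paths.values())
    shortest = {name: stops for name, stops in paths.items() if len(stops) == fewest}
    return sorted(shortest, key=lambda name: route_score(shortest[name]), reverse=True)

paths = {
    "a": [(3, 0.5), (2, 0.5)],            # score 2.5
    "b": [(1, 1.0), (1, 0.5)],            # score 1.5
    "c": [(3, 1.0), (3, 1.0), (3, 1.0)],  # three stops, so not compared
}
ranking = rank_shortest_paths(paths)
# ranking is ["a", "b"]
```

Filtering to the shortest paths before scoring keeps the comparison restricted to paths of equal length.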

For a bus stop route P containing stops A, B, C, … the accessibility score is then defined as the sum of the products of each stop’s accessibility score and the strength of that belief,

$$A_P = A_A P_A + A_B P_B + A_C P_C + \cdots$$

where $A_X$ is the accessibility score of stop $X$ and $P_X$ is the strength of the belief in that score. Each individual stop’s accessibility score is the number of identifiable features present. For example, a bus stop with two benches and one shelter would have an accessibility score of 3.

Fig 5: Bus stop path accessibility rankings

A higher score represents a more probably identifiable string of stops. However, in order to account for variance in path length, only paths of equal length should ever be compared to each other. A shorter path will always be higher in “accessibility” than a longer path, because every stop identification increases uncertainty. Therefore, these queries identify the shortest paths (smallest number of stops) and compare only these paths to each other, assigning a route score to only these.

Results & Conclusions

The primary aim of this project is to generate an accurate listing of the attributes of a bus stop in order to make the stop more accessible to blind and low vision users of public transit. To do this, we obtained bus stop information both from Metro’s official records and from Mechanical Turk. The data from Metro is insufficient in its level of detail for our purposes, so the MTurk data, or other crowdsourced data, is necessary in order to fully describe the stop.

To determine the success of the MTurk data, a random sample of half of the Seattle bus stops in the set was chosen and the MTurk results were visually compared to photos of each stop. Of the bus stops chosen, the MTurk data correctly characterized the bus stop in all features 42.3% of the time. When the data was incorrect, 86.7% of the time the discrepancy resulted from an omission of features.

Fig 6: Errors in Stop Identification, MTurk data only (axes: Correct Number of Features vs. Delta Features Identified)

The vast majority of identification errors in stops came from failing to label features rather than adding extraneous labels. The MTurk data sample omitted 51.1% of the correct labels. Only 2.2% of labels placed were incorrect additional labels for features that were not present. In general, this trend is more desirable than the inverse; the requirement to not have false positives is much more important than the need to capture all data. However, the omissions are still substantial.

The hope would be that joining the MTurk data with the Metro data would correct some of these label omissions. However, in the subset of data viewed, the Metro join only improved the bus stop description 3.8% of the time. In 73.1% of cases it had no effect, while 23.1% of the time it actually made the MTurk estimation worse. In two thirds of those cases, it removed correctly labeled data.

Both data sets err heavily on the conservative side. In the case of MTurk, this is possibly because submitting extra labels takes more time and work, so it is uncommon for many extraneous labels to be posted to a stop. Because the MTurk estimate is conservative in the first place, one improvement to the join algorithm would be to only make modifications that add omitted labels, never ones that remove labels already placed.

In practice, the MTurk data had many more applicable fields than the Metro data. Declining to use the Metro data altogether in favor of crowdsourced data would not substantially damage the performance of the app. However, how to correctly associate the crowdsourced data by latitude and longitude with the official bus stop location data remains an issue, as the app must still associate these two pieces of information in order to present Metro-only data such as bus routes and times.

References

Hua, M., and Jian Pei. Ranking queries on uncertain data.
New York: Springer, 2011.

Mamoulis, N. Spatial data management. San Rafael, Calif: Morgan & Claypool Publishers, 2012.

Sarkar, S., & Dey, D. 2009. Relational Models and Algebra for Uncertain Data. In C. Aggarwal (Ed.), Managing and Mining Uncertain Data (45-73). New York, NY: Springer.

Appendix

SIS data: Fields and descriptions from Metro

Field Accessibility Level ADA Landing Pad ATIS Transfer Point Authorization Awning Bay Bearing Code Bollards Created By Cross Street Curb Curb Height Curb Paint Begin Date End Date Date Mapped Date Created Displacement Display X Display Y Ditch Extension Surface Extension Width FareZone From Cross Curb Info Sign Anchor Info Sign Intersection Juris

Comments All entries are changed to "Yes," "Limited," or "Not Access" when signs are ordered. Yes/No I don't think this is maintained in its source data. Defaults to GIS assignment; can overwrite from drop-down list. Refers to authorizing business or jurisdiction that owns the property. Yes/No P&R or Transit Center bay assignment Direction of coach travel. N/S/E/W Yes/No Either Planner name or "SIS data load" Defaults to GIS assignment; can overwrite from drop-down list. Color(s) of paint; drop-down list. Date the current Stop Status began. Many stops have multiple "instances" of temporal data. Default is 1/1/4000; can overwrite Yes/No Drop-down list: asphalt, concrete, dirt, grass, landscape, stone, pavers, footing, other, gravel, unknown, none Freeform entry Defaults to GIS assignment; can overwrite from drop-down list. Drop-down list of choices "Info Signs" are stand-alone signs that don't have route information; drop-down list of sign types to choose from. Location of stop relative to On/Cross intersection: near side, far side, etc. Defaults to GIS assignment; can overwrite from drop-down list. Jurisdiction in which the stop is located; may not be property owner.
See "Authorization.") Last Edited Latitude Layover Group Longitude News Box On Street Other Covered Area Other Transit Sign Paint Length Parking Strip Surface Percent From % Passing Pullout Retaining Wall RFP District Ride Free Area Flag Rte Sign Post Anchor Rte Sign Post Type Rte Sign Type Rte Sign Mounting Dir Schedule Holder Shelters Shoulder Surface Shoulder Width Side Side Cross Side On Sidewalk Width Stop Owner Special Sign Stop Length Stop Status Stop Type Stop Name Date Not used currently Not used currently Street on which stop is located Can refer to overpass, etc., that protects riders from weather. Yes/No E.g. "towaway," "school" Length of curb paint; may differ from stop length Asphalt, Concrete, Dirt, Grass, Landscape, Stone, Pavers, Footing, Other, Gravel, Unknown, None Not used currently Not used currently; Yes/No Not used currently; Yes/No Route Facilities Planning District Defaults to No; only option Drop-down list: band, base plate, bolt, cncrt-asphlt, concrete, cncrt-earth, earth, none, unknown, foundation, moveable pad Drop-down list: 2in metal, 4in wood, light, none, pillar, strain, utility, unknown, art pole, not Metro Drop-down list of sign types Direction of sign relative to street: drop-down list - away, flush, toward… Drop-down list of options Freeform entry Not used currently; same options as Parking Strip Surface Freeform entry Side of street in GIS Location relative to cross street the stop is located - N/S/E/W Side of On Street on which stop is located - N/S/E/W (E.g., On Street runs N/S, "Side On" would be on E or W of On Street Freeform entry Transit Agency "owning" stop: Metro, ST, PT, CT, Seattle, etc. Signs for operators; drop-down list Freeform entry Active, Planned, Closed, Inactive. Stop may have multiple instances of the same or different stop statuses depending on dates. Indicates if stop has special use or restrictions; drop-down list. "Public" name of stop for signage, enunciator, Trip Planner, etc. 
Street Address Stop Comment Strip Width Traffic Signal Trans Link ID Walkway Surface Xcoord Ycoord Zip Code Not used currently. Freeform Freeform entry; used to note details for which there are no fields available. Not used currently. Freeform Not used currently. Yes/No Not used currently; same options as Parking Strip Surface MTurk data: schema graphic from University of Maryland See separately submitted image for larger file.