Confidence Interval for Standardized Difference Between Means, Related Samples

The Point Estimate

Cohen defined dz as the mean difference score divided by the standard deviation of the

difference scores. The “z” was added to note that the unit of analysis is no longer X (the one

mean) or Y (the other mean) but their difference, Z. While I and others (Dunlap, Cortina,

Vaslow, & Burke, 1996) think it is most often not a good idea to use dz as an effect size

estimator, there may be cases where it is defensible. In such cases the confidence interval for

dz could be obtained in exactly the same manner that one would obtain the confidence interval

for d for a one sample test.

Why not use the standard deviation of the difference scores as the standardizer? Think

about the in the variance sum law: Y 1Y 2 Y21 Y2 2 2 Y 1 Y 2 . Now suppose that

you found a difference on a test of clerical accuracy between individuals tested after taking a

placebo and individuals tested after taking a sedating antihistamine. The difference in means is

3. Within each group the standard deviation is 10. You have independent samples. The d is

3/10 = .3. Now suppose that you had correlated samples with the same difference in means

and the same within-condition standard deviations. The correlation between samples will result

in the standard deviation of the difference scores being less than the standard deviation within

each group (again, remember the in the variance sum law). Suppose it is reduced to a value

of 7. If you were to use the standard deviation of the difference scores as the standardizer

when estimating d, you would obtain a value of 3/7 = .43 – but the difference between the

means is still just 3 points. Why should d = .3 with independent samples but .43 with correlated

samples when the difference between means is the same in both cases and the withinconditions standard deviations the same as well?

Programs to compute the point estimate:

Excel

SAS

SPSS

The Confidence Interval

You cannot use my Conf_Interval-d2.sas program (or my similar SPSS program) to

construct a confidence interval for d when the data are from correlated samples. With

correlated samples the distributions are very complex, not following the noncentral t. You can

2(1 r12 )

dˆ 2

construct an approximate confidence interval, dˆ zcc SE , where SE is

,

2(n 1)

n

but such a confidence interval is not very accurate unless you have rather large sample sizes. I

I got this from Kline’s Beyond Significance Testing, Chapter 4. I do have an SAS program that

uses this algorithm.

Algina & Kesselman (2003) provided a new method for computing confidence intervals

for the standardized difference between means. I shall illustrate that method here.

2

Run this SAS code:

options pageno=min nodate formdlim='-';

******************************************************************************;

title 'Experiment 2 of Karl''s Dissertation';

title2 'Correlated t-tests, Visits to Mus Tunnel vs Rat Tunnel, Three Nursing Groups

'; run;

data Mus; infile 'C:\D\StatData\tunnel2.dat';

input nurs $ 1-2 L1 3-5 L2 6-8 t1 9-11 t2 12-14 v_mus 15-16 v_rat 17-18;

v_diff=v_mus - v_rat;

proc means mean stddev n skewness kurtosis t prt;

var v_mus V_rat v_diff;

run;

*****************************************************************************;

Proc Corr Cov; var V_Mus V_Rat; run;

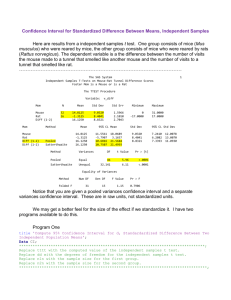

Get this output

-------------------------------------------------------------------------------------------------Experiment 2 of Karl's Dissertation

Correlated t-tests, Visits to Mus Tunnel vs Rat Tunnel, Three Nursing Groups

1

The MEANS Procedure

Variable

Mean

Std Dev

N

Skewness

Kurtosis

t Value

Pr > |t|

ƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒ

v_mus

22.7291667

10.4428324

48

-0.2582662

-0.3846768

15.08

<.0001

v_rat

13.2916667

10.4656649

48

0.8021143

0.2278957

8.80

<.0001

v_diff

9.4375000

11.6343334

48

-0.0905790

-0.6141338

5.62

<.0001

ƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒƒ

The CORR Procedure

Covariance Matrix, DF = 47

v_mus

v_rat

v_mus

v_rat

109.0527482

41.6125887

41.6125887

109.5301418

Pearson Correlation Coefficients, N = 48

Prob > |r| under H0: Rho=0

v_rat

v_mus

v_rat

0.38075

1.00000

0.0076

To obtain our point estimate of the standardized difference between mean, run this SAS code:

Data D;

M1 = 22.72917 ;

SD1 = 10.44283;

M2 = 13.29167 ;

SD2 = 10.46566;

d = (m1-m2) / SQRT(.5*(sd1*sd1+sd2*sd2)); run;

Proc print; var d; run;

Obtain this result:

Obs

d

3

1

0.90274

Using the approximation method first presented,

SE

.90274 2 2(1 .38075)

0.18567 . A 95% CI is .90274(1.96)(.18567) = [.539, 1.267].

2( 48 1)

48

From James Algina’s webpage, I obtained a simple SAS program for the confidence

interval. Here is it, with values from above.

*This program computes an approximate CI for the effect

size in a within-subjects design with two groups.

m2 and m1 are the means for the two groups

s1 and s2 are the standard deviations for the two groups

n1 and n2 are the sample sizes for the two groups

r is the correlation

prob is the confidence level;

data;

m1=22.7291667 ;

m2=13.2916667 ;

s1=10.4428324 ;

s2=10.4656649 ;

r= 0.38075 ;

n=48

;

prob=.95 ;

v1=s1**2;

v2=s2**2;

s12=s1*s2*r;

se=sqrt((v1+v2-2*s12)/n);

pvar=(v1+v2)/2;

nchat=(m1-m2)/se;

es=(m1-m2)/(sqrt(pvar));

df=n-1;

ncu=TNONCT(nchat,df,(1-prob)/2);

ncl=TNONCT(nchat,df,1-(1-prob)/2);

ul=se*ncu/(sqrt(pvar));

ll=se*ncl/(sqrt(pvar));

output;

proc print;

title1 'll is the lower limit and ul is the upper limit';

title2 'of a confidence interval for the effect size';

var es ll ul ;

run;

quit;

Here is the result:

ll is the lower limit and ul is the upper limit

of a confidence interval for the effect size

Obs

es

ll

ul

1

0.90274

0.53546

1.26275

4

4

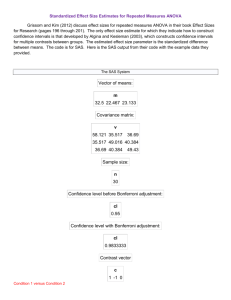

The program presented by Algina & Keselman (2003) is available at another of Algina’s

web pages . This program will compute confidence intervals for one or more standardized

contrasts between related means, with or without a Bonferroni correction, and with or without

pooling the variances across all groups. Here is code, modified to compare the two related

means from above.

* This program is used with within-subjects designs. It computes

confidence intervals for effect size estimates. To use the program one

inputs at the top of the program:

m--a vector of means

v--a covariance matrix in lower diagonal form, with periods for

the upper elements

n--the sample size

prob--the confidence level prior to the Bonferroni adjustment

adjust--the number of contrats is a Bonferroni adjustment to the

confidence level is requested. Otherwise adjust is set

equal to 1

Bird--a switch that uses the variances of all variables to calculate

the denominator of the effect size as suggested by K. Bird

(Bird=1). Our suggestion is to use the variance of those

variables involved in the contrast to calculate the denominator

of the effect size (Bird=0)

In addition one inputs at the bottom of the program:

c--a vector of contrast weights

multiple contrasts can be entered. After each, type the code

run ci;

proc iml;

m={ 22.72917 13.29167};

v={109.0527482 . ,

41.6125887 109.5301418};

do ii = 1 to nrow(v)-1;

do jj = ii+1 to nrow(v);

v[ii,jj]=v[jj,ii];

end;

end;

n=48

;

Bird=1;

df=n-1;

cl=.95;

adjust=1;

prob=cl;

print 'Vector of means:';

print m;

print 'Covariance matrix:';

print v;

print 'Sample size:';

print n;

print 'Confidence level before Bonferroni adjustment:';

print cl;

cl=1-(1-prob)/adjust;

print 'Confidence level with Bonferroni adjustment:';

print cl;

start CI;

pvar=0;

5

count=0;

if bird=1 then do;

do mm=1 to nrow(v);

if c[1,mm]^=0 then do;

pvar=pvar+v[mm,mm];

count=count+1;

end;

end;

end;

if bird=0 then do;

do mm=1 to nrow(v);

pvar=pvar+v[mm,mm];

count=count+1;

end;

end;

pvar=pvar/count;

es=m*c`/(sqrt(pvar));

se=sqrt(c*v*c`/n);

nchat=m*c`/se;

ncu=TNONCT(nchat,df,(1-prob)/(2*adjust));

ncl=TNONCT(nchat,df,1-(1-prob)/(2*adjust));

ll=se*ncl/(sqrt(pvar));

ul=se*ncu/(sqrt(pvar));

print 'Contrast vector';

print c;

print 'Effect size:';

print es;

Print 'Estimated noncentrality parameter';

print nchat;

Print 'll is the lower limit of the CI and ul is the upper limit';

print ll ul;

finish;

c={1 -1};

run ci;

quit;

Here is the output:

ll is the lower limit and ul is the upper limit

of a confidence interval for the effect size

Vector of means:

m

22.72917

13.29167

Covariance matrix:

v

109.05275 41.612589

41.612589 109.53014

6

Sample size:

n

48

Confidence level before Bonferroni adjustment:

cl

0.95

Confidence level with Bonferroni adjustment:

cl

0.95

Contrast vector

c

1

-1

Effect size:

-------------------------------------------------------------------------------------------------ll is the lower limit and ul is the upper limit

of a confidence interval for the effect size

es

0.9027425

Estimated noncentrality parameter

nchat

5.619997

ll is the lower limit of the CI and ul is the upper limit

ll

ul

0.5354583 1.2627535

Notice that this produces the same CI produced by the shorter program.

Recommended additional reading:

7

Algina, J., & Keselman, H. J. (2003). Approximate confidence intervals for effect sizes.

Educational and Psychological Measurement, 63, 537-553. DOI:

10.1177/0013164403256358

Dunlap, W. P., Cortina, J. M., Vaslow, J. B., & Burke, M. J. (1996). Meta-analysis of

experiments with matched groups or repeated measures designs. Psychological

Methods, 1, 170-177.

Grissom, R. J., & Kim, J. J. (2005). Effect sizes for research: A broad practical

approach. Mahwah, NJ: Erlbaum. – especially pages 67 and 68

Glass, G. V., & Hopkins, K. D. Statistical methods in education and psychology (2nd ed.),

Prentice-Hall 1984. Section 12.12: Testing the hypothesis of equal means with paired

observations, pages 240-243. Construction of the CI is shown on page 241, with a

numerical example on pages 242-243.

Kline, R. B. (2004). Beyond significance testing: Reforming data analysis methods in

behavioral research. Washington, DC: American Psychological Association. 325 pp.

{First edition. There is a second edition out now.}

Back to Wuensch’s Stats Lessons Page

Copyright 2015, Karl L. Wuensch - All rights reserved.

Fair Use of This Document