
Remote Data Audit for Robustness and Dynamic Integrity on Multi Cloud Storage

M. Ramesh*1, E. Suresh#2

*1 Department of Information Technology
SKP Engineering College, Tiruvannamalai-606611, Tamil Nadu

#2 Department of Information Technology
SKP Engineering College, Tiruvannamalai-606611, Tamil Nadu

Abstract- A multi-cloud is a cloud computing environment in which an organization provides and manages some internal and some external resources. Provable data possession (PDP) is an audit technique for ensuring the integrity of data in outsourced storage. Early remote data audit schemes focused on static data, whereas later schemes such as DPDP (Dynamic Provable Data Possession) support the full range of dynamic operations on the outsourced data, including insertions, modifications, and deletions. Robustness is required for both static and dynamic RDA schemes that rely on spot checking for efficiency.

This paper proposes an RDA (Remote Data Audit) mechanism for multi-clouds that supports scalable service and data migration, in which multiple cloud service providers collaboratively store and maintain the clients' data. RDA allows users to efficiently detect modifications of data stored at cloud servers. The audit service construction is based on techniques that support provable updates to outsourced data and timely anomaly detection. The method uses probabilistic queries and periodic verification to improve the performance of audit services. The security of the scheme is based on a multi-prover zero-knowledge proof system.

Keywords- Provable Data Possession, Interactive Protocol, Zero Knowledge, Audit Service, Multiple Cloud, Cooperative.

I. INTRODUCTION

In recent years, cloud storage services have become a fast-growing source of profit by providing storage for clients' data. A distributed cloud environment of this kind is called a multi-cloud (or hybrid cloud). It allows clients to access their resources remotely through interfaces such as the Web services provided by Amazon EC2.

Outsourcing storage raises a number of interesting challenges. One problem is to verify that the server continually and faithfully stores the entire file. The server is untrusted in terms of both security and reliability: it might maliciously or accidentally erase the data or place it onto temporarily unavailable storage. This could occur for numerous reasons, including cost savings or external pressures (e.g., government censure). The server might also accidentally erase some data and choose not to notify the client. The problem is exacerbated by factors such as the limited bandwidth between the client and the server, as well as the client's limited resources.

Cloud storage is a dynamic service provided by the server. Clients can pay a fee to obtain appropriate storage space for their own data. Because the storage device is not under the clients' control and the server is not fully trusted, clients have no way to prevent unauthorized individuals, or even the server itself, from accessing or tampering with their data. This is a great challenge for data integrity and confidentiality.

Remote Data Audit, also known as remote data checking (RDC), is a technique that allows checking the integrity of data stored at a third party, such as a Cloud Storage Provider (CSP). Especially when the CSP is not fully trusted, RDC can be used for data auditing. Security audit is an important means of tracing back and analyzing any activities, including data accesses, application activities, and so on.

Robustness means that the auditing scheme can mitigate arbitrary amounts of data corruption. Protecting against large modifications ensures that the CSP has committed the storage resources: only a small amount of space can be reclaimed undetectably, making it useless to delete data to save on storage costs or to sell the same storage multiple times. Protecting against small corruptions protects the data itself, not just the storage resource. For example, modifying a single bit may destroy an encrypted file or invalidate authentication information. Thus, robustness is important for all audit schemes that rely on spot checking, which includes the majority of static and dynamic RDC schemes.

An audit service for hybrid clouds should therefore not only detect abnormalities in a timely manner, but also consume few resources or allocate resources rationally. Hence, a new PDP scheme is desirable to accommodate these application requirements of hybrid clouds.
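The spot-checking argument in the robustness discussion above can be made concrete with the standard sampling estimate used in the PDP literature. The sketch below assumes that the auditor challenges c distinct blocks chosen uniformly at random out of n, of which t are corrupted; a check misses every corrupted block only if all c samples are intact, so the detection probability is 1 - C(n-t, c)/C(n, c). The function names are illustrative and not part of the scheme.

```python
from math import comb


def detection_probability(n: int, t: int, c: int) -> float:
    """Probability that a spot check of c distinct blocks, drawn uniformly
    at random from n blocks, hits at least one of the t corrupted blocks."""
    if not (0 <= t <= n and 0 <= c <= n):
        raise ValueError("require 0 <= t <= n and 0 <= c <= n")
    # The check misses every corrupted block only if all c samples come from
    # the n - t intact blocks (hypergeometric, sampling without replacement).
    return 1 - comb(n - t, c) / comb(n, c)


def blocks_to_challenge(n: int, t: int, target: float) -> int:
    """Smallest number of challenged blocks c whose detection probability reaches target."""
    for c in range(n + 1):
        if detection_probability(n, t, c) >= target:
            return c
    raise ValueError("target not reachable (e.g. t == 0 or target > 1)")


if __name__ == "__main__":
    n = 10_000
    for t in (100, 1):  # 1% of the blocks corrupted vs. a single corrupted block
        print(t, round(detection_probability(n, t, 460), 4))
```

With a single corrupted block the formula collapses to c/n (0.046 for c = 460 and n = 10,000), so spot checking alone almost never catches a one-bit change. This is why robustness has to come from an additional encoding rather than from sampling more blocks.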

III. CONTRIBUTIONS

1) Efficiency and Security: The plan proposed by the PDP is

II.EXITING SYSTEM

safer to rely on a non-symmetric key encryption will be clear,

The traditional cryptographic technologies for data

efficient in the use of symmetric key operations in the settings

integrity and availability, based on Hash functions and

(once) and the validation stage.However, our plan is more

signature schemes cannot support the outsourced data without

efficient than the PORs, because it does not require lots of

a local copy of data. It is evidently impractical for a cloud

data encryption in outsourced and no additional posts on the

storage service to download the whole data for data validation

symbol block, and the ratio is more secure because we encrypt

due to the expensiveness of communication, especially, for

data to prevent unauthorized third parties to know its contents.

large-size files. Recently, several PDP schemes are proposed

2) Proof of availability of data: We plan a major variation of

to address this issue. In fact, PDP is essentially an interactive

PDP, to provide public validation. Allow people other than the

proof between a CSP and a client because the client makes a

owner for information on the server has proved challenge.

false/true decision for data possession without downloading

Another major concern is the security issue of

data. Existing PDP schemes mainly focus on integrity

dynamic data operations for public audit services. In clouds,

verification issues at untrusted stores in public clouds, but

one of the core design principles is to provide dynamic

these schemes are not suitable for a hybrid cloud environment

scalability for various applications. This means that remotely

since they were originally constructed based on a two-party

stored data might be not only accessed by the clients, but also

interactive proof system. For a hybrid cloud, these schemes

dynamically updated by them, for instance, through block

can only be used in a trivial way: clients must invoke them

operations such as modification, deletion and insertion.

repeatedly to check the integrity of data stored in each single

However, these operations may raise security issues in most of

cloud. This means that clients must know the exact position of

existing schemes, e.g., the forgery of the verification metadata

each data block in outsourced data. Moreover, this process

(called as tags) generated by data owners and the leakage of

will

the user’s secret key. Hence, it is crucial to develop a more

consume

higher

communication

bandwidth

and

computation costs at client sides.

Early audit schemes have focused on static data, in

which the client cannot modify the original data or can only

efficient and secure mechanism for dynamic audit services, in

which a potential adversary’s advantage through dynamic data

operations should be prohibited.

perform a limited set of updates. The DPDP, a scheme that

supports the full range of dynamic updates on the outsourced

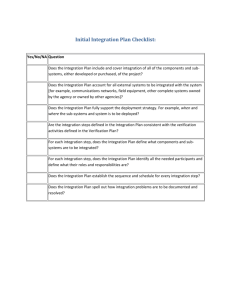

IV. PROPOSED SCHEME ARCHITECTURE AND

TECHNIQUES

data, while providing the same strong guarantees about data

integrity. Ability to perform updates such as insertions,

Although existing techniques offer a publicly

modifications, or deletions, extends the database services and

accessible remote interface for checking and managing the

more complex cloud storage system. Solving these problems

tremendous amount of data, the majority of existing PDP

will help improve the quality of PDP services, which can not

schemes are incapable to satisfy the inherent requirements

from multiple clouds in terms of communication and

is not directly involved in the CPDP scheme in order to reduce

computation costs. To address this problem, we consider a

the complexity of cryptosystem.

multi-cloud storage service as illustrated in Figure 1. In this

a. Fragment Structure and Secure Tags

architecture, we consider that a data storage service involves

To maximize the storage efficiency and audit

four entities: data owner (DO), who has a large amount of data

performance, our audit system introduces a general fragment

to be stored in the cloud; cloud service provider (CSP), who

structure for outsourced storages. An instance for this

provides data storage service and has enough storage space

framework which is used in our approach is shown in Fig. 3:

and computation.

an outsourced file 𝐹 is split into 𝑛 blocks {𝑚1,2, ⋅⋅⋅ ,𝑚𝑛}, and

each block 𝑚𝑖 is split into 𝑠 sectors {𝑚𝑖,1,𝑚𝑖,2, ⋅⋅⋅ ,𝑚𝑖,𝑠}. The

fragment framework consists of 𝑛 block-tag pair (𝑚𝑖, 𝜎𝑖),

where 𝜎𝑖 is a signature tag of a block 𝑚𝑖 generated by some

secrets 𝜏 = (𝜏1, 𝜏2, ⋅⋅⋅ , 𝜏𝑠). We can use such tags and

corresponding data to construct a response in terms of the

TPA’s challenges in the verification protocol, such that this

response can be verified without raw data. If a tag is

enforceable by anyone except the original signer, we call it a

Architecture for Data Integrity

secure tag. Finally, these block-tag pairs are stored in CSP and

resources; third party auditor (TPA), who has capabilities

the encrypted secrets 𝜏 (called as PVP) are in TTP. Although

to manage or monitor the outsourced data under the delegation

this fragment structure is simple and straightforward, but the

of data owner; and authorized applications (AA), who have

file is split into 𝑛 × 𝑠 sectors and each block (𝑠 sectors)

the right to access and manipulate the stored data. Finally,

corresponds to a tag, so that the storage of signature tags can

application users can enjoy various cloud application services

be reduced with the increase of 𝑠. Hence, this structure can

via these authorized applications

reduce the extra storage for tags and improve the audit

Then, by using a verification protocol, the clients can

performance. There exist some schemes for the convergence

issue a challenge for one CSP to check the integrity and

of 𝑠 blocks to generate a secure signature tag. These schemes,

availability of outsourced data with respect to public

built from collision-resistance hash functions (see Section 5)

information stored in TPA. We neither assume that CSP is

and a random oracle model, support the features of scalability,

trust to guarantee the security of the stored data, nor assume

performance and security.

that data owner has the ability to collect the evidence of the

b. Index-Hash Table:

CSP’s fault after errors have been found. To achieve this goal,

In order to support dynamic data operations,

a TPA server is constructed as a core trust base on the cloud

we introduce a simple index-hash table to record the changes

for the sake of security. We assume the TPA is reliable and

of file blocks, as well as generate the hash value of each block

independent through the following functions: to setup and

in the verification process. The structure of our index-hash

maintain the CPDP(Cooperative Provable Data Possession)

table is similar to that of file block allocation table in file

cryptosystem; to generate and store data owner’s public key;

systems. Generally, the index-hash table 𝜒 consists of serial

and to store the public parameters used to execute the

number, block number, version number, and random integer.

verification protocol in the CPDP scheme. Note that the TPA

Note that we must assure all records in the index-hash table

differ from one another to prevent the forgery of data blocks

set of random index-coefficient pairs Q to the organizer; 3) the

and tags. In addition to recording data changes, each record 𝜒𝑖

organizer relays them into each Pi in P according to the exact

in the table is used to generate a unique hash value, which in

position of each data block; 4) each Pi returns its response of

turn is used for the construction of a signature tag 𝜎𝑖 by the

challenge to the organizer; 5) the organizer synthesizes a final

secret key 𝑠𝑘. The relationship between 𝜒𝑖 and 𝜎𝑖 must be

response from these responses and sends it to the verifier.

cryptographically secure, and we make use of it to design our

The above process would guarantee that the verifier

verification protocol. Although the index-hash table may

accesses files without knowing on which CSP or in what

increase the complexity of an audit system, it provides a

geographical locations their files reside.

higher assurance to monitor the behavior of an untrusted CSP,

as well as valuable evidence for computer forensics, due to the

reason that anyone cannot forge the valid 𝜒𝑖 (in TPA) and 𝜎𝑖

(in CSP) without the secret key 𝑠𝑘.

1) Setup Phase: We start with a database D divided into y

blocks, such as: 1 2 , , ,y D = D D _ D . We want to be able to

challenge storage SER t times. We make use of a pseudorandom function (PRF), f, and a pseudo-random permutation

(PRP) g with the following parameters: In our example, l = log

Index-Hash Hierarchies for CPDP Model

y since we use g to exchange index. The output of f is used to

generate the key for g and c = logt (t as a challenge to the

V .REMOTE DATA AUDIT SCHEME

number of SER) we note that using a standard block cipher can

According to the above-mentioned architecture, four

be f and g generated, such as AES. In this case, L = 128. In

different network entities can be identified as follows: the

setup phase, we will use the pseudo-random function f and two

verifier (V), trusted third party (TTP), the organizer (0), and

k -bit master secret keys W and Z. The key W is used to

some cloud service providers (CSP s) in hybrid P = {PihE[l,c]'

generate session permutation keys while Z is used to generate

The organizer is an entity that directly contacts with the

the current challenges

verifier. Moreover it can initiate and organize the verification

process. Often, the organizer is an independent server or a

certain CSP in P. In our scheme, the verification is performed

by a 5-move interactive proof protocol as follows:

1) the organizer initiates the protocol and sends a

commitment to the verifier; 2) the verifier returns a challenge

2) Verification Phase: When the owner wants to obtain a proof of possession from the storage server, the owner first recomputes the $i$-th session key $k_i$ and challenge $c_i$ (as in step 5a) of the setup phase). Hence, apart from the encrypted files, the owner only needs to store the public key $pk$, the private key $sk$, the master keys $W$, $Z$, $K$, and the current index $i$. The owner then sends $k_i$ and $c_i$ to the SER (step 2 of the verification phase of the algorithm). On receiving the owner's message, the server computes the value $z$ and returns it. If $z$ equals the value expected by the owner, the owner concludes that the storage server correctly stores the ciphertext file $M$.

This construction is directly derived from a multi-prover zero-knowledge proof system (MPZKPS), which satisfies the following properties for a given assertion $L$:
a) Completeness: whenever $x \in L$, there exists a strategy for the provers that convinces the verifier that this is the case;
b) Soundness: whenever $x \notin L$, whatever strategy the provers employ, they will not convince the verifier that $x \in L$;
c) Zero-knowledge: no cheating verifier can learn anything other than the veracity of the statement.
According to existing interactive proof system (IPS) research [15], these properties can protect our construction from various attacks, such as data leakage attacks (privacy leakage) and tag forgery attacks (ownership cheating).

3) Completeness property of verification: In our scheme, the completeness property implies the public verifiability property, which allows anyone, not just the client (data owner), to challenge the cloud server for data integrity and data ownership without the need for any secret information. For every available data-tag pair $(F, \sigma) \in TagGen(sk, F)$ and a random challenge $Q = (i, v_i)_{i \in I}$, the verification protocol should complete successfully with probability 1, that is, $\Pr[\langle \mathcal{P}(F, \sigma) \leftrightarrow O \leftrightarrow V \rangle(pk, \psi) = 1] = 1$. In this process, anyone can obtain the owner's public key $pk = (g, h, H_1 = h^{\alpha}, H_2 = h^{\beta})$ and the corresponding file parameter $\psi = (u, \xi^{(1)}, \chi)$ from the TTP to execute the verification protocol; hence this is a publicly verifiable protocol. Moreover, for different owners, the secrets $\alpha$ and $\beta$ hidden in their public keys $pk$ are also different, so a successful verification can only be achieved with the real owner's public key. In addition, the parameter $\psi$ is used to store file-related information, so an owner can employ a unique public key to deal with a large number of outsourced files.

4) Zero-knowledge property of verification: The CSPs must be robust against attempts to gain knowledge by interacting with them. In our construction, we make use of the zero-knowledge property to preserve the privacy of data blocks and signature tags. First, randomness is introduced into the CSPs' responses in order to resist data leakage attacks. That is, a random integer $\lambda_{j,k}$ is introduced into the response $\mu_{j,k}$, i.e., $\mu_{j,k} = \lambda_{j,k} + \sum_{(i, v_i) \in Q_k} v_i \cdot m_{i,j}$. This means that a cheating verifier cannot obtain $m_{i,j}$ from $\mu_{j,k}$, because he does not know the random integer $\lambda_{j,k}$. At the same time, a random integer $\gamma$ is also introduced to randomize the verification tag $\sigma$, i.e., $\sigma' \leftarrow \prod_{P_k \in \mathcal{P}} \sigma'_k \cdot R^{-\ldots}$. Because of this randomness, the tag $\sigma$ reveals nothing to the cheating verifier.

5) Knowledge soundness of verification: For every data-tag pair $(F, \sigma) \notin TagGen(sk, F)$, in order to prove the nonexistence of fraudulent $\mathcal{P}$ and $O$, we require that the scheme satisfy the knowledge soundness property, that is, $\Pr[\langle \mathcal{P}(F, \sigma) \leftrightarrow O \leftrightarrow V \rangle(pk, \psi) = 1] \le \epsilon$, where $\epsilon$ is a negligible error. We make use of $\mathcal{P}$ to construct a knowledge extractor $\mathcal{M}$, which gets the common input $(pk, \psi)$ and rewindable black-box access to the prover $\mathcal{P}$, and then attempts to break the computational Diffie-Hellman (CDH) problem in $\mathbb{G}$: given $G, G_1 = G^a, G_2 = G^b \in_R \mathbb{G}$, output $G^{ab} \in \mathbb{G}$. If fraudulent $\mathcal{P}$ and $O$ could pass verification with non-negligible probability, $\mathcal{M}$ would solve this problem. But this is unacceptable, because the CDH problem is widely regarded as unsolvable in polynomial time.
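The masking step of the zero-knowledge property can be checked numerically. This toy sketch assumes that responses are computed modulo a prime $p$ (the group order is not specified above) and uses a deliberately tiny prime; the function names are hypothetical. Because $\lambda_{j,k}$ is uniform modulo $p$, the map $\lambda \mapsto \mu$ is a bijection, so $\mu_{j,k}$ alone has the same distribution whatever the data $m_{i,j}$ are.

```python
import secrets

Challenge = list[tuple[int, int]]  # Q_k: (block index i, coefficient v_i) pairs


def linear_combination(m: list[list[int]], Q: Challenge, j: int, p: int) -> int:
    """Sum over (i, v_i) in Q_k of v_i * m_{i,j}, reduced mod p."""
    return sum(v * m[i][j] for i, v in Q) % p


def masked_response(m: list[list[int]], Q: Challenge, j: int, lam: int, p: int) -> int:
    """mu_{j,k} = lambda_{j,k} + sum v_i * m_{i,j}  (mod p)."""
    return (lam + linear_combination(m, Q, j, p)) % p


def respond(m: list[list[int]], Q: Challenge, p: int) -> tuple[list[int], list[int]]:
    """One masked response per sector j, each with a fresh random lambda."""
    s = len(m[0])
    lams = [secrets.randbelow(p) for _ in range(s)]
    return [masked_response(m, Q, j, lams[j], p) for j in range(s)], lams


if __name__ == "__main__":
    p = 101  # toy prime, far too small for real use
    m1 = [[3, 14, 15], [92, 65, 35], [89, 79, 32]]  # blocks m_i with s = 3 sectors
    m2 = [[0, 0, 0], [1, 2, 3], [4, 5, 6]]
    Q = [(0, 7), (2, 11)]
    mus, lams = respond(m1, Q, p)
    # Whoever knows lambda can unmask the linear combination ...
    print(all((mu - lam) % p == linear_combination(m1, Q, j, p)
              for j, (mu, lam) in enumerate(zip(mus, lams))))
    # ... but over all lambdas, mu takes every value in Z_p for either data set,
    # so mu alone does not reveal which data produced it.
    print(sorted(masked_response(m1, Q, 0, lam, p) for lam in range(p)) == list(range(p)))
    print(sorted(masked_response(m2, Q, 0, lam, p) for lam in range(p)) == list(range(p)))
```

The sketch covers only the additive masking of $\mu_{j,k}$; how the verifier still checks the masked response (and the randomization of $\sigma$ by $\gamma$) depends on the pairing-based equations of the full scheme and is not modeled here.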

VI. ACHIEVING ROBUST DATA

Achieving robustness requires encoding the data over groups of symbols (constraint groups) while hiding the association between symbols and constraint groups (i.e., the server should not know which symbols belong to the same constraint group). When dynamic updates are performed over file data, the parity of the affected constraint groups must also be updated, which requires knowledge of the data and parity symbols in those constraint groups.

However, the user cannot simply retrieve only the symbols of the affected constraint groups, as that would reveal the contents of the corresponding constraint groups and break robustness. Moreover, the client cannot simply update only the parity symbols in the affected constraint groups, as that may allow the server to infer which parity symbols are in the same constraint group by comparing the new parity with the old parity.

We use two independent logical representations of the file for different purposes. For the purpose of file updating (during the Update phase), the file is seen as an ordered collection of blocks; update operations occur at the block level. This is also the representation used for checking data possession (during the Challenge phase), as each block has one corresponding verification tag. For the purpose of encoding for robustness, the file is seen as a collection of symbols, which are grouped into constraint groups, and each constraint group is encoded independently. For each file block, there is a corresponding verification tag that must be stored at the server. Thus, larger file blocks result in a smaller additional server storage overhead due to verification tags. On the other hand, efficient encoding and decoding require the symbols to come from a small field. As a result, one file block usually contains multiple symbols. Each file update operation, which is performed at the block level, results in several operations applied to the symbols in that block (for example, when a data block is modified, all the symbols in that block and the corresponding parity symbols must be modified).
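The two file representations above can be illustrated with a toy model. In this sketch, which is an assumption rather than the scheme's actual code, symbols are single bytes, a secret keyed permutation (positions sorted by HMAC-SHA256 under the client's key) assigns symbols to constraint groups, and one XOR parity symbol per group stands in for whatever erasure code a real deployment would use. All function names are hypothetical.

```python
import hashlib
import hmac
from functools import reduce


def keyed_permutation(key: bytes, n: int) -> list[int]:
    """Secret pseudorandom permutation of range(n): sort positions by HMAC-SHA256."""
    return sorted(range(n), key=lambda pos: hmac.new(key, pos.to_bytes(8, "big"), hashlib.sha256).digest())


def constraint_groups(key: bytes, n_symbols: int, group_size: int) -> list[list[int]]:
    """Partition symbol positions into constraint groups; without the key,
    the server cannot tell which positions share a group."""
    perm = keyed_permutation(key, n_symbols)
    return [perm[g:g + group_size] for g in range(0, n_symbols, group_size)]


def xor_of(symbols: bytes, positions) -> int:
    return reduce(lambda acc, pos: acc ^ symbols[pos], positions, 0)


def encode(key: bytes, symbols: bytes, group_size: int):
    """One XOR parity symbol per constraint group (a stand-in erasure code)."""
    groups = constraint_groups(key, len(symbols), group_size)
    return groups, [xor_of(symbols, g) for g in groups]


def recover(symbols: bytes, group: list[int], parity: int, lost: int) -> int:
    """Rebuild one erased symbol from its group's parity and the other members."""
    return parity ^ xor_of(symbols, [pos for pos in group if pos != lost])


def groups_touched_by_block(groups: list[list[int]], block: int, symbols_per_block: int) -> list[int]:
    """Constraint groups whose parity must change when one file block is updated."""
    in_block = set(range(block * symbols_per_block, (block + 1) * symbols_per_block))
    return [gi for gi, g in enumerate(groups) if in_block.intersection(g)]


if __name__ == "__main__":
    key = b"owner-secret"            # hypothetical client key
    data = bytes(range(64))          # 8 blocks x 8 one-byte symbols
    groups, parity = encode(key, data, group_size=4)
    lost = groups[0][2]
    print(recover(data, groups[0], parity[0], lost) == data[lost])  # True
    print(groups_touched_by_block(groups, block=3, symbols_per_block=8))
```

A single block update touches several scattered constraint groups, which is exactly the situation described above. This sketch does not address the leakage problem: fetching or rewriting only those groups' parities would reveal the grouping to the server.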

VII. CONCLUSIONS

In this paper, we presented the construction of an efficient Remote Data Audit scheme for distributed cloud storage. Based on homomorphic verifiable responses and a hash index hierarchy, the scheme supports dynamic scalability on multiple storage servers. We also showed that our scheme provides all the security properties required by a zero-knowledge interactive proof system, so that it can resist various attacks even when deployed as a public audit service in clouds. The scheme provides robustness and, at the same time, supports dynamic data updates while requiring small, constant client storage. The main challenge that had to be overcome was reducing the client-server communication overhead during updates.

Furthermore, we optimized the probabilistic query and periodic verification to improve audit performance. Our experiments clearly demonstrated that our approaches introduce only a small amount of computation and communication overhead. Therefore, our solution can be treated as a new candidate for data integrity verification in outsourced data storage systems. As part of future work, we will explore more effective CPDP constructions. First, our experiments showed that the performance of the CPDP scheme, especially for large files, is affected by the bilinear mapping operations because of their high complexity. To solve this problem, RSA-based constructions may be a better choice, but this is still a challenging task because existing RSA-based schemes have too many restrictions on performance and security [2]. Finally, generating tags whose length is independent of the size of the data blocks is still a challenging problem. We will explore this issue to support variable-length block verification.

REFERENCES

[1] B. Sotomayor, R. S. Montero, I. M. Llorente, and I. T. Foster,

“Virtual infrastructure management in private and hybrid clouds,”

IEEE Internet Computing, vol. 13, no. 5, pp. 14–22, 2009.

[2] G. Ateniese, R. C. Burns, R. Curtmola, J. Herring, L. Kissner, Z.

N. J. Peterson, and D. X. Song, “Provable data possession at

untrusted stores,” in ACM Conference on Computer and

Communications Security, P. Ning, S. D. C. di Vimercati, and P. F.

Syverson, Eds. ACM, 2007.

[3] A. Juels and B. S. Kaliski Jr., “PORs: proofs of retrievability for large

files,” in ACM Conference on Computer and Communications

Security, P. Ning, S. D. C. di Vimercati, and P. F. Syverson, Eds.

ACM, 2007.

[4] G. Ateniese, R. D. Pietro, L. V. Mancini, and G. Tsudik,

“Scalable and efficient provable data possession,” in Proceedings of

the 4th international conference on Security and privacy in

communication networks, Secure Comm, 2008.

[5] C. Erway, A. Küpçü, C. Papamanthou, and R. Tamassia,

“Dynamic provable data possession,” in ACM Conference on

Computer and Communications Security, E. Al-Shaer, S. Jha, and A.

D. Keromytis, Eds. ACM, 2009.

[6] H. Shacham and B. Waters, “Compact proofs of retrievability,” in

ASIACRYPT, ser. Lecture Notes in Computer Science, J. Pieprzyk,

Ed., vol. 5350. Springer, 2008.

[7] Q. Wang, C. Wang, J. Li, K. Ren, and W. Lou, “Enabling public

verifiability and data dynamics for storage security in cloud

computing,” in ESORICS, ser. Lecture Notes in Computer Science,

M. Backes and P. Ning, Eds., vol. 5789. Springer, 2009.

[8] Y. Zhu, H. Wang, Z. Hu, G.-J. Ahn, H. Hu, and S. S. Yau,

“Dynamic audit services for integrity verification of outsourced

storages in clouds,” in SAC, W. C. Chu, W. E. Wong, M. J. Palakal,

and C.-C. Hung, Eds. ACM, 2011.

[9] K. D. Bowers, A. Juels, and A. Oprea, “Hail: a high-availability

and integrity layer for cloud storage,” in ACM Conference on

Computer and Communications Security, E. Al-Shaer, S. Jha, and A.

D. Keromytis, Eds. ACM, 2009.

[10] Y. Dodis, S. P. Vadhan, and D. Wichs, “Proofs of retrievability

via hardness amplification,” in TCC, ser. Lecture Notes in Computer

Science, O. Reingold, Ed., vol. 5444. Springer, 2009.

[11] L. Fortnow, J. Rompel, and M. Sipser, “On the power of

multiprover interactive protocols,” in Theoretical Computer Science,

1988.

[12] Y. Zhu, H. Hu, G.-J. Ahn, Y. Han, and S. Chen, “Collaborative

integrity verification in hybrid clouds,” in Proc. 7th IEEE International Conference on Collaborative Computing:

Networking, Applications and Worksharing (CollaborateCom),

Orlando, Florida, USA, October 15-18, 2011.

[13] M. Armbrust, A. Fox, R. Griffith, A. D. Joseph, R. H. Katz, A.

Konwinski, G. Lee, D. A. Patterson, A. Rabkin, I. Stoica, and M.

Zaharia, “Above the clouds: A Berkeley view of cloud computing,”

EECS Department, University of California, Berkeley, Tech. Rep.,

Feb 2009.

[14] D. Boneh and M. Franklin, “Identity-based encryption from the

Weil pairing,” in Advances in Cryptology (CRYPTO’2001), vol. 2139

of LNCS, 2001, pp. 213–229.

[15] O. Goldreich, Foundations of Cryptography: Basic Tools.

Cambridge University Press, 2001.