Enterprise Use Case - QI


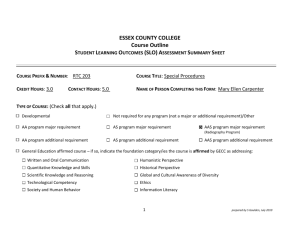

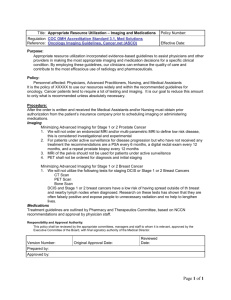

QI-Bench: Informatics Services for Quantitative Imaging EUC Rev 1.0

QI-Bench: Informatics Services for Characterizing Performance of Quantitative Medical Imaging
Enterprise Use Case
August 16, 2011
Rev 1.0

Required Approvals:
Author of this Revision: Andrew J. Buckler
Principal Investigator: Andrew J. Buckler
Print Name / Signature / Date

Document Revisions:

| Revision | Revised By | Reason for Update | Date |
|---|---|---|---|
| 0.1 | Andrew J. Buckler | Initial draft | December 17, 2010 |
| 0.2 | Andrew J. Buckler | Incorporate challenge concept and initial feedback on other points | December 23, 2010 |
| 0.3 | Andrew J. Buckler | Refinement and resolution of feedback | January 1, 2011 |
| 0.4 | Andrew J. Buckler | Incorporated relationship diagrams | January 6, 2011 |
| 0.5 | Andrew J. Buckler | More feedback | January 14, 2011 |
| 0.6 | Andrew J. Buckler | Cull out SUC | June 15, 2011 |
| 1.0 | Andrew J. Buckler | Update to open Phase 4 | August 16, 2011 |

Table of Contents

1. INTRODUCTION
1.1. PURPOSE & SCOPE
1.2. INVESTIGATORS, COLLABORATORS, AND ACKNOWLEDGEMENTS
1.3. DEFINITIONS
2. SCENARIO / OVERVIEW
3. USE CASES
3.1. CREATE AND MANAGE SEMANTIC INFRASTRUCTURE AND LINKED DATA ARCHIVES
3.2. CREATE AND MANAGE PHYSICAL AND DIGITAL REFERENCE OBJECTS
3.3. CORE ACTIVITIES FOR BIOMARKER DEVELOPMENT
3.4. COLLABORATIVE ACTIVITIES TO STANDARDIZE AND/OR OPTIMIZE THE BIOMARKER
3.5. CONSORTIUM ESTABLISHES CLINICAL UTILITY / EFFICACY OF PUTATIVE BIOMARKER
3.6. COMMERCIAL SPONSOR PREPARES DEVICE / TEST FOR MARKET
4. BUSINESS LOGIC AND ARCHITECTURE MODELING
4.1. VALIDATION AND QUALIFICATION AS CLINICAL RESEARCH
4.2. CLINICAL RESEARCH BAM
4.3. ARCHITECTURAL ELEMENTS
5. DOMAIN ANALYSIS AND SEMANTIC INFRASTRUCTURE
5.1. INPUT SPECIFICATIONS: QIBA PROFILES
5.2. INFORMATION MODELS AND ONTOLOGIES
6. REFERENCES

1. Introduction

1.1. Purpose & Scope

Quantitative results from imaging methods have the potential to be used as biomarkers in both routine clinical care and in clinical trials, in accordance with the widely accepted NIH Consensus Conference definition of a biomarker.1 In particular, when used as biomarkers in therapeutic trials, imaging methods have the potential to speed the development of new products to improve patient care.2,3

Imaging biomarkers are developed for use in the clinical care of patients and in the conduct of clinical trials of therapy. In clinical practice, imaging biomarkers are intended to (a) detect and characterize disease, before, during or after a course of therapy, and (b) predict the course of disease, with or without therapy. In clinical research, imaging biomarkers are intended to be used in defining endpoints of clinical trials. A precondition for the adoption of the biomarker for use in either setting is the demonstration of the ability to standardize the biomarker across imaging devices and clinical centers and the assessment of the biomarker’s safety and efficacy. Although qualitative biomarkers can be useful, the medical community currently emphasizes the need for objective, ideally quantitative, biomarkers. “Biomarker” refers to the measurement derived from an imaging method, and “device” or “test” refers to the hardware/software used to generate the image and extract the measurement.

Regulatory approval for clinical use4 and regulatory qualification for research use depend on demonstrating proof of performance relative to the intended application of the biomarker:
• In a defined patient population,
• For a specific biological phenomenon associated with a known disease state,
• With evidence in large patient populations, and
• Externally validated.

This document describes public resources for methods and services that may be used for the assessment of imaging biomarkers that are needed to advance the field.
It sets out the workflows that are derived from the problem space and the goal for these informatics services as described in the Basic Story Board.

1.2. Investigators, Collaborators, and Acknowledgements

Buckler Biomedical Associates LLC
Kitware, Inc.

In collaboration with:
Information Technology Laboratory (ITL) of the National Institute of Standards and Technology (NIST)
Quantitative Imaging Biomarker Alliance (QIBA)
Imaging Workspace of caBIG

It is also important to acknowledge the many specific individuals who have contributed to the development of these ideas. Some of the most significant contributors include Dan Sullivan, Constantine Gatsonis, Dave Raunig, Georgia Tourassi, Howard Higley, Joe Chen, Rich Wahl, Richard Frank, David Mozley, Larry Schwartz, Jim Mulshine, Nick Petrick, Ying Tang, Mia Levy, Bob Schwanke, and many others <if you do not see your name, please do not hesitate to raise the issue as it is our express intent to have this viewed as an inclusive team effort and certainly not only the work of the direct investigators.>

1.3. Definitions

The following are commonly used terms that may be of assistance to the reader.
| Term | Definition |
|---|---|
| BAM | Business Architecture Model |
| BRIDG | Biomedical Research Integrated Domain Group |
| BSB | Basic Story Board |
| caB2B | Cancer Bench-to-Bedside |
| caBIG | Cancer Biomedical Informatics Grid |
| CAD | Computer-Aided Diagnosis |
| caDSR | Cancer Data Standards Registry and Repository |
| CDSS | Clinical Decision Support Systems |
| CD | Compact Disc |
| CDISC | Clinical Data Interchange Standards Consortium |
| CBER | Center for Biologics Evaluation and Research |
| CDER | Center for Drug Evaluation and Research |
| CIOMS | Council for International Organizations of Medical Sciences |
| CIRB | Central institutional review board |
| Clinical management | The care of individual patients, whether they be enrolled in clinical trial(s) or not |
| Clinical trial | A regulatory directed activity to prove a testable hypothesis for a determined purpose |
| CT | Computed Tomography |
| DAM | Domain Analysis Model |
| DICOM | Digital Imaging and Communications in Medicine |
| DNA | Deoxyribonucleic Acid |
| DSMB | Data Safety Monitoring Board |
| ECCF | Enterprise Conformance and Compliance Framework |
| eCRF | Electronic Case Report Form |
| EKG | Electrocardiogram |
| EMR | Electronic Medical Records |
| EUC | Enterprise Use Case |
| EVS | Enterprise Vocabulary Services |
| FDA | Food and Drug Administration |
| FDG | Fluorodeoxyglucose |
| HL7 | Health Level Seven |
| IBC | Institutional Biosafety Committee |
| IBE | Institute of Biological Engineering |
| IRB | Institutional Review Board |
| IOTF | Interagency Oncology Task Force |
| IQ | Image Query |
| IVD | In-vitro diagnosis |
| MHRA | Medicines and Healthcare Products Regulatory Agency |
| MRI | Magnetic Resonance Imaging |
| NCI | National Cancer Institute |
| NIBIB | National Institute of Biomedical Imaging and Bioengineering |
| NIH | National Institutes of Health |
| NLM | National Library of Medicine |
| Nuisance variable | A random variable that decreases the statistical power while adding no information of itself |
| Observation | The act of recognizing and noting a fact or occurrence |
| PACS | Picture Archiving and Communication System |
| PET | Positron Emission Tomography |
| Pharma | Pharmaceutical companies |
| Phenotype | The observable physical or biochemical characteristics of an organism, as determined by both genetic makeup and environmental influences.5 |
| PI | Principal Investigator |
| PRO | Patient Reported Outcomes |
| QA | Quality Assurance |
| QC | Quality Control |
| RMA | Robust Multi-array Average |
| RNA | Ribonucleic Acid |
| SDTM | Study Data Tabulation Model |
| SEP | Surrogate End Point |
| SNOMED CT | Systematized Nomenclature of Medicine – Clinical Terms |
| SOA | Service-Oriented Architecture |
| Surrogate endpoint | In clinical trials, a measure of effect of a certain treatment that correlates with a real clinical endpoint but does not necessarily have a guaranteed relationship.6 |
| Tx | Treatment |
| UMLS | Unified Medical Language System |
| US | Ultrasound |
| VCDE | Vocabularies & Common Data Elements |
| VEGF | Vascular endothelial growth factor |
| WHO | World Health Organization |
| XIP | eXtensible Imaging Platform |
| XML | Extensible Markup Language |

2. Scenario / Overview

Medical imaging research often involves interdisciplinary teams, each performing a separate task, from acquiring datasets to analyzing the processing results. The number and size of the datasets continue to increase every year due to continued advancements in technology. We support federated imaging archives with a variety of informatics services (software tools) that facilitate the development and evaluation of new candidate products.

This public resource might be accessed by developers at various stages of their development cycle. Some may need to access the scans and data at the outset of their development process, whereas other innovators might have access to internal resources and need to access this resource later in their cycle to document performance.

A core concept is the “Reference Data Set.” This is intended to include both images and non-imaging data that are useful in either the development or performance assessment of a given imaging biomarker and/or specific tests for that biomarker.
A given biomarker must have at least one test that is understood to measure it, but in general there may be many tests that are perceived to measure, or in fact do measure, the biomarker at various levels of proficiency. The utility of a biomarker is defined in terms of its practical value in regulatory and/or clinical decision making, and the performance of a test for that biomarker is defined in terms of the accuracy with which it measures the biomarker. This gives rise to a high-level representation of workflows defined for this area (Fig. 1).

Figure 1: Use Case Model for Developing Quantitative Imaging Biomarkers and Tests

3. Use Cases

The following sections describe the principal workflows that have been identified. They are presented in a sequence that draws attention to the fact that each category of workflows builds on others. As such, the sequence forms a rough chronology of what users do with a given biomarker over time, and may also be useful in guiding the design so that it can be implemented and staged efficiently (Fig. 2).

Figure 2: Relationship between workflow categories that illustrates progressive nature of the activities they describe and possibly also suggesting means for efficient implementation and staging.

3.1. Create and Manage Semantic Infrastructure and Linked Data Archives

Scientific research in the medical imaging field involves interdisciplinary teams, in general performing separate but related tasks from acquiring datasets to analyzing the processing results. Collaborative activity requires that these tasks be defined and implemented with sophisticated infrastructure that ensures interoperability and security. The number and size of the datasets continue to increase every year due to advancements in the field.
In order to streamline the management of images coming from clinical scanners, hospitals rely on picture archiving and communication systems (PACS). In general, however, research teams can rarely access PACS located in hospitals due to security restrictions and confidentiality agreements. Furthermore, PACS have become increasingly complex and often do not fit in the scientific research pipeline.

The workflows associated with this enterprise use case utilize a “Linked Image Archive” for long term storage of images and clinical data and a “Reference Data Set Manager” to allow creation and use of working sets of data used for specific purposes according to specified experimental runs or analyses. As such, the Reference Data Set is a selected subset of what is available in the Linked Data Archive (Fig. 3).

Figure 3: Use Case Model for Create and Manage Reference Data Set (architectural view)

As is the case with the categories as a whole, individual workflows are generally understood as building on each other (Fig. 4).

Figure 4: Workflows are presented to highlight how they build on each other.

3.2. Create and Manage Physical and Digital Reference Objects

The first and critical building block in the successful implementation of quantitative imaging biomarkers is to establish the quality of the physical measurements involved in the process. The technical quality of imaging biomarkers is assessed with respect to the accuracy and precision of the related physical measurement(s). The next stage is to establish clinical utility (e.g., by sensitivity and specificity) in a defined clinical context of use. Consequently, NIST-traceable materials and objects are required to meet the measurement needs, guidelines and benchmarks.
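As an illustration of the quantities named above, the following is a minimal sketch, not part of the QI-Bench specification, of how accuracy (expressed as bias against a known reference value), precision (expressed as the standard deviation of repeated measurements), sensitivity, and specificity might be computed. The function names and all sample values are hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def bias_and_precision(measurements, reference_value):
    """Return (bias, precision) for repeated measurements of a reference object.

    bias      = mean measurement minus the known reference value (accuracy)
    precision = sample standard deviation of the repeated measurements
    """
    bias = mean(measurements) - reference_value
    precision = stdev(measurements)
    return bias, precision


def sensitivity_specificity(truth, predicted):
    """Return (sensitivity, specificity) from paired boolean labels."""
    pairs = list(zip(truth, predicted))
    tp = sum(t and p for t, p in pairs)              # true positives
    tn = sum((not t) and (not p) for t, p in pairs)  # true negatives
    fn = sum(t and (not p) for t, p in pairs)        # false negatives
    fp = sum((not t) and p for t, p in pairs)        # false positives
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical repeated volume measurements (mm^3) of a phantom object
# whose reference volume is assumed to be 500 mm^3.
phantom_volumes = [498.0, 503.0, 506.0, 497.0]
bias, precision = bias_and_precision(phantom_volumes, reference_value=500.0)

# Hypothetical ground truth and test results for eight clinical cases.
truth = [True, True, True, False, False, False, False, True]
predicted = [True, True, False, False, False, True, False, True]
sens, spec = sensitivity_specificity(truth, predicted)

print(f"bias={bias:.2f} mm^3, precision={precision:.2f} mm^3")
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

The phantom measurements stand in for the technical proficiency stage, and the labeled cases stand in for a clinical Reference Data Set; a real study would also report confidence intervals for each estimate.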
Appropriate reference objects (phantoms) for the technical proficiency studies with respect to accuracy and precision, and well-curated and characterized clinical Reference Data Sets with respect to sensitivity and specificity, must be explicitly identified.

Individual workflows are generally understood as building on each other (Fig. 5).

Figure 5: Workflows are presented to highlight how they build on each other.

3.3. Core Activities for Biomarker Development

In general, biomarker development is the activity of finding and utilizing signatures for clinically relevant hallmarks with known/attractive bias and variance, e.g., signatures indicating apoptosis, reduction, metabolism, proliferation, angiogenesis or other processes evident in ex-vivo tissue imaging that may cascade to the point where they affect organ function and structure. It also includes validating phenotypes that may be measured with a known/attractive confidence interval. Such image-derived metrics may involve the extraction of lesions from normal anatomical background and the subsequent analysis of this extracted region over time, in order to yield a quantitative measure of some anatomic, physiologic or pharmacokinetic characteristic. Computational methods that inform these analyses are being developed by users in the fields of quantitative imaging, computer-aided detection (CADe) and computer-aided diagnosis (CADx).7,8 They may also be obtained using quantitative outputs, such as those derived from molecular imaging.

Individual workflows are generally understood as building on each other (Fig. 6).

Figure 6: Workflows are presented to highlight how they build on each other.

3.4. Collaborative Activities to Standardize and/or Optimize the Biomarker

The first and critical building block in the successful implementation of quantitative imaging biomarkers is to establish the quality of the physical measurements involved in the process.
The technical quality of imaging biomarkers is assessed with respect to the accuracy and reproducibility of the related physical measurement(s). Consequently, a well thought-out testing protocol must be developed so that, when carefully executed, it can ensure that the technical quality of the physical measurements involved in deriving the candidate biomarker is adequate. The overarching goal is to develop a generalizable approach for technical proficiency testing which can be adapted to meet the specific needs for a diverse range of imaging biomarkers (e.g., anatomic, functional, as well as combinations).

Guidelines of “good practice” to address the following issues are needed: (i) composition of the development and test data sets, (ii) data sampling schemes, (iii) final evaluation metrics such as accuracy as well as ROC and FROC metrics for algorithms that extend to detection and localization. With development/testing protocols in place, the user would be able to report the estimated accuracy and reproducibility of their algorithms on phantom data by specifying the protocol they have used. Furthermore, they would be able to demonstrate which algorithmic implementations produce the most robust and unbiased results (i.e., less dependent on the development/testing protocol). The framework we propose must be receptive to future modifications by adding new development/testing protocols based on up-to-date discoveries.

Inter-reader variation indicates difference in training and/or proficiency of readers. Intra-reader differences indicate differences from difficulty of cases. To show the clinical performance of an imaging test, the sponsor generally needs to provide performance data on a properly-sized validated set that represents a true patient population on which the test will be used.
For most novel devices or imaging agents, this is the pivotal clinical study that will establish whether performance is adequate.

In this section, we describe workflows that start with a developed biomarker and seek to refine it by organized group activities of various kinds. These activities are facilitated by deployment of the Biomarker Evaluation Framework within and across centers as a means of supporting the interaction between investigators and to support a disciplined process of accumulating a body of evidence that will ultimately be capable of being used for regulatory filings.

By way of example, a typical scenario demonstrating how the Reference Data Set Manager is used involves three investigators working together to refine a biomarker and the tests to measure it: Alice, who is responsible for acquiring images for a clinical study; Martin, who manages an image processing laboratory responsible for analyzing the images acquired by Alice; and Steve, a statistician located at a different institution.

First, Alice receives volumetric images from her clinical collaborators; she logs into the Reference Data Set Manager and creates the proper Reference Data Sets. She uses the web interface to upload the datasets into the system. The metadata are automatically extracted from the datasets (DICOM or other well known scientific file formats). She then adds more information about each dataset, such as demographic and clinical information, and changes the Reference Data Set’s policies to make it available to Martin.

Martin is instantly notified that new datasets are available in the system and are ready to be processed. Martin logs in and starts visualizing the datasets online. He visualizes each dataset as slices and also uses more complex rendering techniques to assess the quality of the acquisition. As he browses each dataset, Martin selects a subset of datasets of interest and puts them in the electronic cart.
At the end of the session, he downloads the datasets in his cart in bulk and gives them to his software engineers to train the different algorithms. As soon as the algorithms are validated on the training datasets, Martin uploads the algorithms, selects the remaining testing datasets and applies the Processing Pipeline to the full Reference Data Set using the Batch Analysis Service. The pipeline is automatically distributed to all the available machines in the laboratory, decreasing the computation time by several orders of magnitude. The datasets and reports generated by the Processing Pipeline are automatically uploaded back into the system. During this time, Martin can monitor the overall progress of the processing via his web browser.

When the processing is done, Martin gives access to Steve so that he can validate the results statistically. Even though he is located at a different institution, Steve can access and visualize the results, make comments and upload his statistical analysis into the system.

Individual workflows are generally understood as building on each other (Fig. 7).

Figure 7: Workflows are presented to highlight how they build on each other.

3.5. Consortium Establishes Clinical Utility / Efficacy of Putative Biomarker

Biomarkers are useful only when accompanied by objective evidence regarding the biomarkers’ relationships to health status. Imaging biomarkers are usually used in concert with other types of biomarkers and with clinical endpoints (such as patient reported outcomes (PRO) or survival). Imaging and other biomarkers are often essential to the qualification of each other.

The following figure expands on Figure 17 and specializes workflow “Team Optimizes Biomarker Using One or More Tests” as previously elaborated to build statistical power regarding the clinical utility and/or efficacy of a biomarker.
Figure 8: Use Case Model to Establish Clinical Utility / Efficacy of a Putative Biomarker

Individual workflows are generally understood as building on each other (Fig. 9).

Figure 9: Workflows are presented to highlight how they build on each other.

3.6. Commercial Sponsor Prepares Device / Test for Market

Individual workflows are generally understood as building on each other (Fig. 10).

Figure 10: Workflows are presented to highlight how they build on each other.

4. Business Logic and Architecture Modeling

4.1. Validation and Qualification as Clinical Research

Validation and qualification measurements are made to ensure that the various readouts used are understood in terms of their quality, validity, and integrity:
• Investigate both bias and variance of both readers and algorithm-assisted readers in static measurement of the biomarker in patient datasets with a set of reference measurements
  o Include experiments to assess minimum detectable change
• Investigate the scanner-dependent error, bias, and variance of readers and algorithm-assisted readers
  o Use equipment from several manufacturers at multiple clinical sites, collect scans of phantom as well as clinical data, and assess variability due to each step of the chain using test-retest studies
• Investigate proposed alternative methods or algorithms to produce comparable values for <fill in imaging biomarker>
  o Develop useful quantitative approaches to post-processing, analysis, and interpretation that minimize variability and bias
  o Use both imaging and sensor metadata to assess mean value and propagate confidence interval
• Tests and evaluations of surrogacy (using outcomes data)

Workflows:
• Create and manage semantic infrastructure and linked data archives
  o Define, extend, and disseminate ontologies, vocabularies, and templates
  o Install and configure linked data archive systems
  o Create and manage user accounts, roles, and permissions
  o Query and retrieve data from linked data archive
• Create and manage physical and digital reference objects
  o Develop physical and/or digital phantom(s)
  o Import data from experimental cohort to form reference data set
  o Create ground truth annotations and/or manual seed points in reference data set
• Core activities for marker development
  o Set up an experimental run
  o Execute an experimental run
  o Analyze an experimental run
• Collaborative activities to standardize and/or optimize the marker
  o Validate marker in single center or otherwise limited conditions
  o Team optimizes biomarker using one or more tests
  o Support “open science” publication model
• Consortium establishes clinical utility / efficacy of putative biomarker
  o Measure correlation of imaging biomarkers with clinical endpoints
  o Comparative evaluation vs. gold standards or otherwise accepted markers
  o Formal registration of data for qualification
• Commercial sponsor prepares device / test for market
  o Organizations issue “challenge problems” to spur innovation
  o Compliance / proficiency testing of candidate implementations
  o Formal registration of data for approval or clearance

4.2. Clinical Research BAM

Clinical research is defined as: (1) Patient-oriented research, i.e., research conducted with human subjects (or on material of human origin such as tissues, specimens and cognitive phenomena) for which an investigator (or colleague) directly interacts with human subjects. (Excluded from the definition of patient-oriented research are in vitro studies that utilize human tissues that cannot be linked to a living individual.)
Patient-oriented research includes: (a) mechanisms of human disease, (b) therapeutic interventions, (c) clinical trials, and (d) development of new technologies; (2) Epidemiologic and behavioral studies; or (3) Outcomes research and health services research.

Multi-institutional trials will have the following business modes:
• plan study
  o end of protocol planning - approvals are done, ready to be activated, available for sites to open the study
• initiate study
  o end of setup - sites have done what they need to do to open the study for enrollment
• conduct study
  o end of conduct - no more data are being collected on the study subjects
• reporting and analysis (this goes across the first three business modes)
  o planning reporting - IRBs
  o conduct reporting - AEs
  o analysis reporting
  o end of analysis - initial results have been published, and other publishing is ongoing for years

4.3. Architectural Elements

There are a number of so-called “architectural elements” that may be described to help with, or otherwise play a role in, these activities. For example, a “Reference Data Set Manager” may be defined to manage Reference Data Sets. Using this example element, the Reference Data Set Manager would be defined so as to be specifically tuned for medical and scientific datasets and to provide a flexible data management facility, a search engine, and an online image viewer. Continuing the example, the Reference Data Set Manager should enable users to run a set of extensible image processing algorithms on selected datasets from the web and to add new algorithms, facilitating the dissemination of users' work to different research partners.
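To make the example element more concrete, the following is a minimal in-memory sketch of the roles described above: data management with access policies, a metadata search, and an extensible registry of processing algorithms. It is an illustration under stated assumptions, not the QI-Bench design; every class, method, and dataset name here is hypothetical, and the online image viewer is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Dataset:
    """One image dataset with its extracted or user-supplied metadata."""
    name: str
    metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class ReferenceDataSet:
    """A working set of datasets with a simple access policy."""
    name: str
    owner: str
    datasets: List[Dataset] = field(default_factory=list)
    readers: Set[str] = field(default_factory=set)  # users granted access

    def can_read(self, user: str) -> bool:
        return user == self.owner or user in self.readers


class ReferenceDataSetManager:
    """Hypothetical stand-in for the data management, search, and processing roles."""

    def __init__(self) -> None:
        self._sets: Dict[str, ReferenceDataSet] = {}
        self._algorithms: Dict[str, Callable[[Dataset], float]] = {}

    def create(self, name: str, owner: str) -> ReferenceDataSet:
        rds = ReferenceDataSet(name=name, owner=owner)
        self._sets[name] = rds
        return rds

    def grant_access(self, set_name: str, requester: str, user: str) -> None:
        rds = self._sets[set_name]
        if requester != rds.owner:
            raise PermissionError("only the owner may change the access policy")
        rds.readers.add(user)

    def search(self, user: str, key: str, value: str) -> List[Dataset]:
        """Return datasets visible to `user` whose metadata matches key=value."""
        return [d for rds in self._sets.values() if rds.can_read(user)
                for d in rds.datasets if d.metadata.get(key) == value]

    def register_algorithm(self, name: str, func: Callable[[Dataset], float]) -> None:
        """Add a new processing algorithm so other users can run it."""
        self._algorithms[name] = func

    def run(self, user: str, set_name: str, algorithm: str) -> Dict[str, float]:
        """Apply a registered algorithm to every dataset in a Reference Data Set."""
        rds = self._sets[set_name]
        if not rds.can_read(user):
            raise PermissionError(f"{user} may not read {set_name}")
        func = self._algorithms[algorithm]
        return {d.name: func(d) for d in rds.datasets}


# Usage: an owner shares a set, and a collaborator runs an algorithm on it.
manager = ReferenceDataSetManager()
study = manager.create("lung-ct-study", owner="alice")
study.datasets.append(Dataset("scan-001", {"modality": "CT", "slices": "120"}))
study.datasets.append(Dataset("scan-002", {"modality": "MR", "slices": "80"}))
manager.grant_access("lung-ct-study", requester="alice", user="martin")
manager.register_algorithm("slice_count", lambda d: float(d.metadata["slices"]))
results = manager.run("martin", "lung-ct-study", "slice_count")
ct_scans = manager.search("martin", "modality", "CT")
```

Keeping the algorithm registry separate from the data sets mirrors the stated goal that users can add new algorithms without changing how data is stored or shared.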
More comprehensively, Figure 11 identifies a set of platform-independent architectural elements that will be described and used in developing workflows associated with this enterprise use case:

Figure 11: Platform Independent Architectural Elements and their Relationships

Summarizing, the set of platform-independent architectural elements named in SUC:
• Clinical Systems:
  o Image Viewer
  o Image Annotation Tool
  o Clinical Decision Support System (CDSS)
  o Clinical Data Management System
• Research Methods:
  o (Image) Processing Algorithms
  o Statistical Methods
  o Multi-scale Analysis Application
• Linked Data Archive:
  o Image Archive
  o Image Annotation Repository
  o Clinical Data Repository
• Shared Semantics:
  o Annotation template
  o Common Data Elements
  o Ontology
• Analysis Technique/Biomarker Evaluation Framework:
  o Batch Analysis Service
  o Reference Data Set Manager
  o Profile Editor / Server

5. Domain Analysis and Semantic Infrastructure

The archives and informatics services that operate on them have the potential to facilitate efficient and collective efforts to gather and analyze validation and qualification data on imaging biomarkers useable by regulatory bodies. Working together makes the process more robust than if individual stakeholders were to pursue qualification unilaterally. Once a quantitative imaging biomarker has been accepted by the community, including the national regulatory agencies, it may then be utilized without the need for repeated data collection for new drug applications (NDAs) by pharmaceutical companies and in device clearance or approval applications by imaging device manufacturers.
This approach offers stakeholders a cost-effective process for product approval, while simultaneously advancing the public health by accelerating the time to market for efficacious drugs, devices, and diagnostic procedures (Fig. 12).

Figure 12: Integrated flows across the enterprise. The upper left part represents “feeder” activity that results in characterization and qualification data with two applications. One, the use by biotechnology and pharmaceutical companies in therapeutic clinical trials, is shown on the right. It leads to biomarkers deemed “qualified for use” by national regulatory agencies such as FDA or foreign equivalents. The second use, by the device industry as shown on the left, creates commercial diagnostic tests approved by regulatory agencies for the “appropriate use” of therapies. In this way, the technical validation data are applicable both to clinical trials and to clinical practice, thereby benefitting all stakeholders.

Through this method:
• The collaborative enterprise acts as a sponsor on behalf of its membership, seeking clearance or approval for a test on a class of devices.
• Individual devices are tested for compliance with the class.
• National regulatory agencies (FDA or foreign equivalents) allow use of data collected to qualify a quantitative imaging biomarker (across a multiplicity of implementations) to be contributory as evidence for individual device sponsors to use in seeking market approval of individual implementations (thereby accelerating commercialization).

In the end, more consistency can be expected in image interpretation, which should create more efficient multi-center clinical trials and be useful as patients move among providers. QIBA is a joint effort among many societies and industry partners to build a common framework for characterizing and optimizing performance across systems, centers, and time.
It will be increasingly possible for physicians to rely on consistent quantitative interpretations as the standard of care. In theory, medical workflows incorporating these stable measures should compare favorably to workflows without them in terms of improved patient outcomes and lower costs of care. Without this effort, variations in measures diminish the value of imaging metrics and restrict their utilization.

Informatics Services for Quantitative Imaging links several relevant concepts that are distributed across the conceptual hierarchy. As such, a spanning ontology that draws together these concepts is possible according to the following principles:
• Metadata is data
• Annotation is data
• Data should be structured
• Data models should be defined
• Annotation may often follow a model from another domain
• Data of all these forms is valuable

Figure 13: The semantic infrastructure needed to support quantitative imaging performance assessment encompasses multiple related but distinct concepts and vocabularies to represent them which include characterization of the target population and clinical context for use.

Specifically, the domain includes linked models and controlled vocabularies for the categories identified in Figure 13.

5.1. Input Specifications: QIBA Profiles

The QIBA Profile is a key document used to specify key aspects in the industrialization of a quantitative imaging biomarker (Fig. 14).

Figure 14: QIBA Process to "Industrialize" Quantitative Imaging Biomarkers using Profiles.

As such, it is a document used to record the collaborative work by QIBA participants. The Profile establishes a standard for each biomarker by setting out:
• Claims: tell a user what can be accomplished by following the Profile.
• Details: tell a vendor what must be implemented in their product to declare compliance with the Profile.
The details may also define user procedures necessary for the claims to be achieved.

The process is descriptive rather than prescriptive, i.e., it specifies what to achieve, not how to achieve it. A tiered approach supports the installed base and guides future developments.

A Profile includes the following sections:
I. CLINICAL CONTEXT
II. CLAIMS
III. PROFILE DETAIL
   0. Executive Summary
   1. Context of the Imaging Protocol within the Clinical Trial
   2. Site Selection, Qualification and Training
   3. Subject Scheduling
   4. Subject Preparation
   5. Imaging-related Substance Preparation and Administration
   6. Individual Subject Imaging-related Quality Control
   7. Imaging Procedure
   8. Image Post-processing
   9. Image Analysis
   10. Image Interpretation
   11. Archival and Distribution of Data
   12. Quality Control
   13. Imaging-associated Risks and Risk Management
   APPENDICES
   A. Acknowledgements and Attributions
   B. Background Information
   C. Conventions and Definitions
   D. Documents included in the imaging protocol (e.g., CRFs)
   E. Associated Documents (derived from the imaging protocol or supportive of the imaging protocol)
   F. TBD
   G. Model-specific Instructions and Parameters
IV. COMPLIANCE SECTION
V. ACKNOWLEDGEMENTS

Figure 15: Three performance levels for the indicated context for use are specified, with specifications on the input data required to meet them.

Each of the Detail sections utilizes a method for multiple levels of biomarker performance as a means to extend the utility of the Profiles (Fig. 20). In this program, the expectation is to utilize Profiles as they are developed under QIBA processes and activities as the basis for a computable document with defined semantics covering the necessary abstractions to define the task in a formalized metrology setting.

5.2. Information Models and Ontologies

In order to support these capabilities, the following strategy will be employed in the development and/or use of information models and ontologies (Tables 2 and 3):

Ontologies:

| Ontology | Available through | Extend or just use? | Dynamic connection? | Example of use |
|---|---|---|---|---|
| Systematized Nomenclature of Medicine--Clinical Terms (SNOMED-CT) | UMLS Metathesaurus, NCBO BioPortal | Use | Dynamically read at runtime | Grammar for specifying clinical context and indications for use |
| RadLex (including Playbook) | RSNA through www.radlex.org, or NCBO BioPortal | Use | Dynamically read at runtime | Grammar for representing imaging activities |
| Gene Ontology (GO) | GO Consortium, www.geneontology.org | Use | Dynamically read at runtime | Nouns for representing genes and gene products associated with mechanisms of action |
| International vocabulary of metrology: Basic and general concepts and associated terms (VIM) | International Bureau of Weights and Measures (BIPM) | Use | Updated on release schedule | How to represent measurements and measurement uncertainty |
| Exploratory imaging biomarkers | Paik Lab at Stanford | Extend | Dynamically read at runtime | Grammar for representing imaging biomarkers |

Table 1: Ontologies utilized in meeting the functionality

As a practical matter, many (but not all) of these ontologies have been collected within the NCI Thesaurus (NCIT). Using NCIT to subsume the included ontologies may therefore be a useful design consideration.

Information models:

| Information Model | Available through | Extend or just use? | Dynamic connection? | Example of use |
|---|---|---|---|---|
| Biomedical Research Integrated Domain Group (BRIDG) (drawing in HL7 RIM and SDTM) | caBIG | Use | Updated on release schedule | Data structures for clinical trial steps and regulatory submissions of heterogeneous data across imaging and non-imaging observations |
| Life Sciences Domain Analysis Model (LS-DAM) | caBIG | Use | Updated on release schedule | Data structures for representing multiscale assays and associating them with mechanisms of action that link phenotype to genotype |
| Annotation and Image Markup (AIM) | caBIG | Extend | Updated on release schedule | Data structures for imaging phenotypes |

Table 2: Information Models utilized in meeting the functionality

Our objective is to create a domain analysis model that encompasses the above for the purpose of driving our design.
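The reuse strategy in the ontology and information model tables of Section 5.2 can be pictured as a small registry. The following is a minimal sketch only, not part of the QI-Bench design: the names `Reuse`, `Sync`, `SemanticResource`, `RESOURCES`, and `select` are hypothetical and introduced purely for illustration, while the entries mirror the "Extend or just use?" and "Dynamic connection?" columns of those tables.

```python
from dataclasses import dataclass
from enum import Enum


class Reuse(Enum):
    """Hypothetical encoding of the 'Extend or just use?' column."""
    USE = "use"
    EXTEND = "extend"


class Sync(Enum):
    """Hypothetical encoding of the 'Dynamic connection?' column."""
    RUNTIME = "dynamically read at runtime"
    RELEASE = "updated on release schedule"


@dataclass(frozen=True)
class SemanticResource:
    name: str
    kind: str  # "ontology" or "information model"
    reuse: Reuse
    sync: Sync


# Entries transcribed from the two tables in Section 5.2 (short names only).
RESOURCES = [
    SemanticResource("SNOMED-CT", "ontology", Reuse.USE, Sync.RUNTIME),
    SemanticResource("RadLex", "ontology", Reuse.USE, Sync.RUNTIME),
    SemanticResource("Gene Ontology", "ontology", Reuse.USE, Sync.RUNTIME),
    SemanticResource("VIM", "ontology", Reuse.USE, Sync.RELEASE),
    SemanticResource("Exploratory imaging biomarkers", "ontology",
                     Reuse.EXTEND, Sync.RUNTIME),
    SemanticResource("BRIDG", "information model", Reuse.USE, Sync.RELEASE),
    SemanticResource("LS-DAM", "information model", Reuse.USE, Sync.RELEASE),
    SemanticResource("AIM", "information model", Reuse.EXTEND, Sync.RELEASE),
]


def select(resources, *, reuse=None, sync=None):
    """Return the names of resources matching the given strategy filters.

    A filter left as None is ignored, so select(RESOURCES) lists everything.
    """
    return [
        r.name
        for r in resources
        if (reuse is None or r.reuse is reuse)
        and (sync is None or r.sync is sync)
    ]


if __name__ == "__main__":
    # Resources the project would extend rather than adopt unchanged.
    print(select(RESOURCES, reuse=Reuse.EXTEND))
    # Resources refreshed only when a new release is published.
    print(select(RESOURCES, sync=Sync.RELEASE))
```

Keeping the two strategy columns as separate enumerations makes it easy to ask, for example, which resources need a live connection at runtime versus which can be bundled per release; a real domain analysis model would carry far more detail than this sketch.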