Here - Dartmouth College

advertisement

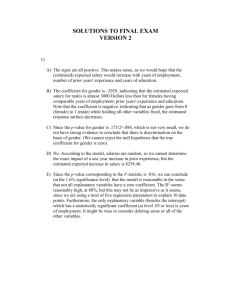

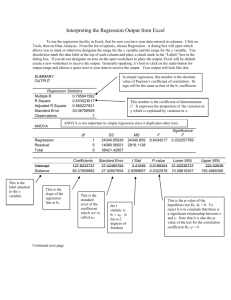

Economics 20
Prof. Patricia M. Anderson

MIDTERM EXAM EXPLANATIONS

1. This was a very straightforward question about the interpretation of dummy variables and reported p-values. We’re told that season is a dummy variable equal to 1 if the athlete’s sport is in season, meaning the comparison group is the off season. Thus, given the coefficient of .099 on season, we can conclude that, all else equal, athletes can expect their GPAs to be about .1 points higher during the sports season. The reported p-value is always that for a 2-sided alternative to the null of no effect (a 1-sided test would imply dividing the reported p-value in half). The reported p-value is .056, implying that we can reject the null of no effect at the 5.6% level against a 2-sided alternative.

2. This one involved a less straightforward interpretation, but was identical to the third problem we discussed for the October 9 In-Class Review Problems. The idea was that if we considered the model $\text{trmgpa} = \beta_0 + \beta_1 \text{math} + \beta_2 \text{verbal} + \beta_3 \text{frstsem} + \beta_4 \text{season} + u$ and the null hypothesis $\beta_1 = \beta_2$, we could rearrange the model to test $\theta = \beta_1 - \beta_2 = 0$. Substituting $\beta_1 = \theta + \beta_2$ gives $\text{trmgpa} = \beta_0 + \theta\,\text{math} + \beta_2(\text{math} + \text{verbal}) + \beta_3 \text{frstsem} + \beta_4 \text{season} + u$, which is what was estimated, with sat = math + verbal. Thus, the coefficient on math tells us about $\beta_1 - \beta_2$ and the coefficient on sat tells us about the effect of verbal. We can then check the p-values and see that we cannot reject that $\beta_1 = \beta_2$, but we can reject that $\beta_2 = 0$. So, we can conclude both that doing better on the verbal portion of the SAT does significantly increase an athlete’s GPA and that the effects of the math and verbal portions of the SAT are not significantly different. Even without realizing that this was the same as the practice problem, or doing the rearrangement, we could answer this question. First, just consider what it means for sat to change, holding math constant.
It means verbal must be changing, and hence that we are measuring the effect of verbal on trmgpa (and it is significantly positive). Similarly, what does it mean for math to change, holding sat constant? It means math and verbal must be changing in an exactly offsetting manner, so if math goes up, verbal must go down by the same amount, and we are just capturing the difference between the effects of math and verbal (and it’s not significantly different from zero).

3. This one is a straightforward t-test, but one where we are not testing a null of 0, as the reported t-statistic assumes. Rather, we have to calculate our own t-statistic. Remember, the t-statistic is always (the estimated coefficient - the assumed null) / the standard error of the estimate. Thus, in this case we have (.919553 - 1)/.0722385 = 1.114 in absolute value. To determine the approximate p-value, we just need to look up the critical values in a t-table. With 29 degrees of freedom (i.e. 31 - 1 - 1), the 2-tailed critical value for the .20 significance level is 1.311. Since 1.114 is below 1.311, we could only reject the null at a significance level above .20. This is exactly like an oct4.log example.

4. This one just requires imposing the restrictions implied by the null hypothesis, and rearranging into a model to be estimated. So if we set $\beta_0 = 0$ and $\beta_1 = 1$ we get $\text{exchange} = \text{ppp} + u$, and when we rearrange we get $\text{exchange} - \text{ppp} = u$ as the restricted model. This is similar to an oct9.log example.

5. We are given the restricted SSR of .422 and we can read the unrestricted SSR off the printout as .404, but to compute the F-statistic we also need the degrees of freedom. The numerator degrees of freedom is always the number of restrictions, so that’s 2. The denominator degrees of freedom are the degrees of freedom from the unrestricted model, so that’s 29. Now we just plug and chug with the F-statistic formula to get [(.422 - .404)/2] / [.404/29] = .646 for our F-statistic.
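The arithmetic in explanations 3 and 5 can be checked with a few lines of Python. This is only a sketch: the function names `t_stat` and `f_stat` are made up for illustration, and the inputs are the numbers quoted in the explanations.

```python
def t_stat(estimate, null_value, std_err):
    """t = (estimated coefficient - hypothesized value) / standard error."""
    return (estimate - null_value) / std_err


def f_stat(ssr_restricted, ssr_unrestricted, num_restrictions, df_unrestricted):
    """F = [(SSR_r - SSR_ur) / q] / [SSR_ur / df_ur]."""
    numerator = (ssr_restricted - ssr_unrestricted) / num_restrictions
    denominator = ssr_unrestricted / df_unrestricted
    return numerator / denominator


# Explanation 3: test the null that the slope equals 1 (not 0).
t = t_stat(0.919553, 1.0, 0.0722385)

# Explanation 5: 2 restrictions, 29 degrees of freedom in the unrestricted model.
F = f_stat(0.422, 0.404, 2, 29)

print(round(abs(t), 3))  # 1.114
print(round(F, 3))       # 0.646
```

Both values still have to be compared with tabulated critical values (1.311 for the t-test, 2.5 for the F-test), which this sketch does not compute.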
Looking in the 10% F-table, we see that the critical value with 2, 29 df is 2.5, so we fail to reject the null at the .10 level.

6. This is an application of the principle that if $\mathrm{Var}(u) = \sigma^2 h(x)$, then we can correct for the problem of heteroskedasticity by using WLS, where the weight is $1/h(x)$. In this case, we’re told that $1/h(x) = \text{ppp}$, so we can solve for $h(x) = 1/\text{ppp}$ and determine that $\mathrm{Var}(u) = \sigma^2 h(x) = \sigma^2/\text{ppp}$. This is similar to multiple choice question 3 on PS 3.

7. This is a very straightforward question about the interpretation of a log-log model. We know that log-log models imply a constant elasticity (see Stata problem 1 on PS3), because the estimated coefficient is interpreted as the elasticity. In this case, we have that the elasticity of per pupil spending with respect to per capita income is .21, so we know that $\%\Delta\,\text{per pupil spending} / \%\Delta\,\text{per capita income} = .21$. Thus increasing per capita income by 100% increases spending by 21%, since 21/100 = .21.

8. This is a classic example of the rare case where a simple regression is identical to a multiple regression because the additional variable is correlated with neither the included variable nor the dependent variable. In this case, the additional variable is a random number, so it should definitely meet these conditions.

9. This question is inspired by the final problem we discussed for the October 25 In-Class Review Problems. As in that case, since the original model is a simple regression, the Breusch-Pagan regression is also a simple regression, and the overall F-test will have the identical implication as a simple t-test on lnpcy. Here we are told the p-value from the overall F-test is .014, so we can reject the null of homoskedasticity at the 1.4% level, and we can be sure the p-value of the t-test for the significance of lnpcy in the Breusch-Pagan regression is also .014. Given that we’ve rejected homoskedasticity, we know that WLS will be a more efficient estimator.

10. This is just a simple example of changing the units of measurement, similar to questions 5 and 6 on PS1. We know that predicted weekly hours of sleep = 60 - .15 × (weekly hours of work) - .20 × education, and that there are 60 minutes in an hour. If we were just changing hours of work into minutes, we would divide its coefficient by 60, while changing the dependent variable into minutes implies multiplying all coefficients by 60. Thus, there is no change in the coefficient on work, because both time measures are changed to minutes (essentially we both divided it by 60 and multiplied it by 60). We end up then with coefficients of 3600, -.15 and -12, since 60*60 = 3600 and 60*-.20 = -12.

11. This is a question about omitted variable bias, similar to the last Oct 4 discussion problem. The new information about children implies a model like $\text{sleep} = \beta_0 + \beta_1 \text{work} + \beta_2 \text{education} + \beta_3 \text{children} + u$, where $\beta_3$ is negative but we have omitted children when estimating $\beta_1$. We know that $E(\text{our estimate of } \beta_1) = \beta_1 + \beta_3 \delta$, where $\delta$ has the sign of the correlation between children and work. Recall that as long as education is uncorrelated with either work or children, we can use the simple case as our guide. We’re told that $\delta$ is negative, so $\beta_3 \delta$ is positive and our original model has a positive bias. Thus, if we included children in the model we would remove this positive bias and expect to get something less than the current estimate of -.15.

12. This is a question that reminds us of one of the nice attributes of taking logs of dollar values: we don’t have to worry about inflating or deflating the dollar amounts, because we get the same slope estimate either way. This exact principle is illustrated in the September 30 log file. Intuitively it’s because we are interpreting the coefficient as a percentage change. To see mathematically what is going on, consider that the model we estimate is $\ln(\text{salary}) = \beta_0 + \beta_1 \text{rating} + u$.
If we first deflated salary, we would substitute in $\ln(\text{salary} \times \text{inflation factor}) = \ln(\text{salary}) + \ln(\text{inflation factor})$ and rearrange to get $\ln(\text{salary} \times \text{inflation factor}) = \beta_0 + \ln(\text{inflation factor}) + \beta_1 \text{rating} + u$. Thus, the coefficient on rating would be the same and only the constant would change, shifting by $\ln(\text{inflation factor})$.

13. This is a pretty straightforward question about interpreting groups of dummy variables. We are told that catcher is the comparison group. Thus, the p-value on shrtstop tells us that shortstops and catchers do not earn significantly different salaries. Comparing the coefficient on outfield with the others tells us that outfielders earn more than any other non-pitcher, while the p-value only tells us the significance compared to catchers. Finally, if frstbase = .498 and scndbase = .368, there is a .13 difference between how much more first basemen make than catchers and how much more second basemen make than catchers. Thus, we know that first basemen earn about 13 percent more than second basemen, but we cannot tell if this is significantly different from zero based on what is given in the printout.

14. In this example, position was a categorical variable that has been turned into a group of dummy variables, so testing the null that position does not affect salary is equivalent to an F-test for the joint significance of all of the position dummy variables. Since these are the only variables in the regression, the reported overall F-test carries out this test for us. Since the p-value for this test is .0665, at the 5% level we cannot reject the null that position does not affect salary.

15. This is about both the definition and interpretation of the $R^2$. We know that $R^2$ is a measure of goodness of fit, and is defined as either SSE/SST or 1 - SSR/SST, where SSE is the explained sum of squares and SSR is the residual sum of squares. That is, it is the fraction of the total variance that is explained by the model. The notation $R^2$ derives from the fact that it can also be calculated as the squared correlation between $y$ and $\hat{y}$, where we often refer to a correlation as r.
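The squared-correlation property can be checked numerically. The sketch below uses made-up data and hypothetical helper names (`ols_simple`, `correlation`); it is not taken from the course log files, and it covers only the simple-regression case.

```python
from math import sqrt
from statistics import mean


def ols_simple(x, y):
    """Return (intercept, slope) from a simple OLS regression of y on x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def correlation(a, b):
    """Sample correlation coefficient between two equal-length lists."""
    a_bar, b_bar = mean(a), mean(b)
    cov = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    var_a = sum((ai - a_bar) ** 2 for ai in a)
    var_b = sum((bi - b_bar) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


# Made-up illustrative data (an assumption, not from the exam).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.1, 2.9, 4.2, 4.8, 6.3, 6.6]

b0, b1 = ols_simple(x, y)
y_hat = [b0 + b1 * xi for xi in x]

ssr = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual sum of squares
sst = sum((yi - mean(y)) ** 2 for yi in y)             # total sum of squares

r2_from_ssr = 1 - ssr / sst
r2_from_corr = correlation(y, y_hat) ** 2

# The two definitions agree up to floating-point error.
print(abs(r2_from_ssr - r2_from_corr) < 1e-9)
```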
While we didn’t prove this mathematically, we did show (see sep27.log, for example) that it was true. While $R^2$ is a goodness-of-fit measure, it can only be used to compare models with the same y and the same number of x variables. $R^2$ cannot fall when another x is added to the model, so the adjusted $R^2$ is more appropriate when the number of x variables differs.

16. This is a simple case of interpreting a dummy variable. We need only look at the coefficient on black to see how, all else equal, the sentence of a black person who kills a white person compares to that of a white person who kills a white person. Note that the sentence of a black person who kills a black person would also be 1.71 years more than that of a white person who kills a black person.

17. The key to this question is realizing that the model given is not actually the restricted model, since it already allows the race of the offender to change the intercept. If the sentencing model is completely different for black offenders, we are considering the unrestricted model $\text{priyears} = \beta_0 + \beta_1 \text{prviolnu} + \beta_2 \text{stranger} + \beta_3 \text{vblack} + \beta_4 \text{black} + \beta_5\,\text{prviolnu} \times \text{black} + \beta_6\,\text{stranger} \times \text{black} + \beta_7\,\text{vblack} \times \text{black} + u$, and the null hypothesis being tested is $\beta_4 = 0$, $\beta_5 = 0$, $\beta_6 = 0$, $\beta_7 = 0$. Thus we are testing 4 restrictions, and the degrees of freedom of the unrestricted model is 2679 - 7 - 1 = 2671, so we have 4 numerator and 2671 denominator degrees of freedom. To calculate this based totally on the formula for the Chow test, you just need to consider that the estimated model would be $\text{priyears} = \beta_0 + \beta_1 \text{prviolnu} + \beta_2 \text{stranger} + \beta_3 \text{vblack} + u$, so that k = 3 and we have 3 + 1 = 4 numerator and 2679 - 2*(3 + 1) = 2671 denominator degrees of freedom. Stata problem 1 from PS3 demonstrated how to think about this type of problem.

18. This asks you to think about the effect of sample size on the estimates. Since the OLS estimator is unbiased in a model satisfying the Gauss-Markov assumptions, the expected value of the coefficients is the same no matter the sample size.
Thus, there is no systematic difference across researchers in the estimated coefficients. However, we do know that the standard errors of the OLS estimator are inversely related to sample size. Thus, there is a systematic difference across researchers in the size of the estimated standard errors, with Researcher A having the largest and Researcher C having the smallest.

19. This is an example of the “partialling out” interpretation of multiple regression (in fact this exact example can be seen in sep30.log). Intuitively, what is going on? The residuals from the first regression are the part of SAT score that is not explained by gender. That is, we get only the variation in SAT score that is left after holding sex constant. We then see what effect this residual variation in SAT score has on college GPA. But that is exactly what a multiple regression is doing, since the estimate of $\beta_1$ obtained from the model $\text{college GPA} = \beta_0 + \beta_1 \text{SAT} + \beta_2 \text{female} + u$ tells us the effect of variation in SAT score on college GPA, holding sex constant.

20. This is a fairly straightforward example of interpreting a model with interactions (just like part d of Stata problem 1 on PS2). The marginal effect of coke is roughly -.0235 + .001*age (since agecoke = age*coke). For a 16-year-old, then, we have that the marginal effect is -.0234931 + .0011153*16 = -.0056, so each additional use of coke reduces condom use by just under .6 percentage points. Note the difference between percentage points and percent. If the probability of an outcome has dropped from .50 to .40, what once was a 50% chance is now a 40% chance, and the probability has dropped by 10 percentage points. However, that is a 20 percent drop, because 10 is 20 percent of 50.

21. This question gets at the best way to think about the intercept in a model where it makes no sense to consider all of the x’s to be set to zero. Since we know that the average person in the data is 16, we can plug in 16 for age before setting the dummy variables to zero.
This tells us that the average non-pot-smoking, non-coke-snorting female in the data has a 59% chance of having worn a condom, since -.042164*16 + 1.265384 = .59.

22. This question is just like the last two problems we discussed for the October 14 In-Class Review Problems. Changing the dependent variable from equal to 1 for an event occurring to equal to 1 for an event not occurring has no effect on the $R^2$. Since the interpretation now is the effect of x on the probability that an event does not occur, the slope coefficients would change sign. Similarly, since the probability of the event not occurring is 1 - the probability of the event occurring, the new intercept is 1 - the old intercept. So, in this case, $R^2$ would be .0419, the intercept would be 1 - 1.265384 = -.265384, and all other coefficients would change sign. Note that if you want to convince yourself about the new intercept, repeat the exercise from 21 above and discover that the average non-pot-smoking, non-coke-snorting female in the data has a 41% chance of having not worn a condom.

23. This question is about the drawbacks to using a linear probability model. While it’s true that such a model will be heteroskedastic, we estimated robust standard errors, so we can still make inferences. Similarly, while the errors are likely non-normal, it is a large sample, so we can still make inferences by relying on asymptotic normality. What we can’t avoid is the fact that any linear probability model may produce predicted values that are outside the [0, 1] interval.

24. This question reminds us that in order to derive the OLS estimator, we imposed our population assumptions that $E(u) = 0$ and $E(ux) = 0$ onto the sample. It is because of this first assumption that the mean of the residuals is always 0. Because of the second one, the covariance between the residuals and the x’s will always be zero. So what does this have to do with the question?
If there is no correlation between the residuals and the x’s, then after we run a regression of the residuals on all of the x’s, we can be absolutely certain that the $R^2$ is equal to 0. You can see an example of this in oct16.log. You could also just approach this question intuitively. The residuals represent the variation in lwage that can’t be explained by educ, exper, tenure, exper2 and tenure2. Thus, we cannot explain any more of the variation by regressing it again on educ, exper, tenure, exper2 and tenure2, and we must obtain an $R^2$ equal to 0.

25. This is pretty much straight out of the book from the beginning of Ch 8. Pages 257-258 discuss how the assumption of homoskedasticity played no role in proving unbiasedness, that the goodness-of-fit measure $R^2$ is also unaffected by the presence of heteroskedasticity, and that SSR/n consistently estimates $\sigma^2$ whether or not we have homoskedasticity.
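The claim in explanation 24 can also be checked numerically. What follows is a minimal sketch, assuming a simple regression with a single x (the exam question has several regressors) and made-up data; `ols_simple` and `fit` are hypothetical helper names, not anything from oct16.log.

```python
from statistics import mean


def ols_simple(x, y):
    """Return (intercept, slope) from a simple OLS regression of y on x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def fit(x, y):
    """Regress y on x and return (R-squared, list of residuals)."""
    b0, b1 = ols_simple(x, y)
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    ssr = sum(e ** 2 for e in resid)
    sst = sum((yi - mean(y)) ** 2 for yi in y)
    return 1 - ssr / sst, resid


# Made-up illustrative data (an assumption, not from the exam).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [1.2, 2.3, 2.9, 4.4, 4.8, 6.1, 7.3, 7.7]

# First regression: y on x. Keep the residuals.
r2_first, resid = fit(x, y)

# Second regression: the residuals on the same x.
r2_second, _ = fit(x, resid)

print(abs(mean(resid)) < 1e-9)  # residuals average to zero
print(abs(r2_second) < 1e-9)    # x explains none of the residual variation
```

The same logic carries over to multiple regression, since OLS forces the residuals to be uncorrelated with every included regressor.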