Density Functional Theory: A primer

advertisement

Density Functional Theory: A primer

Sergio Aragon

San Francisco State University

Abstract

A condensed description of Hartree-Fock methods and Density Functional theory is

presented. We show that the mathematical form of both methods is the same and that

DFT achieves accuracy better than or equal to HF//MP2 methods more economically and

for a much larger class of molecules, including transition metals.

I.

Wavefunctions & Schroedinger’s Equation

To study and predict the properties of atomic and molecular systems (including solid

state matter), one must use Quantum Mechanics. For molecular systems, the energy

shifts caused by the presence of magnetic dipoles of the electrons and nuclei are small

compared to chemical binding energies, and can usually be treated successfully by

perturbation theory. In addition, because the chemistry focus is on the valence electrons,

the core electrons can be treated approximately and thus relativistic effects, which affect

mostly the core, can be neglected. For chemistry and the solid state, then, the basic

characterization of structure and reactivity can be obtained from the non-relativistic

Schroedinger equation without magnetic effects. For the characterization of structure, the

time independent Schroedinger Equation suffices, while to study the interaction of

molecular systems with electromagnetic radiation, the full time dependent Schroedinger

Equation is necessary. The following discussion is restricted to the time independent

Schroedinger Equation in the Born-Oppenheimer approximation in which the electronic

structure is computed at fixed nuclear positions. For a molecular system, it is:

H (x1,x2,…,xN; R1,…,RNn) = E (x1,x2,…,xN; R1,…,RNn)

(1)

Where x1,x2,…,xN represent the spin and cartesian coordinates of the N electrons in the

molecule, and R1,…,RNn are the nuclear coordinates of the Nn nuclei in the molecule.

The Hamiltonian operator is given by,

H = Te + Tn +Ven +Vee +Vnn

(2)

where the kinetic and potential energies of N electrons (e) and Nn nuclei (n) are given

by,

Te =

Tn =

2

2 me

2

2

Ne

2

1

2k

k 1 m k

Nn

(3)

Zk e2

Ven =

1 k 1 40 | r Rk |

Ne N n

Ne

Vee =

Ne

e2

1 4 0 | r r |

Zk Z je2

Vnn =

j 1 k j 4 0 | R j Rk |

Nn Nn

The solutions to this equation must be obtained with several important restrictions. First,

the wavefunction must be normalizable, and, second, it must be antisymmetric with

respect to the exchange of any pair of electron spin-coordinates xi, xj because electrons

are Fermions. This requirement is equivalent to the usually stated Pauli Exclusion

Principle: no two electrons of the same spin can occupy the same space point at the same

time. Equivalently, (x1,x1,…) =0. This implies that two electrons of the same spin are

automatically kept away from each other by the antisymmetry requirement and they do

not move independently of each other. Their motion is correlated, regardless of the fact

that they are electrically charged objects.

Example I: The Hydrogen Molecule

A simple example of the above requirements is the approximate MO-LCAO minimal

basis set wavefunction of the 2-electron hydrogen molecule. Let R = |R1-R2|, then the

unnormalized wavefunction may be written as,

(x1,x2; R) = [1s1(r1;R)+1s2(r1;R)][ 1s1(r2;R)+1s2(r2;R)][(1)(2)-(1)(2)].

The spatial part of the wavefunction containing the 1s orbitals (each centered on

nucleus 1 and 2) is symmetric with respect to the exchange of electron coordinates r1

and r2, but the spin part of the wavefunction is antisymmetric with respect to the

exchange of electron 1 for 2. This wavefunction satisfies the Pauli exclusion

principle (i.e., it is overall antisymmetric). Note that the meaning of the coordinate x1

implies the simultaneous specification of the electron Cartesian coordinates r1 and the

spin state of the electron (or). The normalization can be done in confocal

elliptical coordinates and is omitted for simplicity. This wavefunction is too crude to

yield an accurate value for the coordinate R (it is too large) and for the ground state

energy E0 (it is not negative enough), but it does give us an idea to start from.

Exercise: Show that the above wavefunction can be written as a single determinant.

The molecular structure problem entails finding the values of the R1,…,RNn nuclear

coordinates at which the energy E is minimum. This value of the energy, E0, is called the

ground state energy, and the resulting structure, the ground state molecular geometry. We

would also like to know a variety of other properties of the ground state such as the

electric moments (dipole, quadrupole, ….), the electrostatic potential on the “boundary of

the molecule”, the effective electric charges on the nuclei, and other such properties.

If we solve eq. (1) completely, we obtain much more than that. In fact, quantum

mechanics tells us that all possible measurable properties of the isolated molecular

system are contained in the wavefunctions (x1,x2,…,xN; R1,…,RNn) for all the states of

the molecule. In order for all this information to be contained there, the wavefunction is

very complex. It is a high dimensional object, dependent on 3 N electron Cartesian

coordinates, N electron spin coordinates, and 3 Nn nuclear coordinates. For a molecule

such as benzene, with 12 nuclei and 42 electrons, this object exists in 162-6=156

dimensional Cartesian space! The determinant has 42! = 1.4 1051 terms! We can’t even

make a picture of it. If we were to store it as a numerical object, with a resolution of 0.1

au out to 10 au, we would need 10312 numbers to store, at single precision (4

bytes/number), at 2 Gbytes/cm2 (counting electrical connections access), we would need

more than 10293 Km2 of surface to store that information. The Earth has a surface area of

less than 109 Km2. The promised knowledge hidden in the Schroedinger Equation is not

quite easily accessible. We must make do with much much less. How much less can we

do with?

The situation is not as hopeless as this trivial computation makes it sound. First of all, the

majority of the storage space can be avoided by using a basis set of functions, usually

centered around the nuclei, to expand the unknown wavefunction. Then only a smaller set

of coefficients and parameters are required. The remaining problem, however, is that the

mathematical representation requires the use of an infinite set of basis functions and in

practice we must use only a finite set. The choice of basis set becomes a crucial point of

departure.

Furthermore, the fundamental behavior of the electrons is to exquisitively avoid each

other in a multi-electron system. The motion of the electrons is highly correlated.

Electrons of the same spin are automatically kept away from each other by the

antisymmetry requirement (exchange correlation or Fermi correlation), but electrons of

different spin must be kept away from each other by the spatial form of the wavefunction

(Coulomb correlation). The latter can only be done approximately in practice.

In wavefunction based methods, the simplest treatment with useable accuracy is the

Hartree-Fock method. In this method, one assumes that the wavefunction can be written

as the antisymmetric combination of one-electron orbitals (a Slater determinant) and

thereby replaces the exact Hamiltonian of eq. (2) by that of a set of non-interacting

“electrons” where each moves in the average field of the rest of them (a Mean Field

Theory). The orbitals obey non-linear integro-differential Fock equations, and the

solution must be obtained by iterative methods until self-consistence is achieved (an SCF

method).

Example II: The Hartree-Fock Method

For an even number of electrons, one Slater determinant suffices to setup the

Hartree-Fock equations. Let the wavefunction be composed of the spin-orbitals i =

i or i. Then,

1 ( x1 ) 2 ( x1 ) . . N ( x1 )

1 ( x2 )

.

. . N ( x2 )

1

HF =

(4)

.

.

. .

.

N!

.

.

. .

.

1 ( x N )

.

. . N (xN )

The fundamental principle that enables us to obtain an approximate solution to the

Schroedinger Equation is the Variational Principle: E = <|H|> E0. We vary

the spin-orbitals themselves in order to minimize the value of E, obtaining a quality

approximation to the ground state energy. Substituting the Hartree-Fock

wavefunction into the expectation value, we obtain,

EHF = <HF |H|HF > =

1 N N

1

1

|

h

|

[ | | | | ] Vnn

2 1 1

r12

r12

1

N

(5)

1 2 Nn Z k

where the h

is a one electron operator containing the electron

2

k 1 rk

kinetic energy and the electron-nucleus attraction. The double integrals in the

double summation are the Coulomb and Exchange integrals, respectively. The

antisymmetry requirement makes the Exchange integral appear. The variational

principle, applied to eq. (5) yields the integro-differential equation that the spinorbitals must satisfy:

{h VHF ( x )}

(6)

N

VHF ( x ) [ J ( x ) K ( x )]

1

J ( x1 ) | ( x2 ) | 2

dx2

r12

K ( x1 ) ( x1 ) [ ( x2 ) * ( x2 )

dx2

] ( x1 )

r12

where the effective Hartree-Fock potential VHF is a operator containing both a

Coulomb (J) and a non-local Exchange operator (K) added over all electrons (the

mean field). These equations represent a fictitious system of non-interacting

particles that approximates the electron behavior. Furthermore, if we assume that

upon removal of a given electron (ionization) from the system, that the distribution

of the rest of the electrons does not change (which is not true), then the energy

required to ionize the system from the orbital is precisely the eigenvalue .

This is a statement of Koopman’s theorem and provides a physical interpretation for

the orbital eigenvalues. Note that it is approximate, with unknown error.

In actual practice, the HF spin-orbitals are expanded in a basis set and the Variational

problem becomes a simpler one of finding the optimum values of the expansion

coefficients. When this is done, the practical equations are called the Roothaan-HartreeFock equations. This has been discussed in detail previously.

The Hartree-Fock method satisfies the exchange requirements perfectly, but misses badly

in the rest of the electron correlation effects. To obtain chemical accuracy, post-HartreeFock methods must be used in addition, such as Moller-Plesset perturbation theory,

Configuration Interaction, or Coupled Cluster methods. These are computationally

expensive, limiting the size of the system to which they can be applied, and of very

limited application to transition metals. What else can we do?

II.

Electron density instead of the wavefunction

Can we obtain the ground state properties of the molecular system without explicitly

constructing the very complex wavefunction? In 1964, Hohenberg & Kohn demonstrated

that, surprisingly, the answer is YES. The reason this is of fundamental importance is

that the electron density, for any molecular system, is a function of only three spatial

coordinates (for a given set of nuclear positions)! Let’s define it:

(r1) = N | x1 x 2 ... x N R1 ... R Nn | 2 d 1 dx 2 ...dx N

(7)

This is the integral over all electron cartesian and spin coordinates, except the Cartesian

ones of electron 1, of the probability distribution defined by the wavefunction, multiplied

by the number of electrons in the system. The integral of the density over all space equals

N. The Hohenberg-Kohn theorems are deceptively simple:

Theorem 1: The external potential, Ven is determined, up to an additive constant by the

electron density. Since (r1) determines the number of electrons N, then the density also

determines the ground state wavefunction and all other electronic properties of the

system.

Proof: Prove by contradiction. Assume that there exist two different external potentials

V=Ven +Vnn and V’=Ven’ +Vnn’ which both give the same electron density (r1).

Then we have two Hamiltonians, H and H’ with the same ground state density and

different and ’. Now we use the variational principle, taking ’ as a trial function

for the H Hamiltonian, to obtain:

E0 < <’’<’'’<’'’

= E0' + <’V-V'’

In addition, we can take the as a trial function for the H’ Hamiltonian, to obtain:

E0’ < <'<<’

= E0 + <V’-V

Now we recognize that the expectation value of the difference in the external potentials

differ only in sign because we assumed that the electron density is the same. When we

add the two equations, we then obtain the contradiction:

E0 + E0’ < E0’ + E0

(10)

Thus we conclude there there exists a unique map between the external potential V and

the ground state density. This implies that all the energies, including the total energy, is a

functional of the density. We write this as E = E[]. The density determines the

Hamiltonian, and thereby, the wavefunction.

Detour: What is a functional?

We give an intuitive definition by comparing the situation to the more familiar

notion of function. A function f: R R is a map (or assignment) between one

number of the set R (say the real numbers) to another number of the set R. We

usually denote this assignment by y = f(x), and we agree that to each value of x

there corresponds only one value of y. On the other hand, a functional is a map

between a set of functions and a number; F: {f(x)} R, and we denote this by

F[f(x)] = y. A simple example is the definite integration operator:

b

F [ g ] g ( x)dx . The specification of the integrable function g(x) produces a

a

number defined in terms of the constants a and b.

Theorem 2: Variational Principle

Suppose we have a trial density ’. Then this density defines its own wavefunction ’,

and the expectation value of the true Hamiltonian satisfies the variational principle:

<’’Vee[ (r )V (r )dr ’] E0[0] = <00

Thus, the correct density is the one that produces the minimum energy. Is there any

system for which we can explicitly write out the energy functional? The box below gives

such an example.

Thomas-Fermi-Dirac Atomic Model

The uniform electron gas consists of N=Z electrons in a volume which is uniformily

positive so as to keep the entire system electrically neutral. For this system, Thomas

and Fermi (1927) computed the kinetic energy, and Dirac (1930) computed the

Exchange correlation energy:

(r ) 1 (r1 ) (r2 )

5/3

ETFD[] = C F (r )dr Z

dr

dr1dr2 C X 4 / 3 (r )dr

r

2

r12

(12)

The values of the constants C are of order unity. This model reproduces the Madelung

Rule for the orbital energy filling of electrons in atoms, but is not otherwise accurate,

and when applied to molecules, it produces no binding. However, for the electron

gas, the Coulomb correlation can also be computed, albeit, numerically (Ceperly &

Alder,1980) and this is included in many modern functionals.

The Kohn-Sham Equations

One of the main problems of the Thomas-Fermi-Dirac theory is that the kinetic energy

functional is not representative of the very inhomogenous electron density in atoms and

molecules. The KS method relies on the introduction of a fictitious reference systems of

non-interacting electrons that is constructed to have the same electron density as the

system of interest. These electrons must move in a complex potential that takes into

account the actual forms of electron correlation and the difference between the kinetic

energy functional of the reference system and the real system.

The reference system of ficticious non-interacting particles has a rigorous solution in

terms of single electron wavefunctions, or molecular orbitals. These are called the KohnSham orbitals. Thus, we can express the kinetic energy exactly for the reference system

as the sum of the expectation value of the Laplacian for each “electron”.

TS [ S ]

1 N

j | 2j | j

2 j 1

provided that the density of the real system, = S =

(13)

N

j 1

energy functional of the real system is written as:

| j (r , ) | 2 . Then, the

E[] = TS[] + J[] + Vnn + Exc[]

(14)

Where all the unknown pieces are collected (swept under the rug!) into the Exchange

Correlation energy,

Exc[SEee[J[

The above expression for the Exchange-Correlation energy includes the error in the

kinetic energy, the correlation effects, and also the correction for the self –interaction of

the electron that has been included in the classical Coulomb integral J.

Now we must apply the variational principle and the variables to be adjusted are the KS

orbitals themselves. In practice, this is done just like in the HF method, a basis set

expansion is used to represent the KS orbitals. Thus, similar computational engines are

required for both DFT and HF theory and it is not then a surprise that computational

packages have them often both available as options. We write the entire energy functional

in terms of the KS orbitals:

1 N

1 N N

1

2

E[ ] j | j | j j i |

| j i Exc[ ]

2 j 1

2 j 1 i 1

r12

Nn N

Nn Nn

a b

1

(16)

|

|

a

j

j

ra1

a 1 j 1

a 1 b 1 rab

The setting the functional derivative of this expression to zero yields the equations that

the KS orbitals must satisfy to provide the same density for both systems:

Nn

Z

1

{ 2j a VKS ( x j )} j j j

2

a 1 raj

(17)

where the effective KS potential in which the non-interacting particles move contains the

classical electrostatic interaction, the Exchange-Correlation potential and the electron

nucleus attraction.

(r2 )

VKS (r1 )

dr2 Vxc(r1 )

r12

(18)

Note that eq. 17 is identical in mathematical form to the HF equations satisfied by the

Hartree-Fock orbitals. Thus, the same methods are used to solve them. Since the

unknown density is required to write out the KS potential, the equations are also pseudoeigenvalue equations and must be solved by an SCF method. The fundamental difference

lies in the form of the VKS potential versus the VHF potential. Each of them has an

ordinary Coulomb repulsion term and an Exchange term. In the HF case, the Exchange

terms is known and exact for the model, while the Coulomb correlation term is missing.

In the DFT case, the Vxc term includes UNKNOWN functional forms for the Coulomb

and Exchange correlation and the correction to the kinetic energy.

Why is this advantegous? It might not seem we have gained anything because we knew

ahead of time that we needed to include all these correlation effects, and pasting an

unknown term in the equations as a place holder may not seem like a great advance. The

reason that it does represent a fundamental advance is that we don’t have to completely

guess what to put in for Vxc because we do have the exactly soluble uniform electron

gas.

III.

Approximations for the Exchange-Correlation Functional

The simplest approximation that one can make is to imagine that at every point in space

we can use the value of the density that the uniform electron gas would have at that point,

and allow it to vary from point to point. This is called the Local Density Approximation

(LDA). In more detail, we write:

E xcLDA [ ] (r ) xc ( (r )) dr

,

xc ( (r )) x ( (r )) c ( (r ))

(19)

1/ 3

3 3 (( r ))

where the x ( (r ))

is the Dirac/Slater Exchange energy of the gas.

4

The Coulomb correlation piece is obtained from interpolations of the Monte Carlo data of

Ceperly and Alder (1980). The typical LDA approximation is the SWVN

implementation that implies Slater Exchange plus Coulomb correlation obtained by

Vosko, Wilk & Nussair (1980). Perdew & Wang (1992) made a more accurate

implementation.

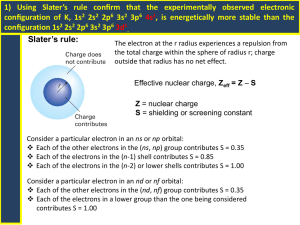

Schematic of the LDA (Koch & Holthausen).

The LDA by its self does not contain sufficient accuracy for chemical applications. It is

necessary to include terms that explicitly take into account the spatial variation of the

density. This the formulation of functionals within the Generalized Gradient

Approximation (GGA) is what made good accuracy density functional theory possible.

As above, approximations are made separately for the Exchange and Coulomb portions.

The Coulomb expressions are quite complex and we will skip writing them down. The

popular choices are:

LYP : Lee, Parr &Yang (1988)

PW91: Perdew & Wang (1991)

P86: Perdew (1986)

These can be combined with Exchange Correlation functionals. These have the form,

E xCGA E xLDA F (s ) 4 / 3 (r )dr

, s

| |

4 / 3

(20)

Two commonly used choices for the Exchange functional are:

Becke (1988): F B

s

(21)

1 6s Sinh 1 ( s )

1 / 15

(22)

F 86 1 1.296 x 2 14 x 4 0.2 x 6

s

x

(24 2 )1 / 3

The b parameter in the Becke form is fit to accurate densities of a large number of atoms,

while that of Perdew does not have any empirical parameters. Typical combinations in

common use give rise to the functionals:

Perdew (1986) :

Becke & Perdew/Wang: BPW91

Becke & Lee/Parr/Yang: BLYP

These functionals are all LOCAL in the mathematical sense, but go beyond the Local

Density Approximation.

Hybrid Functionals

It should be clear that the HF method has a systematic error. The lack of Coulomb

correlation implies that the energies are higher than they should be as charges are allowed

to get closer to each other than they should be, thus the bond lengths tend to be smaller

and the binding energies larger than experiment. In DFT, on the other hand, the

systematic errors occur in precisely the opposite direction. Thus a combination of the HF

Exchange and the functional exchange should improve things considerably. The popular

B3LYP functional is the prime example of this kind. The performance is excellent, with

an RMS error of about 2 kcal/mol on the large G2 molecule reference set. This

functional has the form:

E xcB 3 LYP (1 a) E xLSD aE xc 0 bE cB 88 cE cLYP (1 c) E cLSD

(23)

The parameters a,b,c control the relative amounts of HF Exchange and Coulomb

correlation from the various sources and they are determined empirically (by fitting to

data). The performance is very good, as we will discuss further in the next section.

IV.

Performance

V.

References

1. W. Koch, M. C. Holthausen, “A Chemist’s Guide to Density Functional Theory”,

Wiley-VCH: New York, 2000.

2.