Time-dependent perturbation theory


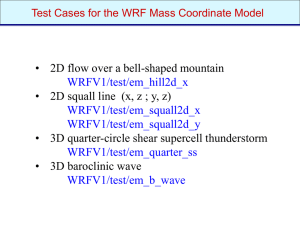

MODULE 17 Time-dependent Perturbation Theory

In order to understand the properties of molecules it is necessary to see how systems respond to newly imposed perturbations on their way to settling into different stationary states. This is particularly true for molecules that are exposed to electromagnetic radiation, where the perturbation is a rapidly oscillating electromagnetic field. Time-dependent perturbation theory allows us to calculate transition probabilities and rates, very important properties in the Photosciences. The approach we shall use is that for the time-independent situation, viz., examine a two-state system in detail and then outline the general case of arbitrary complexity.

The two-state system

We can write the total hamiltonian for the system as the sum of a stationary state term and a time-dependent perturbation

$$\hat{H} = \hat{H}^{(0)} + \hat{H}^{(1)}(t) \tag{17.1}$$

and suppose that the perturbation is one that oscillates at an angular frequency, $\omega$, then

$$\hat{H}^{(1)}(t) = 2\hat{H}^{(1)}\cos\omega t \tag{17.2}$$

where $\hat{H}^{(1)}$ is the hamiltonian for a time-independent perturbation and the factor 2 is for later convenience. Our time-dependent Schrödinger equation is

$$\hat{H}\Psi = i\hbar\frac{\partial\Psi}{\partial t} \tag{17.3}$$

As before we consider a pair of eigenstates $|1\rangle$ and $|2\rangle$ representing the (time-independent) wavefunctions $\psi_1$ and $\psi_2$ with energy eigenvalues $E_1$ and $E_2$. These wavefunctions are the solutions of the equation

$$\hat{H}^{(0)}\psi_n = E_n\psi_n \tag{17.4}$$

and are related to the time-dependent functions according to

$$\Psi_n(t) = \psi_n e^{-iE_n t/\hbar} \tag{17.5}$$

When the perturbation is acting, the state of the system can be expressed as a linear combination of the basis functions defined by the set in equation (17.5). We confine ourselves to just two of them and the linear combination becomes

$$\Psi(t) = a_1(t)\Psi_1(t) + a_2(t)\Psi_2(t) \tag{17.6}$$

Notice that we are allowing that the coefficients are time-dependent because the composition of the state may evolve with time.
The overall time dependence of the wavefunction is partly due to the changes in the basis functions and partly due to the way the coefficients change with time. At any time $t$, the probability that the system is in state $n$ is given by $|a_n(t)|^2$. Substitution of equation (17.6) into the Schrödinger equation (17.3) leads to the following

$$\begin{aligned}
\hat{H}\Psi &= a_1\hat{H}^{(0)}\Psi_1 + a_1\hat{H}^{(1)}(t)\Psi_1 + a_2\hat{H}^{(0)}\Psi_2 + a_2\hat{H}^{(1)}(t)\Psi_2 \\
&= i\hbar\frac{\partial}{\partial t}\left(a_1\Psi_1 + a_2\Psi_2\right) \\
&= i\hbar a_1\frac{\partial\Psi_1}{\partial t} + i\hbar\Psi_1\frac{\partial a_1}{\partial t} + i\hbar a_2\frac{\partial\Psi_2}{\partial t} + i\hbar\Psi_2\frac{\partial a_2}{\partial t}
\end{aligned} \tag{17.7}$$

Now each of the basis functions satisfies the time-dependent Schrödinger equation, viz.

$$\hat{H}^{(0)}\Psi_n = i\hbar\frac{\partial\Psi_n}{\partial t} \tag{17.8}$$

so the last equation in (17.7) simplifies to

$$a_1\hat{H}^{(1)}(t)\Psi_1 + a_2\hat{H}^{(1)}(t)\Psi_2 = i\hbar\dot{a}_1\Psi_1 + i\hbar\dot{a}_2\Psi_2 \tag{17.9}$$

where the dots over the coefficients signify their time derivatives. Now we expand the last equation by explicitly writing the time dependence of the wavefunctions

$$a_1\hat{H}^{(1)}(t)|1\rangle e^{-iE_1t/\hbar} + a_2\hat{H}^{(1)}(t)|2\rangle e^{-iE_2t/\hbar} = i\hbar\dot{a}_1|1\rangle e^{-iE_1t/\hbar} + i\hbar\dot{a}_2|2\rangle e^{-iE_2t/\hbar} \tag{17.10}$$

Multiplying from the left by the bra $\langle 1|$ and using the orthonormality relationship we find

$$a_1H_{11}^{(1)}(t)e^{-iE_1t/\hbar} + a_2H_{12}^{(1)}(t)e^{-iE_2t/\hbar} = i\hbar\dot{a}_1e^{-iE_1t/\hbar} \tag{17.11}$$

where the matrix elements are defined in the usual way. Now we write $\hbar\omega_{21} = E_2 - E_1$, whereupon (17.11) becomes

$$a_1H_{11}^{(1)}(t) + a_2H_{12}^{(1)}(t)e^{-i\omega_{21}t} = i\hbar\dot{a}_1 \tag{17.12}$$

It is not unusual to find that the perturbation has no diagonal elements, so we put

$$H_{11}^{(1)}(t) = H_{22}^{(1)}(t) = 0$$

and then equation (17.12) reduces to

$$a_2H_{12}^{(1)}(t)e^{-i\omega_{21}t} = i\hbar\dot{a}_1 \tag{17.13}$$

or, rearranging,

$$\dot{a}_1 = \frac{1}{i\hbar}a_2H_{12}^{(1)}(t)e^{-i\omega_{21}t} \tag{17.14}$$

This provides us with a first order differential equation for one of the coefficients, but it contains the other one. We proceed exactly as above to obtain an equation for the other coefficient

$$\dot{a}_2 = \frac{1}{i\hbar}a_1H_{21}^{(1)}(t)e^{i\omega_{21}t} \tag{17.15}$$

In the absence of any perturbation, the two matrix elements are both equal to zero and the time derivatives of the two coefficients also become zero.
In that case the coefficients retain their initial values and, even though $\Psi$ oscillates with time, the system remains frozen in its initially prepared state.

Now suppose that a constant perturbation, $V$, is applied at some starting time and continued until some later time. To allow this let us write $H_{12}^{(1)}(t) = \hbar V$ and $H_{21}^{(1)}(t) = \hbar V^*$, where we have invoked the Hermiticity of the hamiltonian. Then

$$\dot{a}_1 = -iVa_2e^{-i\omega_{21}t}, \qquad \dot{a}_2 = -iV^*a_1e^{i\omega_{21}t} \tag{17.16}$$

This is a pair of coupled differential equations, which can be solved by one of several methods. One solution is

$$a_2(t) = \left(Ae^{i\Omega t} + Be^{-i\Omega t}\right)e^{i\omega_{21}t/2} \tag{17.17}$$

where $A$ and $B$ are constants that are determined by the initial conditions and $\Omega$ is given by

$$\Omega = \tfrac{1}{2}\left(\omega_{21}^2 + 4|V|^2\right)^{1/2} \tag{17.18}$$

A similar expression holds for $a_1$.

If, prior to the perturbation being switched on, the system is definitely in state 1, then $a_1(0) = 1$ and $a_2(0) = 0$. These initial conditions allow us to find $A$ and $B$, and eventually we can arrive at two particular solutions, one for $a_1(t)$ and one for $a_2(t)$. These are

$$a_1(t) = \left(\cos\Omega t + \frac{i\omega_{21}}{2\Omega}\sin\Omega t\right)e^{-i\omega_{21}t/2} \tag{17.19}$$

and

$$a_2(t) = -\frac{iV^*}{\Omega}\sin\Omega t\; e^{i\omega_{21}t/2} \tag{17.20}$$

The probability of finding the system in state 2 (initially equal to zero) after turning on the perturbation is given by

$$P_2(t) = |a_2(t)|^2 \tag{17.21}$$

and by substitution of equation (17.20) we are led to the Rabi formula

$$P_2(t) = \frac{4|V|^2}{\omega_{21}^2 + 4|V|^2}\sin^2\left[\tfrac{1}{2}\left(\omega_{21}^2 + 4|V|^2\right)^{1/2}t\right] \tag{17.22}$$

Now suppose the pair of states is degenerate; then $\omega_{21} = 0$ and

$$P_2(t) = \sin^2|V|t \tag{17.23}$$

This function has the form shown in Figure 17.1.

Figure 17.1: Three plots of the function in equation (17.23), with V = 1 (g), 2 (f), and 3 (k), for t from 0 to 10.

The system oscillates between the two states, spending as much time in $|1\rangle$ as in $|2\rangle$. We also see that the frequency of the oscillation depends on the strength of the perturbation, $V$, so that strong perturbations drive the system between the two states more rapidly than do weak perturbations.
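The Rabi formula (17.22) can be checked against a direct numerical integration of the coupled equations (17.16). The following is a minimal sketch, not part of the original module: the function names `rabi_probability` and `integrate_coupled` are illustrative, a fixed-step fourth-order Runge-Kutta scheme is an assumed choice of integrator, and $V$ and $\omega_{21}$ are taken as angular frequencies in arbitrary but consistent units.

```python
import cmath
import math


def rabi_probability(v_abs, omega21, t):
    """Equation (17.22): probability of finding the system in state 2 at time t.

    v_abs is |V| and omega21 the level separation, both as angular frequencies.
    """
    big_omega = 0.5 * math.sqrt(omega21 ** 2 + 4.0 * v_abs ** 2)  # equation (17.18)
    if big_omega == 0.0:
        return 0.0  # no perturbation and no splitting: the system stays in state 1
    prefactor = 4.0 * v_abs ** 2 / (omega21 ** 2 + 4.0 * v_abs ** 2)
    return prefactor * math.sin(big_omega * t) ** 2


def integrate_coupled(v, omega21, t_end, steps=20000):
    """Integrate equations (17.16) with fixed-step RK4 (an assumed integrator).

    Starts from a1(0) = 1, a2(0) = 0 and returns (a1, a2) at t_end.
    """
    v = complex(v)

    def rhs(t, a1, a2):
        da1 = -1j * v * a2 * cmath.exp(-1j * omega21 * t)
        da2 = -1j * v.conjugate() * a1 * cmath.exp(1j * omega21 * t)
        return da1, da2

    h = t_end / steps
    t, a1, a2 = 0.0, 1.0 + 0.0j, 0.0 + 0.0j
    for _ in range(steps):
        k1 = rhs(t, a1, a2)
        k2 = rhs(t + h / 2, a1 + h / 2 * k1[0], a2 + h / 2 * k1[1])
        k3 = rhs(t + h / 2, a1 + h / 2 * k2[0], a2 + h / 2 * k2[1])
        k4 = rhs(t + h, a1 + h * k3[0], a2 + h * k3[1])
        a1 += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        a2 += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += h
    return a1, a2


if __name__ == "__main__":
    v, omega21, t_end = 0.5, 1.0, 3.0  # illustrative values only
    a1, a2 = integrate_coupled(v, omega21, t_end)
    print("numerical |a2|^2:", abs(a2) ** 2)
    print("Rabi formula    :", rabi_probability(abs(v), omega21, t_end))
```

Setting `omega21 = 0` in `rabi_probability` reproduces the degenerate case (17.23), and the sum $|a_1|^2 + |a_2|^2$ returned by the integrator should stay close to 1, which is a convenient sanity check on the step size.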
Degenerate systems of this kind are described as ‘loose’, because even weak perturbations can drive them completely from one extreme to the other. The opposite situation is different, i.e. where the states are far apart in energy in comparison to the absolute value of $V$, so that $\omega_{21}^2 \gg 4|V|^2$. Then

$$P_2(t) \approx \left(\frac{2|V|}{\omega_{21}}\right)^2\sin^2\tfrac{1}{2}\omega_{21}t \tag{17.24}$$

Again we find oscillation, but now the probability of finding state 2 is never higher than $4|V|^2/\omega_{21}^2$, which is much less than unity. Here the probability of the perturbation driving the system into state 2 is very small. Furthermore, notice that the oscillation frequency is governed by the energy separation and is independent of the perturbation strength. The only role of the perturbation is to govern the fraction of the system in state 2. The probability is higher at larger perturbations.

Many level systems

For the many-level system we need to expand the wavefunction as a linear combination of all the states $\psi_n$ defined by equation (17.4). This leads to very complicated equations when combined with perturbations that are more complex than the time-independent one used above. To approach this we use the approximation technique called “variation of constants”, invented by Dirac. The problem we are faced with is to find out how the linear combination varies with time under the influence of realistic perturbations. We base the approximation on the condition that the perturbation is weak and applied for such a short time that all the coefficients remain close to their initial values. Eventually it can be shown that

$$a_f(t) = \frac{1}{i\hbar}\int_0^t H_{fi}^{(1)}(t')\,e^{i\omega_{fi}t'}\,dt' \tag{17.25}$$

where $a_f$ is the coefficient for the final state that was initially unoccupied. This approximation ignores the possibility that the route between initial and final states is via other states, i.e. it accounts only for the direct route. It is a first order theory because the perturbation is applied once and only once.
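As an illustration of equation (17.25), the integral can be evaluated numerically for any given $H_{fi}^{(1)}(t)$. The sketch below is an assumption-laden example rather than part of the module: the names `first_order_coefficient` and `weak_limit_probability` are hypothetical, the trapezoidal rule is an arbitrary choice of quadrature, and the perturbation is passed as $H_{fi}^{(1)}(t)/\hbar$ so that all quantities are angular frequencies. For a constant perturbation $H_{fi}^{(1)} = \hbar V$ the integral can also be done by hand, and $|a_f|^2$ then coincides with the weak-perturbation result (17.24).

```python
import cmath
import math


def first_order_coefficient(h_fi_over_hbar, omega_fi, t_end, steps=20000):
    """Evaluate equation (17.25) with the composite trapezoidal rule.

    h_fi_over_hbar: function of time returning H_fi(t)/hbar (an angular frequency).
    """
    h = t_end / steps
    total = 0.0 + 0.0j
    for k in range(steps + 1):
        t = k * h
        weight = 0.5 if k in (0, steps) else 1.0  # end points count half
        total += weight * h_fi_over_hbar(t) * cmath.exp(1j * omega_fi * t)
    return total * h / 1j  # the 1/(i*hbar) prefactor, with hbar absorbed above


def weak_limit_probability(v_abs, omega21, t):
    """Equation (17.24): two-level result for widely separated states."""
    return (2.0 * v_abs / omega21) ** 2 * math.sin(0.5 * omega21 * t) ** 2


if __name__ == "__main__":
    v, omega_fi, t_end = 0.01, 2.0, 1.3  # illustrative values only
    a_f = first_order_coefficient(lambda t: v, omega_fi, t_end)
    print("first-order |a_f|^2:", abs(a_f) ** 2)
    print("equation (17.24)   :", weak_limit_probability(v, omega_fi, t_end))
```

Passing a different callable, for example `lambda t: 2 * v * math.cos(w * t)` for some drive frequency `w`, gives the oscillating case without changing the integrator.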
Now we can use the expression in (17.25) to see how a system will behave when exposed to an oscillatory perturbation, such as light. First we consider transitions between a pair of discrete states $|i\rangle$ and $|f\rangle$. Then we shall embed the final state into a continuum of states. Suppose our perturbation oscillates with a frequency $\omega = 2\pi\nu$ and it is turned on at $t = 0$. Then its hamiltonian has the form

$$\hat{H}^{(1)}(t) = 2H^{(1)}\cos\omega t = H^{(1)}\left(e^{i\omega t} + e^{-i\omega t}\right) \tag{17.26}$$

(Now you see the reason for the factor of 2 in the expansion.) Putting this expression into equation (17.25) yields

$$a_f(t) = \frac{H_{fi}^{(1)}}{i\hbar}\int_0^t\left(e^{i\omega t'} + e^{-i\omega t'}\right)e^{i\omega_{fi}t'}\,dt' = \frac{H_{fi}^{(1)}}{i\hbar}\left\{\frac{e^{i(\omega_{fi}+\omega)t} - 1}{i(\omega_{fi}+\omega)} + \frac{e^{i(\omega_{fi}-\omega)t} - 1}{i(\omega_{fi}-\omega)}\right\} \tag{17.27}$$

where $\hbar\omega_{fi} = E_f - E_i$. This is an obscure result but simplification is very straightforward. In electronic spectroscopy and photoexcitation the frequencies $\omega$ and $\omega_{fi}$ involved are very high, of the order of $10^{15}\ \mathrm{s^{-1}}$. Thus the first term in the braces is exceedingly small, whereas the second term, in which the denominator can approach zero, can be very large. It is therefore possible to ignore the first term. Then the probability of finding the system in state $f$ after a time $t$, when it was initially completely in state $i$, becomes

$$P_f(t) = |a_f(t)|^2 = \frac{4\left|H_{fi}^{(1)}\right|^2}{\hbar^2(\omega_{fi}-\omega)^2}\sin^2\tfrac{1}{2}(\omega_{fi}-\omega)t \tag{17.28}$$

Using the relationship employed earlier, $H_{12}^{(1)}(t) = \hbar V$, here in the form $H_{fi}^{(1)} = \hbar V_{fi}$, we rewrite equation (17.28) as

$$P_f(t) = \frac{4|V_{fi}|^2}{(\omega_{fi}-\omega)^2}\sin^2\tfrac{1}{2}(\omega_{fi}-\omega)t \tag{17.29}$$

This expression looks very much like equation (17.24), which was developed for a static perturbation on the two-level system. The difference is that $\omega_{fi}$ is here replaced by $(\omega_{fi} - \omega)$, which is termed the frequency offset. Equation (17.29) informs us that not only is the amplitude of the transition probability dependent on the frequency offset, so is its time dependence. The amplitude increases and the oscillation period lengthens as $\omega$ approaches $\omega_{fi}$, and when the difference becomes zero the transition probability is at a maximum; at this point the radiation field and the system are in resonance.
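How little is lost by dropping the first term in the braces of (17.27) can be gauged numerically. The sketch below is illustrative only: the function names are hypothetical, $H_{fi}^{(1)}/\hbar$ is written as `v`, and the frequencies are scaled-down stand-ins (of order $10^3$ in arbitrary units rather than $10^{15}\ \mathrm{s^{-1}}$) chosen so that $\omega_{fi} + \omega$ is much larger than the offset $\omega_{fi} - \omega$.

```python
import cmath
import math


def amplitude_full(v, omega_fi, omega, t):
    """Equation (17.27) with H_fi/hbar written as v: both terms in the braces.

    Requires omega != omega_fi, otherwise the second denominator vanishes.
    """
    plus = (cmath.exp(1j * (omega_fi + omega) * t) - 1.0) / (1j * (omega_fi + omega))
    minus = (cmath.exp(1j * (omega_fi - omega) * t) - 1.0) / (1j * (omega_fi - omega))
    return (v / 1j) * (plus + minus)


def probability_resonant(v_abs, omega_fi, omega, t):
    """Equation (17.29): only the near-resonant term is kept."""
    offset = omega_fi - omega
    return 4.0 * v_abs ** 2 / offset ** 2 * math.sin(0.5 * offset * t) ** 2


if __name__ == "__main__":
    v, omega_fi, omega, t = 0.01, 1000.0, 995.0, 0.3  # illustrative values only
    p_full = abs(amplitude_full(v, omega_fi, omega, t)) ** 2
    p_res = probability_resonant(v, omega_fi, omega, t)
    print("both terms     :", p_full)
    print("resonant only  :", p_res)
    print("relative change:", abs(p_full - p_res) / p_res)
```

The comparison is only meaningful away from the zeros of $\sin\tfrac{1}{2}(\omega_{fi}-\omega)t$, where the resonant term itself vanishes and the small neglected term dominates what is left.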
Evaluating the limit of equation (17.29) as the two frequencies approach each other we find that

$$\lim_{\omega\to\omega_{fi}}P_f(t) = |V_{fi}|^2t^2 \tag{17.30}$$

and the probability of the transition occurring as a result of the perturbation increases quadratically with time. The shape of equation (17.27) and the time dependence of the amplitude for four times are shown in Figure 17.2.

Figure 17.2: Plots of equation (17.27) at four different times (1, 2, 3, and 4) showing the quadratic dependence of the amplitude on time. The vertical scale is arbitrary.

Transitions to states in a continuum

In the previous section the transition was between a pair of discrete states; now we consider the case where the final state is part of a continuum of states, close to each other in energy. We can still use equation (17.29) to estimate the probability of promoting to one member of the continuum, but now we need to integrate over all the transition probabilities that the perturbation can induce in the system. We need to define a density of states, $\rho(E)$, which relates to the number of states accessible as a result of the perturbation; then $\rho(E)\,dE$ is the number of final states in the range $E$ to $E + dE$ that are able to be reached. Then the total transition probability is given by

$$P(t) = \int_{\text{range}}P_f(t)\,\rho(E)\,dE \tag{17.31}$$

Now we use equation (17.29) and the relation $\omega_{fi} = E/\hbar$ to arrive at the following

$$P(t) = \int_{\text{range}}4|V_{fi}|^2\,\frac{\sin^2\tfrac{1}{2}(E/\hbar - \omega)t}{(E/\hbar - \omega)^2}\,\rho(E)\,dE \tag{17.32}$$

Now we set about simplifying this expression. First, we recognize that the quotient in the integrand, which is equivalent to $\sin^2x/x^2$, is very sharply peaked when $E/\hbar \approx \omega$, the radiation frequency. This means that we can sensibly restrict ourselves to considering only those states that have significant transition probabilities at $\omega_{fi} \approx \omega$. Because of this we can evaluate the density of states in the neighborhood of $E_{fi} = \hbar\omega_{fi}$ and treat it as a constant.
The other effect of this is that, although the matrix elements $V_{fi}$ depend on $E$, only a narrow range of energies contributes to the integral, so we can also treat $V_{fi}$ as a constant. Under these considerations equation (17.32) simplifies to

$$P(t) = 4|V_{fi}|^2\rho(E_{fi})\int_{\text{range}}\frac{\sin^2\tfrac{1}{2}(E/\hbar - \omega)t}{(E/\hbar - \omega)^2}\,dE \tag{17.33}$$

To add one more approximation we convert the integral into a standard form by extending the limits to infinity. Because of the shape of the function, the integrand has virtually no area outside the actual range, so this approximation introduces very little error. Now we simplify again by setting $x = \tfrac{1}{2}(E/\hbar - \omega)t$, which implies that $dE = (2\hbar/t)\,dx$, and the probability expression becomes

$$P(t) = \frac{2\hbar}{t}\,|V_{fi}|^2\rho(E_{fi})\,t^2\int_{-\infty}^{\infty}\frac{\sin^2x}{x^2}\,dx \tag{17.34}$$

Using the standard form

$$\int_{-\infty}^{\infty}\frac{\sin^2x}{x^2}\,dx = \pi$$

the required probability becomes

$$P(t) = 2\pi\hbar\,t\,|V_{fi}|^2\rho(E_{fi}) \tag{17.35}$$

If we now define the transition rate ($k_{fi}$) as the rate of change of the probability that the system arrives at an initially empty state, we find that

$$k_{fi} = 2\pi\hbar\,|V_{fi}|^2\rho(E_{fi}) \tag{17.36}$$

This expression is known as the Fermi Golden Rule and it tells us that we can calculate the rate of a transition if we know the square modulus of the transition matrix element between the two states and the density of final states at the frequency of the transition. It is a very useful expression and we shall find many examples of its use in the coming weeks.
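The step from (17.33) to (17.35) can be illustrated by integrating (17.33) numerically over a wide but finite energy window and comparing with the linear-in-time result. The following is a sketch under stated assumptions: the function names are hypothetical, the midpoint rule is an arbitrary choice, units with $\hbar = 1$ are assumed by default, and $V_{fi}$ and $\rho$ are held constant across the window as in the derivation above.

```python
import math


def continuum_probability(v_abs, rho, omega, t, half_width, n=200000, hbar=1.0):
    """Midpoint-rule estimate of equation (17.33).

    Integrates over E in [hbar*omega - half_width, hbar*omega + half_width]
    with constant |V_fi| = v_abs and constant density of states rho.
    """
    h = 2.0 * half_width / n
    total = 0.0
    for k in range(n):
        energy = hbar * omega - half_width + (k + 0.5) * h
        offset = energy / hbar - omega
        if offset == 0.0:
            total += 0.25 * t ** 2  # limit of sin^2(offset*t/2) / offset^2
        else:
            total += math.sin(0.5 * offset * t) ** 2 / offset ** 2
    return 4.0 * v_abs ** 2 * rho * total * h


def golden_rule_rate(v_abs, rho, hbar=1.0):
    """Equation (17.36): k_fi = 2*pi*hbar*|V_fi|^2*rho(E_fi)."""
    return 2.0 * math.pi * hbar * v_abs ** 2 * rho


if __name__ == "__main__":
    v, rho, omega, half_width = 0.01, 2.0, 300.0, 200.0  # illustrative values only
    for t in (5.0, 10.0, 20.0):
        p_num = continuum_probability(v, rho, omega, t, half_width)
        p_rule = golden_rule_rate(v, rho) * t
        print(f"t = {t:5.1f}  numerical P = {p_num:.6e}  golden rule P = {p_rule:.6e}")
```

The agreement improves as the product of `half_width` and `t` grows, because the tails of $\sin^2x/x^2$ that fall outside the window carry less and less of the total area.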