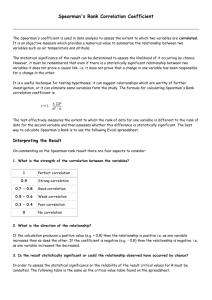

SPEARMAN'S COEFFICIENT OF RANK CORRELATION


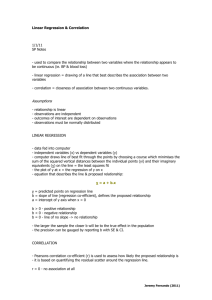

CORRELATION

Bivariate data consist of pairs of values of two variables, recorded for each member of a group, where the two variables may in some way be related, e.g. the Maths (x) and Physics (y) scores of 10 students. The values (x, y) for each member can be plotted as a set of points $(x_1, y_1), (x_2, y_2), \dots$ on a scatter diagram. If all the points lie near a straight line, there is said to be LINEAR CORRELATION between the two variables.

- Positive linear correlation (e.g. P1 marks vs P2 marks)
- Negative linear correlation (e.g. price of a car vs mileage)
- No correlation (e.g. height vs P1 marks)

Example 1

This table gives the marks scored by 15 contestants in part A and part B of a quiz.

| Part A | 15 | 35 | 34 | 23 | 35 | 27 | 36 | 34 | 23 | 24 | 30 | 40 | 25 | 35 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Part B | 20 | 37 | 35 | 25 | 33 | 30 | 39 | 36 | 27 | 20 | 33 | 35 | 27 | 32 | 28 |

Draw a scatter graph of the scores. Describe the relationship between the scores in part A and part B.

Now do EXERCISE 9A (Chapter 9, page 142).

PRODUCT MOMENT CORRELATION COEFFICIENT: A MEASURE OF CORRELATION

The product moment correlation coefficient (PMCC), r, measures how close the points on a scatter diagram are to a straight line. It is given by

$$r = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum (x_i - \bar{x})^2 \sum (y_i - \bar{y})^2}} = \frac{S_{xy}}{\sqrt{S_{xx} S_{yy}}}$$

This is also in the formula booklet!

The value of r always satisfies $-1 \le r \le 1$. Where there is little correlation, r is close to zero.

Useful notation: finding r involves three different sums. It is best to find them separately and then use the formula. For n pairs of values $(x_i, y_i)$:

$$S_{xx} = \sum (x_i - \bar{x})^2 = \sum x_i^2 - \frac{\left(\sum x_i\right)^2}{n}$$

$$S_{yy} = \sum (y_i - \bar{y})^2 = \sum y_i^2 - \frac{\left(\sum y_i\right)^2}{n}$$

$$S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y}) = \sum x_i y_i - \frac{\left(\sum x_i\right)\left(\sum y_i\right)}{n}$$

Example 1 (continued)

For the quiz data, find the PMCC and hence determine the strength of the correlation.
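The three sums and the formula for r translate directly into a few lines of code. The following is a minimal Python sketch (the function names `summary_sums` and `pmcc` are our own, not from the text or any library). It uses the shortcut forms of $S_{xx}$, $S_{yy}$ and $S_{xy}$, so no rounded means are needed, and it can be used to check the hand calculation for the quiz data.

```python
from math import sqrt


def summary_sums(xs, ys):
    """Return (Sxx, Syy, Sxy) using the shortcut formulas."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs) - sum_x ** 2 / n
    syy = sum(y * y for y in ys) - sum_y ** 2 / n
    sxy = sum(x * y for x, y in zip(xs, ys)) - sum_x * sum_y / n
    return sxx, syy, sxy


def pmcc(xs, ys):
    """Product moment correlation coefficient r = Sxy / sqrt(Sxx * Syy)."""
    sxx, syy, sxy = summary_sums(xs, ys)
    return sxy / sqrt(sxx * syy)


# Quiz data: marks in part A (x) and part B (y) for the 15 contestants
a_scores = [15, 35, 34, 23, 35, 27, 36, 34, 23, 24, 30, 40, 25, 35, 20]
b_scores = [20, 37, 35, 25, 33, 30, 39, 36, 27, 20, 33, 35, 27, 32, 28]

sxx, syy, sxy = summary_sums(a_scores, b_scores)
print(round(sxx, 2), round(syy, 2), round(sxy, 2))  # 722.93 481.73 515.53
print(round(pmcc(a_scores, b_scores), 3))           # 0.874
```

Because it works with exact sums, the sketch gives r ≈ 0.874; a hand calculation that rounds the means to whole numbers gives a slightly different value, but the conclusion about the strength of the correlation is the same.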
First we need the means:

$$\bar{x} = \frac{436}{15} \approx 29, \qquad \bar{y} = \frac{457}{15} \approx 30$$

So we can complete the data table like this (using the rounded means):

| A (x) | B (y) | $x - \bar{x}$ | $y - \bar{y}$ | $(x - \bar{x})(y - \bar{y})$ |
|---|---|---|---|---|
| 15 | 20 | -14 | -10 | 140 |
| 35 | 37 | 6 | 7 | 42 |
| 34 | 35 | 5 | 5 | 25 |
| 23 | 25 | -6 | -5 | 30 |
| 35 | 33 | 6 | 3 | 18 |
| 27 | 30 | -2 | 0 | 0 |
| 36 | 39 | 7 | 9 | 63 |
| 34 | 36 | 5 | 6 | 30 |
| 23 | 27 | -6 | -3 | 18 |
| 24 | 20 | -5 | -10 | 50 |
| 30 | 33 | 1 | 3 | 3 |
| 40 | 35 | 11 | 5 | 55 |
| 25 | 27 | -4 | -3 | 12 |
| 35 | 32 | 6 | 2 | 12 |
| 20 | 28 | -9 | -2 | 18 |
| Σx = 436 | Σy = 457 | | | 516 |

$$S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y}) = 516$$

$$S_{xx} = \sum (x_i - \bar{x})^2 = 723, \qquad S_{yy} = \sum (y_i - \bar{y})^2 = 485$$

(remember that the squares are summed). Therefore

$$r = \frac{S_{xy}}{\sqrt{S_{xx} S_{yy}}} = \frac{516}{\sqrt{723 \times 485}} = 0.871 \text{ (3 s.f.)}$$

The value r = 0.871 indicates fairly strong positive linear correlation between the two variables: the points lie fairly close to a line with positive gradient. (Because the means were rounded to whole numbers, these sums are approximate; the shortcut formulas give $S_{xx} = 722.9$, $S_{yy} = 481.7$, $S_{xy} = 515.5$ and r = 0.874 (3 s.f.), which leads to the same conclusion.)

Now do EXERCISES 9B & 9C (Chapter 9, page 147).

SPEARMAN'S COEFFICIENT OF RANK CORRELATION ($r_s$)

Instead of using the values of the variables x and y, we rank them in order of size, using the numbers 1, 2, 3, …, n. A correlation coefficient can then be calculated from these ranks. For each pair of values we calculate

$$d = \text{rank of } x - \text{rank of } y$$

Then Spearman's coefficient of rank correlation is given by

$$r_s = 1 - \frac{6 \sum d^2}{n(n^2 - 1)}$$

Note: this coefficient is particularly useful when the exact data values are not known but have already been ranked (e.g. positions in a class or competition).

Example 1

These are the marks obtained by 8 pupils in Maths and Physics. Calculate Spearman's coefficient of rank correlation.
| Maths (x) | Physics (y) | Rank x | Rank y | d | d² |
|---|---|---|---|---|---|
| 67 | 70 | 4 | 5 | -1 | 1 |
| 42 | 59 | 2 | 3 | -1 | 1 |
| 85 | 71 | 7 | 6 | 1 | 1 |
| 51 | 38 | 3 | 1 | 2 | 4 |
| 39 | 55 | 1 | 2 | -1 | 1 |
| 97 | 62 | 8 | 4 | 4 | 16 |
| 81 | 80 | 6 | 8 | -2 | 4 |
| 70 | 76 | 5 | 7 | -2 | 4 |

Method of ranking: here rank 1 is given to the lowest mark in each subject. (Ranking both subjects from the highest mark instead only changes the sign of each d, so $\sum d^2$ and $r_s$ are unchanged.)

Now $\sum d^2 = 32$ and n = 8, so

$$r_s = 1 - \frac{6(32)}{8(63)} = 1 - \frac{192}{504} = 0.6190\ldots$$

Spearman's coefficient of rank correlation is 0.62 (2 d.p.).

Example 2 (for students)

The marks of 10 pupils in French and German tests are as follows:

| French (x) | 12 | 8 | 16 | 11 | 7 | 10 | 13 | 17 | 12 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| German (y) | 6 | 5 | 7 | 7 | 4 | 9 | 8 | 13 | 10 | 11 |

Calculate Spearman's coefficient of rank correlation.

Tied values (the two French marks of 12 and the two German marks of 7) are each given the mean of the ranks they would otherwise occupy. Then $\sum d^2 = 84$ and n = 10, so

$$r_s = 1 - \frac{6(84)}{10(99)} = 0.49 \text{ (2 d.p.)}$$

Spearman's coefficient of rank correlation is 0.49, indicating some positive correlation between the marks in the two tests.

Now do EXERCISE 9D (Chapter 9, page 160).

Now do MISCELLANEOUS Exercise 9 (Chapter 9, pages 162 to 165).

REGRESSION

If the points show correlation, we can calculate a REGRESSION LINE (previously called a line of best fit).

Drawing and calculating REGRESSION LINES

(a) If there is very little scatter:

- Calculate the mean of the x values, $\bar{x}$.
- Calculate the mean of the y values, $\bar{y}$.
- Draw a line of best fit through $(\bar{x}, \bar{y})$.

An equation in the form y = mx + c can then be found using the gradient and intercept.

(b) If there is a fair degree of scatter, we draw one of two LEAST SQUARES REGRESSION LINES.

(i) Least squares regression line of y on x (used to estimate y for given values of x)

This line is found by minimising the sum of the squares of the VERTICAL distances (called residuals) from each point to the line. In other words, for each GIVEN value of x, the observed value of y (the dependent variable) should be as small a vertical distance as possible from the line. Hence "y on x".

The equation of the line for which the sum of the squared residuals is a minimum is y = a + bx, where

$$b = \frac{S_{xy}}{S_{xx}} \quad \text{(the regression coefficient of y on x)}$$

and

$$a = \bar{y} - b\bar{x} \quad \text{(the intercept bit!)}$$
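The formulas for b and a can be sketched in a few lines of Python. This is a minimal illustration (the function name `regression_y_on_x` is our own, not from the text): it computes $S_{xx}$ and $S_{xy}$ with the shortcut formulas and then b and a. The data are those of the regression Example 1 that follows.

```python
def regression_y_on_x(xs, ys):
    """Return (a, b) for the least squares line y = a + b*x."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    # Shortcut forms of Sxx and Sxy (no rounded means needed)
    sxx = sum(x * x for x in xs) - sum_x ** 2 / n
    sxy = sum(x * y for x, y in zip(xs, ys)) - sum_x * sum_y / n
    b = sxy / sxx                  # regression coefficient of y on x
    a = sum_y / n - b * sum_x / n  # a = y-bar - b * x-bar
    return a, b


xs = [1, 2, 4, 6, 7, 8, 10]
ys = [10, 14, 12, 13, 15, 12, 13]
a, b = regression_y_on_x(xs, ys)
print(f"y = {b:.3f}x + {a:.1f}")  # y = 0.186x + 11.7
```

Since a is defined as $\bar{y} - b\bar{x}$, the fitted line always passes through the point $(\bar{x}, \bar{y})$.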
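The equations of the regression lines are:

y on x:

$$y - \bar{y} = \frac{S_{xy}}{S_{xx}}(x - \bar{x})$$

So you need

$$\bar{y} = \frac{\sum y_i}{n}, \qquad \bar{x} = \frac{\sum x_i}{n}, \qquad S_{xx} = \sum x_i^2 - \frac{\left(\sum x_i\right)^2}{n}, \qquad S_{xy} = \sum x_i y_i - \frac{\left(\sum x_i\right)\left(\sum y_i\right)}{n}$$

x on y:

$$x - \bar{x} = \frac{S_{xy}}{S_{yy}}(y - \bar{y})$$

(same as above, but with $S_{yy}$ instead of $S_{xx}$)

Example 1

Calculate the equation of the regression line of y on x for the following set of results.

| x | 1 | 2 | 4 | 6 | 7 | 8 | 10 |
|---|---|---|---|---|---|---|---|
| y | 10 | 14 | 12 | 13 | 15 | 12 | 13 |

So n = 7, $\sum x = 38$, $\sum y = 89$, $\sum x^2 = 270$, $\sum y^2 = 1147$ and $\sum xy = 495$, giving

$$\bar{x} = \frac{38}{7} \approx 5.4, \qquad \bar{y} = \frac{89}{7} \approx 12.7$$

$$S_{xx} = 270 - \frac{38^2}{7} = 63.71\ldots, \qquad S_{xy} = 495 - \frac{38 \times 89}{7} = 11.85\ldots$$

$$y - 12.7 = \frac{11.85}{63.71}(x - 5.4)$$

$$y = 0.186x + 11.7$$

Now the regression line of x on y:

$$S_{yy} = 1147 - \frac{89^2}{7} = 15.428\ldots$$

All the other values can be used from above. Therefore

$$x - 5.4 = \frac{11.85}{15.43}(y - 12.7)$$

$$x = 0.769y - 4.35$$

Making predictions using a regression line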
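As a sketch of the x on y calculation without intermediate rounding (the function name `regression_x_on_y` is our own, not from the text), the code below uses the gradient $S_{xy}/S_{yy}$ and the intercept $\bar{x} - (S_{xy}/S_{yy})\bar{y}$, rearranged from $x - \bar{x} = \frac{S_{xy}}{S_{yy}}(y - \bar{y})$. With unrounded means the intercept comes out at about -4.34; the -4.35 in the example comes from using the rounded means 5.4 and 12.7. The last lines use the line to estimate x for a given value of y, since the x on y line is the one intended for estimating x from y; the value y = 13 is an arbitrary illustration.

```python
def regression_x_on_y(xs, ys):
    """Return (intercept, gradient) for the least squares line x = intercept + gradient*y."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    # Shortcut forms of Syy and Sxy
    syy = sum(y * y for y in ys) - sum_y ** 2 / n
    sxy = sum(x * y for x, y in zip(xs, ys)) - sum_x * sum_y / n
    gradient = sxy / syy
    # From x - x-bar = (Sxy/Syy)(y - y-bar)
    intercept = sum_x / n - gradient * sum_y / n
    return intercept, gradient


xs = [1, 2, 4, 6, 7, 8, 10]
ys = [10, 14, 12, 13, 15, 12, 13]
intercept, gradient = regression_x_on_y(xs, ys)
print(round(gradient, 3), round(intercept, 2))  # 0.769 -4.34

# Estimate x for a given y using the x on y line (y = 13 is an arbitrary example)
y_given = 13
print(round(intercept + gradient * y_given, 2))  # 5.65
```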