The method introduction of varying coefficient analysis with non

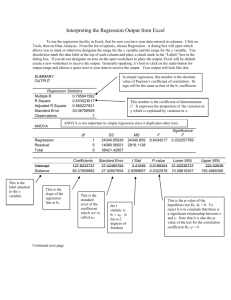

advertisement

Online Appendix

Introduction to the method of varying coefficient analysis with non-parametric estimation

The varying coefficient method was first developed by Hastie and Tibshirani (1993). It has been widely used in economics and biology, although it is not yet well known in ecological research. The varying coefficient method with non-parametric estimation does not pre-specify the functional forms of the variables involved, but uses information from the data alone to estimate a curve or function. Such a method is more flexible and robust in fitting data than a parametric method, because pre-assuming a parametric structure carries the risk of a misspecified model and therefore of wrong conclusions.

In a varying coefficient model, the factor that may cause the correlation between species' quantitative characteristics to vary is described by a variable Z. A regression model with functional coefficients can then be expressed as

$$Y = \alpha_0(Z) + \alpha_1(Z)\,X + \varepsilon, \tag{2}$$

where $\varepsilon$ is a random error with mean zero that is independent of Z, and $\alpha_0(Z)$ and $\alpha_1(Z)$ are the intercept and slope respectively, both of which are functions of Z. In model (2), if $\alpha_1(Z)$ does not depend on the third variable Z (i.e. the slope is constant), the model reduces to the classical linear regression model (1). If the effect of Z on the quantitative characteristics of the interacting species is not constant, the model is called a varying coefficient regression model (Hastie & Tibshirani 1993). Let $\rho_{XY|Z}$ denote the conditional correlation coefficient of X and Y given Z. Then it is easy to see that

$$\alpha_1(Z) = \rho_{XY|Z}\left(\frac{\sigma_{Y|Z}}{\sigma_{X|Z}}\right), \tag{3}$$

where $\sigma^2_{X|Z}$ and $\sigma^2_{Y|Z}$ are the conditional variances of X and Y given Z. If $\sigma_{Y|Z}/\sigma_{X|Z}$ does not depend significantly on Z, then $\rho_{XY|Z}$, as a function of Z, is equivalent to $\alpha_1(Z)$ up to a constant factor.
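For readers who want the intermediate step, relation (3) can be sketched as follows. The derivation assumes, in addition to what is stated for model (2), that the error $\varepsilon$ is uncorrelated with X given Z; the symbol $\sigma_{XY|Z}$ for the conditional covariance is the notation of equation (6).

```latex
\begin{aligned}
\operatorname{Cov}(X, Y \mid Z)
  &= \operatorname{Cov}\bigl(X,\ \alpha_0(Z) + \alpha_1(Z)X + \varepsilon \mid Z\bigr)
   = \alpha_1(Z)\,\sigma^2_{X|Z}, \\
\alpha_1(Z)
  &= \frac{\sigma_{XY|Z}}{\sigma^2_{X|Z}}
   = \frac{\sigma_{XY|Z}}{\sigma_{X|Z}\,\sigma_{Y|Z}} \cdot \frac{\sigma_{Y|Z}}{\sigma_{X|Z}}
   = \rho_{XY|Z}\,\frac{\sigma_{Y|Z}}{\sigma_{X|Z}} .
\end{aligned}
```

The last equality uses only the definition of the conditional correlation coefficient, so the slope and the correlation share the same sign for every value of Z.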

In model (2), the functional forms of $\alpha_0(Z)$ and $\alpha_1(Z)$ are not pre-specified (they are unknown), and a general non-parametric smoothing technique can be used to estimate them. The advantage of this non-parametric estimation is that no functional form needs to be pre-specified: the estimated curve or function is obtained from the information in the data alone. Such a model is more flexible and robust in fitting data than parametric methods (Epanechnikov 1969), because pre-assuming a parametric structure carries the risk of a misspecified model and wrong conclusions.

Let $\alpha(Z) = (\alpha_0(Z), \alpha_1(Z))'$ and $U = (1, X)'$, where the prime denotes the transpose of a vector. When the matrix $E(UU' \mid Z)$ is invertible, a least-squares type estimator of $\alpha(Z)$ is uniquely defined by

$$\hat{\alpha}(Z) = \left[E(UU' \mid Z)\right]^{-1} E(UY \mid Z). \tag{4}$$
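Written out for the two-dimensional vector $U = (1, X)'$, equation (4) reduces to the familiar intercept and slope formulas evaluated conditionally on Z. The expansion below is a sketch; the shorthand $\mu_{X|Z} = E(X \mid Z)$ and $\mu_{Y|Z} = E(Y \mid Z)$ is introduced here only for brevity and is not notation from the original text.

```latex
\begin{aligned}
E(UU' \mid Z) &=
\begin{pmatrix} 1 & \mu_{X|Z} \\ \mu_{X|Z} & E(X^2 \mid Z) \end{pmatrix},
\qquad
E(UY \mid Z) =
\begin{pmatrix} \mu_{Y|Z} \\ E(XY \mid Z) \end{pmatrix}, \\[4pt]
\alpha_1(Z) &= \frac{E(XY \mid Z) - \mu_{X|Z}\,\mu_{Y|Z}}{E(X^2 \mid Z) - \mu_{X|Z}^2},
\qquad
\alpha_0(Z) = \mu_{Y|Z} - \alpha_1(Z)\,\mu_{X|Z} .
\end{aligned}
```

The denominator of $\alpha_1(Z)$ is the conditional variance of X, so the invertibility condition on $E(UU' \mid Z)$ amounts to requiring that X is not constant given Z.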

The conditional expectations above can be estimated by a non-parametric smoothing method such as kernel estimation (see Epanechnikov 1969). Let $\{(X_i, Y_i, Z_i),\ i = 1, 2, \ldots, n\}$ be a sample of observations. Then

$$\hat{E}(UU' \mid Z) = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,U_i U_i', \qquad \hat{E}(UY \mid Z) = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,U_i Y_i, \tag{5}$$

where $U_i = (1, X_i)'$, $w_{ni}(Z) = K_h(Z - Z_i)/f_h(Z)$, $f_h(Z) = n^{-1}\sum_{i=1}^{n} K_h(Z - Z_i)$ and $K_h(u) = h^{-1}K(u/h)$. The kernel function $K(u)$ is a continuous, bounded and symmetric real function that integrates to one (i.e. $\int K(u)\,du = 1$). The scalar $h$ is called the bandwidth. A variety of kernel functions are possible. Commonly used kernels include the Epanechnikov kernel (Epanechnikov 1969), $K(u) = 0.75\,(1 - u^2)\,I(|u| \le 1)$, and the quadratic kernel, $K(u) = \frac{15}{16}(1 - u^2)^2\,I(|u| \le 1)$ (see Haerdle 1994), where $I(|u| \le 1)$ is an indicator function taking the value 1 when $|u| \le 1$ and 0 otherwise. For these kernels the weight function $w_{ni}(Z)$ is zero outside the interval $|Z - Z_i| \le h$.

A large $h$ leads to large bias and small variance of the estimator, which is called over-smoothing, whereas a small $h$ leads to small bias and large variance, which is called under-smoothing. An appropriate bandwidth $h$ can be selected by leave-one-out cross-validation (Haerdle 1994). In applications one can also choose $h$ subjectively, to over-smooth or under-smooth the curve according to the purpose at hand (Haerdle 1994, Ch. 5).
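As a minimal sketch of estimator (4)-(5) combined with leave-one-out cross-validation, the Matlab script below scores a small grid of bandwidths on toy data. The toy data, the slope function, the bandwidth grid and all variable names (epan, hgrid, cv, hbest) are assumptions made for illustration and are not taken from the analysis in this appendix.

```matlab
% Sketch: leave-one-out cross-validation for the bandwidth h of the
% kernel-weighted estimator of alpha(z) = (alpha_0(z), alpha_1(z))'.
% Toy data and all names are illustrative assumptions.
rng(1);                                   % reproducible toy data
n = 200;
z = 8 * rand(n, 1);                       % z uniform on (0, 8)
x = randn(n, 1);
y = 1 + (0.5 * z - 2) .* x + 0.2 * randn(n, 1);

epan = @(u) 0.75 * (1 - u.^2) .* (abs(u) <= 1);   % Epanechnikov kernel

U = [ones(n, 1) x];                       % rows are U_i' = (1, x_i)
hgrid = [0.3 0.5 1 2 4];                  % candidate bandwidths
cv = zeros(size(hgrid));
for k = 1:numel(hgrid)
    h = hgrid(k);
    err = zeros(n, 1);
    for i = 1:n
        w = epan((z - z(i)) / h) / h;     % K_h(z(i) - z_j) for every j
        w(i) = 0;                         % leave observation i out
        % The factor 1/(n f_h) in equation (5) cancels between the two
        % sums, so unnormalised kernel weights are sufficient here.
        A = U' * (U .* [w w]);            % sum_j w_j U_j U_j'
        b = U' * (w .* y);                % sum_j w_j U_j y_j
        a = pinv(A) * b;                  % local estimate of alpha(z(i))
        err(i) = y(i) - [1 x(i)] * a;     % prediction error for the held-out point
    end
    cv(k) = mean(err.^2);                 % cross-validation score for this h
end
[~, kbest] = min(cv);
hbest = hgrid(kbest);                     % bandwidth with the smallest score
```

Plotting cv against hgrid shows the trade-off described above: the score tends to rise when h is so large that the slope is averaged over too wide a range of z.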

Although the fitted curves show how the regression coefficients vary with Z, the functional forms of the curves remain unknown. Whether $\alpha_1(Z)$ has a given functional form can be tested with a Nonparametric Monte Carlo Test (NMCT); readers are referred to Zhu (2005).

In the varying coefficient regression model, the correlation coefficient $\rho_{XY|Z}$ is well described by the regression coefficient $\alpha_1(Z)$ only when the ratio $\sigma_{Y|Z}/\sigma_{X|Z}$ is constant. When this condition is not satisfied, $\alpha_1(Z)$, as a function of Z, is not equivalent to $\rho_{XY|Z}$, and a non-parametric estimate of $\rho_{XY|Z}$ itself is needed. This can be seen from the definition

$$\rho_{XY|Z} = \frac{\operatorname{Cov}(X, Y \mid Z)}{\sqrt{\operatorname{Var}(X \mid Z)\,\operatorname{Var}(Y \mid Z)}} = \frac{\sigma_{XY|Z}}{\sigma_{X|Z}\,\sigma_{Y|Z}}. \tag{6}$$

The conditional covariance of X and Y, and the conditional variances of X and of Y given Z, can be computed by kernel estimation (Haerdle 1994). For example, using the same notation, we have

$$\hat{\sigma}_{XY|Z} = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,\bigl(X_i - \hat{E}(X \mid Z)\bigr)\bigl(Y_i - \hat{E}(Y \mid Z)\bigr),$$

$$\hat{\sigma}^2_{X|Z} = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,\bigl(X_i - \hat{E}(X \mid Z)\bigr)^2,$$

$$\hat{\sigma}^2_{Y|Z} = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,\bigl(Y_i - \hat{E}(Y \mid Z)\bigr)^2,$$

where $\hat{E}(X \mid Z) = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,X_i$ and $\hat{E}(Y \mid Z) = \frac{1}{n}\sum_{i=1}^{n} w_{ni}(Z)\,Y_i$. Even for such a complex structure we can, as in the linear model, still use the correlation coefficient $|\rho_{XY|Z}|$ to reveal the correlation between the variables, although it is now a function of another factor.
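The estimators above can be sketched in a few lines of Matlab for a handful of evaluation points. The toy data (in which the noise level, and hence the correlation, changes with z), the bandwidth, the evaluation grid and all variable names are assumptions for illustration only.

```matlab
% Sketch: kernel estimate of the conditional correlation rho_{XY|Z}(z0)
% at a few evaluation points z0. Toy data and names are illustrative.
rng(2);
n = 500;
z = 8 * rand(n, 1);                          % z uniform on (0, 8)
x = randn(n, 1);
y = x + (0.2 + 0.3 * z) .* randn(n, 1);      % noise grows with z, so the
                                             % correlation falls as z rises

quart = @(u) (15/16) * (1 - u.^2).^2 .* (abs(u) <= 1);   % quadratic kernel

h = 1;                                       % bandwidth (assumed)
z0grid = [1 4 7];                            % evaluation points (assumed)
rho = zeros(size(z0grid));
for k = 1:numel(z0grid)
    w = quart((z - z0grid(k)) / h);
    w = w / sum(w);                          % equals w_ni(z0)/n, sums to one
    mx = sum(w .* x);                        % estimate of E(X | Z = z0)
    my = sum(w .* y);                        % estimate of E(Y | Z = z0)
    sxy = sum(w .* (x - mx) .* (y - my));    % conditional covariance
    sx2 = sum(w .* (x - mx).^2);             % conditional variance of X
    sy2 = sum(w .* (y - my).^2);             % conditional variance of Y
    rho(k) = sxy / sqrt(sx2 * sy2);          % equation (6) with estimates plugged in
end
```

Because the weights are non-negative and sum to one, each estimate stays within [-1, 1] up to rounding error.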

Simulation Study

The non-parametric estimation for the varying coefficient model proposed in this paper has been programmed in Matlab. The estimation of the varying coefficients $\alpha_i(z)$ (i = 0, 1) is written as the Matlab function cvm.m (declared as vcm in the code listing at the end of this appendix), and the estimation of the correlation coefficient is contained in npcc.m. We now conduct a simulation study to examine the performance of the new method for varying coefficient analysis.

In the simulations we consider different forms of the function $\alpha_1(z)$ and set $\alpha_0(z)$ to a constant. We use in turn a constant, a linear and two other specified forms of $\alpha_1(z)$ to simulate data from the model $y = \alpha_0(z) + \alpha_1(z)\,x + \varepsilon$, where z is a random number from 0 to 8, x and $\varepsilon$ are normal random variables (their means and standard deviations are given in the captions of Figures 1 and 2), and the sample size is 300. For each $\alpha_1(z)$ we generate a dataset $\{(y_i, x_i, z_i): i = 1, \ldots, n\}$ and use the Matlab function cvm.m to calculate the estimates $\hat{\alpha}_1(z)$, with bandwidth h = 0.5 in all plots. The plots of y versus x and of $\hat{\alpha}_1(z)$ versus z (together with $\alpha_1(z)$ versus z) are reported in Figures 1 and 2. When $\alpha_1(z)$ is constant, y and x are linearly related, as shown in Figure 1(a), and the estimated $\hat{\alpha}_1(z)$ is also approximately constant, as shown in Figure 1(b). When $\alpha_1(z)$ is not constant but a linear or other specified function of z, the plots of y versus x in Figure 1(c), Figure 2(a) and Figure 2(c) show that y and x no longer follow a linear relationship. Our method nevertheless provides a good estimator $\hat{\alpha}_1(z)$ of $\alpha_1(z)$, which fits the given functions very well, as shown in Figure 1(d), Figure 2(b) and Figure 2(d).

The simulation results suggest that when the regression coefficients are functions of other factors, linear regression with constant coefficients is not appropriate, whereas the varying correlation coefficient analysis works well in identifying the correlation patterns behind the data.
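One simulation run of this kind can be sketched as follows. The script is an illustration rather than the code used for the figures: the linear slope function 0.5z - 2 is an assumed form, the remaining settings (n = 300, h = 0.5, x with mean 100 and standard deviation 12.5, error standard deviation 0.2) follow the caption of Figure 1(c), and the variable names are hypothetical. The squared weights mirror what the code listing at the end of this appendix effectively applies.

```matlab
% Sketch of one simulation run with a linear slope function.
% The form of alpha1 and all variable names are illustrative assumptions.
rng(3);
n = 300;
h = 0.5;                                   % bandwidth used in the plots
z = 8 * rand(n, 1);                        % z uniform on (0, 8)
x = 100 + 12.5 * randn(n, 1);              % x normal, mean 100, sd 12.5
alpha1 = @(t) 0.5 * t - 2;                 % assumed linear slope function
y = 300 + alpha1(z) .* x + 0.2 * randn(n, 1);

U = [ones(n, 1) x];                        % rows are U_i' = (1, x_i)
est = zeros(n, 2);                         % columns: alpha_0 and alpha_1 estimates
for j = 1:n
    % Quadratic-type kernel weight, zero when |z - z(j)| >= h
    w = max(1 - ((z - z(j)) / h).^2, 0).^2;
    A = U' * (U .* [w w]);                 % sum_i w_i U_i U_i'
    b = U' * (w .* y);                     % sum_i w_i U_i y_i
    est(j, :) = (pinv(A) * b)';            % local estimate at z(j)
end
err = est(:, 2) - alpha1(z);               % estimation error of the slope
% plot(z, est(:, 2), '.', z, alpha1(z), '-')   % uncomment to compare visually
```

Sorting by z before plotting the true curve gives a cleaner line; the dots should scatter around it, with somewhat larger deviations near z = 0 and z = 8 where fewer neighbours fall inside the window.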

[Figure 1. Four panels: (a) y versus x; (b) $\alpha_1(z)$ versus z; (c) y versus x; (d) $\alpha_1(z)$ versus z.]

Fig. 1: (a) Plot of y versus x for data simulated from the model $y = 2 + \alpha_1(z)\,x + \varepsilon$, where $\alpha_1(z) = 3$, n = 300, x is a normal random variable with mean 32 and standard deviation 4, z is a random number from 0 to 8, and $\varepsilon$ is a normal random error with zero mean and standard deviation 1. This model has a constant slope. (b) Plot of $\hat{\alpha}_1(z)$ versus z (dots) and $\alpha_1(z)$ versus z (line) for the data of (a); the estimated $\hat{\alpha}_1(z)$ is approximately constant. (c) Plot of y versus x for data simulated from the model $y = 300 + \alpha_1(z)\,x + \varepsilon$, where $\alpha_1(z) = 0.5z - 2$, n = 300, x is a normal random variable with mean 100 and standard deviation 12.5, z is a random number from 0 to 8, and $\varepsilon$ is a normal random error with zero mean and standard deviation 0.2. In this model $\alpha_1(z)$ is a linear function of z. (d) Plot of $\hat{\alpha}_1(z)$ versus z (dots) and $\alpha_1(z)$ versus z (line) for the data in (c); the estimated $\hat{\alpha}_1(z)$ approximates the true function $\alpha_1(z)$ very well.

[Figure 2. Four panels: (a) y versus x; (b) $\alpha_1(z)$ versus z; (c) y versus x; (d) $\alpha_1(z)$ versus z.]

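Fig. 2: (a) Plot of y versus x for data simulated from the model $y = 300 + \alpha_1(z)\,x + \varepsilon$, where $\alpha_1(z)$ is a nonlinear function of z built from the terms $1.5$, $z$ and $1.9$ over a denominator in $1$ and $z$ (the operators are illegible in the source), n = 300, x is a normal random variable with mean 100 and standard deviation 12.5, z is a random number from 0 to 8, and $\varepsilon$ is a normal random error with zero mean and standard deviation 0.2. (b) Plot of $\hat{\alpha}_1(z)$ versus z (dots) and $\alpha_1(z)$ versus z (line) for the data of (a); the estimated $\hat{\alpha}_1(z)$ approximates $\alpha_1(z)$ very well. (c) Plot of y versus x for data simulated from the model $y = 250 + \alpha_1(z)\,x + \varepsilon$, where $\alpha_1(z)$ is a ratio with numerator terms $0.5$ and $2z$ and denominator terms $1$, $0.2z$ and $0.1z^2$, together with a constant term $2$ (the operators are illegible in the source), n = 300, and x, z and $\varepsilon$ are the same as in (a). (d) Plot of $\hat{\alpha}_1(z)$ versus z (dots) and $\alpha_1(z)$ versus z (line) for the data of (c); the estimated $\hat{\alpha}_1(z)$ approximates the true function $\alpha_1(z)$ very well.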

Examination of data of the fig and fig wasp

We first examine whether linear regression analysis can describe the interaction between viable seeds and wasp offspring, following graphical evaluation methods such as those of Sugihara & May (1990). Fig. 3(a) and 3(b) clearly show a good linear relationship for the simulated data of Figure 1(a). Fig. 3(c) and 3(d) show the real data from the fig/fig wasp mutualism (Ficus racemosa); compared with Fig. 3(a) and 3(b), they indicate that a linear regression with constant coefficients is not appropriate. The p-value of the F-test in the linear regression model for these real data is 0.501, which is clearly non-significant.

This examination shows that simple or generalized linear regression cannot simply be used to analyse the naturally collected data of figs and fig wasps.

[Figure 3. Four panels: (a) y/x versus observation number; (b) y versus x; (c) y/x versus observation number; (d) y versus x.]

Fig. 3. Plots for the artificial data and for the real data of the fig/fig wasp mutualism. (a) Plot of y/x versus observation number for the simulated data shown in Figure 1(a); y/x is approximately constant, which indicates that a linear regression of y on x with constant coefficients describes the data well. (b) Plot of y versus x for the simulated data, which shows a clear linear relationship. (c) Plot of y/x versus observation number for the fig/fig wasp data; the ratio is unstable, which indicates that a linear regression of y on x with constant coefficients is not appropriate. (d) Plot of y versus x for the fig/fig wasp data, which shows no clear linear relationship. In (c) and (d), y = seeds and x = wasp offspring (galls).

Matlab code for the two functions

function coef = vcm(x, y, z, h)
%------------------------------------------------------------------
% Nonparametric estimation of the varying coefficients model
%   model: y = \alpha_0(z) + \alpha_1(z) x + e
% Input:  x, y and z are vectors of the same length, say n;
%         h is the bandwidth.
% Output: coef is an n x 2 matrix of regression coefficient estimators.
%         The first column is the estimator of \alpha_0(z) and the
%         second column is the estimator of \alpha_1(z), both
%         evaluated at the observed values z(j).
% Plotting coef(:,1) and coef(:,2) versus z displays the trend of the
% estimators of \alpha_0(z) and \alpha_1(z) as functions of z.
%------------------------------------------------------------------
x = x(:);  y = y(:);  z = z(:);      % work with column vectors
n = length(x);
X = [ones(n,1) x];                   % design matrix, rows (1, x_i)
coef = zeros(n, 2);                  % preallocate the output
for j = 1:n
    w1 = 1 - (z - z(j)).^2 / h^2;    % positive only when |z - z(j)| < h
    keep = w1 > 0;                   % observations inside the window
    w0 = w1(keep);
    % Scaling both the design matrix and the response by w0 gives a
    % weighted least squares fit with weights w0.^2.
    XX = X(keep,:) .* (w0 * ones(1,2));
    coef(j,:) = (pinv(XX' * XX) * XX' * (y(keep) .* w0))';
end

function rxy = npcc(x, y, z, h)
%------------------------------------------------------------------
% Nonparametric estimation of Pearson correlation coefficients
% Input:  x and y are the two variables and z is the conditioning
%         variable; x, y and z have the same length n.
%         h is the bandwidth.
% Output: rxy is a vector of length n holding the estimated correlation
%         coefficient between x and y at each observed value z(j).
% Plotting rxy versus z displays the relationship of rxy with z.
%------------------------------------------------------------------
n = length(z);
rxy = zeros(1, n);                   % preallocate the output
for j = 1:n
    w1 = 1 - (z - z(j)).^2 / h^2;    % positive only when |z - z(j)| < h
    keep = w1 > 0;                   % observations inside the window
    x0 = x(keep);
    y0 = y(keep);
    w0 = w1(keep).^2;                % quadratic-kernel weights, up to a constant
    y0b = sum(y0 .* w0) / sum(w0);   % weighted mean of y
    x0b = sum(x0 .* w0) / sum(w0);   % weighted mean of x
    sigmaxy = sum((y0 - y0b) .* (x0 - x0b) .* w0) / sum(w0);   % covariance
    sigmax = sum((x0 - x0b).^2 .* w0) / sum(w0);               % variance of x
    sigmay = sum((y0 - y0b).^2 .* w0) / sum(w0);               % variance of y
    rxy(j) = sigmaxy / sqrt(sigmax * sigmay);
end

Literature cited

Epanechnikov, V. (1969) Nonparametric estimates of a multivariate probability density. Theory of Probability and Its Applications, 14, 153-158.

Haerdle, W. (1994) Applied nonparametric regression. Springer, Berlin.

Hastie, T. & Tibshirani, R. (1993) Varying-coefficient models (with discussion). J. R. Statist. Soc. B, 55, 757-796.

Sugihara, G. & May, R.M. (1990) Non-linear forecasting as a way of distinguishing chaos from measurement error in time series. Nature, 344, 734-741.

Zhu, L.X. (2005) Nonparametric Monte Carlo Tests and their applications. Springer, New York.