Stationary Time Series Models: AR, MA, ARIMA


Review: Stationary Time Series Models

White noise process, covariance stationary process, AR(p), MA(q) and ARIMA processes, stationarity conditions, diagnostic checks.

White noise process: $x_t \sim \text{i.i.d. } N(0, \sigma^2)$

A sequence $\{x_t\}$ is a white noise process if each value in the sequence has

1. zero mean: $E(x_t) = E(x_{t-1}) = \dots = 0$

2. constant variance: $E(x_t^2) = E(x_{t-1}^2) = \dots = \sigma^2 = \mathrm{Var}(x_{t-i})$

3. is uncorrelated with all other realizations: $E(x_t x_{t-s}) = E(x_{t-j} x_{t-j-s}) = \dots = 0 = \mathrm{Cov}(x_{t-j}, x_{t-j-s})$

Properties 1 & 3: absence of serial correlation or predictability.

Property 2: conditional homoscedasticity (constant conditional variance).

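Covariance stationarity (weak stationarity)

A sequence $\{x_t\}$ is covariance stationary if the mean, variance and autocovariances do not grow over time, i.e. it has

1. finite mean: $E(x_t) = E(x_{t-1}) = \dots = \mu$

2. finite variance: $E[(x_t - \mu)^2] = E[(x_{t-1} - \mu)^2] = \dots = \sigma_x^2 = \mathrm{Var}(x)$

3. finite autocovariance: $E[(x_t - \mu)(x_{t-s} - \mu)] = E[(x_{t-j} - \mu)(x_{t-j-s} - \mu)] = \dots = \gamma_s = \mathrm{Cov}(x_{t-j}, x_{t-j-s})$

Ex. autocovariance between $x_t$ and $x_{t-s}$: $\gamma_s$; scaled by the variance it gives the autocorrelation $\gamma_s / \gamma_0$.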

But a white noise process cannot explain macro variables, which are characterized by persistence, so we need AR and MA features.

Application:

plot wn and lyus

Program: whitenoise.prg

workfile: usuk.wf –page 1

The program generates and plots the log of US real disposable income and a white noise process WN, based on the sample mean and standard deviation of log US income (nrnd = standard normal random variable with mean 0 and SD 1, so WN below has mean 8.03 and SD 0.36)

lyus=log(uspdispid)

WN= 8.03+0.36*nrnd

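[Figure: line plot of LYUS and WN, 1960 to 1995; vertical axis from 7.0 to 9.0]

AR(1): $x_t = \rho x_{t-1} + e_t$, $e_t \sim \text{i.i.d.}(0, \sigma^2)$ (random walk: $\rho = 1$)

MA(1): $x_t = e_t + \theta e_{t-1}$

More generally:

AR(p): $x_t = \rho_1 x_{t-1} + \rho_2 x_{t-2} + \dots + \rho_p x_{t-p} + e_t$

MA(q): $x_t = e_t + \theta_1 e_{t-1} + \theta_2 e_{t-2} + \dots + \theta_q e_{t-q}$

ARMA(p,q): $x_t = \rho_1 x_{t-1} + \rho_2 x_{t-2} + \dots + \rho_p x_{t-p} + e_t + \theta_1 e_{t-1} + \dots + \theta_q e_{t-q}$

Using the lag operator:

AR(1): $(1 - \rho L) x_t = e_t$

MA(1): $x_t = (1 + \theta L) e_t$

AR(p): $(1 - \rho_1 L - \rho_2 L^2 - \dots - \rho_p L^p) x_t = \rho(L) x_t = e_t$

MA(q): $x_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q) e_t = \theta(L) e_t$

ARMA(p,q): $a(L) x_t = b(L) e_t$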

1. AR process

Stationarity Conditions for an AR(1) process

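$(1 - \rho L) x_t = e_t$ with $\rho(L) = 1 - \rho L$, and substituting $z$ for $L$: $\rho(z) = 1 - \rho z$

The process is stable if $\rho(z) \neq 0$ for all numbers satisfying $|z| \leq 1$. Then we can write

$x_t = (1 - \rho L)^{-1} e_t = \sum_{i=0}^{\infty} \rho^i e_{t-i}$

If x is stable, it is covariance stationary:

1. $E(x_t) = \mu$ or 0: finite

2. $\mathrm{Var}(x) = E[x_t^2] = E\left(\sum_{i=0}^{\infty} \rho^i e_{t-i}\right)^2 = \sigma_e^2 \sum_{i=0}^{\infty} \rho^{2i} = \dfrac{\sigma_e^2}{1 - \rho^2} = \gamma_0$: finite

3. covariances

$\gamma_1 = E(x_t x_{t-1}) = E[(\rho x_{t-1} + e_t) x_{t-1}] = \rho \sigma_x^2$

$\gamma_2 = E(x_t x_{t-2}) = E[(\rho(\rho x_{t-2} + e_{t-1}) + e_t) x_{t-2}] = \rho^2 E(x_{t-2}^2) = \rho^2 \sigma_x^2$

$\gamma_s = E(x_t x_{t-s}) = \rho^s \sigma_x^2 = \rho^s \mathrm{Var}(x)$

Autocorrelations between $x_t$ and $x_{t-s}$:

$r_s = \gamma_s / \gamma_0 = \rho^s$

Plot of $r_s$ against the lag s = autocorrelation function (ACF) or correlogram.

For stationary series, ACF should converge to 0:

$\lim_{s \to \infty} r_s = 0$ if $|\rho| < 1$

$\rho > 0$: direct convergence

$\rho < 0$: dampened oscillatory path around 0.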
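The geometric decay of the AR(1) autocorrelations can be checked by simulation. The following is a minimal sketch in Python (standard library only), not the EViews program used in these notes; the function names `simulate_ar1` and `sample_acf`, the sample size and the seed are illustrative assumptions.

```python
import random

def simulate_ar1(rho, n, seed=0):
    """Simulate x_t = rho * x_{t-1} + e_t with e_t ~ N(0, 1), starting from x = 0."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(rho * x[-1] + rng.gauss(0.0, 1.0))
    return x

def sample_acf(x, max_lag):
    """Sample autocorrelations r_1..r_max_lag of the demeaned series."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    gamma0 = sum(v * v for v in d)
    return [sum(d[t] * d[t - s] for t in range(s, n)) / gamma0
            for s in range(1, max_lag + 1)]

rho = 0.5  # same AR coefficient as in the EViews application (assumed here for illustration)
x = simulate_ar1(rho, n=5000, seed=42)
acf = sample_acf(x, 4)
for s, r in enumerate(acf, start=1):
    print(f"lag {s}: sample r_s = {r:+.3f}, theoretical rho^s = {rho ** s:+.3f}")
```

With a negative coefficient (for example `rho = -0.5`) the same code should show the sign of the sample autocorrelations alternating while their size shrinks.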

Partial Autocorrelation (PAC)

Ref: Enders Ch.2

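In AR(p) processes all x's are correlated, even those that do not appear in the regression equation.

Ex: AR(1)

$\gamma_s = \rho^s \mathrm{Var}(x_t)$

$r_1 = \gamma_1/\gamma_0 = \rho$; $r_2 = \gamma_2/\gamma_0 = \rho^2 = r_1^2$; $r_3 = \gamma_3/\gamma_0 = \rho^3 = r_2 r_1$

We want to see the direct autocorrelation between $x_{t-j}$ and $x_t$ by controlling for all x's between the two. For this, construct the demeaned series $x_t^* = x_t - \mu$ and form regressions to get the PAC from the ACs.

1st PAC: $x_t^* = \phi_{11} x_{t-1}^* + e_t$, $\phi_{11} = r_1$

2nd PAC: $x_t^* = \phi_{21} x_{t-1}^* + \phi_{22} x_{t-2}^* + e_t$, $\phi_{22} = \dfrac{r_2 - r_1^2}{1 - r_1^2}$

In general, for $s \geq 3$, sth PAC:

$\phi_{ss} = \dfrac{r_s - \sum_{j=1}^{s-1} \phi_{s-1,j}\, r_{s-j}}{1 - \sum_{j=1}^{s-1} \phi_{s-1,j}\, r_j}$ and $\phi_{sj} = \phi_{s-1,j} - \phi_{ss}\,\phi_{s-1,s-j}$.

Ex: for s=3, $\phi_{33} = \dfrac{r_3 - (\phi_{21} r_2 + \phi_{22} r_1)}{1 - (\phi_{21} r_1 + \phi_{22} r_2)}$.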

Identification for an AR(p) process

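PACF for s>p: $\phi_{ss} = 0$

Hence AR(1): $\phi_{22} = \dfrac{r_2 - \phi_{11} r_1}{1 - \phi_{11} r_1} = \dfrac{r_2 - r_1^2}{1 - r_1^2} = \dfrac{r_1^2 - r_1^2}{1 - r_1^2} = 0$

$\phi_{33} = \dfrac{r_3 - (\phi_{21} r_2 + \phi_{22} r_1)}{1 - (\phi_{21} r_1 + \phi_{22} r_2)}$

To evaluate it, use the relation $\phi_{sj} = \phi_{s-1,j} - \phi_{ss}\,\phi_{s-1,s-j}$:

$\phi_{21} = \phi_{11} - \phi_{22}\phi_{11} = \phi_{11} = r_1$ (since $\phi_{22} = 0$); substitute it to get:

$\phi_{33} = \dfrac{r_3 - r_1 r_2}{1 - r_1^2} = \dfrac{r_1^3 - r_1^3}{1 - r_1^2} = 0$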
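The recursion that turns autocorrelations into partial autocorrelations can be written out in a few lines. This is a sketch in Python under the notation $r_s$ for the autocorrelations and $\phi_{ss}$ for the partial autocorrelations; the function name `pacf_from_acf` is an illustrative assumption, not something from EViews or Enders.

```python
def pacf_from_acf(r):
    """Partial autocorrelations phi_11..phi_ss from autocorrelations r = [r_1, ..., r_s]."""
    pacf = []
    prev = []  # prev[j] holds phi_{s-1, j+1}
    for s in range(1, len(r) + 1):
        if s == 1:
            phi_ss = r[0]
            cur = [phi_ss]
        else:
            # numerator: r_s - sum_j phi_{s-1,j} r_{s-j}; denominator: 1 - sum_j phi_{s-1,j} r_j
            num = r[s - 1] - sum(prev[j] * r[s - 2 - j] for j in range(s - 1))
            den = 1.0 - sum(prev[j] * r[j] for j in range(s - 1))
            phi_ss = num / den
            # update: phi_{s,j} = phi_{s-1,j} - phi_ss * phi_{s-1,s-j}
            cur = [prev[j] - phi_ss * prev[s - 2 - j] for j in range(s - 1)] + [phi_ss]
        pacf.append(phi_ss)
        prev = cur
    return pacf

rho = 0.5
ar1_acf = [rho ** s for s in range(1, 5)]   # theoretical AR(1) autocorrelations
ar1_pacf = pacf_from_acf(ar1_acf)
print(ar1_pacf)
```

Feeding in the theoretical AR(1) autocorrelations $r_s = \rho^s$ should return $\rho$ at lag 1 and zero at every later lag, which is the cutoff used for identification.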

Stability condition for an AR(p) process

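$(1 - \rho_1 L - \rho_2 L^2 - \dots - \rho_p L^p) x_t = \rho(L) x_t = e_t$

The process is stable if $\rho(z) \neq 0$ for all z satisfying $|z| \leq 1$, or if the roots of the characteristic polynomial lie outside the unit circle. Then, we can write:

$x_t = \rho(L)^{-1} e_t = a(L) e_t = \sum_{i=0}^{\infty} a_i e_{t-i}$.

Then we have the usual moment conditions:

1. $E(x_t) = \mu$ or 0: finite

2. $\mathrm{Var}(x) = E[x_t^2] = E\left(\sum_{i=0}^{\infty} a_i e_{t-i}\right)^2$, with $a_0 = 1$ and $E(e_{t-i} e_{t-j}) = 0$ for $i \neq j$, so

$\mathrm{Var}(x) = \sigma_e^2 \sum_{i=0}^{\infty} a_i^2 = \gamma_0$: finite variance, hence time independent.

3. covariances

$\gamma_s = E(x_t x_{t-s}) = E[(e_t + a_1 e_{t-1} + a_2 e_{t-2} + \dots)(e_{t-s} + a_1 e_{t-s-1} + \dots)]$

$= (a_s + a_1 a_{1+s} + a_2 a_{2+s} + \dots)\,\sigma_e^2 = \sigma_e^2 \sum_{i=0}^{\infty} a_i a_{i+s}$: finite and time independent.

$r_1 = \dfrac{\gamma_1}{\gamma_0} = \dfrac{a_1 + a_1 a_2 + a_2 a_3 + a_3 a_4 + \dots}{1 + a_1^2 + a_2^2 + \dots}$

$r_s = \dfrac{\gamma_s}{\gamma_0} = \dfrac{\sum_{i=0}^{\infty} a_i a_{i+s}}{\sum_{i=0}^{\infty} a_i^2}$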

If the process is nonstationary, what do we do?

Then there is a unit root, i.e. the polynomial has a root at z=1: $\rho(1) = 0$. We can thus factor out the operator $(1 - L)$ and transform the process into a first-difference stationary series:

$\rho(L) = (1 - \rho_1^* L - \rho_2^* L^2 - \dots - \rho_{p-1}^* L^{p-1})(1 - L)$

$(1 - \rho_1^* L - \rho_2^* L^2 - \dots - \rho_{p-1}^* L^{p-1})\,\Delta x_t = e_t$: an AR(p-1) model.

If $\rho^*(L) = (1 - \rho_1^* L - \rho_2^* L^2 - \dots - \rho_{p-1}^* L^{p-1})$ has all its roots outside the unit circle, $\Delta x_t$ is stationary: $x_t \sim I(1)$

If $\rho^*(L)$ still has a unit root, we must difference it further until we obtain a stationary process: $x_t \sim I(d)$

An integrated process = a unit root process.

The unconditional mean is still finite, but

the variance is time dependent,

the covariance is time dependent

(more later).
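The time dependence of the variance of a unit root process, and the effect of first differencing, can be illustrated by Monte Carlo. This is a rough sketch in Python, assuming a pure random walk with N(0, 1) shocks started at zero; the number of paths, the seed and the helper names are illustrative choices.

```python
import random

def random_walk_paths(n_paths, n_steps, seed=0):
    """Simulate n_paths random walks x_t = x_{t-1} + e_t, e_t ~ N(0, 1), starting from 0."""
    rng = random.Random(seed)
    paths = []
    for _ in range(n_paths):
        x, path = 0.0, []
        for _ in range(n_steps):
            x += rng.gauss(0.0, 1.0)
            path.append(x)
        paths.append(path)
    return paths

def variance(values):
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)

paths = random_walk_paths(n_paths=2000, n_steps=200, seed=1)
var_50 = variance([p[49] for p in paths])     # variance across paths at t = 50
var_200 = variance([p[199] for p in paths])   # variance across paths at t = 200

# First difference of a single path: recovers the stationary shocks e_t
diff = [paths[0][t] - paths[0][t - 1] for t in range(1, 200)]
var_diff = variance(diff)

print(f"Var(x_50) ~ {var_50:.1f}, Var(x_200) ~ {var_200:.1f}, Var(dx) ~ {var_diff:.2f}")
```

Under these assumptions the variance across paths should grow roughly in proportion to t, while the variance of the differenced series stays close to the shock variance.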

Application: generate an AR(1) series

Program: ARMA.prg

Workfile: USUK.wf, page 2 (undated)

Program:

smpl 1 1

genr x=0

smpl 2 200

series x=0.5*x(-1)+NRND

'NRND = standard normal random variable (mean 0, SD 1)

Go to workfile, Click on: series – graph -- line

[Figure: line plot of the simulated series X, observations 1 to 200; vertical axis from -3 to 3]

On series, click on View – Correlogram, level – OK

Date: 09/04/07 Time: 19:43

Sample: 2 200

Included observations: 199

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | 0.537 | 0.537 | 58.316 | 0.000 |
| 2 | 0.257 | -0.045 | 71.715 | 0.000 |
| 3 | 0.074 | -0.065 | 72.832 | 0.000 |
| 4 | 0.003 | -0.002 | 72.834 | 0.000 |
| 5 | -0.078 | -0.087 | 74.074 | 0.000 |
| 6 | -0.087 | -0.007 | 75.654 | 0.000 |
| 7 | -0.059 | 0.015 | 76.369 | 0.000 |
| 8 | -0.029 | -0.002 | 76.549 | 0.000 |
| 9 | 0.013 | 0.036 | 76.586 | 0.000 |
| 10 | -0.081 | -0.156 | 77.970 | 0.000 |
| 11 | -0.015 | 0.113 | 78.017 | 0.000 |
| 12 | 0.010 | 0.000 | 78.039 | 0.000 |
| 13 | 0.076 | 0.071 | 79.294 | 0.000 |
| 14 | 0.101 | 0.051 | 81.484 | 0.000 |

The ACF is approximately 0 from lag 3 on and the PAC from lag 2 on (both inside the 2 SE bounds), hence AR(1).

In Eviews: Q-Stat = Ljung-Box Q statistics, reported with their p-values. H0: there is no autocorrelation up to lag k; the statistic is asymptotically distributed as $\chi^2_q$, q = # autocorrelations.

Dotted lines: 2 SE bounds, calculated approximately as $\pm 2/\sqrt{T}$, T = # observations.

Here: T=199, hence SE bounds = $\pm 0.14$.

Program:

smpl 1 1

genr xa=0

smpl 2 200

series xa= -0.5*xa(-1)+NRND

rho=-0.5: dampened oscillatory path.

smpl 1 1

genr w=0

smpl 2 200

series w=w(-1)+NRND

rho=1: random walk, unit root.

2. MA process

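$x_t = e_t + \theta_1 e_{t-1} + \theta_2 e_{t-2} + \dots + \theta_q e_{t-q}$,

e = zero-mean white noise error term.

$x_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q) e_t = \theta(L) e_t$

If $\theta(z) \neq 0$ for $|z| \leq 1$, the process is invertible, and has an AR($\infty$) representation:

$\theta(L)^{-1} x_t = \pi(L) x_t = \sum_{j=0}^{\infty} \pi_j x_{t-j} = e_t$, with $\pi_0 = 1$, so that

$x_t = -\sum_{j=1}^{\infty} \pi_j x_{t-j} + e_t$

Stability condition for MA(1) process

$x_t = e_t + \theta_1 e_{t-1}$

Invertibility requires $|\theta_1| < 1$

Then the AR representation would be:

$x_t = (1 + \theta_1 L) e_t \;\Rightarrow\; (1 + \theta_1 L)^{-1} x_t = e_t \;\Rightarrow\; x_t = -\sum_{i=1}^{\infty} (-\theta_1)^i x_{t-i} + e_t$

$E(x_t) = 0$: finite

$\mathrm{Var}(x_t) = \gamma(0) = \mathrm{var}(e_t + \theta_1 e_{t-1}) = (1 + \theta_1^2)\,\sigma_e^2$: finite.

$\gamma(1) = \theta_1 E e_{t-1}^2 = \theta_1 \sigma_e^2 \;\Rightarrow\; r_1 = \dfrac{\gamma(1)}{\gamma(0)} = \dfrac{\theta_1}{1 + \theta_1^2}$

$\gamma(2) = \gamma(3) = \dots = 0 \;\Rightarrow\; r_2 = r_3 = \dots = 0$, hence the autocorrelations' cutoff point = lag 1

More generally: AC for MA(q) = 0 for lags > q.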

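PAC:

$\phi_{11} = r_1 = \dfrac{\theta_1}{1 + \theta_1^2}$

$\phi_{22} = \dfrac{r_2 - r_1^2}{1 - r_1^2} = \dfrac{-r_1^2}{1 - r_1^2} = \dfrac{-\phi_{11}^2}{1 - \phi_{11}^2} = \dfrac{-\theta_1^2}{(1 - \theta_1 + \theta_1^2)(1 + \theta_1 + \theta_1^2)}$

$\phi_{33} = \dfrac{r_3 - (\phi_{21} r_2 + \phi_{22} r_1)}{1 - (\phi_{21} r_1 + \phi_{22} r_2)} = \dfrac{-\phi_{22} r_1}{1 - \phi_{21} r_1} = \dfrac{-\phi_{22}\phi_{11}}{1 - \phi_{21}\phi_{11}} = \dfrac{-\phi_{22}\phi_{11}}{1 - (\phi_{11}(1 - \phi_{22}))\phi_{11}}$

using $r_2 = r_3 = 0$ and $\phi_{21} = \phi_{11} - \phi_{22}\phi_{11} = \phi_{11}(1 - \phi_{22})$.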
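The MA(1) partial autocorrelations can be checked numerically by running the PAC recursion with $r_2 = r_3 = 0$ and comparing with closed forms in the MA coefficient. This is a sketch in Python; the value `theta = 0.4` is an illustrative assumption, and the closed form used for the third PAC, $\theta^3/(1 + \theta^2 + \theta^4 + \theta^6)$, is the standard textbook result for an MA(1) written with a plus sign, not a formula taken from these notes.

```python
theta = 0.4  # illustrative MA(1) coefficient (assumption)

# Autocorrelations of x_t = e_t + theta * e_{t-1}
r1 = theta / (1 + theta ** 2)
r2 = 0.0
r3 = 0.0

# PAC recursion
phi11 = r1
phi22 = (r2 - r1 ** 2) / (1 - r1 ** 2)
phi21 = phi11 - phi22 * phi11
phi33 = (r3 - (phi21 * r2 + phi22 * r1)) / (1 - (phi21 * r1 + phi22 * r2))

# Closed forms in theta
phi22_closed = -theta ** 2 / (1 + theta ** 2 + theta ** 4)
phi33_closed = theta ** 3 / (1 + theta ** 2 + theta ** 4 + theta ** 6)

print(f"phi11 = {phi11:.6f}")
print(f"phi22 = {phi22:.6f} (closed form {phi22_closed:.6f})")
print(f"phi33 = {phi33:.6f} (closed form {phi33_closed:.6f})")
```

For a positive coefficient the partial autocorrelations alternate in sign and shrink in size, which is the tapering pattern that distinguishes an MA(1) from an AR(1).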

For AR:

AC depends on the AR coefficient (rho), thus tapers off

PAC cuts off to 0 for s > p (AR(1): cutoff after lag 1)

For MA:

AC depends on the variance of the error terms: abrupt cutoff after lag q

PAC depends on the MA coefficient, thus tapers off.

3. ARMA process

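ARMA(p,q): $\rho(L) x_t = \theta(L) e_t$

$(1 - \rho_1 L - \rho_2 L^2 - \dots - \rho_p L^p) x_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q) e_t$

If q=0: pure AR(p) process

If p=0: pure MA(q) process

If all characteristic roots of $x_t = \sum_{i=1}^{p} \rho_i x_{t-i} + \sum_{i=0}^{q} \theta_i e_{t-i}$ (with $\theta_0 = 1$) are within the unit circle, then this is an ARMA(p,q) process. If one or more roots lie on the unit circle, then this is an integrated ARIMA(p,d,q) process. (The characteristic roots here are the inverses of the roots of $\rho(z)$, so "within the unit circle" is the same condition as "roots of $\rho(z)$ outside the unit circle".)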

Stability condition for ARMA(1,1) process

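(Favero, p. 37)

$x_t = c_1 x_{t-1} + e_t + a_1 e_{t-1}$

$(1 - c_1 L) x_t = (1 + a_1 L) e_t \;\Rightarrow\; x_t = \dfrac{1 + a_1 L}{1 - c_1 L}\, e_t$.

If $|c_1| < 1$ then we can write

$x_t = (1 + a_1 L)(1 + c_1 L + (c_1 L)^2 + \dots) e_t$

$= (1 + c_1 L + (c_1 L)^2 + \dots + a_1 L + a_1 c_1 L^2 + \dots) e_t$

$= (1 + (a_1 + c_1) L + c_1 (c_1 + a_1) L^2 + c_1^2 (c_1 + a_1) L^3 + \dots) e_t$: an MA($\infty$) representation.

$E(x_t) = 0$: finite

$\mathrm{Var}(x_t) = \gamma(0) = \left[1 + \dfrac{(a_1 + c_1)^2}{1 - c_1^2}\right] \sigma_e^2$: finite

Covariances: finite

$\gamma(1) = c_1 \mathrm{Var}(x_t) + a_1 \sigma_e^2$

$\gamma(2) = c_1 (c_1 \mathrm{Var}(x_t) + a_1 \sigma_e^2) = c_1 \gamma(1) \;\Rightarrow\; \gamma(j) = c_1 \gamma(j-1)$, $j \geq 2$

Autocorrelation functions:

$r_1 = \dfrac{\gamma(1)}{\gamma(0)}$

$r_2 = c_1 r_1$

$r_3 = c_1 r_2 = c_1^2 r_1$

Any stationary time series can be represented with an ARMA model:

AR(p) $\Rightarrow$ MA($\infty$)

MA(q) $\Rightarrow$ AR($\infty$)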
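The ARMA(1,1) moment formulas can be cross-checked against the MA(infinity) weights. This is a numerical sketch in Python, assuming the values $c_1 = 0.7$ and $a_1 = 0.4$ (the rho and theta used in the application that follows) and unit shock variance; the helper name `arma11_psi` and the truncation at 500 terms are illustrative choices.

```python
c1, a1, sigma2 = 0.7, 0.4, 1.0  # AR coefficient, MA coefficient, shock variance (assumed values)

def arma11_psi(c1, a1, n_terms):
    """MA(infinity) weights: psi_0 = 1, psi_j = c1**(j-1) * (c1 + a1) for j >= 1."""
    return [1.0] + [c1 ** (j - 1) * (c1 + a1) for j in range(1, n_terms)]

def autocov_from_psi(psi, s, sigma2):
    """gamma(s) = sigma2 * sum_i psi_i * psi_{i+s}, truncated at len(psi)."""
    return sigma2 * sum(psi[i] * psi[i + s] for i in range(len(psi) - s))

psi = arma11_psi(c1, a1, 500)

gamma0_series = autocov_from_psi(psi, 0, sigma2)
gamma1_series = autocov_from_psi(psi, 1, sigma2)
gamma2_series = autocov_from_psi(psi, 2, sigma2)

gamma0_formula = (1 + (a1 + c1) ** 2 / (1 - c1 ** 2)) * sigma2
gamma1_formula = c1 * gamma0_formula + a1 * sigma2
gamma2_formula = c1 * gamma1_formula

r1 = gamma1_formula / gamma0_formula
r2 = c1 * r1
print(f"gamma(0) = {gamma0_formula:.4f}, r1 = {r1:.4f}, r2 = {r2:.4f}")
```

The truncated sums and the closed forms should agree to many decimals because the weights decay geometrically at rate $c_1$.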

Application

Plot an ARMA(1,1) and an AR(1) model with rho=0.7 and theta=0.4, and look at the AC and PAC functions.

Prog: ARMA.prg

File: USUK, page 2.

smpl 1 1

genr zarma=0

genr zar=0

smpl 1 200

genr u=NRND

smpl 2 200

series zarma=0.7*zarma(-1)+u+0.4*u(-1)

series zar=0.7*zar(-1)+u

plot zarma zar

zarma.correl(36)

zar.correl(36)

[Figure: line plot of ZARMA and ZAR, observations 1 to 200; vertical axis from -4 to 4]

Summary of results

Enders Table 2.1

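| Process | ACF | PACF |
|---|---|---|
| WN | $r_s = 0$, $s \neq 0$ | $\phi_{ss} = 0$, $s \neq 0$ |
| AR(1) | Exponential decay: $r_s = \rho^s$; $\rho > 0$ direct decay, $\rho < 0$ oscillating | Spike at lag 1 (at p for AR(p)): $\phi_{11} = r_1$, $\phi_{ss} = 0$ for $s \geq 2$ |
| MA(1) | Positive (negative) spike at lag 1 for $\theta > 0$ ($\theta < 0$); $r_s = 0$ for $s \geq 2$ | Oscillating (geometric) decay for $\phi_{11} > 0$ ($\phi_{11} < 0$) |
| ARMA(1,1) | Exponential (oscillating) decay at lag 1 if $\rho > 0$ ($\rho < 0$). Decay at lag q for ARMA(p,q) | Oscillating (exponential) decay at lag 1, $\phi_{11} = r_1$. Decay after lag p for ARMA(p,q) |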

Stationary Time Series II

Model Specification and Diagnostic tests

E+L&K Ch 2.5,2.6

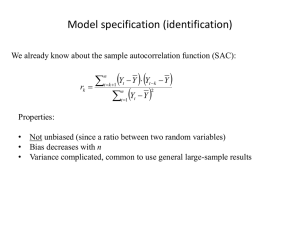

So far we have seen the theoretical properties of stationary time series. To choose the type of model to use, we need to decide on the order of the operators (# lags), the deterministic trends, etc. We therefore need to specify a model and then test whether this specification represents the DGP adequately.

1. Order specification criteria:

i. The t/F-statistics approach: start from a large number of lags and re-estimate, dropping one lag each time. Stop when the last lag is significant.

Monthly data: look at the t-stat for the last lag, and at the F-stat for the last quarter (the last three lags jointly).

Then check if the error term is white noise.

ii. Information Criteria: AIC, HQ or SBC

Definition in Enders:

Akaike: AIC = T.log(SSR) + 2n

Schwarz: SBC = T.log(SSR) + n.log(T), also called the Schwarz and Rissanen criterion.

Hannan-Quinn: HQC = T.log(SSR) + 2n.log(log(T))

T = # observations, n = # parameters estimated, including the constant term.

Adding lags will reduce the SSR; these criteria penalize the loss of degrees of freedom that comes with the additional lags.

The goal = pick the # lags that minimizes the information criterion.

Since ln(T) > 2 (for T of 8 or more), SBC selects a more parsimonious model than AIC.

For large samples, HQC and in particular SBC are better than AIC since they have better large sample properties.

o If you go along with SBC, verify that the error term is white noise. In small samples, AIC performs better.

o If you go with AIC, then verify that the t-stats are significant.

This is valid whether the processes are stationary or integrated.

Note: various software packages and authors use modified versions of these criteria. As long as you use one version consistently across the models being compared, any version will give the same ranking.

For ex: definition by LK:

AIC = log(SSR) + 2(n/T)

SC = log(SSR) + n(logT/T)

HQ = log(SSR) + 2n.log(logT)/T

Definition used in Eviews:

AIC = -2(l/T) + (2n/T)

SC = -2(l/T) + n(logT/T)

HQ = -2(l/T) + 2n.log(logT)/T

where l is the log likelihood given by $l = -\dfrac{T}{2}\left(1 + \log(2\pi) + \log(SSR/T)\right)$; the constant part of the log likelihood is often omitted.

Denote the order selected by each criterion as $\hat{p}$. The following holds in samples of any size with $T \geq 16$:

$\hat{p}(AIC) \geq \hat{p}(HQ) \geq \hat{p}(SC)$
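The Eviews definitions can be checked against the AR(1) estimation output for DPPI reported later in these notes (T = 167, n = 2, sum squared resid 98.00107, log likelihood -192.4560, AIC 2.328814, SC 2.366155). This is a small sketch in Python; the function names are illustrative assumptions.

```python
import math

def loglik_from_ssr(ssr, T):
    """Gaussian log likelihood l = -(T/2) * (1 + log(2*pi) + log(SSR/T))."""
    return -T / 2 * (1 + math.log(2 * math.pi) + math.log(ssr / T))

def eviews_criteria(loglik, n, T):
    """AIC, SC and HQ in the per-observation form used by Eviews."""
    aic = -2 * loglik / T + 2 * n / T
    sc = -2 * loglik / T + n * math.log(T) / T
    hq = -2 * loglik / T + 2 * n * math.log(math.log(T)) / T
    return aic, sc, hq

T, n, ssr = 167, 2, 98.00107   # AR(1) with constant, values from the reported output
l = loglik_from_ssr(ssr, T)
aic, sc, hq = eviews_criteria(l, n, T)
print(f"log likelihood = {l:.4f}")
print(f"AIC = {aic:.6f}, SC = {sc:.6f}, HQ = {hq:.6f}")
```

Because the penalty per parameter is 2 for AIC, 2 log(log T) for HQ and log T for SC, the three values are ordered AIC < HQ < SC whenever T is at least 16.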

Word of warning:

It is difficult to distinguish between AR, MA and mixed ARMA processes based on

sample information. The theoretical ACF and PACF are not usually replicated in real

economic data, which have a complicated DGP. Look into other tests that analyze the

residuals once a model is fitted:

2. Plotting the residuals:

Check for outliers, structural breaks, nonhomogeneous variances.

Look at standardized residuals (subtract the mean and divide by the standard deviation): $\hat{u}_t^s = \dfrac{\hat{u}_t - \bar{\hat{u}}}{\tilde{\sigma}_u}$, where $\tilde{\sigma}_u^2 = \dfrac{1}{T}\sum_{t=1}^{T} (\hat{u}_t - \bar{\hat{u}})^2$, $\tilde{\sigma}_u$ is the standard deviation and $\bar{\hat{u}}$ is the mean. If $\hat{u}_t \sim N(0, \sigma^2)$ then $\hat{u}_t^s$ will in general lie in a $\pm 2$ band around the 0 line.

Look at the AC and PAC to check for remaining serial correlation in the residuals, and at the AC of squared residuals to check for conditional heteroscedasticity. If the AC and PAC at the earlier lags are not in general within the $\pm 2/\sqrt{T}$ band around 0, then there is probably leftover serial dependence in the residuals or conditional heteroscedasticity.

3. Diagnostic tests for residuals:

i. Test of whether the kth order autocorrelation is significantly different from zero:

The null hypothesis: there is no residual autocorrelation up to order s

The alternative: there is at least one nonzero autocorrelation.

$H_0: r_k = 0$ for all k=1,..,s

$H_1: r_k \neq 0$ for at least one k=1,..,s

where $r_k$ is the k-th autocorrelation.

If the null is rejected, then at least one r is significantly different from zero.

The null is rejected for large values of Q. If there is any remaining residual autocorrelation, a higher lag order must be used.

The Box-Pierce Q statistic (portmanteau test for residual autocorrelation):

$Q = T \sum_{k=1}^{s} r_k^2 \sim \chi^2(s)$

T = # observations.

But it is not reliable in small samples and it has reduced power for large s. Instead, use the Ljung-Box Q statistic:

$Q_{LB} = T(T+2) \sum_{k=1}^{s} \dfrac{r_k^2}{T - k}$

with similar null and alternative hypotheses.

It can also be used to check if the residuals from an estimated ARMA(p,q) model are white noise (adjust the degrees of freedom for the lags in AR(p) and MA(q)):

$Q \sim \chi^2(s - p - q)$, or $\chi^2(s - p - q - 1)$ with a constant.
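Both Q statistics are simple functions of the sample autocorrelations. The sketch below, in Python, recomputes the first three Ljung-Box statistics from the rounded autocorrelations reported in the correlogram of the simulated AR(1) series (0.537, 0.257, 0.074 with T = 199); because the inputs are rounded to three decimals, the results only approximately match the reported Q-Stat column. The function names are illustrative assumptions.

```python
def box_pierce(acs, T):
    """Box-Pierce Q = T * sum_k r_k^2."""
    return T * sum(r * r for r in acs)

def ljung_box(acs, T):
    """Ljung-Box Q = T (T + 2) * sum_k r_k^2 / (T - k)."""
    return T * (T + 2) * sum(r * r / (T - k) for k, r in enumerate(acs, start=1))

acs = [0.537, 0.257, 0.074]   # rounded sample autocorrelations at lags 1..3
T = 199

q_lb = [ljung_box(acs[:s], T) for s in range(1, len(acs) + 1)]
q_bp = [box_pierce(acs[:s], T) for s in range(1, len(acs) + 1)]

for s in range(len(acs)):
    print(f"s = {s + 1}: Box-Pierce = {q_bp[s]:.3f}, Ljung-Box = {q_lb[s]:.3f}")
```

The Ljung-Box statistic is always somewhat larger than the Box-Pierce statistic because each squared autocorrelation is scaled by (T + 2)/(T - k) > 1.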

ii. Breusch-Godfrey (LM) test for autocorrelation in the residuals of AR models:

It considers an AR(h) model for the residuals.

Suppose the model you estimate is an AR(p):

$y_t = \sum_{j=1}^{p} \alpha_j y_{t-j} + u_t$

You fit an auxiliary equation

$\hat{u}_t = \sum_{j=1}^{p} \alpha_j y_{t-j} + \sum_{i=1}^{h} \beta_i \hat{u}_{t-i} + error_t$ (*)

where $\hat{u}_t$ is the OLS residual from the AR(p) model for y.

$H_0: \beta_1 = \dots = \beta_h = 0$

$H_1: \beta_1 \neq 0$ or $\beta_2 \neq 0$, ...

The LM statistic for the null: $LM_h = T \cdot R^2 \sim \chi^2(h)$, where $R^2$ is obtained from fitting (*).

For better small sample properties use an F version:

$FLM_h = \dfrac{R^2}{1 - R^2} \cdot \dfrac{T - p - h - 1}{h} \sim F(h, T - p - h - 1)$
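The F version can be recovered from the LM statistic alone. This short sketch in Python uses the Breusch-Godfrey values reported for the AR(1) model of DPPI in the example of these notes (Obs*R-squared = 6.836216, T = 167, p = 1, h = 2, reported F-statistic 3.478636 with F(2,163)); the function name is an illustrative assumption.

```python
def bg_f_from_lm(lm, T, p, h):
    """F version of the Breusch-Godfrey test from LM = T * R^2.

    The '- 1' in the denominator degrees of freedom accounts for the constant.
    """
    r2 = lm / T
    df2 = T - p - h - 1
    f_stat = (r2 / (1 - r2)) * df2 / h
    return f_stat, df2

f_stat, df2 = bg_f_from_lm(lm=6.836216, T=167, p=1, h=2)
print(f"F = {f_stat:.6f} with ({2}, {df2}) degrees of freedom")
```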

iii. Jarque-Bera test for nonnormality

It tests whether the standardized residuals are normally distributed, based on the third and fourth moments, by comparing the skewness and the kurtosis of the series with those of the normal distribution.

$H_0: E(u_t^s)^3 = 0$ (skewness) and $E(u_t^s)^4 = 3$ (kurtosis)

$H_1: E(u_t^s)^3 \neq 0$ or $E(u_t^s)^4 \neq 3$

$JB \sim \chi^2(2)$ and the null is rejected if JB is large. In this case, residuals are considered nonnormal.

Note:

o most of the asymptotic results are also valid for nonnormal residuals.

o the results may be due to nonlinearities. Then you should look into ARCH effects or structural changes.
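The notes do not spell out the JB statistic itself. A common textbook form is $JB = \frac{T}{6}\left(S^2 + \frac{(K-3)^2}{4}\right)$, with S the sample skewness and K the sample kurtosis; the sketch below, in Python, assumes this form (software packages may apply degrees-of-freedom corrections), and the function name `jarque_bera` is illustrative.

```python
def jarque_bera(u):
    """Return (JB, skewness, kurtosis) using JB = T/6 * (S^2 + (K - 3)^2 / 4)."""
    T = len(u)
    mean = sum(u) / T
    m2 = sum((v - mean) ** 2 for v in u) / T   # second central moment
    m3 = sum((v - mean) ** 3 for v in u) / T   # third central moment
    m4 = sum((v - mean) ** 4 for v in u) / T   # fourth central moment
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2
    jb = T / 6 * (skew ** 2 + (kurt - 3) ** 2 / 4)
    return jb, skew, kurt

# Tiny symmetric sample, used only to show the arithmetic
jb, skew, kurt = jarque_bera([-1.0, 0.0, 1.0])
print(f"skewness = {skew:.3f}, kurtosis = {kurt:.3f}, JB = {jb:.5f}")
```

The statistic is compared with the $\chi^2(2)$ critical value (5.99 at the 5% level).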

iv. ARCH-LM test for conditional heteroscedasticity

Fit an ARCH(q) model to the estimated residuals

$\hat{u}_t^2 = \beta_0 + \sum_{i=1}^{q} \beta_i \hat{u}_{t-i}^2 + error_t$ and test

$H_0: \beta_1 = \dots = \beta_q = 0$ (no conditional heteroscedasticity)

$H_1: \beta_1 \neq 0$ or $\beta_2 \neq 0$, ...

$ARCH_{LM}(q) = T R^2 \sim \chi^2(q)$. For large values of ARCH-LM the null is rejected and there are ARCH effects in the residuals. Then fit an ARCH or GARCH model.

v. RESET

Tests a model specification against (nonlinear) alternatives.

Ex: you are estimating a model

$y_t = a x_t + u_t$

But the actual model is

$y_t = a x_t + b z_t + u_t$

where z can be missing variable(s) or a multiplicative relation. The test checks whether powers of the predicted values of y are significant. These consist of the powers and cross-product terms of the explanatory variables:

$z_t = \{\hat{y}_t^2, \hat{y}_t^3, \dots\}$

$H_0$: b=0 (no misspecification)

The test statistic has an F(h-1,T) distribution. The null is rejected if the test statistic is too large.

vi. Stability analysis

Recursive plots of the residuals and of the estimated coefficients;

CUSUM test (cumulative sum of recursive residuals): if the plot diverges significantly from the zero line, it suggests structural instability.

CUSUMSQ (cumulative sum of squared recursive residuals): useful in case there are several shifts in different directions.

Chow test: for exogenous break points.

Example:

Workfile: LKEdata.wf page--Enders

Series: US PPI

Plot the data:

[Figure: line plot of PPI, 1960 to 2000; vertical axis from 20 to 120]

Positive trend in the series indicates nonstationarity.

Correlogram:

Date: 09/19/07 Time: 10:00

Sample: 1960Q1 2002Q2

Included observations: 169

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | 0.990 | 0.990 | 168.44 | 0.000 |
| 2 | 0.978 | -0.044 | 334.05 | 0.000 |
| 3 | 0.966 | -0.071 | 496.37 | 0.000 |
| 4 | 0.952 | -0.056 | 655.05 | 0.000 |
| 5 | 0.937 | -0.036 | 809.87 | 0.000 |
| 6 | 0.923 | 0.003 | 960.86 | 0.000 |
| 7 | 0.908 | -0.003 | 1108.0 | 0.000 |
| 8 | 0.894 | -0.004 | 1251.5 | 0.000 |
| 9 | 0.880 | 0.009 | 1391.2 | 0.000 |
| 10 | 0.866 | -0.003 | 1527.5 | 0.000 |
| 11 | 0.852 | -0.011 | 1660.1 | 0.000 |
| 12 | 0.838 | 0.001 | 1789.4 | 0.000 |

The confidence interval: $[-2/\sqrt{T},\ 2/\sqrt{T}] = [-2/\sqrt{169},\ 2/\sqrt{169}] = [-0.154,\ 0.154]$

The Q statistics are highly significant: the series is not white noise and needs to be modelled.

ACF dies out very slowly.

PACF (the autocorrelation conditional on the in-between values of the time series): single spike.

Observation of the data suggests a unit root (U.R.). Hence we cannot use the models developed so far.

First difference: dppi.

Plot: it seems to be stationary, though the variance does not look constant; it increases in the second half of the sample.

[Figure: line plot of DPPI, 1960 to 2000; vertical axis from -4 to 4]

ACF dies out quickly after the 4th lag, and PACF has a large spike and dies out

oscillating. Note the significant correlations and PAC at lag 6 and PAC at lag 8. This

can be an AR(p) or an ARMA(p,q) process.

Included observations: 168

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | 0.553 | 0.553 | 52.210 | 0.000 |
| 2 | 0.335 | 0.043 | 71.571 | 0.000 |
| 3 | 0.319 | 0.170 | 89.229 | 0.000 |
| 4 | 0.216 | -0.042 | 97.389 | 0.000 |
| 5 | 0.086 | -0.081 | 98.682 | 0.000 |
| 6 | 0.153 | 0.149 | 102.82 | 0.000 |
| 7 | 0.082 | -0.096 | 104.02 | 0.000 |
| 8 | -0.078 | -0.149 | 105.10 | 0.000 |
| 9 | -0.080 | -0.007 | 106.23 | 0.000 |
| 10 | 0.023 | 0.121 | 106.33 | 0.000 |
| 11 | -0.008 | 0.004 | 106.34 | 0.000 |
| 12 | -0.006 | 0.007 | 106.35 | 0.000 |

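In Eviews:

run equation dppi c AR(1)

(You can also run equation dppi c dppi(-1), but with the first specification Eviews estimates the model with a nonlinear algorithm, which helps to control for nonlinearities if they exist in the DGP. With the AR(1) specification the reported C estimates the mean of DPPI rather than the intercept of the lagged regression.)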

Dependent Variable: DPPI

Method: Least Squares

Date: 09/19/07 Time: 10:13

Sample (adjusted): 1960Q3 2002Q1

Included observations: 167 after adjustments

Convergence achieved after 3 iterations

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.464364 | 0.133945 | 3.466835 | 0.0007 |
| AR(1) | 0.554760 | 0.064902 | 8.547697 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.306907 | Mean dependent var | 0.466826 |
| Adjusted R-squared | 0.302706 | S.D. dependent var | 0.922923 |
| S.E. of regression | 0.770679 | Akaike info criterion | 2.328814 |
| Sum squared resid | 98.00107 | Schwarz criterion | 2.366155 |
| Log likelihood | -192.4560 | F-statistic | 73.06313 |
| Durbin-Watson stat | 2.048637 | Prob(F-statistic) | 0.000000 |

Inverted AR Roots: .55

Check the optimal lag length for an AR(p) process.

Check the roots: if the inverted root is less than 1 in absolute value, stability is confirmed. View-ARMA structure-roots shows you the root(s) visually.

View-ARMA structure-correlogram: allows you to compare the estimated correlations

with the theoretical ones.

View-ARMA structure-impulse response: shows how the DGP changes with a shock of

the size of one standard deviation.

View-Residual tests: to check if there is any residual autocorrelation left.

Optimal lag length:

| Lags | AIC | SBC |
|---|---|---|
| 1 | 2.328814* | 2.366155* |
| 2 | 2.565575 | 2.603069 |
| 3 | 2.575278 | 2.612925 |
| 10 | 2.735557 | 2.774324 |

It appears that AR(1) dominates the rest in terms of the information criteria.

Roots of AR(1):

0.55<1: stability condition satisfied, since the inverted root is inside the unit circle.

[Figure: Inverse Roots of AR/MA Polynomial(s); AR roots plotted against the unit circle, both axes from -1.5 to 1.5]

But serial correlation is a problem:

Breusch-Godfrey Serial Correlation LM Test:

| Statistic | Value | Distribution | Prob. |
|---|---|---|---|
| F-statistic | 3.478636 | F(2,163) | 0.033159 |
| Obs*R-squared | 6.836216 | Chi-Square(2) | 0.032774 |

High Q stats (correlogram), and the Breusch-Godfrey test $TR^2 = 6.84 > \chi^2(2) = 5.99$ rejects the null at the 5% significance level, thus there is at least one $\beta_i \neq 0$. There is serial correlation in the error terms.

Let's try ARMA(p,q):

lags

1,1

AIC

SBC

2.3305*

2.386*

1,2

2,1

2.345

2.3366

2.3926

2.402

Information criteria favor an ARMA(1,1) specification although the marginal change is

very small. So we should consider all options.

ARMA (1,1) -- Eviews: equation dppi c ar(1) ma(1)

Included observations: 167 after adjustments

Convergence achieved after 9 iterations

Backcast: 1960Q2

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.455286 | 0.163873 | 2.778280 | 0.0061 |
| AR(1) | 0.730798 | 0.100011 | 7.307163 | 0.0000 |
| MA(1) | -0.261703 | 0.139158 | -1.880622 | 0.0618 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.313995 | Mean dependent var | 0.466826 |
| Adjusted R-squared | 0.305629 | S.D. dependent var | 0.922923 |
| S.E. of regression | 0.769062 | Akaike info criterion | 2.330510 |
| Sum squared resid | 96.99884 | Schwarz criterion | 2.386522 |
| Log likelihood | -191.5976 | F-statistic | 37.53259 |
| Durbin-Watson stat | 1.935301 | Prob(F-statistic) | 0.000000 |

Inverted AR Roots: .73

Inverted MA Roots: .26

Both roots are inside the unit circle. The AR coefficient is significant; the MA coefficient is significant only at the 10% level (p = 0.06).

Some of the AC at larger lags are significant.

LM test: $TR^2 = 5.29 < \chi^2(2) = 5.99$ does not reject the null of no serial correlation in the error terms.

Notice that if you estimate an ARMA(1,2), the MA(2) coefficient is insignificant. Together with the information criteria, we can rule out this specification. The LM test results also do not support this specification.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.457550 | 0.176196 | 2.596826 | 0.0103 |
| AR(1) | 0.807616 | 0.120152 | 6.721624 | 0.0000 |
| MA(1) | -0.280755 | 0.148664 | -1.888526 | 0.0607 |
| MA(2) | -0.152639 | 0.116160 | -1.314038 | 0.1907 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.322970 | Mean dependent var | 0.466826 |
| Adjusted R-squared | 0.310509 | S.D. dependent var | 0.922923 |
| S.E. of regression | 0.766355 | Akaike info criterion | 2.329317 |
| Sum squared resid | 95.72980 | Schwarz criterion | 2.404000 |
| Log likelihood | -190.4980 | F-statistic | 25.91910 |
| Durbin-Watson stat | 2.011448 | Prob(F-statistic) | 0.000000 |

Inverted AR Roots: .81

Inverted MA Roots: .56, -.27
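The inverted MA roots reported for the ARMA(1,2) model can be reproduced from the MA estimates. For a second-order polynomial $1 + c_1 L + c_2 L^2$, the inverted roots solve $\lambda^2 + c_1 \lambda + c_2 = 0$. This is a sketch in Python with an illustrative function name; the coefficients are the MA(1) and MA(2) estimates from the ARMA(1,2) output.

```python
import cmath

def inverted_roots_order2(c1, c2):
    """Inverted roots of 1 + c1*L + c2*L^2, i.e. roots of lambda^2 + c1*lambda + c2 = 0."""
    disc = cmath.sqrt(c1 * c1 - 4 * c2)
    return (-c1 + disc) / 2, (-c1 - disc) / 2

ma1, ma2 = -0.280755, -0.152639   # MA(1), MA(2) estimates from the ARMA(1,2) output
roots = inverted_roots_order2(ma1, ma2)
for r in roots:
    print(f"inverted MA root: {r.real:+.4f}, modulus {abs(r):.4f}")
```

Both inverted roots have modulus below 1, so the estimated MA part is invertible.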

ARMA (2,1) is better than ARMA(1,2) but does not dominate ARMA(1,1). The

estimated coefficients are significant, but there is residual serial correlation.

We can perform additional tests to check the stability of the coefficient, seasonal effects,

etc.

Seasonality

Cyclical movements observed in daily, monthly or quarterly data. It is often useful to remove seasonality if it is visible at lags s, 2s, 3s, ... of the ACF and the PACF. To model it, add an AR or MA coefficient at the appropriate lag.

Possibilities:

Quarterly seasonality with MA at lag 4:

$x_t = a_1 x_{t-1} + e_t + b_1 e_{t-1} + b_4 e_{t-4}$

Quarterly seasonality with AR at lag 4:

$x_t = a_1 x_{t-1} + a_4 x_{t-4} + e_t + b_1 e_{t-1}$

Multiplicative seasonality

Accounts for the interaction of the ARMA and seasonal effects.

MA term at lag 1 interacting with the seasonal MA term at lag 4:

$(1 - a_1 L) x_t = (1 + b_1 L)(1 + b_4 L^4) e_t$

$x_t = a_1 x_{t-1} + e_t + b_1 e_{t-1} + b_4 e_{t-4} + b_1 b_4 e_{t-5}$

AR term at lag 1 interacting with the seasonal AR term at lag 4:

$(1 - a_1 L)(1 - a_4 L^4) x_t = (1 + b_1 L) e_t$

$x_t = a_1 x_{t-1} + a_4 x_{t-4} - a_1 a_4 x_{t-5} + e_t + b_1 e_{t-1}$
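Multiplying the two lag polynomials shows where the interaction term of a multiplicative seasonal model ends up. The sketch below, in Python, multiplies $(1 + b_1 L)$ by $(1 + b_4 L^4)$ as coefficient lists; the values of `b1` and `b4` are illustrative assumptions.

```python
def poly_mul(a, b):
    """Multiply two lag polynomials given as coefficient lists [c_0, c_1, ...] on L^0, L^1, ..."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

b1, b4 = 0.5, 0.3  # illustrative MA coefficients (assumptions)
ma_poly = poly_mul([1.0, b1], [1.0, 0.0, 0.0, 0.0, b4])

for lag, coef in enumerate(ma_poly):
    print(f"coefficient on e_(t-{lag}): {coef}")
```

The product has nonzero coefficients at lags 0, 1, 4 and 5, and the lag-5 coefficient equals $b_1 b_4$: the interaction is a single shock dated t-5, not a product of two shocks.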

One solution to remove strong seasonality is to transform the data by seasonal differencing. For quarterly data:

$y_t = (1 - L^4) x_t$

If the data is not stationary, then we also need to first difference it:

$y_t = (1 - L)(1 - L^4) x_t$
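Seasonal and first differencing are both instances of the same lag-difference operation. The sketch below, in Python, applies them to an artificial quarterly series made of a linear trend plus a fixed seasonal pattern; the series, the slope 0.3 and the function name `difference` are illustrative assumptions.

```python
def difference(x, lag=1):
    """Apply (1 - L^lag): y_t = x_t - x_{t-lag}; the first `lag` observations are lost."""
    return [x[t] - x[t - lag] for t in range(lag, len(x))]

season = [2.0, -1.0, 0.5, -1.5]                       # fixed quarterly pattern (assumption)
x = [0.3 * t + season[t % 4] for t in range(24)]      # linear trend plus seasonality

y4 = difference(x, lag=4)    # (1 - L^4) x_t: removes the seasonal pattern, leaves 4 * 0.3
y = difference(y4, lag=1)    # (1 - L)(1 - L^4) x_t: also removes the remaining constant drift

print([round(v, 6) for v in y4[:4]])
print([round(v, 6) for v in y[:4]])
```

Each differencing step shortens the sample: the seasonal difference drops four observations and the first difference one more.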

Eviews:

Several methodologies for seasonal adjustment

Series - Proc - Seasonal Adjustment

Census X12

Census X11

Moving Average Methods

Tramo/Seats method

The first two options allow additive or multiplicative specifications and controls for holidays, trading days and outliers.

Homework: due September 26, 2007

Data: Enders Question 12.