Introduction to ARMA processes


Linear Stationary Processes.

ARMA models

• This lecture introduces the basic linear models for stationary processes.

• Considering only stationary processes is very restrictive, since most economic variables are non-stationary.

• However, stationary linear models are used as building blocks in more complicated nonlinear and/or non-stationary models.

Roadmap

1. The Wold decomposition

2. From the Wold decomposition to the ARMA representation

3. MA processes and invertibility

4. AR processes, stationarity and causality

5. ARMA processes, invertibility and causality

The Wold Decomposition

Wold theorem in words:

Any stationary process $\{Z_t\}$ can be expressed as the sum of two components:

- a stochastic component: a linear combination of lags of a white noise process;

- a deterministic component, uncorrelated with the stochastic component.

The Wold Theorem

If $\{Z_t\}$ is a nondeterministic stationary time series, then

$$Z_t = \sum_{j=0}^{\infty} \psi_j a_{t-j} + V_t = \Psi(L) a_t + V_t,$$

where

1. $\psi_0 = 1$ and $\sum_{j=0}^{\infty} \psi_j^2 < \infty$,

2. $\{a_t\}$ is $WN(0, \sigma^2)$, with $\sigma^2 > 0$,

3. the $\psi_j$'s and the $a_t$'s are unique,

4. $Cov(a_s, V_t) = 0$ for all $s$ and $t$,

5. $\{V_t\}$ is deterministic.

Some Remarks on the Wold Decomposition, I

• If $Z_t$ is purely nondeterministic, then $V_t = 0$. Most of the time series that we will consider in this course, for instance ARMA processes, are purely nondeterministic.

Importance of the Wold decomposition

• Any (purely nondeterministic) stationary process can be written as a linear combination of lagged values of a white noise process (MA(∞) representation).

• This implies that if a process is stationary, we immediately know how to write a model for it.

• Problem: we might need to estimate a lot of parameters (in most cases, an infinite number of them!).

• ARMA models are an approximation to the Wold representation. This approximation is more parsimonious (= fewer parameters).

Birth of the ARMA(p,q) models

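Under general conditions, the infinite lag polynomial of the Wold decomposition can be approximated by the ratio of two finite-lag polynomials:

$$\Psi(L) \approx \frac{\Theta_q(L)}{\Phi_p(L)}.$$

Therefore

$$Z_t = \Psi(L) a_t \approx \frac{\Theta_q(L)}{\Phi_p(L)} a_t,$$

$$\Phi_p(L) Z_t = \Theta_q(L) a_t,$$

$$(1 - \phi_1 L - \dots - \phi_p L^p) Z_t = (1 + \theta_1 L + \dots + \theta_q L^q) a_t,$$

$$\underbrace{Z_t - \phi_1 Z_{t-1} - \dots - \phi_p Z_{t-p}}_{\text{AR}(p)} = \underbrace{a_t + \theta_1 a_{t-1} + \dots + \theta_q a_{t-q}}_{\text{MA}(q)}.$$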
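Matching coefficients in $\Phi_p(L)\Psi(L) = \Theta_q(L)$ shows how a finite number of ARMA parameters generates the whole sequence of Wold weights: $\psi_0 = 1$ and $\psi_j = \theta_j + \sum_{i=1}^{\min(j,p)} \phi_i \psi_{j-i}$, with $\theta_j = 0$ for $j > q$. The Python sketch below computes these weights under the sign conventions of this slide. The function name `arma_psi_weights` is a hypothetical illustration.

```python
def arma_psi_weights(phi, theta, n):
    """Return psi_0, ..., psi_{n-1} of Psi(L) = Theta_q(L) / Phi_p(L) (hypothetical helper).

    Sign conventions follow the slide:
      Phi_p(L)   = 1 - phi[0] L - ... - phi[p-1] L^p
      Theta_q(L) = 1 + theta[0] L + ... + theta[q-1] L^q
    """
    psi = [1.0]
    for j in range(1, n):
        # theta_j enters directly; theta_j = 0 once j > q
        value = theta[j - 1] if j <= len(theta) else 0.0
        # AR part: psi_j also picks up phi_i * psi_{j-i}
        for i in range(1, min(j, len(phi)) + 1):
            value += phi[i - 1] * psi[j - i]
        psi.append(value)
    return psi


# ARMA(1,1) with phi_1 = 0.5 and theta_1 = 0.4: two lag parameters, infinitely many psi_j
weights = arma_psi_weights([0.5], [0.4], 8)
print(weights)
```

The weights decay geometrically after the first lag, so two parameters stand in for the entire Wold sequence. That is the parsimony argument made earlier.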

MA processes

MA(1) process (or ARMA(0,1))

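Let $\{a_t\}$ be a zero-mean white noise process, $a_t \sim (0, \sigma_a^2)$, and

$$Z_t = \mu + a_t + \theta a_{t-1}.$$

- Expectation

$$E(Z_t) = \mu + E(a_t) + \theta E(a_{t-1}) = \mu$$

- Variance

$$Var(Z_t) = E(Z_t - \mu)^2 = E(a_t + \theta a_{t-1})^2 = E(a_t^2 + \theta^2 a_{t-1}^2 + 2\theta a_t a_{t-1}) = \sigma_a^2 (1 + \theta^2)$$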

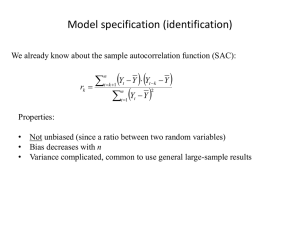

Autocovariance

1st order

$$E(Z_t - \mu)(Z_{t-1} - \mu) = E(a_t + \theta a_{t-1})(a_{t-1} + \theta a_{t-2}) = E(a_t a_{t-1} + \theta a_{t-1}^2 + \theta a_t a_{t-2} + \theta^2 a_{t-1} a_{t-2}) = \theta \sigma_a^2$$

MA(1) processes (cont)

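- Autocovariance of higher order ($j > 1$)

$$E(Z_t - \mu)(Z_{t-j} - \mu) = E(a_t + \theta a_{t-1})(a_{t-j} + \theta a_{t-j-1}) = E(a_t a_{t-j} + \theta a_{t-1} a_{t-j} + \theta a_t a_{t-j-1} + \theta^2 a_{t-1} a_{t-j-1}) = 0$$

- Autocorrelation

$$\rho_1 = \frac{\gamma_1}{\gamma_0} = \frac{\theta \sigma^2}{(1 + \theta^2)\sigma^2} = \frac{\theta}{1 + \theta^2}, \qquad \rho_j = 0, \quad j > 1$$

- Partial autocorrelation

Unlike the ACF, the PACF of an MA(1) does not cut off: $\phi_{jj} \neq 0$ for $j > 1$ (when $\theta \neq 0$), and it decays geometrically in absolute value.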
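The MA(1) partial autocorrelations can be computed numerically from the ACF above. The sketch below uses the Durbin-Levinson recursion, a standard algorithm that these slides do not introduce, to turn $\rho_0, \dots, \rho_K$ into $\phi_{11}, \dots, \phi_{KK}$. It then compares the result with the known closed form $\phi_{kk} = -(-\theta)^k (1 - \theta^2) / (1 - \theta^{2(k+1)})$ for $Z_t = \mu + a_t + \theta a_{t-1}$. The function name is illustrative, and $\theta = 0.5$ is an arbitrary choice.

```python
def durbin_levinson_pacf(rho):
    """Partial autocorrelations phi_11, ..., phi_KK from rho[0..K], with rho[0] = 1.

    Durbin-Levinson recursion; the function name is illustrative.
    """
    pacf, prev = [], []  # prev holds phi_{k-1,1}, ..., phi_{k-1,k-1}
    for k in range(1, len(rho)):
        num = rho[k] - sum(prev[j - 1] * rho[k - j] for j in range(1, k))
        den = 1.0 - sum(prev[j - 1] * rho[j] for j in range(1, k))
        phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, j = 1, ..., k-1
        prev = [prev[j - 1] - phi_kk * prev[k - j - 1] for j in range(1, k)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


theta, K = 0.5, 6
rho = [1.0, theta / (1 + theta**2)] + [0.0] * (K - 1)  # MA(1) ACF up to lag K
pacf = durbin_levinson_pacf(rho)
closed_form = [-((-theta) ** k) * (1 - theta**2) / (1 - theta ** (2 * (k + 1))) for k in range(1, K + 1)]
for k, (a, b) in enumerate(zip(pacf, closed_form), start=1):
    print(k, round(a, 6), round(b, 6))
```

The printed values alternate in sign and shrink geometrically, but never reach zero. This contrasts with the ACF, which cuts off after lag 1.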

MA(1) processes (cont)

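Stationarity

An MA(1) process is always covariance-stationary, because none of its first two moments depends on $t$:

$$E(Z_t) = \mu, \qquad Var(Z_t) = (1 + \theta^2)\sigma^2,$$

$$\rho_1 = \frac{\gamma_1}{\gamma_0} = \frac{\theta \sigma^2}{(1 + \theta^2)\sigma^2} = \frac{\theta}{1 + \theta^2}, \qquad \rho_j = 0, \quad j > 1.$$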
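A quick simulation illustrates these moments. The Python sketch below assumes NumPy's random `Generator` is available. It draws a long Gaussian MA(1) path and compares the sample mean, variance and first two autocorrelations with $\mu$, $(1 + \theta^2)\sigma^2$, $\theta/(1 + \theta^2)$ and $0$. The helper names and parameter values are illustrative choices.

```python
import numpy as np


def simulate_ma1(mu, theta, sigma, n, seed=0):
    """Simulate Z_t = mu + a_t + theta * a_{t-1} with Gaussian white noise (illustrative helper)."""
    rng = np.random.default_rng(seed)
    a = rng.normal(0.0, sigma, size=n + 1)  # a_0, ..., a_n
    return mu + a[1:] + theta * a[:-1]


def sample_acf(z, lag):
    """Sample autocorrelation at a positive lag."""
    d = z - z.mean()
    return np.dot(d[lag:], d[:-lag]) / np.dot(d, d)


mu, theta, sigma = 2.0, 0.6, 1.0
z = simulate_ma1(mu, theta, sigma, n=200_000)
print("mean:", z.mean(), "theory:", mu)
print("var: ", z.var(), "theory:", (1 + theta**2) * sigma**2)
print("rho1:", sample_acf(z, 1), "theory:", theta / (1 + theta**2))
print("rho2:", sample_acf(z, 2), "theory:", 0.0)
```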


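MA(q)

$$Z_t = \mu + a_t + \theta_1 a_{t-1} + \theta_2 a_{t-2} + \dots + \theta_q a_{t-q}$$

Moments

$$E(Z_t) = \mu$$

$$\gamma_0 = Var(Z_t) = (1 + \theta_1^2 + \theta_2^2 + \dots + \theta_q^2)\sigma_a^2$$

$$\gamma_j = E[(a_t + \theta_1 a_{t-1} + \dots + \theta_q a_{t-q})(a_{t-j} + \theta_1 a_{t-j-1} + \dots + \theta_q a_{t-j-q})]$$

$$\gamma_j = \begin{cases} (\theta_j + \theta_{j+1}\theta_1 + \theta_{j+2}\theta_2 + \dots + \theta_q \theta_{q-j})\sigma^2 & \text{for } 1 \le j \le q \\ 0 & \text{for } j > q \end{cases}$$

$$\rho_j = \frac{\gamma_j}{\gamma_0} = \frac{\theta_j + \theta_{j+1}\theta_1 + \theta_{j+2}\theta_2 + \dots + \theta_q \theta_{q-j}}{1 + \sum_{i=1}^{q} \theta_i^2}, \qquad 1 \le j \le q$$

An MA(q) is covariance-stationary for the same reasons as an MA(1).

Example MA(2)

$$\rho_1 = \frac{\theta_1 + \theta_1\theta_2}{1 + \theta_1^2 + \theta_2^2}, \qquad \rho_2 = \frac{\theta_2}{1 + \theta_1^2 + \theta_2^2}, \qquad \rho_3 = \rho_4 = \dots = \rho_k = 0$$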
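With $\theta_0 = 1$, the general formula is simply $\gamma_j = \sigma_a^2 \sum_{i=0}^{q-j} \theta_i \theta_{i+j}$ for $j \le q$, which is easy to code. This sketch uses a hypothetical function name. It returns the theoretical ACF of an MA(q) and reproduces the MA(2) expressions, including the cut-off after lag $q$.

```python
def ma_acf(theta, max_lag):
    """Theoretical ACF rho_0..rho_max_lag of Z_t = mu + a_t + theta_1 a_{t-1} + ... + theta_q a_{t-q}.

    Hypothetical helper for illustration.
    """
    c = [1.0] + list(theta)  # c[i] = theta_i, with theta_0 = 1
    q = len(theta)
    # gamma_j / sigma_a^2 = sum_{i=0}^{q-j} theta_i theta_{i+j} for j <= q, and 0 beyond lag q
    gamma = [sum(c[i] * c[i + j] for i in range(q - j + 1)) if j <= q else 0.0
             for j in range(max_lag + 1)]
    return [g / gamma[0] for g in gamma]


# MA(2) with theta_1 = 0.5, theta_2 = 0.3
print(ma_acf([0.5, 0.3], 4))
```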

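MA(∞)

$$Z_t = \mu + \sum_{j=0}^{\infty} \psi_j a_{t-j}, \qquad \psi_0 = 1$$

Is it covariance-stationary?

$$E(Z_t) = \mu, \qquad Var(Z_t) = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i^2$$

$$\gamma_j = E[(Z_t - \mu)(Z_{t-j} - \mu)] = \sigma^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+j}$$

$$\rho_j = \frac{\sum_{i=0}^{\infty} \psi_i \psi_{i+j}}{\sum_{i=0}^{\infty} \psi_i^2}$$

The process is covariance-stationary provided that

$$\sum_{i=0}^{\infty} \psi_i^2 < \infty$$

(the MA coefficients are square-summable).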
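Square-summability is what keeps the infinite sums above finite. This sketch assumes NumPy and uses a hypothetical helper name. It evaluates $\rho_j$ from a truncated $\psi$ sequence. With $\psi_j = 0.8^j$ the sums are geometric, so $\sum_j \psi_j^2 = 1/(1 - 0.64)$ and $\rho_j = 0.8^j$. The truncated computation reproduces both.

```python
import numpy as np


def ma_inf_acf(psi, max_lag):
    """rho_j = sum_i psi_i psi_{i+j} / sum_i psi_i^2 for a truncated psi sequence (hypothetical helper)."""
    psi = np.asarray(psi, dtype=float)
    denom = np.dot(psi, psi)  # finite because the psi_j are square-summable
    return np.array([np.dot(psi[: len(psi) - j], psi[j:]) / denom for j in range(max_lag + 1)])


# psi_j = 0.8^j, truncated after 400 terms (the neglected tail is of order 0.8^800)
psi = 0.8 ** np.arange(400)
print("variance / sigma^2:", np.dot(psi, psi), "exact:", 1 / (1 - 0.64))
print(np.round(ma_inf_acf(psi, 4), 6))
```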

Invertibility

Definition: An MA(q) process is said to be invertible if it admits an autoregressive representation.

Theorem (necessary and sufficient conditions for invertibility): Let $\{Z_t\}$ be an MA(q), $Z_t = \Theta_q(L) a_t$. Then $\{Z_t\}$ is invertible if and only if $\Theta_q(x) \neq 0$ for all $x \in \mathbb{C}$ such that $|x| \le 1$. The coefficients of the AR representation, $\{\pi_j\}$, are determined by the relation

$$\pi(x) = \sum_{j=0}^{\infty} \pi_j x^j = \frac{1}{\Theta_q(x)}, \qquad |x| \le 1.$$
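The root condition can be checked numerically. The sketch below assumes NumPy's `np.roots`, and its function names are hypothetical. It tests whether $\Theta_q(x) = 1 + \theta_1 x + \dots + \theta_q x^q$ has all its roots outside the unit circle. It also computes the first $\pi_j$ from $\Theta_q(x)\pi(x) = 1$. For an MA(1) this gives $\pi_j = (-\theta)^j$, which dies out only when $|\theta| < 1$.

```python
import numpy as np


def is_invertible(theta):
    """True if Theta_q(x) = 1 + theta_1 x + ... + theta_q x^q has no root with |x| <= 1 (hypothetical helper)."""
    coeffs = list(theta)[::-1] + [1.0]  # np.roots wants the highest power first
    return bool(np.all(np.abs(np.roots(coeffs)) > 1.0))


def ma_pi_weights(theta, n):
    """First n coefficients pi_0, ..., pi_{n-1} of pi(x) = 1 / Theta_q(x) (hypothetical helper)."""
    pi = [1.0]
    for j in range(1, n):
        # The coefficient of x^j in Theta_q(x) * pi(x) must vanish for j >= 1
        pi.append(-sum(theta[i - 1] * pi[j - i] for i in range(1, min(j, len(theta)) + 1)))
    return pi


print(is_invertible([0.5]), is_invertible([2.0]))  # True False
print(ma_pi_weights([0.5], 5))                     # the values (-0.5)**j
```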

Identification of the MA(1)

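Consider the autocorrelation functions of these two MA(1) processes:

$$Z_t = \mu + a_t + \theta a_{t-1}$$

$$Z_t^* = \mu + a_t^* + (1/\theta) a_{t-1}^*$$

The autocorrelation functions are:

1) $\rho_1 = \dfrac{\theta}{1 + \theta^2}$

2) $\rho_1^* = \dfrac{1/\theta}{1 + (1/\theta)^2} = \dfrac{\theta}{1 + \theta^2}$

These two processes therefore show identical correlation patterns: the MA coefficient is not uniquely identified.

In other words, any MA(1) process has two representations: one with MA parameter larger than one in absolute value and one with MA parameter smaller than one in absolute value.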

Identification of the MA(1)

• If we identify the MA(1) through its autocorrelation structure, we need to decide which value of $\theta$ to choose: the one greater than one or the one smaller than one (in absolute value). We prefer representations that are invertible, so we choose the value with $|\theta| < 1$.
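Inverting $\rho_1 = \theta/(1 + \theta^2)$ means solving $\rho_1 \theta^2 - \theta + \rho_1 = 0$. The two roots of this quadratic are reciprocals of each other, so exactly one of them is invertible when $0 < |\rho_1| < 1/2$. This is a small sketch with hypothetical helper names.

```python
import math


def ma1_rho1(theta):
    """First autocorrelation of Z_t = mu + a_t + theta * a_{t-1}."""
    return theta / (1 + theta**2)


def ma1_theta_from_rho1(rho1):
    """Both MA(1) parameters consistent with rho1, invertible one first (hypothetical helper).

    Requires 0 < |rho1| <= 0.5, which holds for any MA(1) with theta != 0.
    """
    disc = math.sqrt(1 - 4 * rho1**2)
    small = (1 - disc) / (2 * rho1)  # |theta| < 1: the invertible representation
    large = (1 + disc) / (2 * rho1)  # equals 1 / small
    return small, large


theta = 0.4
r = ma1_rho1(theta)
print(r, ma1_rho1(1 / theta))   # identical first autocorrelations
print(ma1_theta_from_rho1(r))   # approximately (0.4, 2.5)
```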


AR processes

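AR(1) process

$$Z_t = c + \phi Z_{t-1} + a_t$$

Stationarity

Substituting recursively,

$$Z_t = c + \phi c + \phi^2 Z_{t-2} + \phi a_{t-1} + a_t = \dots = c(1 + \phi + \phi^2 + \dots) + a_t + \phi a_{t-1} + \phi^2 a_{t-2} + \dots$$

The first term is a geometric progression and the second is an MA(∞) with $\psi_j = \phi^j$. If $|\phi| < 1$:

(1) $1 + \phi + \phi^2 + \dots = \dfrac{1}{1 - \phi}$ (bounded sequence)

(2) $\sum_{j=0}^{\infty} \psi_j^2 = \sum_{j=0}^{\infty} \phi^{2j} = \dfrac{1}{1 - \phi^2} < \infty$

Remember!! $\sum_{j=0}^{\infty} \psi_j^2 < \infty$ is a sufficient condition for stationarity.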

AR(1) (cont)

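Hence, an AR(1) process is stationary if $|\phi| < 1$.

Mean of a stationary AR(1)

$$Z_t = \frac{c}{1 - \phi} + a_t + \phi a_{t-1} + \phi^2 a_{t-2} + \dots$$

$$\mu = E(Z_t) = \frac{c}{1 - \phi}$$

Variance of a stationary AR(1)

$$\gamma_0 = (1 + \phi^2 + \phi^4 + \dots)\sigma_a^2 = \frac{1}{1 - \phi^2}\sigma_a^2$$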
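These two formulas can be checked by simulation. The sketch below assumes NumPy and uses arbitrary parameter values and a hypothetical helper name. It simulates $Z_t = c + \phi Z_{t-1} + a_t$ with Gaussian shocks, discards a burn-in period, and compares the sample mean and variance with $c/(1 - \phi)$ and $\sigma_a^2/(1 - \phi^2)$.

```python
import numpy as np


def simulate_ar1(c, phi, sigma, n, burn_in=1_000, seed=0):
    """Simulate Z_t = c + phi * Z_{t-1} + a_t and drop a burn-in (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    a = rng.normal(0.0, sigma, size=n + burn_in)
    z = np.empty(n + burn_in)
    z[0] = c / (1 - phi)  # start at the stationary mean
    for t in range(1, n + burn_in):
        z[t] = c + phi * z[t - 1] + a[t]
    return z[burn_in:]


c, phi, sigma = 1.0, 0.7, 1.0
z = simulate_ar1(c, phi, sigma, n=200_000)
print("mean:", z.mean(), "theory:", c / (1 - phi))
print("var: ", z.var(), "theory:", sigma**2 / (1 - phi**2))
```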

Autocovariance of a stationary AR(1)

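You need to solve a system of equations. Since $c = \mu(1 - \phi)$, the model can be written as $Z_t - \mu = \phi(Z_{t-1} - \mu) + a_t$, so

$$\gamma_j = E[(Z_t - \mu)(Z_{t-j} - \mu)] = E[(\phi(Z_{t-1} - \mu) + a_t)(Z_{t-j} - \mu)] = \phi E[(Z_{t-1} - \mu)(Z_{t-j} - \mu)] + E[a_t (Z_{t-j} - \mu)] = \phi \gamma_{j-1}, \qquad j \ge 1,$$

where $E[a_t (Z_{t-j} - \mu)] = 0$ for $j \ge 1$, because $Z_{t-j}$ depends only on shocks dated $t - j$ and earlier.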

Autocorrelation of a stationary AR(1)

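ACF

$$\rho_j = \frac{\gamma_j}{\gamma_0} = \phi \frac{\gamma_{j-1}}{\gamma_0} = \phi \rho_{j-1}, \qquad j \ge 1$$

$$\rho_j = \phi^2 \rho_{j-2} = \phi^3 \rho_{j-3} = \dots = \phi^j \rho_0 = \phi^j$$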
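The recursion $\gamma_j = \phi \gamma_{j-1}$ and its solution $\rho_j = \phi^j$ can be set against a sample ACF. This sketch assumes NumPy and uses an arbitrary $\phi = 0.7$ with a zero-mean process ($c = 0$). It builds the theoretical autocovariances from $\gamma_0 = \sigma^2/(1 - \phi^2)$, then simulates a path started from the stationary distribution and estimates the first five autocorrelations.

```python
import numpy as np

phi, sigma, n = 0.7, 1.0, 100_000

# Theoretical autocovariances via gamma_j = phi * gamma_{j-1}, starting from gamma_0
gamma = [sigma**2 / (1 - phi**2)]
for j in range(1, 6):
    gamma.append(phi * gamma[-1])
rho_theory = np.array(gamma) / gamma[0]  # equals phi**j

# Zero-mean AR(1) path, started from a draw of the stationary distribution N(0, gamma_0)
rng = np.random.default_rng(1)
a = rng.normal(0.0, sigma, size=n)
z = np.empty(n)
z[0] = rng.normal(0.0, np.sqrt(gamma[0]))
for t in range(1, n):
    z[t] = phi * z[t - 1] + a[t]

d = z - z.mean()
rho_sample = np.array([1.0] + [np.dot(d[j:], d[:-j]) / np.dot(d, d) for j in range(1, 6)])
print(np.round(rho_theory, 3))
print(np.round(rho_sample, 3))
```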

EXERCISE

Compute the partial autocorrelation function of an AR(1) process. Compare its pattern to that of the MA(1) process.

AR(p)

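$$Z_t = c + \phi_1 Z_{t-1} + \phi_2 Z_{t-2} + \dots + \phi_p Z_{t-p} + a_t$$

Stationarity

All $p$ roots of the characteristic equation $1 - \phi_1 x - \phi_2 x^2 - \dots - \phi_p x^p = 0$ lie outside the unit circle.

ACF

$$\rho_k = \phi_1 \rho_{k-1} + \phi_2 \rho_{k-2} + \dots + \phi_p \rho_{k-p}$$

System to solve for the first $p$ autocorrelations ($p$ unknowns and $p$ equations, using $\rho_0 = 1$ and $\rho_{-k} = \rho_k$):

$$\begin{aligned} \rho_1 &= \phi_1 \rho_0 + \phi_2 \rho_1 + \dots + \phi_p \rho_{p-1} \\ \rho_2 &= \phi_1 \rho_1 + \phi_2 \rho_0 + \dots + \phi_p \rho_{p-2} \\ &\;\;\vdots \\ \rho_p &= \phi_1 \rho_{p-1} + \phi_2 \rho_{p-2} + \dots + \phi_p \rho_0 \end{aligned}$$

The ACF decays as a mixture of exponentials and/or damped sine waves, depending on whether the roots are real or complex.

PACF

$$\phi_{kk} = 0 \quad \text{for } k > p$$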
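The $p \times p$ system above is linear in $\rho_1, \dots, \rho_p$. Once it is solved, the recursion gives every later lag. The sketch below assumes NumPy's `np.linalg.solve` and uses a hypothetical helper name. It assembles the system, solves it, and extends the ACF, which is one way to check a hand computation for an AR(2). For $\phi_1 = 0.5$, $\phi_2 = 0.3$ it should give $\rho_1 = \phi_1/(1 - \phi_2)$.

```python
import numpy as np


def ar_acf(phi, max_lag):
    """Theoretical ACF of a stationary AR(p) (hypothetical helper).

    Solves the p equations for rho_1..rho_p, then extends them with
    rho_k = phi_1 rho_{k-1} + ... + phi_p rho_{k-p}.
    """
    p = len(phi)
    A = np.eye(p)
    b = np.zeros(p)
    for k in range(1, p + 1):
        for i in range(1, p + 1):
            m = abs(k - i)  # rho_{k-i} = rho_{|k-i|} by symmetry
            if m == 0:
                b[k - 1] += phi[i - 1]  # the rho_0 = 1 term moves to the right-hand side
            else:
                A[k - 1, m - 1] -= phi[i - 1]
    rho = [1.0] + list(np.linalg.solve(A, b))
    for k in range(p + 1, max_lag + 1):
        rho.append(sum(phi[i - 1] * rho[k - i] for i in range(1, p + 1)))
    return np.array(rho[: max_lag + 1])


# AR(2) with phi_1 = 0.5, phi_2 = 0.3 (real roots, so the ACF decays without oscillating)
print(np.round(ar_acf([0.5, 0.3], 6), 4))
```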

Exercise

Compute the mean, the variance and the autocorrelation function of an AR(2) process.

Describe the pattern of the PACF of an AR(2) process.

Causality and Stationarity

Consider the AR(1) process $Z_t = \phi_1 Z_{t-1} + a_t$. Iterating, we obtain

$$Z_t = a_t + \phi_1 a_{t-1} + \dots + \phi_1^k a_{t-k} + \phi_1^{k+1} Z_{t-k-1}.$$

If $|\phi_1| < 1$, we showed that

$$Z_t = \sum_{j=0}^{\infty} \phi_1^j a_{t-j}.$$

This cannot be done if $|\phi_1| \ge 1$ (no mean-square convergence). However, if $|\phi_1| > 1$ one could write

$$Z_t = \phi_1^{-1} Z_{t+1} - \phi_1^{-1} a_{t+1}.$$

Iterating forward,

$$Z_t = -\sum_{j=1}^{\infty} \phi_1^{-j} a_{t+j},$$

and this is a stationary representation of $Z_t$.

Causality and Stationarity (II)

However, this stationary representation depends on future values of $a_t$. It is customary to restrict attention to AR(1) processes with $|\phi_1| < 1$. Such processes are called stationary but also CAUSAL, or future-independent, AR representations.

Remark: any AR(1) process with $|\phi_1| > 1$ can be rewritten as an AR(1) process with $|\phi_1^*| < 1$ and a new white noise sequence.

Thus, we can restrict our analysis (without loss of generality) to processes with $|\phi_1| < 1$.
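The remark can be checked through second moments. For $|\phi_1| > 1$, the forward solution $Z_t = -\sum_{j \ge 1} \phi_1^{-j} a_{t+j}$ has autocovariances $\gamma_k = \sigma^2 \phi_1^{-k}/(\phi_1^2 - 1)$. A standard result, taken here as the assumption being checked, is that these match the causal AR(1) with $\phi_1^* = 1/\phi_1$ and white noise variance $\sigma^2/\phi_1^2$. The NumPy sketch below compares the two numerically, truncating the forward sum. The values $\phi_1 = 2$ and $\sigma^2 = 1$ are arbitrary.

```python
import numpy as np

phi, sigma2, n_terms = 2.0, 1.0, 200  # |phi| > 1: non-causal AR(1)

# Forward solution Z_t = -sum_{j>=1} phi^{-j} a_{t+j}; w[m-1] is the weight on a_{t+m}
w = -(phi ** -np.arange(1.0, n_terms + 1))
# gamma_k = sigma^2 * sum_{m>=1} w_m w_{m+k} (truncated at n_terms)
gamma_forward = np.array([sigma2 * np.dot(w[: n_terms - k], w[k:]) for k in range(5)])

# Causal AR(1) with phi* = 1/phi and new white-noise variance sigma^2 / phi^2
phi_star, sigma2_star = 1.0 / phi, sigma2 / phi**2
gamma_causal = np.array([sigma2_star / (1 - phi_star**2) * phi_star**k for k in range(5)])

print(np.round(gamma_forward, 6))
print(np.round(gamma_causal, 6))
```

The two processes have identical autocovariances, so second moments cannot tell them apart. This is why nothing is lost by working with the causal version.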

Causality (III)

Definition: An AR(p) process defined by the equation $\Phi_p(L) Z_t = a_t$ is said to be causal, or a causal function of $\{a_t\}$, if there exists a sequence of constants $\{\psi_j\}$ such that $\sum_{j=0}^{\infty} |\psi_j| < \infty$ and

$$Z_t = \sum_{j=0}^{\infty} \psi_j a_{t-j}, \qquad t = 0, \pm 1, \dots$$

- A necessary and sufficient condition for causality is $\Phi_p(x) \neq 0$ for all $x \in \mathbb{C}$ such that $|x| \le 1$.
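The condition $\Phi_p(x) \neq 0$ for $|x| \le 1$ is a root check on $\Phi_p(x) = 1 - \phi_1 x - \dots - \phi_p x^p$, which NumPy's `np.roots` can perform. The function name below is hypothetical. Cases with a root numerically on the unit circle are sensitive to floating-point error.

```python
import numpy as np


def is_causal(phi):
    """True if Phi_p(x) = 1 - phi_1 x - ... - phi_p x^p has no root with |x| <= 1 (hypothetical helper)."""
    coeffs = [-c for c in list(phi)[::-1]] + [1.0]  # highest power first, as np.roots expects
    return bool(np.all(np.abs(np.roots(coeffs)) > 1.0))


print(is_causal([0.5]))       # True  (root x = 2)
print(is_causal([1.5]))       # False (root x = 2/3)
print(is_causal([0.5, 0.3]))  # True
print(is_causal([0.5, 0.6]))  # False
```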

Relationship between AR(p) and MA(q)

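Stationary AR(p)

$$\Phi_p(L) Z_t = a_t, \qquad \Phi_p(L) = 1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_p L^p$$

$$\frac{1}{\Phi_p(L)} = \Psi(L) \;\Rightarrow\; \Phi_p(L)\Psi(L) = 1$$

$$Z_t = \frac{1}{\Phi_p(L)} a_t = \Psi(L) a_t, \qquad \Psi(L) = 1 + \psi_1 L + \psi_2 L^2 + \dots$$

Invertible MA(q)

$$Z_t = \Theta_q(L) a_t, \qquad \Theta_q(L) = 1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q$$

$$\frac{1}{\Theta_q(L)} = \Pi(L) \;\Rightarrow\; \Theta_q(L)\Pi(L) = 1$$

$$\Pi(L) Z_t = \frac{1}{\Theta_q(L)} Z_t = a_t, \qquad \Pi(L) = 1 + \pi_1 L + \pi_2 L^2 + \dots$$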
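Both identities, $\Phi_p(L)\Psi(L) = 1$ and $\Theta_q(L)\Pi(L) = 1$, are power-series divisions by a polynomial with leading coefficient 1. The sketch below assumes NumPy and uses a hypothetical helper name. It inverts such a lag polynomial and confirms with `np.convolve` that the product of the polynomial and its truncated inverse is $1 + 0 \cdot L + \dots$.

```python
import numpy as np


def invert_lag_polynomial(c, n):
    """First n coefficients of 1 / c(L), where c(L) = c[0] + c[1] L + ... and c[0] = 1 (hypothetical helper).

    Uses c(L) d(L) = 1, i.e. d_0 = 1 and d_j = -sum_{i=1}^{min(j, deg c)} c_i d_{j-i}.
    """
    d = [1.0]
    for j in range(1, n):
        d.append(-sum(c[i] * d[j - i] for i in range(1, min(j, len(c) - 1) + 1)))
    return np.array(d)


# Stationary AR(2): Phi_2(L) = 1 - 0.5 L - 0.3 L^2, so Psi(L) = 1 / Phi_2(L)
phi_poly = [1.0, -0.5, -0.3]
psi = invert_lag_polynomial(phi_poly, 30)
product = np.convolve(phi_poly, psi)[:30]  # coefficients of Phi_2(L) * Psi(L), truncated
print(np.round(product[:5], 12))           # approximately [1, 0, 0, 0, 0]

# Invertible MA(1): Theta_1(L) = 1 + 0.5 L, so Pi(L) = 1 / Theta_1(L)
print(invert_lag_polynomial([1.0, 0.5], 5))
```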

ARMA(p,q) Processes

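ARMA(p,q)

$$\Phi_p(L) Z_t = \Theta_q(L) a_t$$

Invertibility: the roots of $\Theta_q(x) = 0$ satisfy $|x| > 1$.

Stationarity: the roots of $\Phi_p(x) = 0$ satisfy $|x| > 1$.

Pure AR representation: $\Pi(L) Z_t = \dfrac{\Phi_p(L)}{\Theta_q(L)} Z_t = a_t$

Pure MA representation: $Z_t = \dfrac{\Theta_q(L)}{\Phi_p(L)} a_t = \Psi(L) a_t$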

ARMA(1,1)

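$$(1 - \phi L) Z_t = (1 - \theta L) a_t$$

stationarity → $|\phi| < 1$

invertibility → $|\theta| < 1$

pure AR form → $\Pi(L) Z_t = a_t$, with $\Pi(L) = 1 - \sum_{j \ge 1} \pi_j L^j$ and $\pi_j = (\phi - \theta)\theta^{j-1}$, $j \ge 1$

pure MA form → $Z_t = \Psi(L) a_t$, with $\psi_j = (\phi - \theta)\phi^{j-1}$, $j \ge 1$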
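These closed forms follow from long division of the lag polynomials. The sketch below assumes NumPy and uses arbitrary values $\phi = 0.8$, $\theta = 0.3$. It computes both weight sequences recursively and compares them with the formulas above. With the pure AR form written as $Z_t - \sum_{j \ge 1} \pi_j Z_{t-j} = a_t$, the coefficients of the lag polynomial itself are $-\pi_j$.

```python
import numpy as np

phi, theta, n = 0.8, 0.3, 10
j = np.arange(1, n)

# Closed forms for j >= 1
psi_closed = (phi - theta) * phi ** (j - 1)
pi_closed = (phi - theta) * theta ** (j - 1)

# Long division: (1 - phi L) Psi(L) = 1 - theta L  and  (1 - theta L) P(L) = 1 - phi L,
# where P(L) = (1 - phi L) / (1 - theta L) = 1 - pi_1 L - pi_2 L^2 - ...
psi = np.zeros(n)
p_coef = np.zeros(n)
psi[0] = p_coef[0] = 1.0
for k in range(1, n):
    psi[k] = phi * psi[k - 1] - (theta if k == 1 else 0.0)
    p_coef[k] = theta * p_coef[k - 1] - (phi if k == 1 else 0.0)

print(np.allclose(psi[1:], psi_closed), np.allclose(-p_coef[1:], pi_closed))  # True True
```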

ACF of ARMA(1,1)

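$$Z_t Z_{t-k} = \phi Z_{t-1} Z_{t-k} + a_t Z_{t-k} - \theta a_{t-1} Z_{t-k}$$

Taking expectations (for a zero-mean process):

$$\gamma_k = \phi \gamma_{k-1} + E(a_t Z_{t-k}) - \theta E(a_{t-1} Z_{t-k})$$

Using $E(a_t Z_t) = \sigma_a^2$ and $E(a_{t-1} Z_t) = (\phi - \theta)\sigma_a^2$, you get this system of equations:

$$\begin{aligned} k = 0: &\quad \gamma_0 = \phi \gamma_1 + \sigma_a^2 - \theta(\phi - \theta)\sigma_a^2 \\ k = 1: &\quad \gamma_1 = \phi \gamma_0 - \theta \sigma_a^2 \\ k \ge 2: &\quad \gamma_k = \phi \gamma_{k-1} \end{aligned}$$

ACF

$$\rho_k = \begin{cases} 1 & k = 0 \\ \dfrac{(\phi - \theta)(1 - \phi\theta)}{1 + \theta^2 - 2\phi\theta} & k = 1 \\ \phi \rho_{k-1} & k \ge 2 \end{cases}$$

PACF

Exponential decay, as for the MA(1), which is the special case $\phi = 0$ of the ARMA(1,1) (MA(1) ⊂ ARMA(1,1)).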
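As a cross-check, the closed-form ACF should match the ACF implied by the pure MA form, $\rho_k = \sum_i \psi_i \psi_{i+k} / \sum_i \psi_i^2$ with $\psi_0 = 1$ and $\psi_j = (\phi - \theta)\phi^{j-1}$. This sketch assumes NumPy and uses hypothetical function names. It computes both for one arbitrary parameter pair, truncating the $\psi$ sequence.

```python
import numpy as np


def arma11_acf(phi, theta, max_lag):
    """Closed-form ACF of (1 - phi L) Z_t = (1 - theta L) a_t (hypothetical helper)."""
    rho = [1.0, (phi - theta) * (1 - phi * theta) / (1 + theta**2 - 2 * phi * theta)]
    for _ in range(2, max_lag + 1):
        rho.append(phi * rho[-1])  # rho_k = phi * rho_{k-1} for k >= 2
    return np.array(rho[: max_lag + 1])


def acf_from_psi(phi, theta, max_lag, n_terms=500):
    """Same ACF via the truncated MA(inf) form psi_0 = 1, psi_j = (phi - theta) phi^(j-1)."""
    psi = np.concatenate(([1.0], (phi - theta) * phi ** np.arange(n_terms - 1)))
    gamma = [np.dot(psi[: n_terms - k], psi[k:]) for k in range(max_lag + 1)]
    return np.array(gamma) / gamma[0]


print(np.round(arma11_acf(0.8, 0.3, 5), 6))
print(np.round(acf_from_psi(0.8, 0.3, 5), 6))
```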

Summary

• Key concepts

– Wold decomposition

– ARMA as an approximation to the Wold decomposition

– MA processes: moments, invertibility

– AR processes: moments, stationarity and causality

– ARMA processes: moments, invertibility, causality and stationarity