Models for Serially Correlated Errors

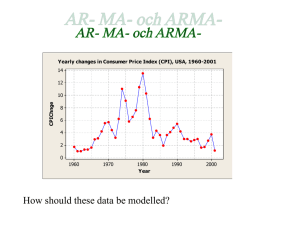

advertisement

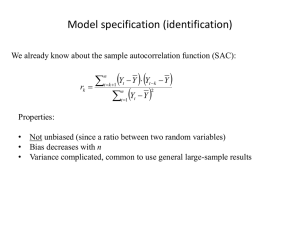

1 Models for Serially Correlated Errors Steve Carpenter, Zoology 535, Ecosystem Analysis Many ecological data are organized sequentially in time, and dynamic models are a major part of ecology. There is also a large body of statistical research concerned with time series. The purpose of this handout is to introduce one of the most important families of statistical models for time series, called Box-Jenkins models after the statisticians who did much of the early work on them. The dynamics of time series often represent a mixture of ecologically interesting interactions with noise that is serially correlated. That is, the variation that obscures the ecological pattern has its own structure, and this structure in the noise is very difficult to separate from the ecological signals of interest. It is necessary to model the noise explicitly to remove its effects and study the ecological patterns. In this exercise, we learn some of the common models for noise in time series data. Models for Univariate Time Series Definitions A series is an ordered sequence of observations in any dimension. We will be working with time series. Generally we will use t as a subscript to denote the position of an observation in a time series. For example yt is observation t of time series y. The backshift operator Bs shifts a time series backward s steps. For example, B yt = yt-1 [1] B2 yt = yt-2 [2] Bs yt = yt-s [3] Serial correlation is the correlation of a time series with itself. Serial correlation can be a source of bias in fitting models to time series. One way to correct this bias is to build parameters that account for the serial correlation into the overall model (Box and Tiao 1975). The noise model is the model for the serial correlation of a time series. Generally this series is the dependent variate, or response variate, in an ecological study. The intervention model is the model for proposed causal relationships. In an ecological study, the intervention model relates series of one or more independent variates, or input variates, to the response variate. Intervention analysis is the process of identifying, fitting, and evaluating combined intervention and noise models for a set of input and response series. Diagnostics for serial correlation include 2 Autocorrelation function or ACF: This function is represented as a plot of the autocorrelation as a function of lag. The autocorrelation is simply the ordinary Pearson product-moment correlation of a time series with itself at a specified lag. The autocorrelation at lag 0 is the correlation of the series with its unlagged self, or 1. The autocorrelation at lag 1 is the correlation of the series with itself lagged one step; the autocorrelation at lag 2 is the correlation of the series with itself lagged 2 steps; and so forth. Partial autocorrelation function or PACF: This function is also represented as a plot versus lag. The partial autocorrelation at a given lag is the autocorrelation that is not accounted for by autocorrelations at shorter lags. To calculate the partial autocorrelation at lag s, yt is first regressed against yt-1, yt-2, . . . yt-(s-1). Think of the partial autocorrelation as the autocorrelation which remains at lag s after the effects of shorter lags (1, 2, . . . s-1) have been removed by regression. It is useful to know that for a time series of n observations, the smallest significant autocorrelation is about 2/sqrt(n). There are many kinds of models for serially correlated errors. Most ecological time series can be fit by one of a few types of models. We will restrict our attention to the fairly small group of models that usually work for ecological studies. Moving average or MA models have the general form yt = (B) t [4] In equation 4, y is the observed series which is serially correlated. is a series of errors which are free of serial correlation; is sometimes called "white noise". (B) is a polynomial involving the MA parameters and the backshift operator B: (B) = 1 + 1 B + 2 B2 . . . [5] The parameters must lie between -1 and 1. The order of a MA model is the number of parameters. For a pure MA process, the ACF cuts off at a lag corresponding to the order of the process. An ACF that cuts off at lag k is significant at lags of k and below, and nonsignificant for lags greater than k. If the ACF cuts off at lag k, that is evidence that a MA model of order k, or MA(k) model, is appropriate. A MA(k) model has k parameters: 1, 2, . . . k. The MA(1) model is yt = (1 + B) t which is equivalent to [6] 3 yt = t + t-1 [7] The MA(2) model is yt = (1 + 1 B + 2 B2) t = t + 1 t-1 + 2 t-2 [8] Examples of ACFs and PACFs for data that fit these models are presented in the illustrations that follow page 4.. Autoregressive or AR models have the general form (B) yt = t [9] Here yt and t have the same meaning as in eq. 4. (B) is a polynomial involving the autoregressive parameters (-1 < < 1) and the backshift operator: (B) = 1 + 1 B + 2 B2 . . . [10] As was the case with equation 4, equation 10 is written with pluses by engineers and with minuses by statisticians. An AR(k) model, or AR model of order k, has k parameters. For a pure AR(k) process, the PACF cuts off at lag k. The AR(1) model is (1 + B) yt = t [11] yt = - yt-1 + t [12] or The AR(2) model is (1 + 1 B + 2 B2) yt = t [13] yt = - 1yt-1 - 2yt-2 + t [14] or Examples of ACFs and PACFs from data that fit these models are presented in the illustrations that follow page 4. Autoregressive Moving Average or ARMA models include both AR and MA terms: (B) yt = (B) t [15] 4 Since we are usually interested in an expression for y, ARMA models are often written yt = [(B) / (B)] t [16] The ARMA(1,1) model is (1 + B) yt = (1 + B) t [17] yt = - yt-1 + t + t-1 [18] or Examples of ACFs and PACFs from ARMA(1,1) models are presented in the illustrations that follow page 4. Model fitting is a sequential process of estimation and evaluation. First, diagnostics are calculated. On the basis of the diagnostics, one guesses a form for the noise model. The model is fit, and residuals are calculated. The diagnostics are then calculated for the residuals. If the model is appropriate, the ACF of the residuals will be nonsignificant. If the ACF of residuals is significant for one or more lags, one then tries a different model. Further tips for identifying the correct model will become evident in the exercises. A stationary series is one whose statistical distribution is constant throughout the series. In practice, we will call a series stationary if the mean is roughly constant (no obvious trends) and the variance is roughly constant (scatter around the mean is about the same). If a series is not stationary, model fitting may be very difficult. Transformations of the data can be used to make a series more stationary and improve model fitting. Since units are always arbitrary, there is no reason not to transform a series to units that improve model performance. Log transformations often help stabilize the variance of biological data. For more about choosing transformations, see Box et al. (1978). Differencing transforms a series to first differences. The first difference of yt is (1-B)yt. Differencing often makes series stationary. Models fit to differenced series are called Autoregressive Integrated Moving Average or ARIMA models. Backtransformation from first differences to the original series is straightforward. If one knows any value on the original series, the entire original series can be calculated from the first differences. 5 Diagnostics for some simple autoregressive processes. 6 Diagnostics from some simple Moving Average processes. 7 Diagnostics for some simple Autoregressive-Moving Average processes. 8 Correcting for Serially Correlated Errors in Nonlinear Model Fits A general model for a dynamic ecological process is Yt+1 = F(Yt, Xt, ) + (19) where Y is the dynamic variable (or variables), X is a forcing variable (or variables), is a vector of unknown parameters, and is a vector of errors. The function F may be nonlinear. There may be multiple predictor and response variables (i.e. X and Y may be matrices). While we don't know the parameters , we are free to guess parameter values and calculate how far off our predictions are. The residuals, E, are estimates of : E = Yt+1 - F(Yt, Xt, ) (20) Optimal parameter estimates are found by minimizing some function of E with respect to the parameters. In some cases, we may be concerned about serial correlations in E. For example, serial correlations would bias parameter estimates. Suppose it is important to correct for this bias? Some ARMA models that are often useful for fixing serial correlations in ecological modeling are the autoregressive lag 1 model, symbolized AR(1); the moving average lag 1 model, symbolized MA(1); and the autoregressive moving average lag 1 model, symbolized ARMA(1,1). We will symbolize the serially correlated errors as Z to distinguish them from whitenoise errors . One or more additional parameters must be fit to remove the serial correlation from Z, yielding the uncorrelated errors . Our model to be estimated becomes Yt+1 = Ft + Zt (21) where Ft = F(Yt, Xt, ); Y is the time series of interest, possibly measured with error; X are input variables, possibly measured with error; Z is the process error with no time series correction; and are parameters to be estimated. For working with time series models, it is convenient to define the backshift operator B as B (Zt) = Zt-1 (22) In other words, the backshift operator shifts the time series back one step. AR(1) case: By definition, the AR(1) model is (1 - pB)Zt = t, or Zt = pZt-1 + t (23) 9 Therefore the full model is Yt+1 = Ft + pZt-1 + t (24) and the expression for the residuals used in equation 3 is Et = Yt+1 - Ft - pZt-1 (25) where Zt-1 = Yt - Ft-1 MA(1) case: By definition, the MA(1) model is Zt = (1 - qB)t, or Zt = t - qt-1 (26) Therefore the full model is Yt+1 = Ft + t - qt-1 (27) and the expression for the residuals used in equation 3 is Et = Yt+1 - (Ft - qt-1) (28) where t-1 = Yt - Ft-1 + qt-2 ARMA(1,1) case: By definition, the ARMA(1,1) model is (1 - pB)Zt = (1 - qB)t, or Zt = pZt-1 + t - qt-1 (29) Therefore the full model is Yt+1 = Ft + pZt-1 + t - qt-1 (30) and the expression for the residuals used in equation 3 is Et = Yt+1 - (Ft + pZt-1 - qt-1) (31) Zt-1 = Yt - Ft-1 (32) t-1 = Yt - Ft-1 - pZt-2 + qt-2 (33) where: or t-1 = Zt-1 - pZt-2 + qt-2 (34) 10 Intervention Analysis Intervention analysis is a special case of multivariate time series analysis. It is used to detect effects of an independent variable on a dependent variable in a time series. In some ways it is a time series analog of regression. Intervention analysis can be used to measure the effects of a driver on a response variable, or to measure the effects of disturbances (either experimental or inadvertent) in ecological time series. Definitions Intervention Models link one or more input (or independent) variates to a response (or dependent) variate (Box and Tiao 1975, Wei 1990). Intervention models are a form of transfer function. For one input variate x and one response variate y, the general form of an intervention model is yt = [(B) / (B)] xt-s + N(t) [35] Here N(t) is an appropriate noise model as described in Exercise 11. The delay between a change in x and a response in y is s. The intervention model has both a numerator polynomial and a denominator polynomial. The numerator polynomial is (B) = 0 + 1B + 2B2 + . . . [36] The numerator parameters determine the magnitude of the effect of x on y. These parameters can be any real number; they are not constrained to lie between -1 and +1. The numerator parameters are usually of greatest interest in ecological applications. The denominator polynomial is (B) = 1 + 1B + 2B2 + . . . [37] where -1 < < 1. The denominator determines the shape of the response. Graphs of some common intervention models are shown on the figure that follows page 12. Cross-correlations are used to diagnose intervention models. The cross-correlation function (CCF) is a plot of the correlation of x and y versus lag. The CCF can be misleading if x or y are serially correlated. Thus x and y are usually fit to noise models (or "filtered") before calculating the CCF. Matlab uses a polynomial filter to calculate the CCF, and then estimates parameters of the intervention model and the noise model jointly. SAS first fits a noise model, then calculates the CCF, then fits the intervention model for the filtered series. 11 The lag of the first significant CCF term indicates s, the shift of the intervention effect. The minimum significant correlation is about 2/sqrt(n) where n is the number of observations in the time series. If your intervention model has captured all the information about y that is available in x, then the residuals of the model will have no significant cross correlations with x. Fitting intervention models follows the same sequential approach used to fit noise models. First, one examines diagnostics and guesses an initial form of the model. This initial model is fit and evaluated using diagnostics for the residuals, parameter standard deviations, parameter correlations, and prediction error standard deviation. An improved model form may be considered, fit and evaluated. The process may continue through several iterations until a satisfactory model is found. As in the fitting of noise models, data transformations may be needed to detrend the data or stabilize the variance. The intervention parameters are usually a focus of investigation because they measure the effect of x on y. These have two different interpretations. The frequentist interpretation is that the parameter is a fixed constant which is estimated with error. In this case, the parameter standard deviation can be used to form a 95% confidence interval (which will be approximately equal to the parameter estimate plus and minus twice the standard deviation). This confidence interval will contain the true (but unknown) parameter in 95% of replicate experiments. Since ecosystem experiments are often unreplicable in principle, this interpretation can be problematic. The Bayesian interpretation is that the parameter is a random variate. We can measure its distribution, but it does not make sense to view it as a fixed number. Every experimental trial is a unique sample from this distribution, so we need not envision identical replicates. The derivation of the distribution of the parameters is explained in Box and Tiao (1975). This derivation assumes that all values of the parameters are equally likely before running the experiment. For a particular intervention parameter, the distribution we seek is called the marginal posterior distribution. This distribution is a special case of the t distribution explained in Box and Tiao (1973, pp. 116-118) and Pole et al. (pp. 75-80). The formula for the distribution of is P(=x) = a-(f+1)/2 b / c [38] where f is the degrees of freedom (number of observations minus number of parameters estimated), * is the estimate of , s is the standard deviation of the estimate of , and a = f + [(x - *)2/s2] [39] b = [(f + 1)/2] ff/2 [40] 12 c = (f/2) ( s2)1/2 [41] The gamma function (n) = 0 e-x xn-1 dx. REFERENCES Box, G.E.P., G.M. Jenkins and G.C. Reinsel. 1994. Time Series Analysis: Forecasting and Control. Prentice-Hall, Englewood Cliffs, NJ. Box, G.E.P. and G.C. Tiao. 1975. Intervention analysis with application to economic and environmental problems. J. Amer. Stat. Assoc. 70: 70-79. Carpenter, S.R. 1993. Analysis of the ecosystem experiments. Chapter 3 in S.R. Carpenter and J.F. Kitchell (eds), The Trophic Cascade in Lakes. Cambridge Univ. Press, London. Carpenter, S.R., K.L. Cottingham, and C.A. Stow. 1994. Fitting predator-prey models to time series with observation errors. Ecology 75: 1254-1264. Ljung, L. 1987. System Identification: Theory for the User. Prentice-Hall, Englewood Cliffs, NJ. Wei, W.W.S. Time Series Analysis. Addison-Wesley, Redwood City, California. 13