8 Regression with auto-correlated disturbances


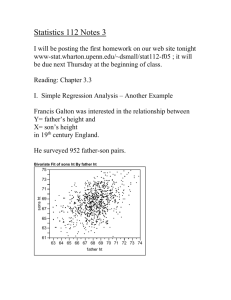

(8.1) Introduction
(8.2) Properties of least squares estimators when the disturbances are auto-correlated
(8.3) The Durbin-Watson test for auto-correlation
(8.4) Generalized least squares

8.1 Introduction

In the last section we investigated the consequences for the least squares estimator when the disturbances $\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_n$ have varying variances but otherwise satisfy the classical standard conditions. Now we consider a problem often encountered when analysing time series data, namely that the random disturbances are correlated.

We already know that the disturbances capture the numerous factors which influence the endogenous variable but which, for various reasons, have not been explicitly specified in the regression equation. Some of these factors may show a definite temporal pattern, that is to say, they are correlated over time. Since the disturbances summarize these factors, in many situations the disturbances in the regression equation will themselves be correlated. This breaches one of the classical assumptions of least squares regression, so we expect that some of the properties we have learned the least squares estimator to have will not hold in this situation.

8.2 Properties of least squares estimators when the disturbances are auto-correlated

Since our concern is the consequences of auto-correlated disturbances, we may as well consider a regression with only one independent variable. That is to say, we specify the regression equation

$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t, \qquad t = 1, 2, 3, \ldots, T \tag{8.2.1}$$

where $T$ denotes the size of the sample. For the auto-correlated disturbances $\varepsilon_t$ there are two popular specifications in econometrics:

$$\varepsilon_t = \rho\,\varepsilon_{t-1} + u_t, \qquad |\rho| < 1 \tag{8.2.2}$$

$$\varepsilon_t = \alpha_1 u_t + \alpha_2 u_{t-1} \tag{8.2.3}$$

In case (8.2.2) the random process $\varepsilon_t$ is said to be a first-order auto-regressive process, usually denoted an AR(1) process.
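The second process $\varepsilon_t$ is called a moving average process, and (8.2.3) denotes an MA(1) process. The number 1 in these designations tells us that the processes $\varepsilon_t$ are specified using one lag only, namely $t-1$. The process $u_t$ appearing in these specifications is assumed to be a purely random (white noise) process with mean zero and variance $\sigma_u^2$. In the present course we always assume that the disturbances $\varepsilon_t$ are AR(1) processes.

The condition $|\rho| < 1$ is important. It means that the process specified by (8.2.2) is stationary, which implies that the mean of $\varepsilon_t$ is a constant independent of time $t$, and that the covariance of $\varepsilon_t$ and $\varepsilon_{t-s}$ depends only on the time lag $s$ and not on the time $t$. These properties are easily derived by using the recursion (8.2.2) to solve for $\varepsilon_t$ in terms of the white noise process, which gives $\varepsilon_t = \sum_{j=0}^{\infty} \rho^j u_{t-j}$. However, the mean and variance of $\varepsilon_t$ and the covariances of $\varepsilon_t$ and $\varepsilon_{t-s}$ can also be derived directly from specification (8.2.2).

Using the standard formula for calculating the mean, we obtain from (8.2.2) that

$$E\varepsilon_t = \rho\,E\varepsilon_{t-1} + Eu_t \tag{8.2.4}$$

Since $\varepsilon_t$ is a stationary process, it follows from what we have said above that $E\varepsilon_t = E\varepsilon_{t-1} = \mu$, say, so that (8.2.4) reduces to

$$\mu = \rho\mu + 0, \qquad \text{since } Eu_t = 0 \tag{8.2.5}$$

Because $|\rho| < 1$, it follows from (8.2.5) that $\mu = E\varepsilon_t = 0$.

The variance of $\varepsilon_t$ is derived in a similar way. From the specification (8.2.2) we obtain

$$\operatorname{Var}\varepsilon_t = E\varepsilon_t^2 = \rho^2 E\varepsilon_{t-1}^2 + Eu_t^2 + 2\rho\,E(\varepsilon_{t-1}u_t) \tag{8.2.6}$$

Since $\varepsilon_{t-1}$ and $u_t$ are uncorrelated (why?), the last term of (8.2.6) vanishes. Writing $\sigma_\varepsilon^2$ for the variance of $\varepsilon_t$, (8.2.6) reduces to

$$\sigma_\varepsilon^2 = \rho^2\sigma_\varepsilon^2 + \sigma_u^2 \tag{8.2.7}$$

implying that

$$\sigma_\varepsilon^2 = \frac{\sigma_u^2}{1-\rho^2} \tag{8.2.8}$$

To calculate the covariance between $\varepsilon_t$ and $\varepsilon_{t-1}$, and generally between $\varepsilon_t$ and $\varepsilon_{t-s}$, we again use the recursion (8.2.2). Obviously we have

$$\operatorname{Cov}(\varepsilon_t, \varepsilon_{t-1}) = \gamma(1) = \rho\operatorname{Var}(\varepsilon_{t-1}) = \rho\,\sigma_\varepsilon^2 \tag{8.2.9}$$

$$\operatorname{Cov}(\varepsilon_t, \varepsilon_{t-2}) = \gamma(2) = \rho^2\sigma_\varepsilon^2 \tag{8.2.10}$$

Proceeding in this way we find that generally

$$\operatorname{Cov}(\varepsilon_t, \varepsilon_{t-s}) = \gamma(s) = \rho^s\sigma_\varepsilon^2 \tag{8.2.11}$$

In time-series analysis the covariances calculated above are often called auto-covariances.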
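The moments of the AR(1) process can be checked numerically. The following is a minimal sketch, assuming Gaussian white noise and illustrative parameter values ($\rho = 0.6$, $\sigma_u = 1$) that are not taken from the text; the function names are hypothetical. It compares the theoretical auto-covariances $\rho^s \sigma_u^2 / (1-\rho^2)$ with sample auto-covariances from a long simulated series.

```python
import numpy as np

def ar1_autocovariances(rho, sigma_u, max_lag):
    """Theoretical gamma(s) = rho**s * sigma_u**2 / (1 - rho**2), s = 0..max_lag."""
    if abs(rho) >= 1:
        raise ValueError("stationarity requires |rho| < 1")
    var_eps = sigma_u**2 / (1.0 - rho**2)
    return var_eps * rho ** np.arange(max_lag + 1)

def simulate_ar1(rho, sigma_u, T, rng, burn_in=500):
    """Simulate eps_t = rho * eps_{t-1} + u_t with Gaussian white noise u_t.

    The first burn_in values are discarded so that the arbitrary start
    value eps_0 = 0 has a negligible effect (an assumption of this sketch).
    """
    u = rng.normal(0.0, sigma_u, size=T + burn_in)
    eps = np.zeros(T + burn_in)
    for t in range(1, T + burn_in):
        eps[t] = rho * eps[t - 1] + u[t]
    return eps[burn_in:]

def sample_autocovariances(eps, max_lag):
    """Sample auto-covariances at lags 0..max_lag (divisor T)."""
    e = eps - eps.mean()
    T = len(e)
    return np.array([np.dot(e[s:], e[:T - s]) / T for s in range(max_lag + 1)])

rng = np.random.default_rng(0)          # fixed seed, illustrative only
rho, sigma_u = 0.6, 1.0                 # illustrative values, not from the text
eps = simulate_ar1(rho, sigma_u, T=200_000, rng=rng)

theory = ar1_autocovariances(rho, sigma_u, max_lag=3)
sample = sample_autocovariances(eps, max_lag=3)
print("theoretical:", theory)
print("sample:     ", sample)
```

With a long series the two rows should agree closely; the agreement deteriorates as $|\rho|$ approaches 1 or the sample becomes short.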
If we normalize the auto-covariances $\gamma(s) = \operatorname{Cov}(\varepsilon_t, \varepsilon_{t-s})$ by the variance $\gamma(0)$ we obtain the auto-correlations $\rho(s)$, so that

$$\rho(s) = \frac{\gamma(s)}{\gamma(0)} = \rho^s \tag{8.2.12}$$

The interesting question is now whether auto-correlation in the disturbances $\varepsilon_t$ has any consequences for the least squares estimators of the regression parameters $\beta_0$ and $\beta_1$ appearing in regression (8.2.1). We already know that the OLS estimators of these parameters are given by

$$\hat\beta_0 = \bar Y - \hat\beta_1 \bar X \tag{8.2.13}$$

$$\hat\beta_1 = \frac{\sum Y_t (X_t - \bar X)}{\sum (X_t - \bar X)^2} = \beta_1 + \frac{\sum \varepsilon_t (X_t - \bar X)}{\sum (X_t - \bar X)^2} \tag{8.2.14}$$

Using the independence of $\varepsilon_t$ and the exogenous variable $X_t$, we readily derive that

$$E\hat\beta_1 = \beta_1 \quad \text{and} \quad E\hat\beta_0 = \beta_0 \tag{8.2.15}$$

From (8.2.14) we also find that

$$E(\hat\beta_1 - \beta_1)^2 = \operatorname{Var}\hat\beta_1 = \frac{\sigma_\varepsilon^2}{\sum x_t^2}\left[1 + 2\rho\,\frac{\sum x_t x_{t+1}}{\sum x_t^2} + \cdots + 2\rho^{T-1}\,\frac{x_1 x_T}{\sum x_t^2}\right] \tag{8.2.16}$$

where $x_t = X_t - \bar X$ and $\sigma_\varepsilon^2$ is the variance of $\varepsilon_t$.

When the number of observations $T$ grows, the sum $\sum_{t=1}^{T} x_t^2$ becomes infinitely large, which implies that the right-hand side of (8.2.16) tends to zero. Thus the estimator $\hat\beta_1$ converges to $\beta_1$ in probability, and we say that $\hat\beta_1$ is a consistent estimator. By similar reasoning we can show that $\hat\beta_0$ is also a consistent estimator. Combining these facts with (8.2.15), we conclude that the OLS estimators $\hat\beta_0$ and $\hat\beta_1$ are unbiased and consistent even though the disturbance process $\varepsilon_t$ is auto-correlated.

So what goes wrong in this situation? We observe from (8.2.16) that auto-correlation changes the expression for the variance of $\hat\beta_1$. Note that when the error process $\varepsilon_t$ is purely random, $\rho = 0$ and (8.2.16) reduces to the standard expression for the variance of $\hat\beta_1$. From this we understand that the standard $t$ and $F$ tests and the standard procedures for calculating confidence intervals are not valid when the disturbances are auto-correlated.

8.3 The Durbin-Watson test for auto-correlation

We noted above that auto-correlation in the disturbance process can take several patterns. The auto-regressive and moving average patterns are perhaps the most common, but the error process can also be a combination of these two forms.
Since auto-correlation can cause serious problems for applied statistical analyses, we would like to have reliable tests designed to expose it. A finite-sample test derived for this purpose is the so-called Durbin-Watson test. The original D-W test is constructed to detect a simple auto-regressive disturbance process, i.e. to detect whether the error process $\varepsilon_t$ has an AR(1) form. The specific model under consideration is

$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t \tag{8.3.1}$$

$$\varepsilon_t = \rho\,\varepsilon_{t-1} + u_t, \qquad t = 1, 2, 3, \ldots, T, \qquad |\rho| < 1 \tag{8.3.2}$$

As the null hypothesis the D-W test uses the hypothesis of no auto-correlation, $H_0: \rho = 0$, and in addition that the purely random error term $u_t$ is normally distributed, $u_t \sim N(0, \sigma_u^2)$. This hypothesis can be tested against the alternatives $H_1: \rho \neq 0$, $H_2: \rho < 0$, $H_3: \rho > 0$.

The construction of the D-W test is simple and very intuitive. One starts by regressing $Y_t$ on $X_t$ as indicated by (8.3.1). Having obtained the estimates $\hat\beta_0$ and $\hat\beta_1$, we calculate the residuals

$$\hat\varepsilon_t = Y_t - \hat Y_t = Y_t - \hat\beta_0 - \hat\beta_1 X_t \tag{8.3.3}$$

The D-W test is then based on the test statistic

$$d = \frac{\sum_{t=2}^{T} (\hat\varepsilon_t - \hat\varepsilon_{t-1})^2}{\sum_{t=1}^{T} \hat\varepsilon_t^2} \tag{8.3.4}$$

Expanding the square in the numerator, we readily see that approximately

$$d \approx 2(1 - \hat\rho) \tag{8.3.5}$$

where $\hat\rho$ is the OLS estimate of $\rho$ obtained from the 'regression' (8.3.2), that is

$$\hat\rho = \frac{\sum_{t=2}^{T} \hat\varepsilon_t \hat\varepsilon_{t-1}}{\sum_{t=2}^{T} \hat\varepsilon_{t-1}^2} \tag{8.3.6}$$

We observe that $\hat\rho$ is almost equal to the empirical correlation coefficient $r$ between $\hat\varepsilon_t$ and $\hat\varepsilon_{t-1}$, since

$$r = \frac{\sum_{t=2}^{T} \hat\varepsilon_t \hat\varepsilon_{t-1}}{\sqrt{\left(\sum_{t=2}^{T} \hat\varepsilon_t^2\right)\left(\sum_{t=2}^{T} \hat\varepsilon_{t-1}^2\right)}} \tag{8.3.7}$$

Hence, we can also write

$$d \approx 2(1 - r) \tag{8.3.7'}$$

The value of the test statistic $d$ depends on the observations of the exogenous variable $X_t$ and the values of $\varepsilon_t$.
However, Durbin and Watson showed that, for given values of $\varepsilon_t$, $d$ is necessarily contained between two limits $d_L$ and $d_U$ which are independent of the values of $X_t$ and are functions only of the number of observations $T$ and the number of exogenous variables $k$, so that

$$d_L \le d \le d_U \tag{8.3.8}$$

The limits $d_L$ and $d_U$ are random variables whose distributions can be determined for each pair $(k, T)$ under given assumptions on the distribution of $\varepsilon_t$. We noted above that under the null hypothesis $\varepsilon_t$ is normally distributed with mean 0 and variance $\sigma_u^2$. The distributions of $d_L$ and $d_U$ under the null hypothesis have been tabulated by Durbin and Watson.

Since the correlation coefficient $r$ is restricted to the interval $[-1, 1]$, we observe from the approximation $d \approx 2(1 - r)$ that $d$ is restricted (approximately) to the interval $[0, 4]$. This fact provides useful guidelines for when to reject the null hypothesis when testing against the various alternative hypotheses.

Suppose we wish to test the null hypothesis $H_0: \rho = 0$ against $H_3: \rho > 0$. Since $\hat\rho$ is approximately equal to $r$, it follows from $d \approx 2(1 - r)$ that we have every reason to doubt the null hypothesis if the calculated value of the test statistic $d$ is in the neighbourhood of zero. But whether or not to reject the null hypothesis has to be decided on the basis of the distributions of the two bounds $d_L$ and $d_U$. The usual test procedure is first to choose the level of significance $\alpha$, and then to determine the relevant fractile values $d_1$ and $d_2$ from the distributions of the two bounds so that $P(d_L < d_1) = \alpha$ and $P(d_U < d_2) = \alpha$. With $\hat d$ denoting the calculated value of $d$, the decision process is now:

If $\hat d < d_1$, reject $H_0$.
If $d_1 \le \hat d \le d_2$, we can neither reject nor accept $H_0$; the statistical material is indeterminate.
If $\hat d > d_2$, do not reject $H_0$.

In a similar way we can test $H_0: \rho = 0$ against $H_2: \rho < 0$, and finally against $H_1: \rho \neq 0$. Most textbooks give tables for the distributions of the two bounds, for example Hill et al., Table 5.
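The computation of the test statistic can be sketched in a few lines. This is a minimal illustration under stated assumptions: the data are simulated with illustrative values ($\beta_0 = 1$, $\beta_1 = 2$, $\rho = 0.7$) that are not from the text, the function names are hypothetical, and the critical values `d1` and `d2` passed to the decision rule must be looked up in a Durbin-Watson table by the user; none are supplied here.

```python
import numpy as np

def ols_residuals(y, x):
    """Residuals from regressing y on a constant and x, as in (8.3.3)."""
    X = np.column_stack([np.ones(len(x)), x])
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta_hat

def durbin_watson(resid):
    """Test statistic d of (8.3.4)."""
    e = np.asarray(resid, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

def rho_hat(resid):
    """No-intercept regression of e_t on e_{t-1}, as in (8.3.6)."""
    e = np.asarray(resid, dtype=float)
    return np.dot(e[1:], e[:-1]) / np.dot(e[:-1], e[:-1])

def dw_decision(d, d1, d2):
    """Decision rule for H0: rho = 0 against rho > 0.

    d1 and d2 are the fractiles of the lower and upper bounds; they are
    inputs taken from a table, not computed by this sketch.
    """
    if d < d1:
        return "reject H0"
    if d > d2:
        return "do not reject H0"
    return "indeterminate"

# Simulated example (illustrative values, not from the text).
rng = np.random.default_rng(1)
T, rho = 500, 0.7
x = rng.normal(size=T)
u = rng.normal(size=T)
eps = np.zeros(T)                 # eps_0 = 0 is an arbitrary start value
for t in range(1, T):
    eps[t] = rho * eps[t - 1] + u[t]
y = 1.0 + 2.0 * x + eps

e = ols_residuals(y, x)
d = durbin_watson(e)
r_hat = rho_hat(e)
print("d =", d, " 2(1 - rho_hat) =", 2 * (1 - r_hat))
```

With positively auto-correlated disturbances the printed $d$ should lie well below 2, and the two printed numbers should nearly coincide, in line with (8.3.5).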
8.4 Generalized least squares

When the random disturbances $\varepsilon_t$ follow an AR(1) process, we have seen that the disturbances are correlated ((8.2.9) to (8.2.11)). This is a breach of the classical conditions underpinning the ordinary least squares method. Although OLS gives us unbiased and consistent estimators when the disturbances are auto-correlated, it is possible to find better, more efficient estimation methods. Generalized least squares is such a method, which we illustrate in this section by applying it to the regression model specified by (8.2.1) and (8.2.2).

If we shift the time index one period backwards and multiply by $\rho$, the regression (8.2.1) is transformed to

$$\rho Y_{t-1} = \rho\beta_0 + \rho\beta_1 X_{t-1} + \rho\,\varepsilon_{t-1} \tag{8.4.1}$$

Subtracting (8.4.1) from (8.2.1) and using $\varepsilon_t - \rho\,\varepsilon_{t-1} = u_t$, we obtain

$$Y_t - \rho Y_{t-1} = (1-\rho)\beta_0 + \beta_1 (X_t - \rho X_{t-1}) + u_t \tag{8.4.2}$$

If we define the variables

$$\tilde Y_t = Y_t - \rho Y_{t-1}, \qquad \tilde X_t = X_t - \rho X_{t-1} \tag{8.4.3}$$

we can write (8.4.2) as

$$\tilde Y_t = (1-\rho)\beta_0 + \beta_1 \tilde X_t + u_t \tag{8.4.4}$$

If we know $\rho$, then $\tilde Y_t$ and $\tilde X_t$ are observable and (8.4.4) turns out to be an ordinary linear regression with a disturbance $u_t$ satisfying all the classical conditions. However, it follows from the definition of $\tilde Y_t$ and $\tilde X_t$ that the time index has to run from 2 up to $T$, so by restricting our analysis to (8.4.4) we in effect lose one observation and hence some efficiency in the estimation. But the 'good' situation is easily recovered. For the first observation the regression satisfies

$$Y_1 = \beta_0 + \beta_1 X_1 + \varepsilon_1 \tag{8.4.5}$$

The variance of $\varepsilon_1$ is $\sigma_u^2 / (1-\rho^2)$, where, of course, $\sigma_u^2$ is the variance of $u_t$. Hence, if we multiply the first observation by $\sqrt{1-\rho^2}$, that is

$$\sqrt{1-\rho^2}\,Y_1 = \sqrt{1-\rho^2}\,\beta_0 + \beta_1 \sqrt{1-\rho^2}\,X_1 + \sqrt{1-\rho^2}\,\varepsilon_1 \tag{8.4.6}$$

we observe that the variance of the random error appearing in (8.4.6) is simply $\sigma_u^2$, that is, equal to the variance of $u_t$. So if we supplement regression (8.4.4) with (8.4.6) as the first observation, we get an extended regression which uses all $T$ observations and has independent and homoskedastic disturbances.
We observe that this extended regression now has two explanatory variables and no separate intercept term. The first, with coefficient $\beta_0$, is the vector $\tilde I = \left(\sqrt{1-\rho^2},\ 1-\rho,\ 1-\rho,\ \ldots,\ 1-\rho\right)$. The second, with coefficient $\beta_1$, is of course $\tilde X = \left(\sqrt{1-\rho^2}\,X_1,\ \tilde X_2,\ \tilde X_3,\ \ldots,\ \tilde X_T\right)$, where $\tilde X_t = X_t - \rho X_{t-1}$.

The method we have described above is in effect an application of generalized least squares. Generalized least squares will always involve some kind of transformation of the observable variables.

Above we have tacitly assumed that the parameter $\rho$ is known; usually it is not. When $\rho$ is not known it has to be estimated in some way in order to be able to use the transformations above. An approach often used is to start by running the regression (8.2.1) and then calculate the residuals

$$\hat\varepsilon_t = Y_t - \hat Y_t = Y_t - \hat\beta_0 - \hat\beta_1 X_t \tag{8.4.7}$$

Then one can estimate $\rho$ by running the regression of $\hat\varepsilon_t$ on $\hat\varepsilon_{t-1}$ (of course without an intercept term). Having obtained an estimate $\hat\rho$ of $\rho$, one proceeds as above.
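The two-step procedure can be sketched as follows. This is a minimal illustration, not a definitive implementation: the function names are hypothetical, the simulated data use illustrative values ($\beta_0 = 1$, $\beta_1 = 2$, $\rho = 0.7$) that are not from the text, and only a single estimation pass for $\rho$ is made. The transformation that keeps the first observation, rescaled by $\sqrt{1-\rho^2}$, is commonly known as the Prais-Winsten transformation, a name the text itself does not use.

```python
import numpy as np

def ols(y, X):
    """Least squares coefficients for y = X b + error."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def estimate_rho(y, x):
    """Regress OLS residuals on their own lag, without an intercept."""
    X = np.column_stack([np.ones(len(x)), x])
    e = y - X @ ols(y, X)
    return np.dot(e[1:], e[:-1]) / np.dot(e[:-1], e[:-1])

def gls_transform(y, x, rho):
    """Build the extended regression: (8.4.6) for t = 1, (8.4.4) for t >= 2."""
    w = np.sqrt(1.0 - rho**2)
    y_t = np.concatenate([[w * y[0]], y[1:] - rho * y[:-1]])
    x_t = np.concatenate([[w * x[0]], x[1:] - rho * x[:-1]])
    i_t = np.concatenate([[w], np.full(len(y) - 1, 1.0 - rho)])
    # Columns are the two explanatory variables; no extra constant is added.
    return y_t, np.column_stack([i_t, x_t])

def feasible_gls(y, x):
    """Estimate rho from OLS residuals, then run OLS on transformed data."""
    rho = estimate_rho(y, x)
    y_t, X_t = gls_transform(y, x, rho)
    return ols(y_t, X_t), rho

# Simulated example (illustrative values, not from the text).
rng = np.random.default_rng(2)
T, rho_true = 2000, 0.7
x = rng.normal(size=T)
u = rng.normal(size=T)
eps = np.zeros(T)                 # eps_0 = 0 is an arbitrary start value
for t in range(1, T):
    eps[t] = rho_true * eps[t - 1] + u[t]
y = 1.0 + 2.0 * x + eps

beta_gls, rho_est = feasible_gls(y, x)
print("rho_hat =", rho_est)
print("beta_0, beta_1 =", beta_gls)
```

Because the first column of the transformed design already carries $\beta_0$, the coefficients returned are direct estimates of $\beta_0$ and $\beta_1$; no rescaling by $1-\rho$ is needed afterwards.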