REGRESSION ANALYSIS

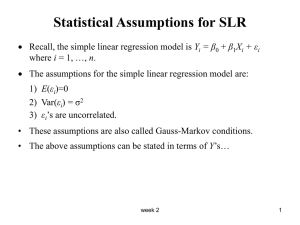

GENERAL ASPECTS

When analysing quantitative variables, it is often useful to find a function that summarizes the link between two or more variables.

If a causal relationship is assumed, that is, if there is a dependent variable Y and one or more independent variables X, we define

$$Y = f(X; \beta) + u$$

as the regression equation of Y on X, where f is a generic function of X and of the parameters $\beta$. The function f can be linear or non-linear.

The error component u arises from:
- measurement errors on Y (X is not stochastic);
- inadequate specification of the function (not all the variables influencing Y are considered).

In an inferential context u is a random variable, and as a consequence Y is a random variable as well.

If f is linear:
- $Y = a + bX + u$ is the simple linear regression model, with $\beta = (a; b)$. SEE FIGURES 1 AND 2
- in the multiple linear regression model, $\beta$ is a vector.

FUNCTIONAL FORM

The objective is to find the function $f(X; \beta)$ whose theoretical values (estimates) $y_i^* = f(x_i; \beta^*)$ are nearest to the empirical (actually observed) $y_i$, where $\beta^*$ is the estimate of $\beta$.

$f(X; \beta)$ can assume many forms. Here "linear" means linear in the parameters, not necessarily in X:
- $f(X; \beta) = e^{\beta_1 X}$: non-linear
- $f(X; \beta) = \log(\beta_1 X) + \log(\beta_2 X)$: non-linear (semi-log)
- $f(X; \beta) = \beta_1 \log(X) + \beta_2 X^2$: linear
- $f(X; \beta) = \beta_1 + \beta_2 X$: linear

If the relationship between Y and X does not depend on u, that is $Y = f(X; \beta)$, we have an exact (descriptive) relationship.

PARAMETER ESTIMATION: ASSUMPTIONS

The estimation procedure depends on the assumptions we make about the error terms $u_i$. We obtain desirable properties and a very simple method under the following assumptions.

1. Zero mean: $E(u_i) = 0$ for all i.
2. Common variance (homoskedasticity): $V(u_i) = \sigma^2$ for all i.
3. Independence: $u_i$ and $u_j$ are independent for any $i \neq j$.
4. Independence of $x_j$: $x_j$ and $u_i$ are independent for all i and j.

SEE FIGURE 3

Under these assumptions, it can be shown that the least-squares estimators of a and b are BLUE (best linear unbiased estimators): among all linear unbiased estimators, they have minimum variance.
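As an informal illustration of the unbiasedness claim, the following sketch simulates many samples from a simple linear model whose errors have zero mean, common variance and are independent, but are deliberately not normal (they are uniform). The true values `TRUE_A`, `TRUE_B`, `SIGMA`, the grid of x values and the helper `least_squares` are assumptions chosen only for this example; they do not come from the slides. The simulated variance of the slope is compared with the standard result $\sigma^2 / \sum (x_i - \bar{x})^2$.

```python
import random
import statistics


def least_squares(xs, ys):
    """Return the least-squares estimates (a, b) for y = a + b*x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    b = s_xy / s_xx
    a = y_bar - b * x_bar
    return a, b


random.seed(0)  # fixed seed so the run is reproducible

# Hypothetical "true" model, chosen only for illustration.
TRUE_A, TRUE_B, SIGMA = 2.0, 0.5, 1.0
xs = [float(i) for i in range(1, 21)]  # fixed (non-stochastic) x values

# Uniform errors on [-h, h] have mean 0 and variance h^2 / 3 = SIGMA^2,
# so assumptions 1-4 hold while normality does not.
half_width = SIGMA * 3 ** 0.5

b_estimates = []
for _ in range(2000):
    ys = [TRUE_A + TRUE_B * x + random.uniform(-half_width, half_width)
          for x in xs]
    _, b = least_squares(xs, ys)
    b_estimates.append(b)

mean_b = statistics.mean(b_estimates)
var_b = statistics.pvariance(b_estimates)

x_bar = statistics.mean(xs)
theoretical_var_b = SIGMA ** 2 / sum((x - x_bar) ** 2 for x in xs)

print("average slope estimate:", mean_b, "(true slope:", TRUE_B, ")")
print("simulated variance of slope:", var_b)
print("theoretical variance of slope:", theoretical_var_b)
```

The average of the simulated slopes should be close to the true slope, and their variance close to the theoretical one. A simulation of this kind only illustrates the result; it is not a proof.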
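For a proof that the least-squares estimators are BLUE, see Maddala (1986), Econometrics, appendix B.

5. Normality: the $u_i$ are normally distributed. In conjunction with assumptions 1, 2 and 3 this implies that $u_i \sim N(0, \sigma^2)$.

NOTE: This assumption is not required for the optimality of the estimators, but it is needed to make confidence-interval statements and tests of significance.

PARAMETER ESTIMATION: METHOD OF LEAST SQUARES

Let $f(X; \beta) = a + bX$, with $\beta = (a; b)$. We need to find $a^*$ and $b^*$ such that the theoretical values $y_i^* = a^* + b^* x_i$ have minimum distance from the actual $y_i$. The distance used is the Euclidean distance:

$$S(a; b) = \sum_{i=1}^{n} (y_i - y_i^*)^2 = \sum_{i=1}^{n} (y_i - a - b x_i)^2 \to \min$$

We look for the linear function minimizing the sum of the squared distances between each pair of actual values $(x_i, y_i)$ and the corresponding pair of theoretical values $(x_i, y_i^*)$ given by the specified function.

SEE FIGURE 4

Since $S(a; b)$ is non-negative, it is enough to solve the system obtained by equating to zero the two derivatives with respect to a and b:

$$\frac{\partial S(a; b)}{\partial a} = -2 \sum_{i=1}^{n} (y_i - a - b x_i) = 0$$

$$\frac{\partial S(a; b)}{\partial b} = -2 \sum_{i=1}^{n} (y_i - a - b x_i)\, x_i = 0$$

and we get

$$b^* = \frac{\sum_{i=1}^{n} x_i y_i - n \bar{x} \bar{y}}{\sum_{i=1}^{n} x_i^2 - n \bar{x}^2} = \frac{COV(X, Y)}{V(X)}, \qquad a^* = \bar{y} - b^* \bar{x}$$

NOTE: If we consider the reverse relationship $X = c + dY$, then

$$d^* = \frac{COV(X, Y)}{V(Y)}$$

hence $V(X)\, b^* = V(Y)\, d^*$, that is $b^* = d^* \, V(Y) / V(X)$. If $V(X) = 1$ and $V(Y) = 1$, then $b^* = d^*$.

GOODNESS OF FIT: R2

The total variance of Y can be decomposed as

$$\underbrace{\frac{1}{n} \sum_{i=1}^{n} (y_i - \bar{y})^2}_{I} = \underbrace{\frac{1}{n} \sum_{i=1}^{n} (y_i^* - \bar{y})^2}_{II} + \underbrace{\frac{1}{n} \sum_{i=1}^{n} (y_i - y_i^*)^2}_{III}$$

I = total variance
II = part of the total variance explained by X (that is, by the regression model)
III = residual variance ($RSS / n$, where RSS is the residual sum of squares)

$$R^2 = \frac{\frac{1}{n} \sum_{i=1}^{n} (y_i^* - \bar{y})^2}{V(Y)} = \frac{V(Y^*)}{V(Y)}$$

$$R^2 = 1 - \frac{\text{Residual Variation}}{\text{Total Variance}} = \frac{\text{Regression Variation}}{\text{Total Variance}}$$

If $R^2 = 1$, then $y_i - y_i^* = 0$ for all i.

In simple linear regression, the squared coefficient of linear correlation is equal to $R^2$.

TESTING

Because we do not know the exact values of the parameters and must estimate them, the estimators are random variables with probability distributions.

If assumption 5 is true, then

$$b^* \sim N\left(b, \; \frac{\sigma^2}{\sum (x_i - \bar{x})^2}\right) \qquad (1)$$

$$a^* \sim N\left(a, \; \sigma^2 \left(\frac{1}{n} + \frac{\bar{x}^2}{\sum (x_i - \bar{x})^2}\right)\right) \qquad (2)$$

but $\sigma^2$ is not known. Taking into account that $RSS / \sigma^2$ has a $\chi^2$ distribution with $(n - 2)$ degrees of freedom,

$$\sigma^{*2} = \frac{RSS}{n - 2}$$

is an unbiased estimator of $\sigma^2$. Substituting $\sigma^{*2}$ for $\sigma^2$ in (1) and (2) we get the estimated variances.

NOTE: the square roots of the estimated variances of $b^*$ and $a^*$ are called STANDARD ERRORS (SE).

Then $(b^* - b) / SE(b^*)$ and $(a^* - a) / SE(a^*)$ each have a t distribution with $(n - 2)$ degrees of freedom:

$$t = \frac{b^* - b}{SE(b^*)} = \frac{b^* - b}{\sigma^* / \sqrt{\sum (x_i - \bar{x})^2}} \sim t(n - 2)$$

A t distribution is given by the ratio of a standard normal random variable to the square root of a $\chi^2$ random variable divided by its degrees of freedom. Here:

$$\frac{b^* - b}{\sigma / \sqrt{\sum (x_i - \bar{x})^2}} \; : \; \sqrt{\frac{RSS / \sigma^2}{n - 2}}$$

For n > 30 the t distribution can be approximated by a standard normal.

CONFIDENCE INTERVAL for the parameter b

With confidence level p = 0.95:

$$b^* \pm t_{0.025} \, \frac{\sigma^*}{\sqrt{\sum (x_i - \bar{x})^2}}$$

SEE FIGURES 5 AND 6

HYPOTHESIS TESTING for the parameter b

$H_0$: $b = b_0$
$H_1$: $b \neq b_0$

Under $H_0$:

$$t = \frac{b^* - b_0}{SE(b^*)} \sim t(n - 2)$$

Here b is assumed to be 0 under $H_0$, that is $b_0 = 0$.

ANALYSIS OF RESIDUALS

Residuals: $\hat{u}_i = y_i - y_i^*$

SEE FIGURES 4 AND 7

Goals:
- Checking for non-linear relationships, which indicate an inappropriate functional form.
- Checking that the variability around the regression line is constant: this ensures that the regression line is an appropriate summary of the relationship.
- Finding outliers.

PROPERTIES

$$\sum_{i=1}^{n} \hat{u}_i = 0, \qquad \sum_{i=1}^{n} \hat{u}_i x_i = 0$$

from which it follows that

$$\sum_{i=1}^{n} \hat{u}_i y_i^* = 0 \qquad \text{and} \qquad \sum_{i=1}^{n} y_i^* = \sum_{i=1}^{n} y_i$$

EXAMPLE

X: Household income
Y: Expenses for leisure

| | X | Y | X*Y | X² / 10⁴ |
|---|---|---|---|---|
| 1 | 1330000 | 120000 | 159600000000 | 176890000 |
| 2 | 1225000 | 60000 | 73500000000 | 150062500 |
| 3 | 1225000 | 30000 | 36750000000 | 150062500 |
| 4 | 1400000 | 60000 | 84000000000 | 196000000 |
| 5 | 1575000 | 90000 | 141750000000 | 248062500 |
| 6 | 1400000 | 150000 | 210000000000 | 196000000 |
| 7 | 1750000 | 240000 | 420000000000 | 306250000 |
| 8 | 2240000 | 210000 | 470400000000 | 501760000 |
| 9 | 1225000 | 30000 | 36750000000 | 150062500 |
| 10 | 1330000 | 60000 | 79800000000 | 176890000 |
| 11 | 1470000 | 120000 | 176400000000 | 216090000 |
| 12 | 2730000 | 270000 | 737100000000 | 745290000 |
| Tot | 18900000 | 1440000 | 2626050000000 | 3213420000 |
| Aver | 1575000 | 120000 | | |

The X² column is reported divided by 10⁴, so the sum of the squares used below is 3213420000 × 10⁴ = 32134200000000.

$$b^* = \frac{2\,626\,050\,000\,000 - 12 \cdot 1\,575\,000 \cdot 120\,000}{32\,134\,200\,000\,000 - 12 \cdot 1\,575\,000^2} = 0.1512866$$

$$a^* = 120\,000 - 0.1512866 \cdot 1\,575\,000 = -118276.4$$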
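The household income example can be checked with a short script. The following is a minimal sketch using only the Python standard library; the variable names (`xs`, `ys`, `b_star`, `a_star` and so on) are chosen for illustration. It recomputes the least-squares estimates from the twelve observations, then applies the formulas for R2, the standard error of the slope, the t statistic for the hypothesis b = 0, and the residual properties.

```python
import math

# Data from the example: X = household income, Y = expenses for leisure.
xs = [1330000, 1225000, 1225000, 1400000, 1575000, 1400000,
      1750000, 2240000, 1225000, 1330000, 1470000, 2730000]
ys = [120000, 60000, 30000, 60000, 90000, 150000,
      240000, 210000, 30000, 60000, 120000, 270000]

n = len(xs)
x_bar = sum(xs) / n
y_bar = sum(ys) / n

# Sums of squares and cross-products in deviation form.
s_xy = sum(x * y for x, y in zip(xs, ys)) - n * x_bar * y_bar
s_xx = sum(x * x for x in xs) - n * x_bar ** 2
s_yy = sum(y * y for y in ys) - n * y_bar ** 2

# Least-squares estimates.
b_star = s_xy / s_xx
a_star = y_bar - b_star * x_bar

# Theoretical (fitted) values and residuals.
fitted = [a_star + b_star * x for x in xs]
residuals = [y - f for y, f in zip(ys, fitted)]
rss = sum(r * r for r in residuals)

# Goodness of fit: R2 = 1 - RSS / total sum of squares,
# which should equal the squared linear correlation coefficient.
r2 = 1 - rss / s_yy
r2_from_corr = s_xy ** 2 / (s_xx * s_yy)

# Estimated error variance, standard error of b*, t statistic for H0: b = 0.
sigma2_star = rss / (n - 2)
se_b = math.sqrt(sigma2_star / s_xx)
t_b = b_star / se_b

print("b* =", round(b_star, 7))
print("a* =", round(a_star, 1))
print("R2 =", r2)
print("SE(b*) =", se_b, " t =", t_b)
print("sum of residuals =", sum(residuals))
```

The printed `b*` and `a*` should match the values 0.1512866 and -118276.4 obtained by hand, and the sum of the residuals should be zero up to floating-point rounding.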