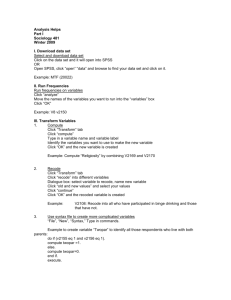

tonight`s regression assignment

advertisement

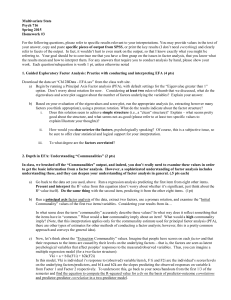

Regression Worksheet Carl Rogers was fond of discussing the discrepancy between the “actual self” (your current view of yourself) and the “ideal self”(your self as you would truly like to be). He held that the goal of therapy was to reduce the discrepancy between the two selves. A number of methods have been used over the years to measure such discrepancies within individuals’ self-concepts, and efforts have been made to correlate self-discrepancies with measures of self-esteem. Suppose a psychologist measures self-discrepancy on a scale that ranges from 0 (no discrepancy) to 24 and selfesteem on a scale that ranges from 0 (low self-esteem) to 50, and she obtains the following data: Discrepancy 10 17 9 13 14 18 14 10 24 8 18 20 5 9 Esteem 40 30 39 31 28 16 25 29 12 34 17 12 19 20 a. Compute descriptive statistics, Pearson’s r, and the covariance in SPSS. Is the correlation significant? b. What is the proportion of overlapping variance between discrepancies and self-esteem? c. Treat “Esteem” as the dependent variable (outcome variable) and “Discrepancy” as the independent variable (predictor variable) in a bivariate regression. Based on the results from ‘a’, compute the regression coefficient and y-intercept (show your simple computations here). Check your results in SPSS by running a bivariate regression analysis. d. What is multiple R2 and how does it compare to the proportion of overlapping variance you computed for ‘b’ above? Is the regression weight statistically significant? What is the observed p-value for the regression weight, and how does it compare to the p-value for the correlation above? e. Write a brief summary of the results. Is “Discrepancy” a good predictor of “Esteem?” f. Plug each discrepancy score above into the regression equation and compute each person’s predicted self-esteem score (if you want to save time use an SPSS compute statement to do this). Enter these scores into SPSS as a new variable and compute Pearson’s r between the predicted self-esteem scores and the observed self-esteem scores above. What is the value? Explain why you obtained this result. g. Now compute the following values for each person (again, this is easiest in SPSS using compute statements): 1. ( y y ) 2 2 . ( y y ) 2 3. ( y y) 2 Sum the results separately for each set of values and note how they compare to SS total, SSregression, and SSresidual on your regression output. Compute Multiple R2 from the SS values. 2. Download and open the Berkeley Guidance Study data from the website. Build and test the following model in SPSS: soma = wt9 + ht9 + lg9 + st9 The “soma” variable is a rating variable that ranges from 1 (thin) to 7 (obese) regarding body type at age 18. Wt9, ht9, lg9, and st9 are measures of weight, height, leg circumference, and strength at age nine. The equation posits the nine-year old variables as predictors of body type at 18. Is the overall model statistically significant? In other words, is R2 significant? What proportion of variance in soma is explained by the model; in other words, what is the value for R2? What are the beta weights for the four predictors? Which are statistically significant at the .05 level? Interpret the direction of any significant beta weights. For example, if the beta weight for wt9 were .54, this would indicate that higher weights at age 9 are predictive of higher soma ratings at age 18. 3. Run the same regression model above for males and females, separately. Record any important differences between the two models (e.g., differences in R2 values, beta weights, significance levels, etc.). 4. Using the Berkeley Guidance Study data, use the Forward, Backward, and Stepwise functions in SPSS to maximally predict “soma” from all of the 2-year and 9-year physical measures. Liz will show you how to run these automatic model building methods in SPSS. Did the three methods arrive at the same final regression model?