Hypothesis Testing

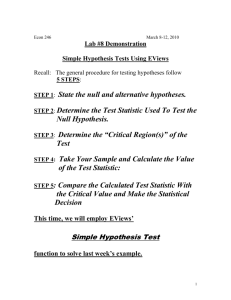

DISTRIBUTIONS AND HYPOTHESIS TESTING

Area Under the Normal Curve

[Figure: Normal curve with the horizontal axis marked at 30.4 ($-3\sigma$), 32.6 ($-2\sigma$), 34.8 ($-\sigma$), 37 ($\mu$), 39.2 ($+\sigma$), 41.4 ($+2\sigma$), and 43.6 ($+3\sigma$); the specification 35.0 ± 2.5 is indicated.]

The Normal curve is symmetric about the mean, so the area under the curve may be determined from a table that has entries for only half of the values. The Standard Normal Distribution has a mean of 0 and a standard deviation of 1, and the total area under the curve is exactly 1. To adapt the table to a generic situation, we apply a change of variable, defined as:

$$z = \frac{x - \mu}{\sigma}$$

where $x$ is the point in the interval, $\mu$ is the distribution mean, and $\sigma$ is the distribution standard deviation. After this change, $z$ measures how many standard deviation units the $x$-value lies from the mean. The table lists the area $\Phi(z)$ under the normal curve from $-\infty$ to $z$, so we look up the value of $\Phi$ corresponding to the computed $z$. [Note: Due to symmetry, $\Phi(-z) = 1 - \Phi(z)$.]

Example: A process output is Normally distributed, with a mean of 37.0 and a standard deviation of 2.2. The specification calls for a value of 35.0 ± 2.5. Estimate the proportion of the process output that will meet specifications.

Solution:

1. Convert the problem to a Standard Normal Distribution and find the area under the Standard Normal Curve from $-\infty$ to the upper specification limit.
2. Convert the problem to a Standard Normal Distribution and find the area under the Standard Normal Curve from $-\infty$ to the lower specification limit.
3. Subtract (2.) from (1.) to get the process yield.

Step 1.) $\mu = 37$, $\sigma = 2.2$, $x = 35.0 + 2.5 = 37.5$

$$z = \frac{37.5 - 37.0}{2.2} = 0.23, \qquad \Phi(0.23) = 0.5910$$

Step 2.) $\mu = 37$, $\sigma = 2.2$, $x = 35.0 - 2.5 = 32.5$

$$z = \frac{32.5 - 37.0}{2.2} = -2.05, \qquad \Phi(-2.05) = 1 - \Phi(2.05) = 1 - 0.9798 = 0.0202$$

Step 3.) $0.5910 - 0.0202 = 0.5708 \approx 57\%$

Hypothesis Testing Terms

Statistic is an estimate of a parameter, or a calculation about or from a distribution. A distribution is a mathematical function that captures the frequency (or probability) that a given situation will occur.
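To make the idea of a distribution as a function that returns probabilities concrete, the sketch below recomputes the process-yield example from the Normal-curve section. It is only an illustrative check, assuming Python's standard-library `statistics.NormalDist` in place of a printed table; the variable names are hypothetical and not part of the original handout.

```python
from statistics import NormalDist

# Process parameters taken from the worked example.
process = NormalDist(mu=37.0, sigma=2.2)
target, tolerance = 35.0, 2.5
lower_spec = target - tolerance  # 32.5
upper_spec = target + tolerance  # 37.5

# z measures how many standard deviations each limit lies from the mean.
z_upper = (upper_spec - process.mean) / process.stdev
z_lower = (lower_spec - process.mean) / process.stdev

# Area from -infinity up to each limit, then the area between the limits.
area_upper = process.cdf(upper_spec)
area_lower = process.cdf(lower_spec)
process_yield = area_upper - area_lower

print(f"z_upper = {z_upper:.2f}, z_lower = {z_lower:.2f}")
print(f"estimated yield = {process_yield:.4f}")
```

Because the table lookup rounds each z to two decimals, the computed yield can differ from the hand calculation in the third decimal place; both come out at roughly 57%.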
Population is a term that refers to the complete set of observations. If we have the complete set (or even a very large set of observations), then our estimates (statistics) should be very accurate. We have special identifiers for these estimates: $\mu$ is the symbol for the population mean; $\sigma^2$ is the symbol for the population variance ($\sigma$ is the population standard deviation); and $N$ represents the population size, or the number of elements in the population.

Sample is a term that refers to a subset of the population, selected at random (meaning every element has an equal chance of being selected) and selected independently (meaning none of our prior selections biased our current selection). We also have special identifiers for the statistics estimated from samples: $\bar{x}$ is the symbol for the sample mean; $S^2$ is the symbol for the sample variance ($S$ is the sample standard deviation); and $n$ is the sample size.

Hypothesis is a guess about a situation that can be tested and can be either true or false. The Null Hypothesis has the symbol $H_0$ and is always the default situation, which is retained unless it is disproven beyond a reasonable doubt. The Alternative Hypothesis is denoted by the symbol $H_A$ ($H_1$ in some texts) and can be thought of as the opposite of the Null Hypothesis: it can also be either true or false, but it is always false when $H_0$ is true, and vice versa.

There are two types of comparisons, and three forms for the alternative hypothesis, illustrated in Figure 1 (below). The first type of $H_A$ says that there is a difference, but the direction of the difference is not known (or needed). In this case, it is a two-sided comparison, and the Null Hypothesis is rejected if the test statistic falls in either of the two tail regions, each of which holds half of the rejection criteria area (see Fig. 1(b)). Otherwise, the Null Hypothesis is kept. The second type of $H_A$ says that there is a difference, and the direction of the difference is anticipated (or required).
In this case, there is a one-sided comparison, and the Null Hypothesis is rejected if the test statistic falls in the single tail region that holds the entire rejection criteria area. If a sample mean must be greater than the other mean (Form 1), the right tail region is used (Fig. 1(c)), and the value of the test statistic must exceed the criterion value. If the situation is reversed, and the sample mean must be less than the other mean (Form 2), then the left tail region is used (Fig. 1(a)), and the test statistic value must be less than the criterion value. Only one of these last two forms is hypothesized and tested; otherwise the two-sided comparison must be used.

[Figure 1. Hypothesis Test Rejection Criteria. (a) One-sided: test statistic < rejection criterion. (b) Two-sided: test statistic < -½ rejection criterion or test statistic > +½ rejection criterion. (c) One-sided: test statistic > rejection criterion.]

Type I Errors occur when a test statistic leads us to reject the Null Hypothesis when the Null Hypothesis is true in reality. The chance of making a Type I Error is given by the parameter $\alpha$ (the level of significance), which quantifies the reasonable doubt. Type II Errors occur when a test statistic leads us to fail to reject the Null Hypothesis when the Null Hypothesis is actually false. The probability of making a Type II Error is given by the parameter $\beta$. Figure 2 depicts the errors associated with hypothesis testing. Depending on the situation, we can knowingly adjust our tests so that we control the risks of these types of errors to a reasonable extent.

$H_0$ is the Null Hypothesis (always the default); $H_A$ is the Alternative Hypothesis.

$H_0$: There is NO significant difference at the $\alpha$ level.
$H_A$: There IS a significant difference at the $\alpha$ level.

Common $\alpha$ levels: .10, .05, .01

P-value: The smallest level of significance that would lead one to reject $H_0$.
It is possible to report the P-value for the test and let the user decide whether the significance is acceptable (rather than use a set $\alpha$ value).

| Test result \ Reality | $H_0$ is True | $H_0$ is False |
|---|---|---|
| $H_0$ is True (keep $H_0$) | OK | TYPE II ERROR, $\beta$-risk (keep $H_0$ when $H_0$ is False), Consumer's Risk |
| $H_0$ is False (reject $H_0$) | TYPE I ERROR, $\alpha$-risk (reject $H_0$ when $H_0$ is True), Producer's Risk | OK |

Figure 2. Hypothesis Testing & Error Types

Sampling Distributions

It can be difficult to associate an exact distribution with every possible type of event that we might be interested in predicting. Fortunately, most of the comparison hypotheses that we are interested in making can be fit to about four well-known functions. These functions are referred to as sampling distributions: (1) the Normal Distribution; (2) the t-Distribution; (3) the Chi-Squared ($\chi^2$) Distribution; and (4) the F-Distribution. One of the factors that controls how well these sampling distributions approximate the actual distribution is the sample size, or a statistic calculated from the sample size called the degrees of freedom (symbol $\nu$).

Comparison of Means

One of the first important types of comparison is the comparison of the location of two distributions. To do this, we take the difference in the mean values of the two distributions and check whether the magnitude of that difference is sufficiently large relative to the amount of variation in the distributions. This is illustrated in Figure 3, below.

[Figure 3. Comparison of Means, Illustrated. Panels labeled: Definitely Different, Probably Different, Probably NOT Different, Definitely NOT Different.]

Remembering that there will be a little variation in any sample taken from a distribution, the idea when comparing means is to express the distance between the two means in terms of the spread, and then see how likely we would be to observe a difference of that magnitude if there really were no difference in the two distributions (the Null Hypothesis).
If the probability of that magnitude is sufficiently small, then we would reject the Null Hypothesis and accept the Alternative Hypothesis instead. Assuming that the mean values from both distributions are normally distributed, we could always use a form of the t-test to perform the comparison. However, if we know a little more about the distributions, we can be far more efficient with the data and more effective in detecting a true difference. Figure 5 shows a decision chart for two-way comparisons. The top portion of the chart shows the different ways that we could perform the test, based on our knowledge of or prior experience with the processes.

Comparison of Variances

A second type of important comparison arises when a difference in the spread of two distributions is suspected. To make this comparison, we compute the ratio of the two variances and then compare the ratio to one of two known distributions (Chi-Squared or F) to check whether a ratio of that magnitude is sufficiently unlikely for the distribution. This is illustrated in Figure 4, below.

[Figure 4. Comparison of Variances, Illustrated. Panels labeled: Definitely Different, Probably Different, Probably NOT Different, Definitely NOT Different.]

Again, as with our comparison of means, more experience with at least one of the processes lets us be more efficient in testing whether there really is no difference in the two distributions (the Null Hypothesis). If the probability of that magnitude is sufficiently small, then we would reject the Null Hypothesis and accept the Alternative Hypothesis instead. The bottom half of Figure 5 shows the decision criteria for two-way variance comparisons. For these last two tests, however, the assumption that the data come from normally distributed populations is very important. In fact, we should assess how normally our data are distributed prior to conducting either test.
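As a concrete illustration of the comparison-of-means idea (express the distance between two means in units of spread, then ask how likely such a distance is under the Null Hypothesis), here is a minimal sketch of a two-sided z-test for the case where both population standard deviations are known. The function name `two_sample_z_test` and all input values are hypothetical, chosen only for illustration; the standard-library `statistics.NormalDist` stands in for a Standard Normal table.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z_test(mean1, mean2, sigma1, sigma2, n1, n2, alpha=0.05):
    """Two-sided z-test of H0: mu1 == mu2, with known population sigmas."""
    # Spread of the difference between the two sample means.
    std_error = sqrt(sigma1**2 / n1 + sigma2**2 / n2)
    # Distance between the means, expressed in units of that spread.
    z0 = (mean1 - mean2) / std_error
    std_normal = NormalDist()
    # Probability of a difference at least this large if H0 were true.
    p_value = 2.0 * (1.0 - std_normal.cdf(abs(z0)))
    # Two-sided criterion: half of alpha in each tail.
    z_crit = std_normal.inv_cdf(1.0 - alpha / 2.0)
    return z0, p_value, abs(z0) > z_crit


# Hypothetical sample summaries, not taken from the handout.
z0, p_value, reject_h0 = two_sample_z_test(37.0, 35.5, 2.2, 2.2, n1=25, n2=25)
print(f"z0 = {z0:.3f}, P-value = {p_value:.4f}, reject H0: {reject_h0}")
```

Reporting the P-value alongside the reject/keep decision lets a reader apply a different significance level without rerunning the test.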
Testing for a Normal Distribution

The idea behind the Normal Probability Plot is that if we construct a scatter plot of the sorted data values against the probability of encountering those data values, the plot will look roughly linear if the true distribution is Normal. Further, if two distributions are plotted on the same graph, then since the slope of each data set on a Normal Probability Plot is determined by the spread of the data, parallel plots should have similar variances. Normal probability plots may be done on special paper, may be done manually, or can easily be done with Microsoft's Excel spreadsheet (an example of such a spreadsheet is available on disk). The construction and evaluation steps are outlined below:

1. Record the raw data and count the observations (obtaining $n$).
2. Set up a column of index values $j$ (from 1 to $n$).
3. Compute $\Phi(z_j)$ for each $j$ value: $\Phi(z_j) = (j - 0.5)/n$.
4. Obtain a $z_j$ value for each $\Phi(z_j)$ from the Standard Normal Table (find the entry $\Phi(z_j)$ in the table, then read the index value $z_j$), or use the Excel Norminv() function.
5. Set up a column of observed data, sorted into increasing values.
6. Construct a scatter plot of $z_j$ values versus sorted data values.
7. Approximate the slope by sketching a line through the 25% and 75% data points.

Evaluating a Normal Probability Plot

1. Assess the Equal-Variance and Normality assumptions:
   - Data from a Normal sample should tend to fall along the line, so if a "fat pencil" covers almost all of the points, then a normality assumption is supported.
   - The slope of the line reflects the variance of the sample, so equal slopes support the equal-variance assumption.
2. Theoretically, a sketched line should intercept the $z_j = 0$ axis at the mean value, so if the two plots intersect at that point, they should have the same mean.
3. Practically:
   - Close is good enough for comparing means.
   - Closer is better for comparing variances.
4. If the slopes differ much for two samples, use a test that assumes the variances are not the same.

Summary of Hypothesis Testing Steps

1. State the null hypothesis ($H_0$) that $\theta = \theta_0$.
2. Choose an alternative hypothesis $H_A$ from one of the alternatives: $\theta < \theta_0$, $\theta > \theta_0$, or $\theta \ne \theta_0$.
3. Choose a significance level for the test ($\alpha$).
4. Select the appropriate test statistic and establish a critical region. (If the decision is to be based on a P-value, it is not necessary to state the critical region.)
5. Compute the value of the test statistic from the sample data.
6. Decision: Reject $H_0$ if the test statistic has a value in the critical region (or if the computed P-value is less than or equal to the desired significance level $\alpha$); otherwise, do not reject $H_0$.

Figure 5. Decision Chart for Hypothesis Testing.

Kind of comparison?

- Means (z or t): What is known about the variation?
  - Population variance ($\sigma^2$) is known (use z-test): Is the population mean known?
    - Yes (use $\mu_0$): $z_0 = \dfrac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}$
    - No (use $\bar{x}_2$): $z_0 = \dfrac{\bar{x}_1 - \bar{x}_2 - 0}{\sqrt{\sigma_1^2 / n_1 + \sigma_2^2 / n_2}}$
  - Population variance ($\sigma^2$) is unknown (use $S^2$ and t-test): Is the population mean known?
    - Yes (use $\mu_0$): $t_0 = \dfrac{\bar{x}_1 - \mu_0}{S / \sqrt{n}}$
    - No: Are the variances similar?
      - Yes (use pooled $S^2$): $t_0 = \dfrac{\bar{x}_1 - \bar{x}_2 - 0}{S_p \sqrt{1/n_1 + 1/n_2}}$
      - No (use non-pooled $S^2$): $t_0 = \dfrac{\bar{x}_1 - \bar{x}_2 - 0}{\sqrt{S_1^2 / n_1 + S_2^2 / n_2}}$
  - Paired comparison (use difference in means and t-test): $t_0 = \dfrac{\bar{d} - 0}{S_d / \sqrt{n}}$
- Variances ($\chi^2$ or F): Is the population variance known?
  - Yes (use $\sigma_0^2$ and $\chi^2$-test): $\chi_0^2 = \dfrac{(n - 1) S^2}{\sigma_0^2}$
  - No (use $S^2$ and F-test): $F_0 = \dfrac{S_1^2}{S_2^2}$

COMPARISON OF MEANS
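| Condition | Dist. | Estimator 1 | Estimator 2 | Test Statistic | Confidence Interval |
|---|---|---|---|---|---|
| Variance Known ($\sigma^2$) | Z | Mean Known ($\mu_0$) | ------------ | $Z_0 = \dfrac{\bar{y} - \mu_0}{\sigma / \sqrt{n}}$ | $\bar{y} \pm Z_{\alpha/2} \dfrac{\sigma}{\sqrt{n}}$ |
| Variance Known ($\sigma^2$) | Z | Mean Unknown ($\bar{y}$) | ------------ | $Z_0 = \dfrac{\bar{y}_1 - \bar{y}_2}{\sqrt{\sigma_1^2 / n_1 + \sigma_2^2 / n_2}}$ | $\bar{y}_1 - \bar{y}_2 \pm Z_{\alpha/2} \sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}$ |
| Variance Unknown ($S^2$) | t | Mean Known ($\mu_0$) | ------------ | $t_0 = \dfrac{\bar{y} - \mu_0}{S / \sqrt{n}}$, with $\nu = n - 1$ | $\bar{y} \pm t_{\alpha/2,\,n-1} \dfrac{S}{\sqrt{n}}$ |
| Variance Unknown ($S^2$) | t | Mean Unknown ($\bar{y}$) | Similar Variances ($\sigma_1^2 = \sigma_2^2$) | $t_0 = \dfrac{\bar{y}_1 - \bar{y}_2}{S_p \sqrt{1/n_1 + 1/n_2}}$ | $\bar{y}_1 - \bar{y}_2 \pm t_{\alpha/2,\,\nu}\, S_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}$ |
| Variance Unknown ($S^2$) | t | Mean Unknown ($\bar{y}$) | Dissimilar Variances ($\sigma_1^2 \ne \sigma_2^2$) | $t_0 = \dfrac{\bar{y}_1 - \bar{y}_2}{\sqrt{S_1^2 / n_1 + S_2^2 / n_2}}$ | $\bar{y}_1 - \bar{y}_2 \pm t_{\alpha/2,\,\nu} \sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}$ |
| Paired Comparison | t | Difference In Means ($d_i = y_{1i} - y_{2i}$) | Sample Mean Of Differences ($\bar{d}$) | $t_0 = \dfrac{\bar{d}}{S_d / \sqrt{n}}$, with $\nu = n - 1$ | $\bar{d} \pm t_{\alpha/2,\,n-1} \dfrac{S_d}{\sqrt{n}}$ |

Where, for similar variances:

$$S_p^2 = \frac{(n_1 - 1) S_1^2 + (n_2 - 1) S_2^2}{n_1 + n_2 - 2} \quad \text{and} \quad \nu = n_1 + n_2 - 2$$

for dissimilar variances:

$$\nu = \frac{\left( \dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2} \right)^2}{\dfrac{(S_1^2 / n_1)^2}{n_1 - 1} + \dfrac{(S_2^2 / n_2)^2}{n_2 - 1}}$$

and for the paired comparison:

$$\bar{d} = \frac{1}{n} \sum_{i=1}^{n} d_i, \qquad S_d = \sqrt{\frac{\sum_{i=1}^{n} (d_i - \bar{d})^2}{n - 1}}$$

| Dist. | $H_A$ | Rejection Criteria |
|---|---|---|
| Z | $\mu \ne \mu_0$ (or $\mu_1 \ne \mu_2$) | $\lvert Z_0 \rvert > Z_{\alpha/2}$ |
| Z | $\mu > \mu_0$ (or $\mu_1 > \mu_2$) | $Z_0 > Z_{\alpha}$ |
| Z | $\mu < \mu_0$ (or $\mu_1 < \mu_2$) | $Z_0 < -Z_{\alpha}$ |
| t | $\mu \ne \mu_0$ (or $\mu_1 \ne \mu_2$, or $\mu_d \ne 0$) | $\lvert t_0 \rvert > t_{\alpha/2,\,\nu}$ |
| t | $\mu > \mu_0$ (or $\mu_1 > \mu_2$, or $\mu_d > 0$) | $t_0 > t_{\alpha,\,\nu}$ |
| t | $\mu < \mu_0$ (or $\mu_1 < \mu_2$, or $\mu_d < 0$) | $t_0 < -t_{\alpha,\,\nu}$ |

COMPARISON OF VARIANCES

| Condition | Dist. | Test Statistic | $H_A$ | Rejection Criteria | Confidence Interval |
|---|---|---|---|---|---|
| Variance Known ($\sigma_0^2$) | $\chi^2$ | $\chi_0^2 = \dfrac{(n - 1) S^2}{\sigma_0^2}$ | $\sigma^2 \ne \sigma_0^2$ | $\chi_0^2 > \chi^2_{\alpha/2,\,n-1}$ or $\chi_0^2 < \chi^2_{1-\alpha/2,\,n-1}$ | $\dfrac{(n - 1) S^2}{\chi^2_{\alpha/2,\,n-1}} \le \sigma^2 \le \dfrac{(n - 1) S^2}{\chi^2_{1-\alpha/2,\,n-1}}$ |
| | | | $\sigma^2 > \sigma_0^2$ | $\chi_0^2 > \chi^2_{\alpha,\,n-1}$ | |
| | | | $\sigma^2 < \sigma_0^2$ | $\chi_0^2 < \chi^2_{1-\alpha,\,n-1}$ | |
| Variance Unknown | F | $F_0 = \dfrac{S_1^2}{S_2^2}$ | $\sigma_1^2 \ne \sigma_2^2$ | $F_0 > F_{\alpha/2,\,n_1-1,\,n_2-1}$ or $F_0 < F_{1-\alpha/2,\,n_1-1,\,n_2-1}$ | $\dfrac{S_1^2}{S_2^2} F_{1-\alpha/2,\,n_2-1,\,n_1-1} \le \dfrac{\sigma_1^2}{\sigma_2^2} \le \dfrac{S_1^2}{S_2^2} F_{\alpha/2,\,n_2-1,\,n_1-1}$ |
| | | | $\sigma_1^2 > \sigma_2^2$ | $F_0 > F_{\alpha,\,n_1-1,\,n_2-1}$ | |
| | | | $\sigma_1^2 < \sigma_2^2$ | $F_0 < F_{1-\alpha,\,n_1-1,\,n_2-1}$ | |

NOTE: $F_{1-\alpha,\,\nu_1,\,\nu_2} = \dfrac{1}{F_{\alpha,\,\nu_2,\,\nu_1}}$

NORMALITY ASSUMPTION IS CRITICAL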
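The pooled and non-pooled t statistics from the comparison-of-means table can be sketched as follows. This is only an illustration under stated assumptions: the function names `pooled_t` and `welch_t` and the sample values are hypothetical, and the degrees of freedom for the dissimilar-variance case use the common Welch-Satterthwaite form with $n - 1$ in the denominators, which may not match the handout's exact expression. Critical values would still come from a t table, since the Python standard library has no t-distribution.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample1, sample2):
    """t statistic and degrees of freedom assuming similar variances."""
    n1, n2 = len(sample1), len(sample2)
    s1_sq, s2_sq = variance(sample1), variance(sample2)  # sample variances (n - 1)
    dof = n1 + n2 - 2
    # Pooled standard deviation weights each variance by its degrees of freedom.
    sp = sqrt(((n1 - 1) * s1_sq + (n2 - 1) * s2_sq) / dof)
    t0 = (mean(sample1) - mean(sample2)) / (sp * sqrt(1 / n1 + 1 / n2))
    return t0, dof


def welch_t(sample1, sample2):
    """t statistic and approximate degrees of freedom for dissimilar variances."""
    n1, n2 = len(sample1), len(sample2)
    a = variance(sample1) / n1
    b = variance(sample2) / n2
    t0 = (mean(sample1) - mean(sample2)) / sqrt(a + b)
    # Welch-Satterthwaite approximation (assumed form, see lead-in).
    dof = (a + b) ** 2 / (a**2 / (n1 - 1) + b**2 / (n2 - 1))
    return t0, dof


# Hypothetical measurements, not taken from the handout.
sample_a = [36.1, 37.4, 38.0, 36.8, 37.2]
sample_b = [35.0, 35.9, 34.7, 36.2, 35.4]

t_pooled, dof_pooled = pooled_t(sample_a, sample_b)
t_welch, dof_welch = welch_t(sample_a, sample_b)
print(f"pooled: t0 = {t_pooled:.3f}, dof = {dof_pooled}")
print(f"welch:  t0 = {t_welch:.3f}, dof = {dof_welch:.2f}")
```

With equal sample sizes the two statistics coincide and only the degrees of freedom differ, which is one way to see why the choice between the two tests matters most when the sample sizes or variances are unbalanced.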