9.3 Criteria for model selection

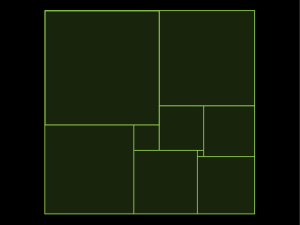

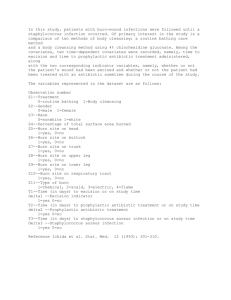

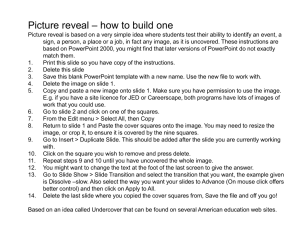

advertisement

1 Chapter 9 Building the Regression Model I: Model Selection and Validation 9.3 Criteria for Model Selection: Two opposed criteria of selecting a model: Including as many covariates as possible so that the fitted values are reliable. Including as few covariates so that the cost of obtaining information and monitoring is not a lot. Note: There is not unique statistical procedure for selecting the best regression model. Note: Common sense, basic knowledge of the data being analyzed, and considerations related to invariance principle (shift and scale invariance) can not ever be set side. Motivating example: The “Hald" regression data X1 Y X2 X3 X4 78.5 7 26 6 60 74.3 1 29 15 52 104.3 11 56 8 20 87.6 11 31 8 47 95.9 7 52 6 33 109.2 11 55 9 22 102.7 3 71 17 6 72.5 1 31 22 44 93.1 2 54 18 22 115.9 21 47 4 26 83.8 1 40 23 34 113.3 11 66 9 12 109.4 10 68 8 12 Total 13 observations. 2 Several methods which can be used are: (a) using the value of R 2 . (b) using the value of s 2 , the mean residual sum of squares. (c) using Mallows’ C p statistic. (d) using AIC p and SBC p criteria. (e) using PRESS p criterion. (a) R2 : Example (continue): In “Hald” data, there are 4 covariates, X 1 , X 2 , X 3 , and X 4 . All possible models are divided into 5 sets: 4 Set A: Y 0 1 possible model. 0 4 Set B: Y 0 i X i , i 1,2,3,4 4 possible models. 1 4 Set C: Y 0 i X i j X j , i j , i, j 1,2,3,4 6 possible models. 2 Set D: Y 0 i X i j X j k X k , i j k , i, j , k 1,2,3,4 4 4 possible models 3 4 Set E: Y 0 1 X 1 2 X 2 3 X 3 4 X 4 , 1 possible model. 4 Total 2 4 16 models. For every set, one or two models with large R 2 are picked. They are the following: Sets Set B Set C Set D Set E Models Y 0 2 X 2 R2 0.666 Y 0 4 X 4 0.675 Y 0 1 X 1 2 X 2 0.979 Y 0 1 X 1 4 X 4 0.972 Y 0 1 X 1 2 X 2 4 X 4 0.982 Y 0 1 X 1 2 X 2 3 X 3 0.982 Y 0 1 X1 2 X 2 3 X 3 4 X 4 0.982 3 Principle based on R 2 : A model with large R 2 and small number of covariates should be a good choice since large R 2 implies the reliability of fitted values and a small number of covariates reduce the costs of obtaining information and monitoring. Example (continue): Based on the above principle, two models, Y 0 1 X 1 2 X 2 and Y 0 1 X 1 4 X 4 are sensible choices!! Note: X 2 and X 4 are highly correlated. The correlation coefficient is -0.973. Therefore, it is not surprising that the two models have very close R 2 . (b) Mean residual sum of squares s2 : s2 A useful result: As more and more covariates are added to an already overfitted model, the mean residual sum of squares will tend to stabilize and approach the true value of 2 provided that all important variables have been included. That is, 5 Number of Covariates (p) 10 4 Example (continue):) Again, we compute all s 2 for 16 possible models. We have the following table: Sets Average s2 s2 Set B 115.06( X 1 ), 82.39( X 2 ), 176.31( X 3 ), 80.35( X 4 ) 113.53 Set C 5.79( X 1 , X 2 ), 122.71( X 1 , X 3 ), 7.48( X 1 , X 4 ), 41.54( X 2 , X 3 ) 86.89( X 2 , X 4 ), 17.59( X 3 , X 4 ) 47.00 Set D 5.35( X 1 , X 2 X 3 ), 5.33( X 1 , X 2 , X 3 ),5.65( X 1 , X 3 , X 4 ) 8.20( X 2 , X 3 , X 4 ) 6.13 Set E 5.98( X 1 , X 2 X 3 , X 4 ) 5.98 Average s2 The plot of average s 2 against p (the number of covariates, including 0 ) is 6 2 3 4 5 Number of Covariates (p) Principle based on s 2 : A model with mean sum of squares s 2 close to the estimate of 2 (the horizontal line) and with the fewest covariates might be a sensible model. 5 Example: The estimate of 2 could be 6. The model Y 0 1 X 1 2 X 2 is sensible since its mean residual sum of squares s 2 is 5.79 (close to 6) and the number of covariates are small compared with the other models with s 2 close to 6. (c) Mallows Cp : Suppse the full model (containing all possible covariates) is model r : Y 0 1 X 1 r 1 X r 1 . Then, Mallows C p RSS (model p) (n 2 p) , s2 where n is the sample size, p is the number of parameters, RSS (model p) is the residual sum of squares from a model containing p parameters, and s 2 is the mean residual sum of squares from the model containing all possible covariates. Intuition of Mallows C p : Suppose s 2 is the mean residual sum of squares from the full model (containing all possible covariates) Y 0 1 X 1 r 1 X r 1 , and Y 0 1 X 1 p 1 X p 1 is the true model, p r . Then RSS (model p) , the mean residual sum of squares from model p, should be n p a sensible estimate 2 accurately. That is, RSS (model p) 2 . Thus, n p 6 RSS (model p) (n p) 2 . Also, the mean residual sum of squares s 2 for the overfitted model s 2 2 . RSS (model p) (n p) 2 Cp (n 2 p) (n 2 p) s2 2 (n p) (n 2 p) p Thus, ( p, C p ) will falls close to the line of Y X . (r,Cr) Cp r p (p,Cp) p r p Note: Cr r. Principle based Mallows C p : The principle of selecting a best regression equation is to plot C p versus p for every possible models. Then, choose some models with fewer covariates close to the line Y X . 7 Example (continue): For the motivating example, we calculate C p for all 16 possible models. We then have the following table: Cp Set A Set B Set C Set D Set E 443.2 202.5 ( X 1 ) ,142.5 ( X 2 ) ,315.2 ( X 3 ) ,138.7 ( X 4 ) 2.7 ( X 1 , X 2 ) ,198.1 ( X 1 , X 3 ) ,5.5 ( X 1 , X 4 ), 62.4 ( X 2 , X 3 ), 138.2 ( X 2 , X 4 ), 22.4 ( X 3 , X 4 ) 3 ( X 1 , X 2 , X 3 ), 3 ( X 1 , X 2 , X 4 ), 3.5 ( X 1 , X 3 , X 4 ), 7.3 ( X 2 , X 3 , X 4 ) 5 ( X1 , X 2 , X 3 , X 4 ) 5 4 Cp (X1,X3,X4) (X1,X2,X3),(X1,X2,X4) 3 (X1,X2) 3 4 5 p The point ( p, C p ) value for the model Y 0 1 X 1 2 X 2 is close to the line Y X . and the model also has fewer parameters. Therefore, we recommend this model as a sensible choice. (d) AIC p and SBC p criteria: 8 AIC p n ln RSS (model p) n ln n 2 p SBC p n ln RSS (model p) n ln n ln n p Principle based on AIC p and SBC p criteria: Search for models with small values of AIC p and SBC p . Example (continue): For the motivating example, we calculate AIC p and SBC p for all 16 possible models. We then have the following tables: AIC p Set B Set C Set D Set E 63.51 ( X 1 ) ,59.17 ( X 2 ) ,69.09 ( X 3 ) ,58.85 ( X 4 ) 25.41 ( X 1 , X 2 ) ,65.11 ( X 1 , X 3 ) ,28.74 ( X 1 , X 4 ), 51.03 ( X 2 , X 3 ), 60.62 ( X 2 , X 4 ), 39.86 ( X 3 , X 4 ) 25.02 ( X 1 , X 2 , X 3 ), 24.97 ( X 1 , X 2 , X 4 ), 25.73 ( X 1 , X 3 , X 4 ), 30.57 ( X2 , X3, X4 ) 26.93 ( X 1 , X 2 , X 3 , X 4 ) BIC p Set B Set C Set D Set E 64.641 ( X 1 ) ,60.30 ( X 2 ) ,70.22 ( X 3 ) ,59.98 ( X 4 ) 27.11 ( X 1 , X 2 ) ,66.81 ( X 1 , X 3 ) ,30.44 ( X 1 , X 4 ), 52.73 ( X 2 , X 3 ), 62.32 ( X 2 , X 4 ), 41.56 ( X 3 , X 4 ) 27.28 ( X 1 , X 2 , X 3 ), 27.23 ( X 1 , X 2 , X 4 ), 27.99 ( X 1 , X 3 , X 4 ), 32.83 ( X2 , X3, X4 ) 29.75 ( X 1 , X 2 , X 3 , X 4 ) According to the two selection criteria, several models, Y 0 1 X 1 2 X 2 , Y 0 1 X 1 2 X 2 3 X 3 , Y 0 1 X 1 2 X 2 4 X 4 Y 0 1 X 1 3 X 3 4 X 4 9 are sensible models. (d) PRESS p (prediction sum of squares) criteria: PRESS p yi yˆi (i ) model p n 2 i 1 where , yˆ i (i ) model p is the predicted value of y i using the current model (model p) and the observation without y i to fit the model. For example, in “Hald” data, as the current model is Y 0 1 X 1 2 X 2 , yˆ 2 ( 2 ) model 3 is the predicted value of y 2 using the data y1 , y3 , y 4 ,, y13 Principle based on to fit the model. PRESS p criteria: Search for models with small values of PRESS p .