Why Experiment? - the NCRM EPrints Repository

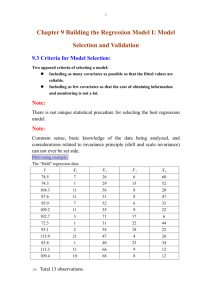

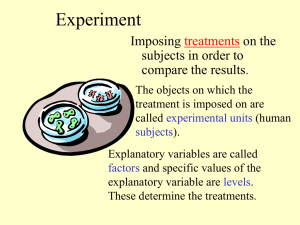

Field Experiments: A Brief Overview of Core Topics
Don Green
Columbia University

Why Experiment?
• Causal explanation vs. description: parameters and parameter estimation
• The role of scientific procedures/design in making the case for unbiased inference
• The value of transparent & intuitive science that involves ex ante design/planning and, in principle, limits the analyst’s discretion
• Experiments provide benchmarks for evaluating other forms of research

Experiments at the border between theory and practice
• Experiments allow us to study the effects of phenomena that seldom occur naturally (e.g., out-of-equilibrium phenomena)
• Systematic experimental inquiry can lead to the discovery and development of new interventions
• Momentum of experimental programs: each finding leads to new questions

Experimentation is not an assumption-free research procedure
• Unbiased estimation of the sample average treatment effect hinges on assumptions of
  • Random assignment: independence of treatments and potential outcomes
  • Excludability: the only systematic way in which treatment assignments affect outcomes is via the treatment itself
  • Non-interference: potential outcomes are affected solely by the treatment that is administered to the subject, not by treatments administered to other subjects

Core assumptions must be kept in mind at all times when designing, analyzing, and interpreting experiments
• To the extent possible, try to address core assumptions through experimental design rather than argumentation
• What follows are suggestions for making experiments more convincing
• Many of these suggestions grow out of lessons learned from my own mishaps and mistakes

Implementing random assignment
• CONSORT suggests that random allocation be performed by an outside team/person
• Should be carefully documented (for later randomization inference (RI) purposes)
• If you are working with a naturally occurring random assignment, make sure you understand it in detail and verify its statistical properties
• Use a random number seed
• Good to make the process transparent (sometimes they are public lotteries), but beware of excludability violations

Working out the random assignment with research partners
• Your research partners may not fully understand what you mean when you say that treatments will be allocated randomly and that they won’t get to adjust or approve the random assignment
• Be proactive with more palatable designs
  • Stepped wedge
  • Blocking to ensure that “preferred” or “inexpensive” subjects have a high probability of being included

90% of life is just showing up
• Your experiment may get out of hand if you don’t supervise it, especially when interventions are launched and outcomes are measured
• Don’t expect your research partners to understand your rationale for doing things
• Beware of their good intentions (e.g., their desire to treat everyone)

Noncompliance is often a problem of miscommunication or misrepresentation
• Your partners may exaggerate how many subjects they are actually able to treat
• With supervision, you can use a randomly sorted treatment list and an exogenous stopping rule
• Measure compliance with the treatment as accurately as you can
• Irony: when research partners exaggerate their contact rate, they produce an underestimate of the complier average causal effect (CACE)

Trade-offs of orchestrating the treatments yourself
• On the one hand, implementing your own interventions is a pain in the neck and often expensive
• All the more reason to do a pilot test to see what you’re getting yourself into
• On the other hand, you get to do more interesting (and often more publishable) work that is less prone to collapse
• “Program evaluation” work is good for practice/apprenticeship but may involve uninteresting treatments or outcomes

Do not confuse random sampling with random assignment
• Random sampling refers to the procedure by which subjects are selected from some broader population. Random sampling facilitates generalization but not necessarily unbiased causal inference.
• Random assignment refers to the procedure by which subjects are allocated to treatment conditions. Its role is to make assignments independent of potential outcomes.

Maintain the integrity of random assignment
When randomly allocating subjects to experimental groups, follow and document a set procedure. Do not redo the random assignment because you are disappointed that certain observations ended up in the “wrong” experimental group. Design your experiment in ways that minimize attrition or cause attrition to be (arguably) unaffected by assignment.

Maintain symmetry between treatment and control groups
Apart from receiving different treatments, subjects in different experimental conditions should be handled in an identical fashion. The same standards should be used in measuring outcomes across the groups, and where possible, those tasked with measuring outcomes should be blind to experimental condition.

Make sure your analysis is appropriate to your random assignment procedure
• When the probability of being assigned to the treatment group varies across subjects, you must either analyze the experimental results separately for each stratum or apply inverse probability weights before pooling the data
• If you use restricted randomization, your estimation (weighting) and inference procedures (simulations) should take these restrictions into account

When estimating average treatment effects using regression (i.e., regressing outcomes on the treatment as well as a set of covariates), do not attach a causal interpretation to the covariates' coefficients
• Covariates help reduce disturbance variability and thus improve the precision with which treatment effects are estimated.
• Regression coefficients associated with the covariates are not unbiased estimates of average treatment effects because the covariates are not randomly assigned
• Present results both with and without covariates (or follow a pre-specified plan)

“Agnostic” regressions may include pre-treatment covariates ONLY.
Do not include post-treatment variables as predictors in order to detect the "mediating paths" by which a treatment influences the outcome, as this method of assessing mediation relies on implausible assumptions.

When interpreting a null finding, consider the sampling distribution
A noisy estimate that is indistinguishable from zero is often called a “null” finding, but its confidence interval may encompass quite large effects.

Do not interpret the failure to find an effect as a failure.
A precisely estimated zero may have important theoretical implications and may help redirect scholarly attention toward more effective interventions.

When analyzing a cluster-randomized experiment, be sure to account for clustering when conducting estimation and inference.
When analyzing a cluster-randomized experiment, be sure to account for clustering when estimating standard errors and confidence intervals. The power of a cluster-randomized experiment is more strongly determined by the number of clusters than the number of observations per cluster. Clusters of unequal size may lead to bias in difference-of-means and regression estimation; if possible, block on cluster size.

When analyzing experiments that encounter noncompliance, do not compare subjects who receive the treatment with subjects who do not receive the treatment.
Never discard noncompliers. Start with a model that defines the estimand and shows the conditions under which it can be identified.
Analysis should be grounded in a comparison between the randomly assigned treatment group and the randomly assigned control group, which have the same expected potential outcomes. Groups formed after random assignment, such as the “treated” or “untreated,” may not have comparable potential outcomes.

Keep the estimand in mind when reporting and interpreting results.
• ATE
  • In your sample versus in some larger population
• ITT
• CACE
• ATE among “Always-Reporters”
• Treatment effects in the presence of spillovers of various kinds

Beware of interference and consider its empirical implications
Unless you specifically aim to study spillover, displacement, or contagion, design your study to minimize interference between subjects. Segregate your subjects temporally or spatially so that the assignment or treatment of one subject has no effect on another subject’s potential outcomes. If you seek to estimate spillover effects, remember that you may need to use inverse probability weights to obtain consistent estimates.

Show appropriate caution when interpreting interaction effects, or subgroup differences in average treatment effects
Adjust p-values for multiple comparisons so as to reduce the risk of attaching a substantive interpretation to what is in fact chance variation in average treatment effects. When interpreting interactions between covariates and treatments, bear in mind that you are describing how treatment effects vary across different covariate values. A factorial design is a more informative way to investigate whether changes in one variable cause changes in the influence of another factor.

Expect to perform a series of experiments.
In social science, one experiment is never enough. Experimental findings should be viewed as provisional, pending replication, and even several replications and extensions only begin to suggest the full range of conditions under which a treatment effect may vary across subjects and contexts.
Every experiment raises new questions, and every experimental design can be improved in some way.
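
Illustrative sketch: seeded random assignment and randomization inference. The advice above on implementing random assignment (use a random number seed, document the procedure for later RI purposes, never redo the draw) might look roughly like the following. This is a minimal sketch, not the procedure used in any study described here: the function names, the seed value, and the toy outcomes are all hypothetical, and it assumes complete random assignment of a fixed number of subjects to treatment.

```python
import random
from statistics import mean

def complete_random_assignment(subject_ids, n_treated, seed):
    """Assign exactly n_treated subjects to treatment, reproducibly."""
    rng = random.Random(seed)  # a recorded seed lets others re-create the draw
    treated = set(rng.sample(sorted(subject_ids), n_treated))
    return {sid: int(sid in treated) for sid in subject_ids}

def difference_in_means(outcomes, assignment):
    """Mean outcome among assigned-to-treatment minus assigned-to-control."""
    treated = [outcomes[s] for s in outcomes if assignment[s] == 1]
    control = [outcomes[s] for s in outcomes if assignment[s] == 0]
    return mean(treated) - mean(control)

def randomization_inference_p(outcomes, assignment, n_treated, n_sims=2000, seed=0):
    """Two-sided p-value under the sharp null of no effect for any subject.

    Re-runs the SAME assignment procedure many times and asks how often the
    simulated estimate is at least as large in magnitude as the observed one.
    """
    observed = abs(difference_in_means(outcomes, assignment))
    rng = random.Random(seed)
    ids = sorted(outcomes)
    extreme = 0
    for _ in range(n_sims):
        fake_treated = set(rng.sample(ids, n_treated))
        fake = {s: int(s in fake_treated) for s in ids}
        if abs(difference_in_means(outcomes, fake)) >= observed:
            extreme += 1
    return extreme / n_sims

# Hypothetical example: 20 subjects, 10 assigned to treatment.
ids = list(range(20))
assignment = complete_random_assignment(ids, n_treated=10, seed=20240101)
# Toy outcomes (invented): baseline noise plus an effect of 5 for treated subjects.
noise = random.Random(7)
outcomes = {s: noise.gauss(0, 1) + 5 * assignment[s] for s in ids}
print("estimate:", difference_in_means(outcomes, assignment))
print("RI p-value:", randomization_inference_p(outcomes, assignment, n_treated=10))
```

Because the simulated assignments reuse the procedure that generated the real one, a restricted or blocked design would need the simulation loop changed to match it.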
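
Illustrative sketch: assignment probabilities that vary across blocks. The point above about analyzing each stratum separately or applying inverse probability weights before pooling can be checked on a toy data set. The records below are invented for illustration, the function names are hypothetical, and the weighted estimator shown is one common choice (a normalized, Hajek-style weighted difference in means), offered as a sketch rather than as the method prescribed in these slides.

```python
from collections import defaultdict

def naive_difference_in_means(records):
    """Pooled comparison that ignores unequal assignment probabilities."""
    treated = [y for _b, z, y, _p in records if z == 1]
    control = [y for _b, z, y, _p in records if z == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)

def ipw_difference_in_means(records):
    """Weighted difference in means: 1/p for treated, 1/(1-p) for control."""
    num_t = den_t = num_c = den_c = 0.0
    for _block, z, y, p in records:
        if z == 1:
            w = 1.0 / p
            num_t += w * y
            den_t += w
        else:
            w = 1.0 / (1.0 - p)
            num_c += w * y
            den_c += w
    return num_t / den_t - num_c / den_c

def blocked_difference_in_means(records):
    """Estimate each block separately, then weight by the block's share of N."""
    by_block = defaultdict(lambda: {0: [], 1: []})
    for block, z, y, _p in records:
        by_block[block][z].append(y)
    n_total = len(records)
    estimate = 0.0
    for groups in by_block.values():
        n_block = len(groups[0]) + len(groups[1])
        diff = sum(groups[1]) / len(groups[1]) - sum(groups[0]) / len(groups[0])
        estimate += (n_block / n_total) * diff
    return estimate

# Invented records: (block, assigned z, outcome y, probability of assignment p).
# Block A treats half of its 4 subjects; block B treats a quarter of its 8.
records = [
    ("A", 1, 10, 0.5), ("A", 1, 12, 0.5), ("A", 0, 8, 0.5), ("A", 0, 6, 0.5),
    ("B", 1, 3, 0.25), ("B", 1, 5, 0.25),
    ("B", 0, 1, 0.25), ("B", 0, 2, 0.25), ("B", 0, 1, 0.25),
    ("B", 0, 2, 0.25), ("B", 0, 1, 0.25), ("B", 0, 2, 0.25),
]
print("naive pooled:", naive_difference_in_means(records))
print("IPW pooled:  ", ipw_difference_in_means(records))
print("by block:    ", blocked_difference_in_means(records))
```

In this toy case the weighted and block-by-block estimates coincide, while the naive pooled comparison differs because block A, which has higher outcomes, is overrepresented among the treated.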
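
Illustrative sketch: ITT and CACE under noncompliance. The advice above (never discard noncompliers, compare the randomly assigned groups, keep the estimand in mind) can be made concrete with a small invented data set. The sketch assumes one-sided noncompliance, excludability, and non-interference, under which the CACE is estimated by dividing the ITT effect on outcomes by the ITT effect on treatment receipt. All names and numbers are hypothetical.

```python
from statistics import mean

def itt(subjects):
    """Intent-to-treat effect on outcomes: compare groups AS ASSIGNED."""
    return (mean(s["y"] for s in subjects if s["z"] == 1)
            - mean(s["y"] for s in subjects if s["z"] == 0))

def itt_d(subjects):
    """Effect of assignment on treatment receipt (the compliance rate here)."""
    return (mean(s["d"] for s in subjects if s["z"] == 1)
            - mean(s["d"] for s in subjects if s["z"] == 0))

def cace(subjects):
    """Ratio estimator of the complier average causal effect."""
    return itt(subjects) / itt_d(subjects)

def as_treated_comparison(subjects):
    """The comparison the slides warn against: treated vs. untreated."""
    return (mean(s["y"] for s in subjects if s["d"] == 1)
            - mean(s["y"] for s in subjects if s["d"] == 0))

# Invented data: z = assigned, d = actually treated, y = outcome.
# Compliers score 5 untreated and 8 treated; never-takers always score 2.
subjects = (
    [{"z": 1, "d": 1, "y": 8} for _ in range(3)]    # assigned, complied
    + [{"z": 1, "d": 0, "y": 2} for _ in range(3)]  # assigned, never treated
    + [{"z": 0, "d": 0, "y": 5} for _ in range(3)]  # control, would comply
    + [{"z": 0, "d": 0, "y": 2} for _ in range(3)]  # control, never-takers
)
print("ITT:       ", itt(subjects))
print("ITT_D:     ", itt_d(subjects))
print("CACE:      ", cace(subjects))
print("as-treated:", as_treated_comparison(subjects))

# If a partner reports treating everyone assigned (ITT_D recorded as 1.0
# instead of the true 0.5), the same ITT is divided by a larger number.
print("CACE with exaggerated contact rate:", itt(subjects) / 1.0)
```

The last line illustrates the irony noted earlier: an overstated contact rate inflates the denominator and so shrinks the estimated CACE.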
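
Illustrative sketch: adjusting p-values for multiple comparisons. The slides above recommend adjusting p-values when examining subgroup differences but do not name a method. The sketch below shows two standard options, Bonferroni and Holm, chosen here purely for illustration. The p-values are invented and the function names are hypothetical.

```python
def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, m * p) for p in p_values]

def holm(p_values):
    """Holm step-down adjustment; never larger than Bonferroni."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)  # keep adjusted values monotone
        adjusted[i] = running_max
    return adjusted

# Invented p-values for treatment-effect differences across three subgroups.
raw = [0.01, 0.04, 0.03]
print("Bonferroni:", bonferroni(raw))
print("Holm:      ", holm(raw))
```

Either adjustment makes it harder to read a substantive story into the one subgroup contrast that happens to clear a conventional threshold by chance.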