Multiple Regression

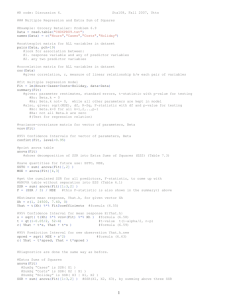


Multiple Regression - II

Extra Sum of Squares

An extra sum of squares measures the marginal reduction in the error sum of squares when one or more predictor variables are added to the regression model, given that other predictor variables are already in the model. Equivalently, one can view an extra sum of squares as measuring the marginal increase in the regression sum of squares when one or several predictor variables are added to the regression model.

Example: Body fat (Y) is to be explained by up to three predictors and their combinations: triceps skinfold thickness (X1), thigh circumference (X2) and midarm circumference (X3). Body fat is hard to measure, but the predictor variables are easy to obtain.

Case Summaries

| Case | Triceps Skinfold Thickness (X1) | Thigh Circumference (X2) | Midarm Circumference (X3) | Body Fat (Y) |
|---|---|---|---|---|
| 1 | 19.50 | 43.10 | 29.10 | 11.90 |
| 2 | 24.70 | 49.80 | 28.20 | 22.80 |
| 3 | 30.70 | 51.90 | 37.00 | 18.70 |
| 4 | 29.80 | 54.30 | 31.10 | 20.10 |
| 5 | 19.10 | 42.20 | 30.90 | 12.90 |
| 6 | 25.60 | 53.90 | 23.70 | 21.70 |
| 7 | 31.40 | 58.50 | 27.60 | 27.10 |
| 8 | 27.90 | 52.10 | 30.60 | 25.40 |
| 9 | 22.10 | 49.90 | 23.20 | 21.30 |
| 10 | 25.50 | 53.50 | 24.80 | 19.30 |
| 11 | 31.10 | 56.60 | 30.00 | 25.40 |
| 12 | 30.40 | 56.70 | 28.30 | 27.20 |
| 13 | 18.70 | 46.50 | 23.00 | 11.70 |
| 14 | 19.70 | 44.20 | 28.60 | 17.80 |
| 15 | 14.60 | 42.70 | 21.30 | 12.80 |
| 16 | 29.50 | 54.40 | 30.10 | 23.90 |
| 17 | 27.70 | 55.30 | 25.70 | 22.60 |
| 18 | 30.20 | 58.60 | 24.60 | 25.40 |
| 19 | 22.70 | 48.20 | 27.10 | 14.80 |
| 20 | 25.20 | 51.00 | 27.50 | 21.10 |
| Total N | 20 | 20 | 20 | 20 |

Model (X1): Fit and ANOVA

$$\hat{Y} = -1.496 + 0.8572\,X_1, \qquad SSR(X_1) = 352.270, \qquad SSE(X_1) = 143.120$$

ANOVA (dependent variable: Y; independent variables: (Constant), X1)

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 352.270 | 1 | 352.270 | 44.305 | .000 |
| Residual | 143.120 | 18 | 7.951 | | |
| Total | 495.389 | 19 | | | |

Model (X2): Fit and ANOVA

$$\hat{Y} = -23.634 + 0.8565\,X_2, \qquad SSR(X_2) = 381.966, \qquad SSE(X_2) = 113.424$$

ANOVA (dependent variable: Y; independent variables: (Constant), X2)

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 381.966 | 1 | 381.966 | 60.617 | .000 |
| Residual | 113.424 | 18 | 6.301 | | |
| Total | 495.389 | 19 | | | |

Model (X1, X2): Fit and ANOVA

$$\hat{Y} = -19.174 + 0.2224\,X_1 + 0.6594\,X_2, \qquad SSR(X_1, X_2) = 385.439, \qquad SSE(X_1, X_2) = 109.951$$

ANOVA (dependent variable: Y; independent variables: (Constant), X2, X1)

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 385.439 | 2 | 192.719 | 29.797 | .000 |
| Residual | 109.951 | 17 | 6.468 | | |
| Total | 495.389 | 19 | | | |

Model (X1, X2, X3): Fit and ANOVA

$$\hat{Y} = 117.08 + 4.334\,X_1 - 2.857\,X_2 - 2.186\,X_3, \qquad SSR(X_1, X_2, X_3) = 396.985, \qquad SSE(X_1, X_2, X_3) = 98.405$$

ANOVA (dependent variable: Y; independent variables: (Constant), X3, X2, X1)

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 396.985 | 3 | 132.328 | 21.516 | .000 |
| Residual | 98.405 | 16 | 6.150 | | |
| Total | 495.389 | 19 | | | |

Extra Sum of Squares

$$SSR(X_2 \mid X_1) = SSE(X_1) - SSE(X_1, X_2) = 143.12 - 109.95 = 33.17$$

$$SSR(X_2 \mid X_1) = SSR(X_1, X_2) - SSR(X_1) = 385.44 - 352.27 = 33.17$$

$$SSR(X_3 \mid X_1, X_2) = SSE(X_1, X_2) - SSE(X_1, X_2, X_3) = 109.95 - 98.41 = 11.54$$

$$SSR(X_3 \mid X_1, X_2) = SSR(X_1, X_2, X_3) - SSR(X_1, X_2) = 396.98 - 385.44 = 11.54$$

Decomposition of SSR into Extra Sums of Squares

$$SSR(X_1, X_2, X_3) = SSR(X_1) + SSR(X_2 \mid X_1) + SSR(X_3 \mid X_1, X_2)$$

What are other possible decompositions? Note that the order of the X variables is arbitrary.

ANOVA Table Containing Decomposition of SSR

| Source of Variation | SS | df | MS |
|---|---|---|---|
| Regression | $SSR(X_1, X_2, X_3)$ | 3 | $MSR(X_1, X_2, X_3)$ |
| $X_1$ | $SSR(X_1)$ | 1 | $MSR(X_1)$ |
| $X_2 \mid X_1$ | $SSR(X_2 \mid X_1)$ | 1 | $MSR(X_2 \mid X_1)$ |
| $X_3 \mid X_1, X_2$ | $SSR(X_3 \mid X_1, X_2)$ | 1 | $MSR(X_3 \mid X_1, X_2)$ |
| Error | $SSE(X_1, X_2, X_3)$ | $n - 4$ | $MSE(X_1, X_2, X_3)$ |
| Total | $SSTO$ | $n - 1$ | |

ANOVA Table with Decomposition of SSR: Body Fat Example with Three Predictor Variables

| Source of Variation | SS | df | MS |
|---|---|---|---|
| Regression | 396.98 | 3 | 132.33 |
| $X_1$ | 352.27 | 1 | 352.27 |
| $X_2 \mid X_1$ | 33.17 | 1 | 33.17 |
| $X_3 \mid X_1, X_2$ | 11.54 | 1 | 11.54 |
| Error | 98.41 | $n - 4 = 16$ | 6.15 |
| Total | 495.39 | $n - 1 = 19$ | |

Computer Packages

SAS uses the term "Type I" sum of squares for these extra sums of squares, computed in the order in which the variables enter the model.
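The extra sums of squares above can be checked directly from the case data. The following is a minimal sketch, assuming NumPy is available; the helper name `sse` is ours and is not part of the original notes. It fits each nested model by ordinary least squares and takes differences of the error sums of squares.

```python
import numpy as np

# Body fat data from the Case Summaries table (n = 20).
x1 = np.array([19.5, 24.7, 30.7, 29.8, 19.1, 25.6, 31.4, 27.9, 22.1, 25.5,
               31.1, 30.4, 18.7, 19.7, 14.6, 29.5, 27.7, 30.2, 22.7, 25.2])
x2 = np.array([43.1, 49.8, 51.9, 54.3, 42.2, 53.9, 58.5, 52.1, 49.9, 53.5,
               56.6, 56.7, 46.5, 44.2, 42.7, 54.4, 55.3, 58.6, 48.2, 51.0])
x3 = np.array([29.1, 28.2, 37.0, 31.1, 30.9, 23.7, 27.6, 30.6, 23.2, 24.8,
               30.0, 28.3, 23.0, 28.6, 21.3, 30.1, 25.7, 24.6, 27.1, 27.5])
y = np.array([11.9, 22.8, 18.7, 20.1, 12.9, 21.7, 27.1, 25.4, 21.3, 19.3,
              25.4, 27.2, 11.7, 17.8, 12.8, 23.9, 22.6, 25.4, 14.8, 21.1])


def sse(response, *predictors):
    """Error sum of squares of an OLS fit with an intercept (hypothetical helper)."""
    design = np.column_stack([np.ones_like(response), *predictors])
    beta, *_ = np.linalg.lstsq(design, response, rcond=None)
    residuals = response - design @ beta
    return float(residuals @ residuals)


ssto = float(((y - y.mean()) ** 2).sum())   # total sum of squares
sse_1 = sse(y, x1)                          # SSE(X1)
sse_12 = sse(y, x1, x2)                     # SSE(X1, X2)
sse_123 = sse(y, x1, x2, x3)                # SSE(X1, X2, X3)

ssr_1 = ssto - sse_1                        # SSR(X1)
ssr_2_given_1 = sse_1 - sse_12              # SSR(X2 | X1)
ssr_3_given_12 = sse_12 - sse_123           # SSR(X3 | X1, X2)

print(f"SSR(X1)         = {ssr_1:.3f}")
print(f"SSR(X2|X1)      = {ssr_2_given_1:.3f}")
print(f"SSR(X3|X1,X2)   = {ssr_3_given_12:.3f}")
print(f"sum of the three = {ssr_1 + ssr_2_given_1 + ssr_3_given_12:.3f}")
print(f"SSR(X1,X2,X3)   = {ssto - sse_123:.3f}")
```

Changing the order in which predictors are passed to `sse` gives the other decompositions, for example SSR(X2) + SSR(X1 | X2) + SSR(X3 | X1, X2).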
Example in SAS Using Body Fat Data

The GLM Procedure. Dependent Variable: y

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 3 | 396.9846118 | 132.3282039 | 21.52 | <.0001 |
| Error | 16 | 98.4048882 | 6.1503055 | | |
| Corrected Total | 19 | 495.3895000 | | | |

| R-Square | Coeff Var | Root MSE | y Mean |
|---|---|---|---|
| 0.801359 | 12.28017 | 2.479981 | 20.19500 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| x1 | 1 | 352.2697968 | 352.2697968 | 57.28 | <.0001 |
| x2 | 1 | 33.1689128 | 33.1689128 | 5.39 | 0.0337 |
| x3 | 1 | 11.5459022 | 11.5459022 | 1.88 | 0.1896 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| x1 | 1 | 12.70489278 | 12.70489278 | 2.07 | 0.1699 |
| x2 | 1 | 7.52927788 | 7.52927788 | 1.22 | 0.2849 |
| x3 | 1 | 11.54590217 | 11.54590217 | 1.88 | 0.1896 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | 117.0846948 | 99.78240295 | 1.17 | 0.2578 |
| x1 | 4.3340920 | 3.01551136 | 1.44 | 0.1699 |
| x2 | -2.8568479 | 2.58201527 | -1.11 | 0.2849 |
| x3 | -2.1860603 | 1.59549900 | -1.37 | 0.1896 |

For more details on the decomposition of the SSR into extra sums of squares, please see the Schematic Representation in Figure 7.1 on page 261.

Mean Squares

$$MSR(X_2 \mid X_1) = \frac{SSR(X_2 \mid X_1)}{1}$$

Note that each extra sum of squares involving a single extra X variable has one degree of freedom associated with it.

Extra Sum of Squares from Several Variables

$$SSR(X_2, X_3 \mid X_1) = SSE(X_1) - SSE(X_1, X_2, X_3) = SSR(X_2 \mid X_1) + SSR(X_3 \mid X_1, X_2)$$

$$MSR(X_2, X_3 \mid X_1) = \frac{SSR(X_2, X_3 \mid X_1)}{2}$$

$$SSR(X_2, X_3 \mid X_1) = SSE(X_1) - SSE(X_1, X_2, X_3) = 143.12 - 98.41 = 44.71$$

$$SSR(X_2, X_3 \mid X_1) = SSR(X_1, X_2, X_3) - SSR(X_1) = 396.98 - 352.27 = 44.71$$

Extra sums of squares involving two extra X variables, such as SSR(X2, X3 | X1), have two degrees of freedom associated with them. This follows because we can express such an extra sum of squares as a sum of two extra sums of squares, each associated with one degree of freedom.

Uses of Extra Sums of Squares in Tests for Regression Coefficients

Test Whether a Single Beta Coefficient Is Zero (two tests are available).
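(1) t-test (6.51b), discussed in Chapter 6.

(2) General linear test approach.

Full model:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \beta_3 X_{i3} + \varepsilon_i, \qquad SSE(F) = SSE(X_1, X_2, X_3), \qquad df_F = n - 4$$

Hypotheses:

$$H_0: \beta_3 = 0 \qquad H_a: \beta_3 \neq 0$$

Reduced model when $H_0$ holds:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \varepsilon_i, \qquad SSE(R) = SSE(X_1, X_2), \qquad df_R = n - 3$$

General form of the test statistic:

$$F^* = \frac{SSE(R) - SSE(F)}{df_R - df_F} \div \frac{SSE(F)}{df_F}$$

Form of the test statistic for testing whether a single beta coefficient equals zero:

$$F^* = \frac{SSE(X_1, X_2) - SSE(X_1, X_2, X_3)}{(n - 3) - (n - 4)} \div \frac{SSE(X_1, X_2, X_3)}{n - 4} = \frac{SSR(X_3 \mid X_1, X_2)}{1} \div \frac{SSE(X_1, X_2, X_3)}{n - 4} = \frac{MSR(X_3 \mid X_1, X_2)}{MSE(X_1, X_2, X_3)}$$

We don't need to fit both the full model and the reduced model. Fitting only the full model in SAS provides both MSR(X3 | X1, X2) and MSE(X1, X2, X3); see the SAS output, where the Type I and Type III sums of squares for the last-entered variable x3 coincide.

Notes:

(1) Here the t-test and the F-test are equivalent tests.
(2) The F test of whether or not $\beta_3 = 0$ is called a partial F test.
(3) The F test of whether or not all $\beta_k = 0$ is called the overall F test.

Test Whether Several Beta Coefficients Are Zero (only one test is available).

General linear test approach.

Full model:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \beta_3 X_{i3} + \varepsilon_i, \qquad SSE(F) = SSE(X_1, X_2, X_3), \qquad df_F = n - 4$$

Hypotheses:

$$H_0: \beta_2 = \beta_3 = 0 \qquad H_a: \text{at least one of } \beta_2, \beta_3 \text{ is not zero}$$

Reduced model when $H_0$ holds:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \varepsilon_i, \qquad SSE(R) = SSE(X_1), \qquad df_R = n - 2$$

Form of the test statistic for testing whether several beta coefficients equal zero:

$$F^* = \frac{SSE(X_1) - SSE(X_1, X_2, X_3)}{(n - 2) - (n - 4)} \div \frac{SSE(X_1, X_2, X_3)}{n - 4} = \frac{SSR(X_2, X_3 \mid X_1)}{2} \div \frac{SSE(X_1, X_2, X_3)}{n - 4} = \frac{MSR(X_2, X_3 \mid X_1)}{MSE(X_1, X_2, X_3)}$$

Example: Body Fat

$$SSR(X_2, X_3 \mid X_1) = SSE(X_1) - SSE(X_1, X_2, X_3) = 143.12 - 98.41 = 44.71$$

$$F^* = \frac{SSR(X_2, X_3 \mid X_1)}{2} \div \frac{SSE(X_1, X_2, X_3)}{n - 4} = \frac{44.71}{2} \div \frac{98.41}{16} = 3.63$$

With $\alpha = .05$, the critical value is $F(.95; 2, 16) = 3.63$. The decision rule concludes $H_a$ when $F^* > F(.95; 2, 16)$. Here $F^*$ sits on the boundary of the decision rule, so the notes recommend taking no action on the basis of this test alone.

Other Tests

When the extra sum of squares cannot be used, both the full model and the reduced model have to be fitted.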
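All the tests in this section, including those where the reduced model has to be fitted separately, share the same general linear test statistic. The following is a minimal sketch in plain Python that evaluates it from the SSE values reported in the ANOVA tables of these notes; the function name `general_linear_test` is ours, not from the source.

```python
def general_linear_test(sse_reduced, df_reduced, sse_full, df_full):
    """F* = [(SSE(R) - SSE(F)) / (df_R - df_F)] / [SSE(F) / df_F]."""
    numerator = (sse_reduced - sse_full) / (df_reduced - df_full)
    denominator = sse_full / df_full
    return numerator / denominator


n = 20                  # number of cases in the body fat data
sse_x1 = 143.120        # SSE(X1), from the Model (X1) ANOVA table
sse_x1_x2 = 109.951     # SSE(X1, X2)
sse_full = 98.405       # SSE(X1, X2, X3)

# Partial F test of H0: beta3 = 0 (reduced model keeps X1 and X2).
f_beta3 = general_linear_test(sse_x1_x2, n - 3, sse_full, n - 4)

# Test of H0: beta2 = beta3 = 0 (reduced model keeps X1 only).
f_beta2_beta3 = general_linear_test(sse_x1, n - 2, sse_full, n - 4)

print(f"F* for beta3 = 0:          {f_beta3:.3f}")
print(f"F* for beta2 = beta3 = 0:  {f_beta2_beta3:.3f}")
```

The first statistic should agree with the F value SAS reports for x3, and the second should land close to the 3.63 computed by hand; small differences come from rounding of the inputs. For a hypothesis such as equality of two coefficients, the same function applies once SSE(R) has been obtained by fitting the reduced model.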
Example (a test that requires fitting the reduced model):

Full model:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \beta_3 X_{i3} + \varepsilon_i, \qquad df_F = n - 4$$

Hypotheses:

$$H_0: \beta_1 = \beta_2 \qquad H_a: \beta_1 \neq \beta_2$$

Reduced model when $H_0$ holds, with $\beta_c$ the common coefficient:

$$Y_i = \beta_0 + \beta_c (X_{i1} + X_{i2}) + \beta_3 X_{i3} + \varepsilon_i, \qquad df_R = n - 3$$

General test statistic:

$$F^* = \frac{SSE(R) - SSE(F)}{df_R - df_F} \div \frac{SSE(F)}{df_F}, \qquad df_R - df_F = (n - 3) - (n - 4) = 1$$

Coefficients of Partial Determination

These are descriptive measures of relationships that use extra sums of squares. The notes describe them as useful in describing causal relationships.

Two Predictor Variables

The coefficient of multiple determination measures the proportionate reduction in the variation of Y achieved by the introduction of the entire set of X variables. The coefficient of partial determination uses Y and X1, both "adjusted for X2", and measures the proportionate reduction in the variation of the "adjusted Y" achieved by including the "adjusted X1" (see Comment 2 on page 270).

$$r^2_{Y1.2} = \frac{SSE(X_2) - SSE(X_1, X_2)}{SSE(X_2)} = \frac{SSR(X_1 \mid X_2)}{SSE(X_2)}$$

General Case

$$r^2_{Y1.23} = \frac{SSR(X_1 \mid X_2, X_3)}{SSE(X_2, X_3)} \qquad r^2_{Y2.13} = \frac{SSR(X_2 \mid X_1, X_3)}{SSE(X_1, X_3)}$$

$$r^2_{Y3.12} = \frac{SSR(X_3 \mid X_1, X_2)}{SSE(X_1, X_2)} \qquad r^2_{Y4.123} = \frac{SSR(X_4 \mid X_1, X_2, X_3)}{SSE(X_1, X_2, X_3)}$$

Example

$$r^2_{Y2.1} = \frac{SSR(X_2 \mid X_1)}{SSE(X_1)} = \frac{33.17}{143.12} = .232$$

$$r^2_{Y3.12} = \frac{SSR(X_3 \mid X_1, X_2)}{SSE(X_1, X_2)} = \frac{11.54}{109.95} = .105$$

$$r^2_{Y1.2} = \frac{SSR(X_1 \mid X_2)}{SSE(X_2)} = \frac{3.47}{113.42} = .031$$

When X2 is added to the model containing X1, SSE is reduced by 23.2%. When X3 is added to the model containing X1 and X2, SSE is reduced by 10.5%. When X1 is added to the model containing X2, SSE is reduced by only 3.1%.

Multicollinearity and Its Effects

Some questions frequently asked are:

1. What is the relative importance of the effects of the different predictor variables?
2. What is the magnitude of the effect of a given predictor variable on the response variable?
3. Can any predictor variable be dropped from the model because it has little or no effect on the response variable?
4. Should any predictor variable not yet included in the model be considered for possible inclusion?
If the predictor variables included in the model are uncorrelated among themselves and uncorrelated with any other predictor variables that are related to the response variable but are omitted from the model, then relatively simple answers can be given. Unfortunately, in many nonexperimental situations in business, economics, and the social and biological sciences, the predictor variables are correlated.

For example: family food expenditures (Y). Correlated predictors in the model: family income (X1), family savings (X2), age of head of household (X3). Correlated with predictors outside the model: family size (X4).

When the predictor variables are correlated among themselves, intercorrelation or multicollinearity among them is said to exist.

Example of Perfectly Uncorrelated Predictor Variables (Table 7.6)

X1 and X2 are uncorrelated. Models:

$$(1)\quad \hat{Y} = 0.375 + 5.375\,X_1 + 9.250\,X_2$$

$$(2)\quad \hat{Y} = 23.500 + 5.375\,X_1$$

$$(3)\quad \hat{Y} = 27.250 + 9.250\,X_2$$

| Model | Source of Variation | SS | df | MS |
|---|---|---|---|---|
| (1) | Regression | 402.250 | 2 | 201.125 |
| (1) | Error | 17.625 | 5 | 3.525 |
| (1) | Total | 419.875 | 7 | |
| (2) | Regression | 231.125 | 1 | 231.125 |
| (2) | Error | 188.75 | 6 | 31.458 |
| (2) | Total | 419.875 | 7 | |
| (3) | Regression | 171.125 | 1 | 171.125 |
| (3) | Error | 248.75 | 6 | 41.458 |
| (3) | Total | 419.875 | 7 | |

The regression coefficient for X1 is the same in models (1) and (2). The same holds for the regression coefficient for X2 in models (1) and (3). Consequently,

$$SSR(X_1 \mid X_2) = SSR(X_1), \qquad SSR(X_2 \mid X_1) = SSR(X_2)$$

This is one reason to conduct controlled experiments: the levels of the predictor variables can be chosen to ensure they are uncorrelated.

Example of Perfectly Correlated Predictor Variables

| Case i | Xi1 | Xi2 | Y | Pred-Y (Model 1) | Pred-Y (Model 2) |
|---|---|---|---|---|---|
| 1 | 2 | 6 | 23 | 23 | 23 |
| 2 | 8 | 9 | 83 | 83 | 83 |
| 3 | 6 | 8 | 63 | 63 | 63 |
| 4 | 10 | 10 | 103 | 103 | 103 |

Models:

$$(1)\quad \hat{Y} = -87 + X_1 + 18\,X_2$$

$$(2)\quad \hat{Y} = -7 + 9\,X_1 + 2\,X_2$$

Perfect relation between the predictors:

$$X_2 = 5 + 0.5\,X_1$$

Two Key Implications

1. The perfect relation between X1 and X2 does not inhibit our ability to obtain a good fit to the data.
2. Since many different response functions provide the same good fit, we cannot interpret any one set of regression coefficients as reflecting the effects of the different predictor variables.

Effects of Multicollinearity

We seldom find variables that are perfectly correlated. However, the implications just noted in our idealized example still have relevance.

1. The fact that some or all predictor variables are correlated among themselves does not, in general, inhibit our ability to obtain a good fit.
2. The counterpart in real life to many different regression functions providing equally good fits to the data in our idealized example is that the estimated regression coefficients tend to have large sampling variability when the predictor variables are highly correlated.
3. The common interpretation of a regression coefficient as measuring the change in the expected value of the response variable when the given predictor variable is increased by one unit while all the other predictors are held constant is not fully applicable when multicollinearity exists.

Example: Body Fat

Correlations: Pearson correlation with two-tailed significance in parentheses; N = 20 for every pair.

| | Triceps Skinfold Thickness | Thigh Circumference | Midarm Circumference |
|---|---|---|---|
| Triceps Skinfold Thickness | 1.000 | .924 (.000) | .458 (.042) |
| Thigh Circumference | .924 (.000) | 1.000 | .085 (.723) |
| Midarm Circumference | .458 (.042) | .085 (.723) | 1.000 |

Effects on Regression Coefficients

1. Estimates of the coefficients change a lot as each variable is entered in the model.
2. In Model (3), although the F test is significant, none of the t-tests for the individual coefficients is significant.

Coefficients
| Model | Term | B | Std. Error | Standardized Beta | t | Sig. |
|---|---|---|---|---|---|---|
| 1 | (Constant) | -1.496 | 3.319 | | -.451 | .658 |
| 1 | Triceps Skinfold Thickness | .857 | .129 | .843 | 6.656 | .000 |
| 2 | (Constant) | -19.174 | 8.361 | | -2.293 | .035 |
| 2 | Triceps Skinfold Thickness | .222 | .303 | .219 | .733 | .474 |
| 2 | Thigh Circumference | .659 | .291 | .676 | 2.265 | .037 |
| 3 | (Constant) | 117.085 | 99.782 | | 1.173 | .258 |
| 3 | Triceps Skinfold Thickness | 4.334 | 3.016 | 4.264 | 1.437 | .170 |
| 3 | Thigh Circumference | -2.857 | 2.582 | -2.929 | -1.106 | .285 |
| 3 | Midarm Circumference | -2.186 | 1.595 | -1.561 | -1.370 | .190 |

Dependent variable: Body Fat. B and Std. Error are the unstandardized coefficients.

3. In Model (3) the variances of the coefficients are inflated.
4. The standard error of estimate is not substantially improved as more variables are entered in the model. Thus fitted values and predictions are neither more nor less precise.

Model Summary

| Model | Variable Entered | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|---|
| 1 | Triceps Skinfold Thickness | .843 | .711 | .695 | 2.8198 |
| 2 | Thigh Circumference | .882 | .778 | .752 | 2.5432 |
| 3 | Midarm Circumference | .895 | .801 | .764 | 2.4800 |

Dependent variable: Body Fat. Method: Enter; all requested variables entered. Model 1 contains (Constant) and Triceps Skinfold Thickness; Model 2 adds Thigh Circumference; Model 3 adds Midarm Circumference.

Theoretical reason for inflated variance: as the correlation between the predictors increases to one, the variance increases to infinity.

1. The primed variables Y', X1', X2' are obtained by the "correlation transformation."
2. The X'X matrix of the primed variables is the correlation matrix $\mathbf{r}_{XX}$.
3. As $r_{12}^2$ approaches 1, the variances grow without bound.
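Original model:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \varepsilon_i$$

Correlation transformation:

$$Y_i' = \frac{1}{\sqrt{n - 1}} \left( \frac{Y_i - \bar{Y}}{s_Y} \right), \qquad X_{ik}' = \frac{1}{\sqrt{n - 1}} \left( \frac{X_{ik} - \bar{X}_k}{s_k} \right)$$

Transformed model:

$$Y_i' = \beta_1' X_{i1}' + \beta_2' X_{i2}' + \varepsilon_i'$$

Correlation matrix (X'X computed from the transformed variables):

$$\mathbf{X}'\mathbf{X} = \mathbf{r}_{XX} = \begin{bmatrix} 1 & r_{12} \\ r_{12} & 1 \end{bmatrix}, \qquad (\mathbf{X}'\mathbf{X})^{-1} = \mathbf{r}_{XX}^{-1} = \frac{1}{1 - r_{12}^2} \begin{bmatrix} 1 & -r_{12} \\ -r_{12} & 1 \end{bmatrix}$$

Variance-covariance matrix of the transformed coefficients:

$$\boldsymbol{\sigma}^2\{\mathbf{b}\} = (\sigma')^2\, \mathbf{r}_{XX}^{-1} = \frac{(\sigma')^2}{1 - r_{12}^2} \begin{bmatrix} 1 & -r_{12} \\ -r_{12} & 1 \end{bmatrix}$$

$$\sigma^2\{b_1'\} = \sigma^2\{b_2'\} = \frac{(\sigma')^2}{1 - r_{12}^2}$$

For more details, please read pages 272-278 of ALSM.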
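To see how quickly the factor $1/(1 - r_{12}^2)$ grows, the following minimal sketch inverts the 2 × 2 correlation matrix for a few values of $r_{12}$, assuming NumPy is available. The function name `variance_inflation` is ours; the value .924 is the correlation between triceps skinfold thickness and thigh circumference reported in the body fat example, and the other values are arbitrary illustrations.

```python
import numpy as np


def variance_inflation(r12):
    """Diagonal element of the inverse 2x2 correlation matrix, i.e. 1 / (1 - r12**2)."""
    r_xx = np.array([[1.0, r12],
                     [r12, 1.0]])
    return float(np.linalg.inv(r_xx)[0, 0])


# Multiplier applied to (sigma')^2 in the variance of each transformed coefficient.
for r12 in (0.0, 0.5, 0.924, 0.99, 0.999):
    print(f"r12 = {r12:5.3f}  ->  1 / (1 - r12^2) = {variance_inflation(r12):8.2f}")
```

With uncorrelated predictors the multiplier is 1; it increases without bound as $r_{12}$ approaches 1, which is the variance inflation described above for the two-predictor case.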