Where do the linear regression equations come from?


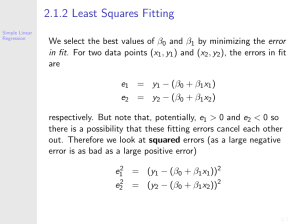

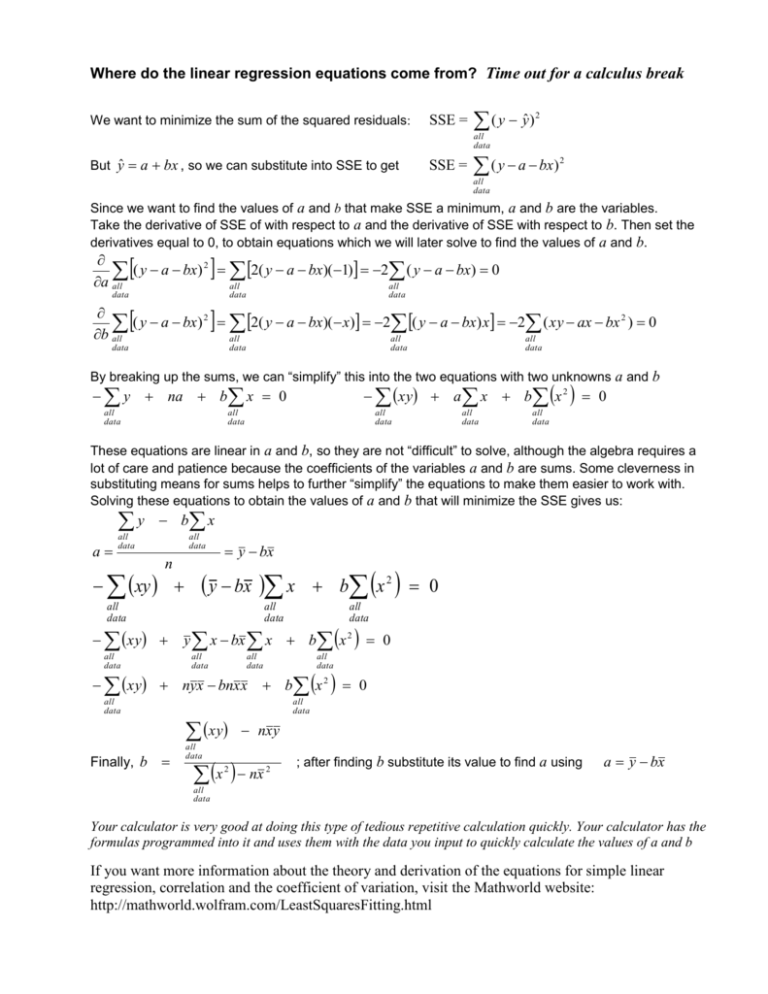

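Time out for a calculus break. We want to minimize the sum of the squared residuals:

$$\text{SSE} = \sum_{\text{all data}} (y - \hat{y})^2$$

But $\hat{y} = a + bx$, so we can substitute into SSE to get

$$\text{SSE} = \sum_{\text{all data}} (y - a - bx)^2$$

Since we want to find the values of $a$ and $b$ that make SSE a minimum, $a$ and $b$ are the variables. Take the partial derivative of SSE with respect to $a$ and the partial derivative of SSE with respect to $b$. Then set each derivative equal to 0 to obtain equations that we will later solve to find the values of $a$ and $b$:

$$\frac{\partial}{\partial a}\sum_{\text{all data}} (y - a - bx)^2 = \sum_{\text{all data}} 2(y - a - bx)(-1) = -2\sum_{\text{all data}} (y - a - bx) = 0$$

$$\frac{\partial}{\partial b}\sum_{\text{all data}} (y - a - bx)^2 = \sum_{\text{all data}} 2(y - a - bx)(-x) = -2\sum_{\text{all data}} (y - a - bx)\,x = -2\sum_{\text{all data}} (xy - ax - bx^2) = 0$$

By dividing by $-2$ and breaking up the sums, we can "simplify" these into two equations in the two unknowns $a$ and $b$ (here $n$ is the number of data points, so adding $a$ once per point gives $na$):

$$\sum_{\text{all data}} xy - a\sum_{\text{all data}} x - b\sum_{\text{all data}} x^2 = 0$$

$$\sum_{\text{all data}} y - na - b\sum_{\text{all data}} x = 0$$

These equations are linear in $a$ and $b$, so they are not "difficult" to solve, although the algebra requires a lot of care and patience because the coefficients of the variables $a$ and $b$ are sums. Some cleverness in substituting means for sums (using $\sum x = n\bar{x}$ and $\sum y = n\bar{y}$) helps to further "simplify" the equations and make them easier to work with. Solving these equations for the values of $a$ and $b$ that minimize SSE goes as follows. From the second equation,

$$a = \frac{\sum_{\text{all data}} y - b\sum_{\text{all data}} x}{n} = \bar{y} - b\bar{x}$$

Substituting this expression for $a$ into the first equation gives

$$\sum_{\text{all data}} xy - (\bar{y} - b\bar{x})\sum_{\text{all data}} x - b\sum_{\text{all data}} x^2 = 0$$

$$\sum_{\text{all data}} xy - \bar{y}\sum_{\text{all data}} x + b\bar{x}\sum_{\text{all data}} x - b\sum_{\text{all data}} x^2 = 0$$

$$\sum_{\text{all data}} xy - n\bar{x}\bar{y} + bn\bar{x}^2 - b\sum_{\text{all data}} x^2 = 0$$

Finally, solving for $b$:

$$b = \frac{\sum_{\text{all data}} xy - n\bar{x}\bar{y}}{\sum_{\text{all data}} x^2 - n\bar{x}^2}$$

After finding $b$, substitute its value to find $a$ using $a = \bar{y} - b\bar{x}$.

Your calculator is very good at doing this type of tedious, repetitive calculation quickly.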
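The same arithmetic can be sketched in a few lines of Python. Assume the function names `least_squares_line` and `sse` and the five-point data set are all hypothetical, chosen only for illustration. The function applies the formulas for $b$ and $a$ derived above. The script then checks that both equations obtained by setting the derivatives to 0 are satisfied, and that nudging $a$ or $b$ away from those values only increases SSE.

```python
def least_squares_line(xs, ys):
    """Return (a, b) for y-hat = a + b*x, using
    b = (sum(xy) - n*xbar*ybar) / (sum(x^2) - n*xbar^2) and a = ybar - b*xbar."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 points")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    denom = sum_x2 - n * x_bar ** 2
    if denom == 0:
        raise ValueError("all x values are equal, so the slope is undefined")
    b = (sum_xy - n * x_bar * y_bar) / denom
    a = y_bar - b * x_bar
    return a, b


def sse(xs, ys, a, b):
    """Sum of squared residuals for the line y-hat = a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical example data
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
a, b = least_squares_line(xs, ys)
n = len(xs)

# The two equations from setting the derivatives to 0 should both be ~0
eq_from_a = sum(ys) - n * a - b * sum(xs)
eq_from_b = sum(x * y for x, y in zip(xs, ys)) - a * sum(xs) - b * sum(x * x for x in xs)

print(f"a = {a:.4f}, b = {b:.4f}")  # a = 2.2000, b = 0.6000
print(f"equation residuals: {eq_from_a:.2e}, {eq_from_b:.2e}")

# Moving a or b away from the least-squares values should only increase SSE
best = sse(xs, ys, a, b)
for da, db in [(0.1, 0), (-0.1, 0), (0, 0.05), (0, -0.05)]:
    assert sse(xs, ys, a + da, b + db) > best
```

For this data, $\bar{x} = 3$, $\bar{y} = 4$, $\sum xy = 66$ and $\sum x^2 = 55$. That gives $b = (66 - 60)/(55 - 45) = 0.6$ and $a = 4 - 0.6 \cdot 3 = 2.2$.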
Your calculator has the formulas programmed into it and uses them with the data you input to calculate the values of $a$ and $b$ quickly. If you want more information about the theory and derivation of the equations for simple linear regression, correlation, and the coefficient of determination, visit the MathWorld website: http://mathworld.wolfram.com/LeastSquaresFitting.html