Statistics Tutorial

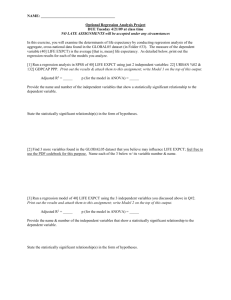

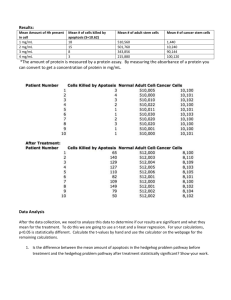

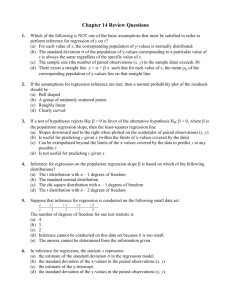

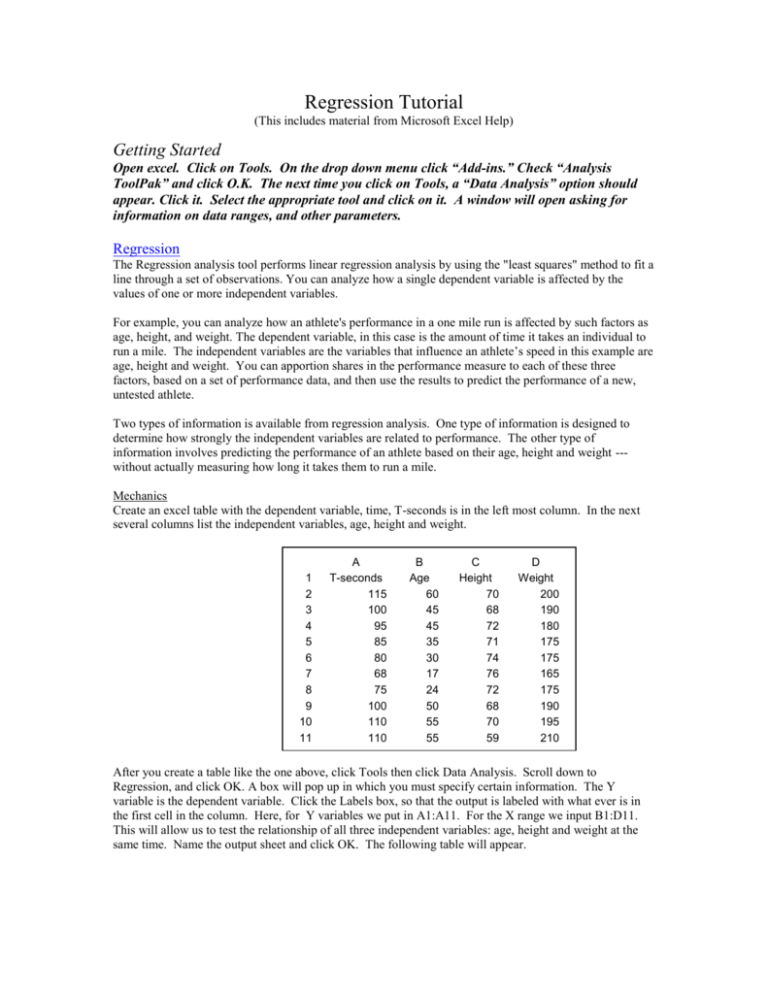

Regression Tutorial

(This includes material from Microsoft Excel Help.)

Getting Started

Open Excel. Click Tools. On the drop-down menu, click “Add-ins.” Check “Analysis ToolPak” and click OK. The next time you click Tools, a “Data Analysis” option should appear. Click it, then select the appropriate tool. A window will open asking for data ranges and other parameters.

Regression

The Regression analysis tool performs linear regression analysis by using the "least squares" method to fit a line through a set of observations. You can analyze how a single dependent variable is affected by the values of one or more independent variables. For example, you can analyze how an athlete's performance in a one-mile run is affected by such factors as age, height, and weight. The dependent variable in this case is the amount of time it takes an individual to run a mile. The independent variables, the factors that influence an athlete’s speed, are age, height and weight. You can apportion shares in the performance measure to each of these three factors, based on a set of performance data, and then use the results to predict the performance of a new, untested athlete.

Two types of information are available from regression analysis. The first shows how strongly the independent variables are related to performance. The second predicts the performance of an athlete from their age, height and weight, without actually measuring how long it takes them to run a mile.

Mechanics

Create an Excel table with the dependent variable, time (T-seconds), in the leftmost column. In the next several columns, list the independent variables: age, height and weight.

|    | A         | B   | C      | D      |
|----|-----------|-----|--------|--------|
| 1  | T-seconds | Age | Height | Weight |
| 2  | 115       | 60  | 70     | 200    |
| 3  | 100       | 45  | 68     | 190    |
| 4  | 95        | 45  | 72     | 180    |
| 5  | 85        | 35  | 71     | 175    |
| 6  | 80        | 30  | 74     | 175    |
| 7  | 68        | 17  | 76     | 165    |
| 8  | 75        | 24  | 72     | 175    |
| 9  | 100       | 50  | 68     | 190    |
| 10 | 110       | 55  | 70     | 195    |
| 11 | 110       | 55  | 59     | 210    |

After you create a table like the one above, click Tools, then click Data Analysis. Scroll down to Regression and click OK. A box will pop up in which you must specify certain information. The Y variable is the dependent variable, so for the Y range we enter A1:A11. For the X range we enter B1:D11. This lets us test the relationship of all three independent variables (age, height and weight) at the same time. Check the Labels box so that the output is labeled with whatever is in the first cell of each column. Name the output sheet and click OK. The following table will appear.

SUMMARY OUTPUT

| Regression Statistics |       |
|-----------------------|-------|
| Multiple R            | 1.00  |
| R Square              | 0.99  |
| Adjusted R Square     | 0.99  |
| Standard Error        | 1.51  |
| Observations          | 10.00 |

ANOVA

|            | df   | SS      | MS     | F      | Significance F |
|------------|------|---------|--------|--------|----------------|
| Regression | 3.00 | 2325.98 | 775.33 | 341.50 | 0.00           |
| Residual   | 6.00 | 13.62   | 2.27   |        |                |
| Total      | 9.00 | 2339.60 |        |        |                |

|           | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|-----------|--------------|----------------|--------|---------|-----------|-----------|
| Intercept | -51.14       | 42.20          | -1.21  | 0.27    | -154.40   | 52.12     |
| Age       | 0.84         | 0.10           | 8.33   | 0.00    | 0.59      | 1.08      |
| Height    | 0.46         | 0.26           | 1.73   | 0.13    | -0.19     | 1.10      |
| Weight    | 0.42         | 0.16           | 2.71   | 0.04    | 0.04      | 0.80      |

t-statistic

The t-statistic is a measure of how strongly a particular independent variable explains variations in the dependent variable. The larger the t-statistic (in absolute value), the better the independent variable’s explanatory power. Next to each t-stat is a P-value, which is used to interpret the t-stat. In short, the P-value is the probability of seeing a t-stat at least this large if the independent variable in question actually had nothing to do with the dependent variable. Generally, we look for a P-value of less than .05, which means a result this strong would turn up by chance less than 5% of the time if the independent variable were unrelated to the dependent variable.
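
For readers who want to see where the Coefficients, Standard Error and t Stat columns of the Summary Output come from, the following is a minimal sketch of a least-squares fit in plain Python, not a description of how Excel computes it internally. The function names `invert` and `fit_ols` are hypothetical helpers written for this illustration, and the data is copied from the worksheet above. It does not compute P-values, since those need the t distribution, which the Python standard library does not provide.

```python
# Worksheet data from the tutorial (rows 2-11 of columns A-D).
t_seconds = [115, 100, 95, 85, 80, 68, 75, 100, 110, 110]
age = [60, 45, 45, 35, 30, 17, 24, 50, 55, 55]
height = [70, 68, 72, 71, 74, 76, 72, 68, 70, 59]
weight = [200, 190, 180, 175, 175, 165, 175, 190, 195, 210]


def invert(matrix):
    """Invert a small square matrix (Gauss-Jordan with partial pivoting)."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I] becomes [I | A^-1].
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_ols(y, *xs):
    """Least-squares fit of y on the columns xs, with an intercept."""
    n, k = len(y), len(xs)
    p = k + 1  # number of coefficients, including the intercept
    rows = [[1.0] + [x[i] for x in xs] for i in range(n)]  # design matrix
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(p)] for a in range(p)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(p)]
    xtx_inv = invert(xtx)
    # Normal equations: coefficients = (X'X)^-1 X'y
    coefs = [sum(xtx_inv[a][b] * xty[b] for b in range(p)) for a in range(p)]
    fitted = [sum(c * v for c, v in zip(coefs, r)) for r in rows]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    mean_y = sum(y) / n
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    ss_resid = sum(e * e for e in residuals)
    df_resid = n - p
    ms_resid = ss_resid / df_resid
    std_errors = [(ms_resid * xtx_inv[a][a]) ** 0.5 for a in range(p)]
    r_square = 1 - ss_resid / ss_total
    return {
        "coefficients": coefs,
        "std_errors": std_errors,
        "t_stats": [c / s for c, s in zip(coefs, std_errors)],
        "residuals": residuals,
        "ss_total": ss_total,
        "ss_resid": ss_resid,
        "r_square": r_square,
        "adjusted_r_square": 1 - (1 - r_square) * (n - 1) / df_resid,
        "f_stat": ((ss_total - ss_resid) / k) / ms_resid,
    }


result = fit_ols(t_seconds, age, height, weight)
labels = ["Intercept", "Age", "Height", "Weight"]
for name, c, se, t in zip(labels, result["coefficients"],
                          result["std_errors"], result["t_stats"]):
    print(f"{name:<10}{c:10.2f}{se:10.2f}{t:10.2f}")
print(f"R Square {result['r_square']:.2f}, F {result['f_stat']:.2f}")
```

If the worksheet data is exactly as shown, the printed rows should land close to the corresponding columns of the Summary Output. The normal-equations approach is chosen here only because it is short; numerical libraries typically use more stable methods.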

If the P-value is higher than .10, a strong argument can be made for eliminating this particular independent variable from the model because it “isn’t statistically significant.” In the above model we can see that Age and Weight are statistically significant, but Height isn’t.

F-statistic

F is similar to the t-stat, but F looks at the quality of the entire model, meaning with all independent variables included. The larger the F, the better. By eliminating independent variables with a low t-stat, you can improve the quality of the overall model, as seen by an increasing F. The term “Significance F” serves roughly the same function as the P-value. If it is less than .10, we say the model is statistically significant.

R Square and Adjusted R Square

R Square is another measure of the explanatory power of the model. It is the share of the variation in the dependent variable, measured around its average, that the model explains. In the output above, that is the Regression SS divided by the Total SS: 2325.98 / 2339.60 = 0.99. The higher the R Square, the better. An R Square above .5 is generally considered quite good, but may not be attainable for every model.

Statisticians have noticed that as more independent variables are added to a model, R Square rises even when the added variables explain nothing real. Adjusted R Square is a modified version of R Square with the same meaning, but it includes a correction that prevents a large number of independent variables from artificially driving up the measure of explanatory power. An Adjusted R Square above .20 is generally considered quite good. Some published research has an Adjusted R Square as low as .07.

Using the Model to Predict Information

Assume, for the moment, that all variables in our example (Age, Height and Weight) are significant. We would then be able to predict the performance of an athlete we did not test if we knew their Age, Height and Weight. The model uses the Coefficients from the Summary Output above.

Time-Seconds = .84 x Age + .46 x Height + .42 x Weight - 51.1

So if we had an untested athlete 40 years old, 5’10” tall (70 inches), with a weight of 190 pounds, we could predict their time to run the mile as follows:

= .84 x 40 + .46 x 70 + .42 x 190 - 51.1
= 33.6 + 32.2 + 79.8 - 51.1
= 94.5 seconds
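
The same prediction can be written as a small function, which makes it easy to try other athletes. This is a minimal sketch: the names `predict_mile_time` and `feet_inches_to_inches` are hypothetical, the coefficients are the rounded values from the Summary Output (using -51.14 for the intercept), and height is assumed to be in inches, so 5’10” is taken as 12 x 5 + 10 = 70 inches.

```python
# Rounded coefficients copied from the Summary Output table.
COEFFICIENTS = {"intercept": -51.14, "age": 0.84, "height": 0.46, "weight": 0.42}


def feet_inches_to_inches(feet, inches):
    """Convert a height given in feet and inches to inches."""
    return 12 * feet + inches


def predict_mile_time(age_years, height_inches, weight_pounds, coef=COEFFICIENTS):
    """Predicted time in seconds from the fitted linear model."""
    return (coef["intercept"]
            + coef["age"] * age_years
            + coef["height"] * height_inches
            + coef["weight"] * weight_pounds)


# The untested athlete from the text: 40 years old, 5'10", 190 pounds.
height_in = feet_inches_to_inches(5, 10)  # 70 inches
predicted = predict_mile_time(40, height_in, 190)
print(f"Predicted time: {predicted:.1f} seconds")  # about 94.5 seconds
```

Because the coefficients are rounded to two decimals, the result can differ slightly from what Excel would give with its full-precision values.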